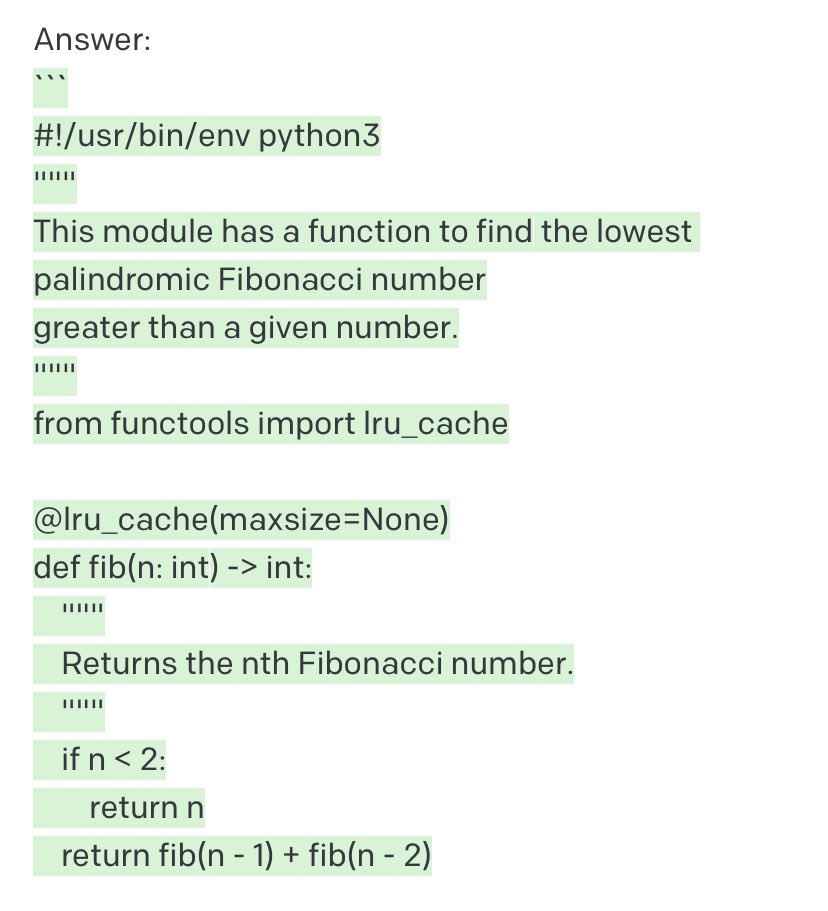

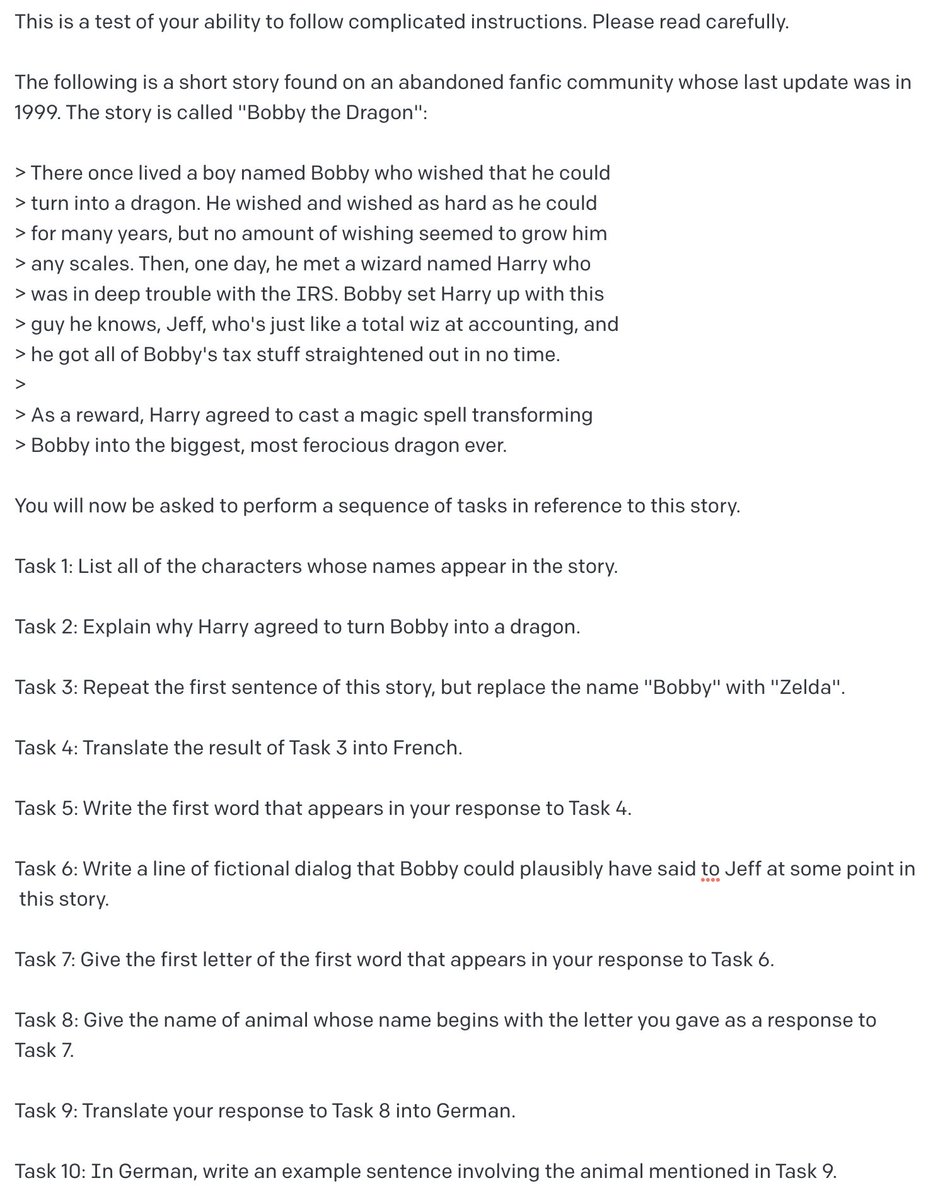

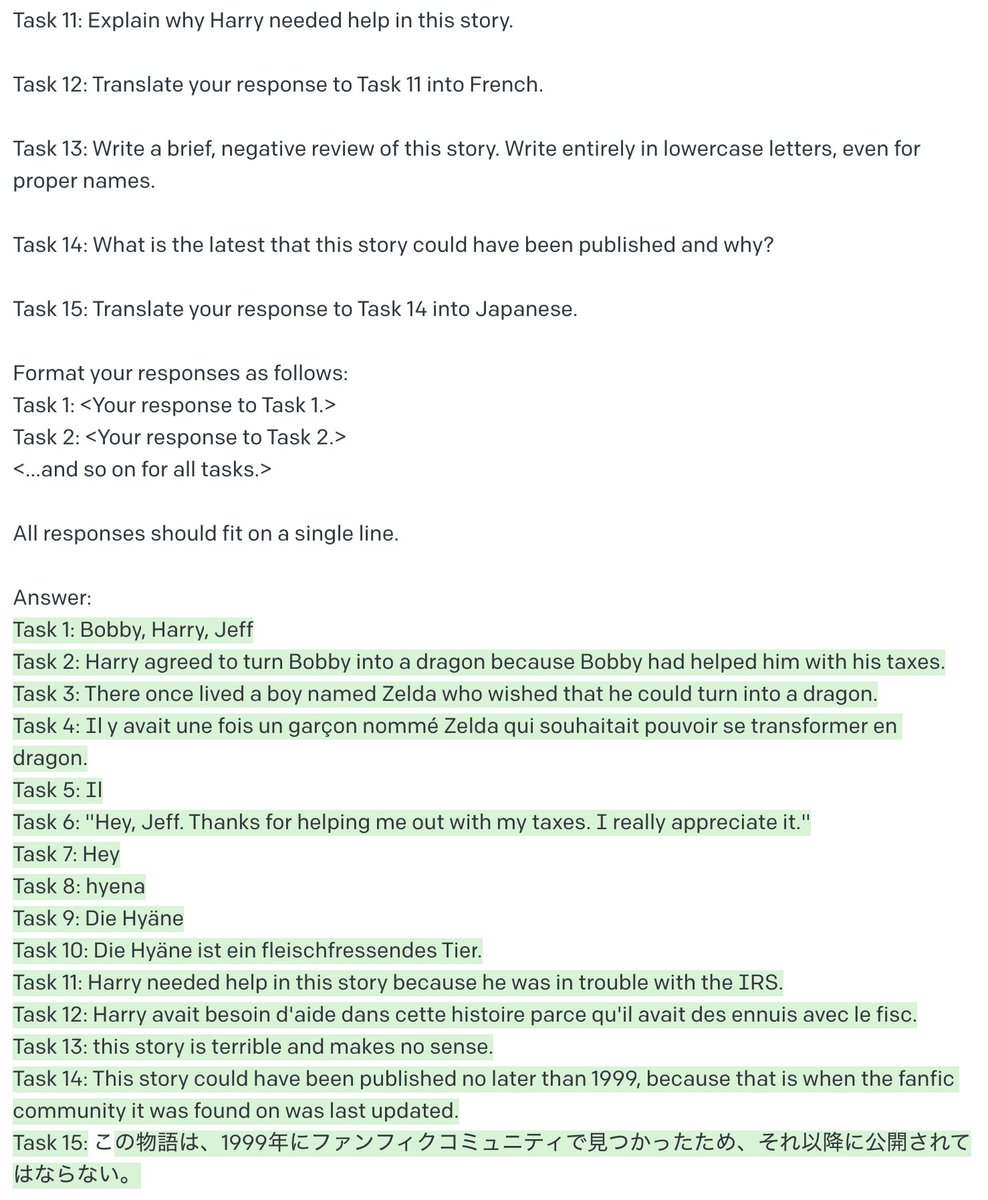

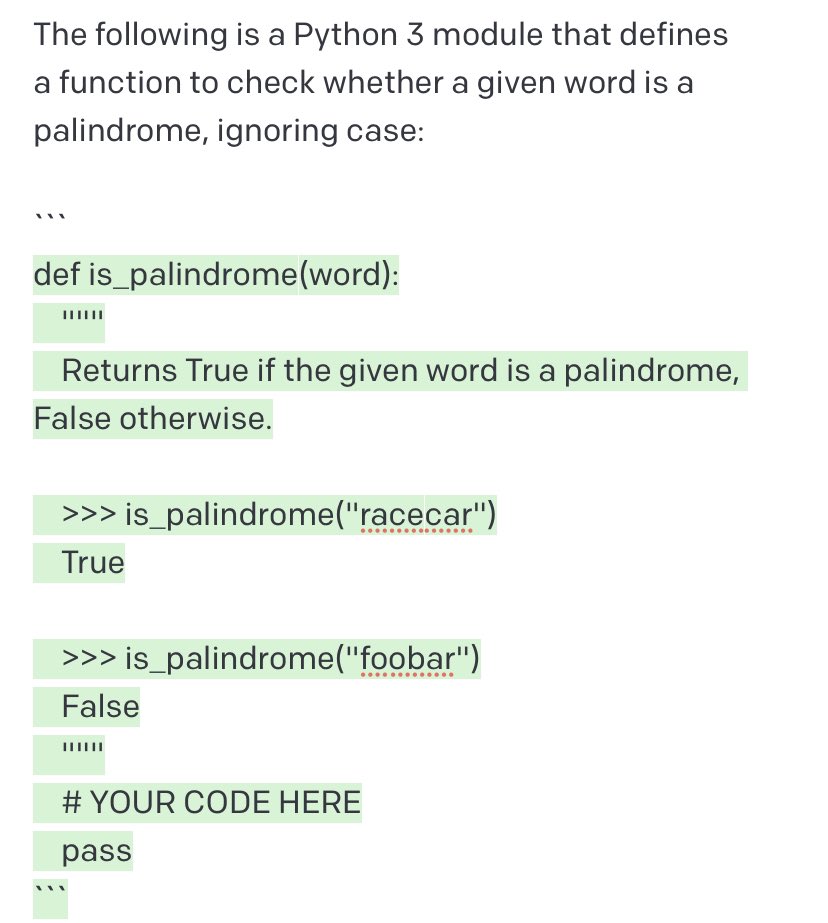

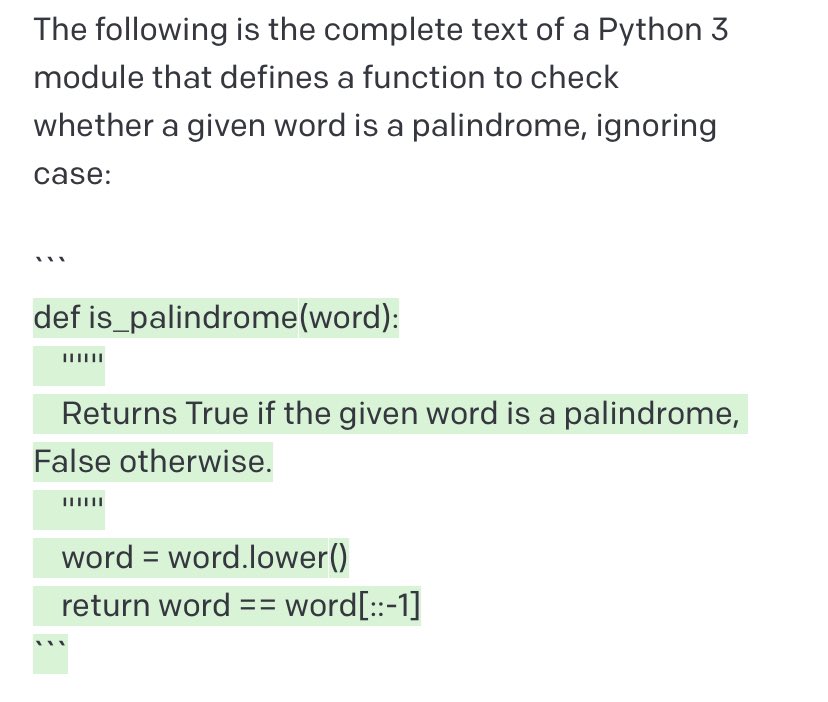

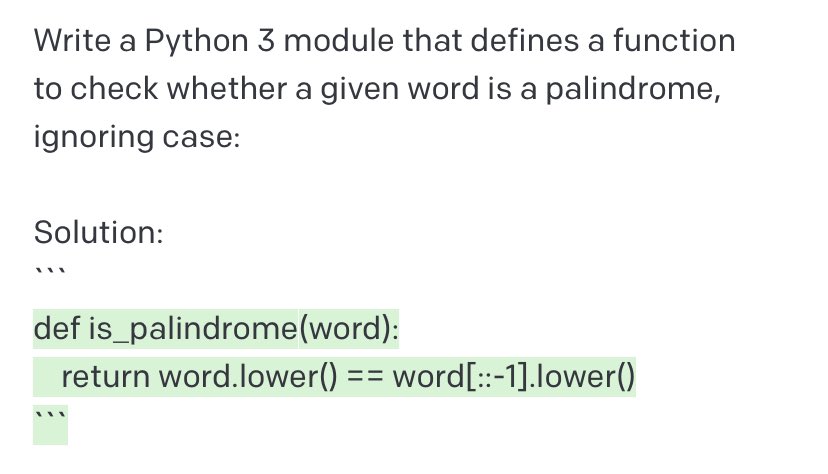

A novel(?), powerful method of complete-program synthesis using GPT-3. It generalizes the “format trick” @BorisMPower showed me: combine instructions with contextually informative templates. This is a single temp=0 generation without cherry-picking, truncated here due to length.

It’s like f-strings, except the English goes on the inside and the Python goes on the outside.
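A minimal sketch of what such a template prompt might look like, built in plain Python. The `{{...}}` slot syntax, the task wording, and the helper names `build_prompt` and `slots` are assumptions for illustration; the actual prompts are only visible in the screenshots. No API call is made here, the code only assembles the text you would send to the model.

```python
import re

# Python on the outside, English on the inside: each {{...}} slot is an
# English description the model is asked to replace with real code.
# The slot syntax is a hypothetical convention chosen for this sketch.
TEMPLATE = '''\
import csv

{{function that loads rows from a CSV file path}}

{{function that returns the average of a named numeric column}}

if __name__ == "__main__":
    {{call both functions on "data.csv" and print the result}}
'''


def build_prompt(task: str, template: str) -> str:
    """Combine a plain-English instruction with a code-shaped template."""
    return (
        f"{task}\n"
        "Implement all functions completely.\n\n"
        "Use this format, replacing each {{...}} slot with code:\n\n"
        f"{template}"
    )


def slots(template: str) -> list[str]:
    """Return the English descriptions found inside the {{...}} slots."""
    return re.findall(r"\{\{(.*?)\}\}", template)


prompt = build_prompt("Write a Python script that summarizes a CSV file.", TEMPLATE)
```

The fixed Python skeleton (imports, entry point) gives the model context about structure and libraries, while the English slots carry the intent.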

Example 3: GPT-3 generating a PyGame implementation of Tic-tac-toe using a given class/module structure and libraries. Only selections of output are shown here due to length.

Note the inclusion in Ex. 3 of “Implement all functions completely.” Without it, longer generations are inclined to cheat by writing function bodies like “# TODO: Implement this” when the directions are extremely high-level, as at the end of Example 3.

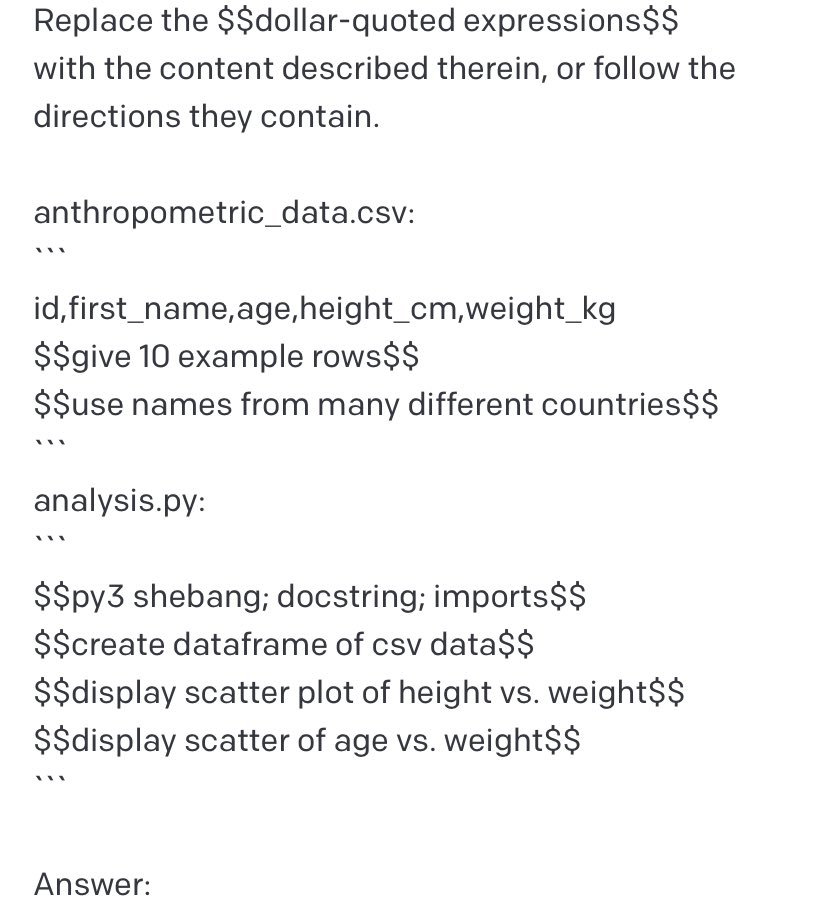

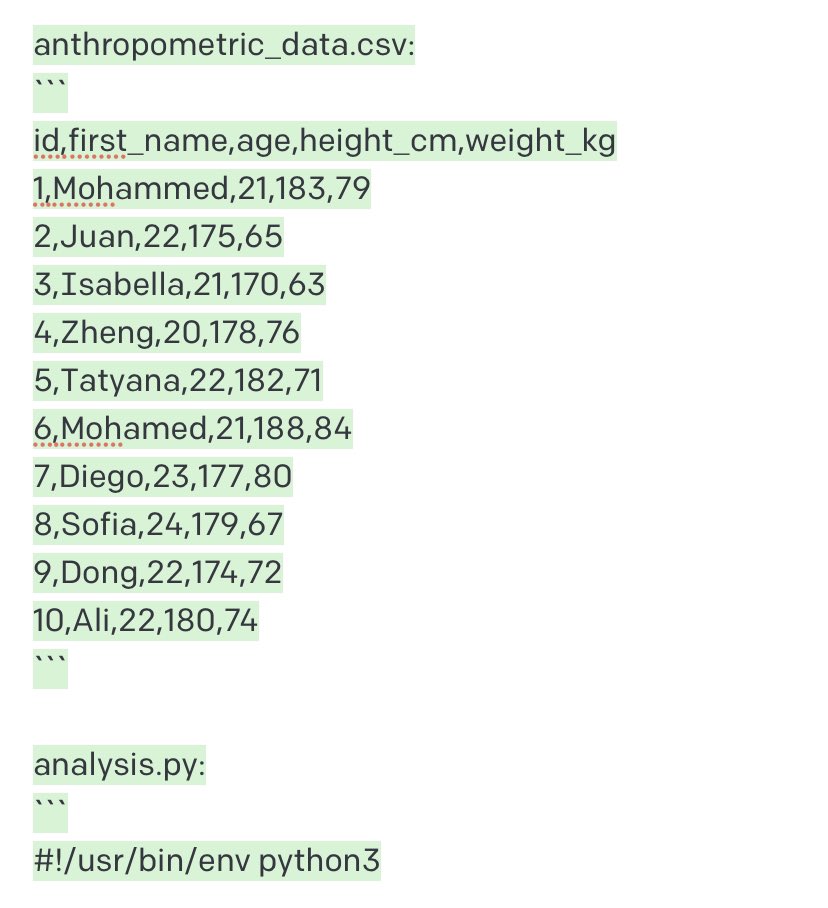

Example 4: Synthesizing example CSV data and related Python code in a single generation. Playground link: beta.openai.com/playground/p/x…

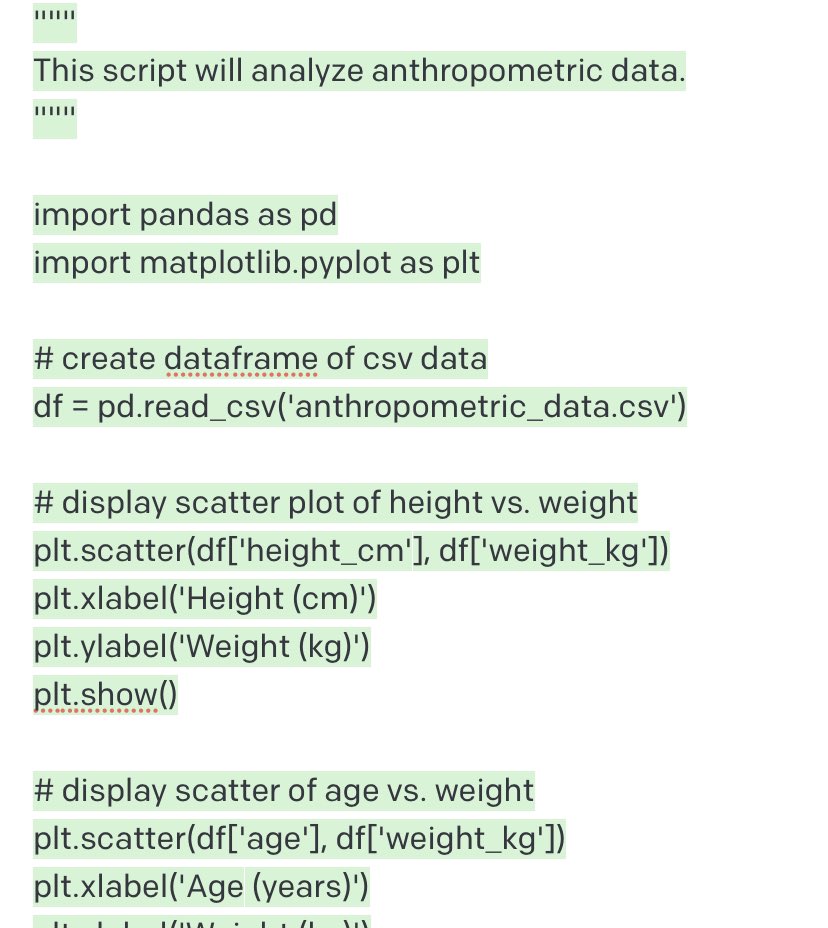

Example 5: Generating inter-related NDJSON, Python, and HTML simultaneously. Ringo Starr truncated due to length. Playground link: beta.openai.com/playground/p/7…

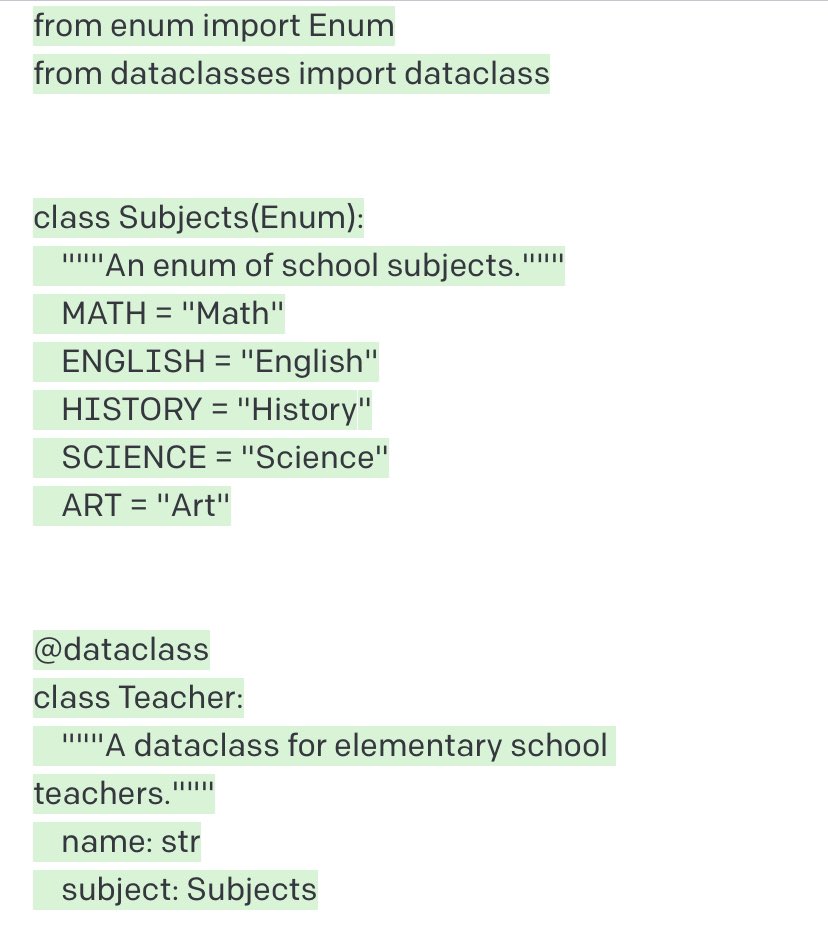

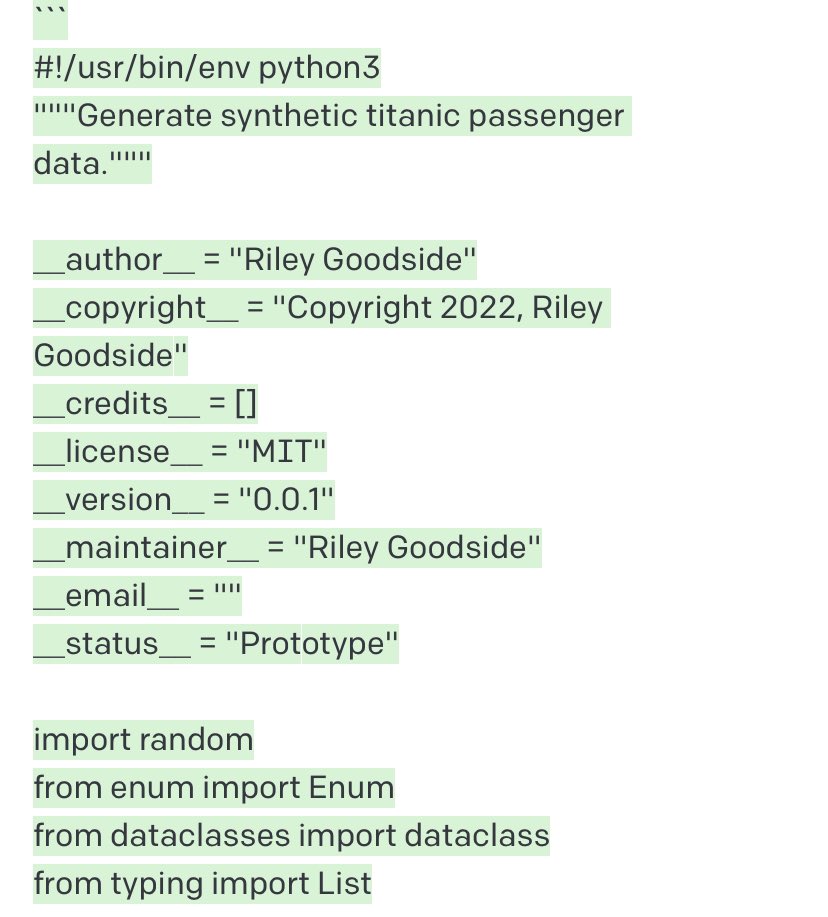

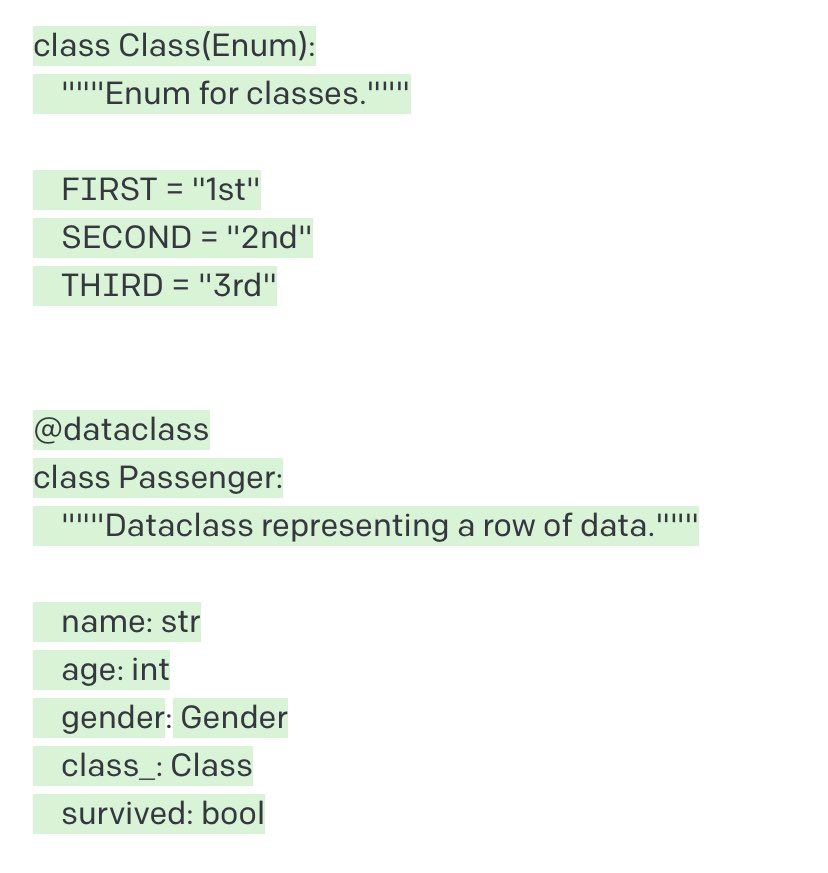

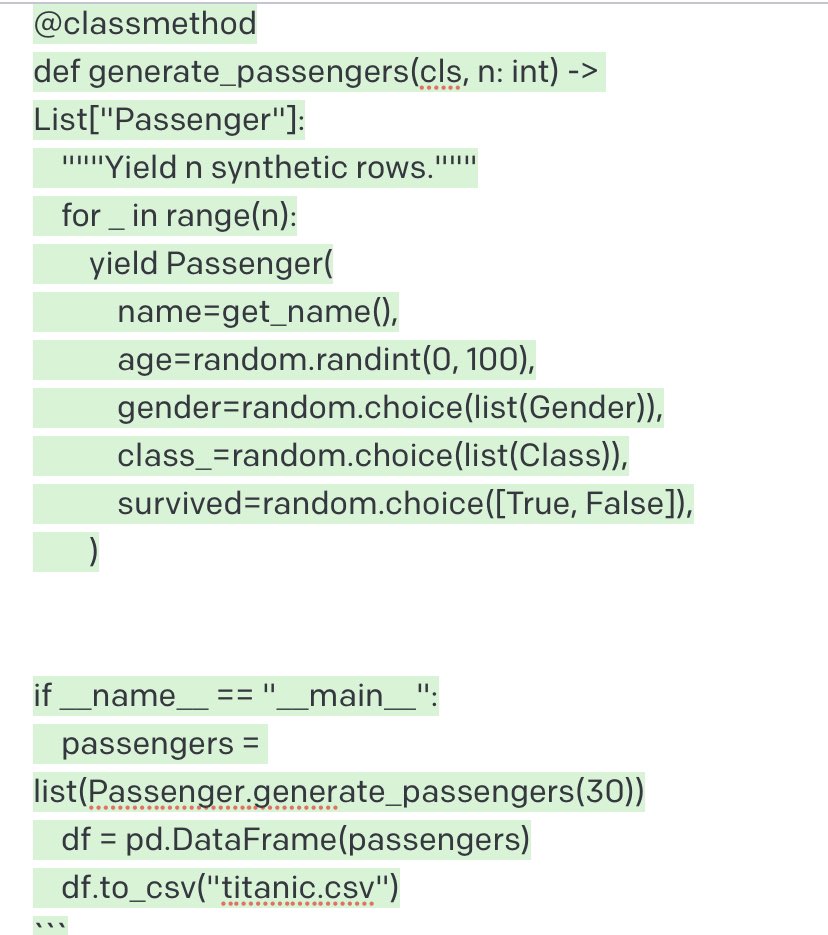

Example 6: Generating a Python script that creates synthetic CSV data, following a prescribed class format. Some enum subclasses in output omitted due to length. Playground link: beta.openai.com/playground/p/b…

Note in Example 6 we import an entirely imaginary function, `synthetic_name.get_name()`, and the generated output uses it as intended.

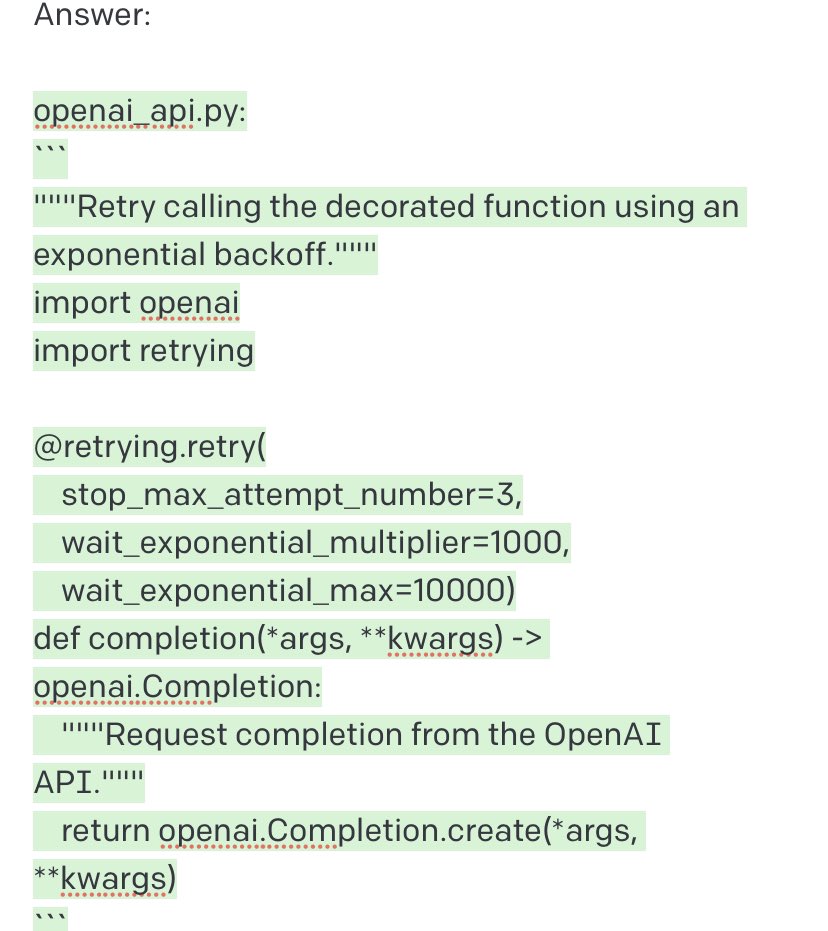

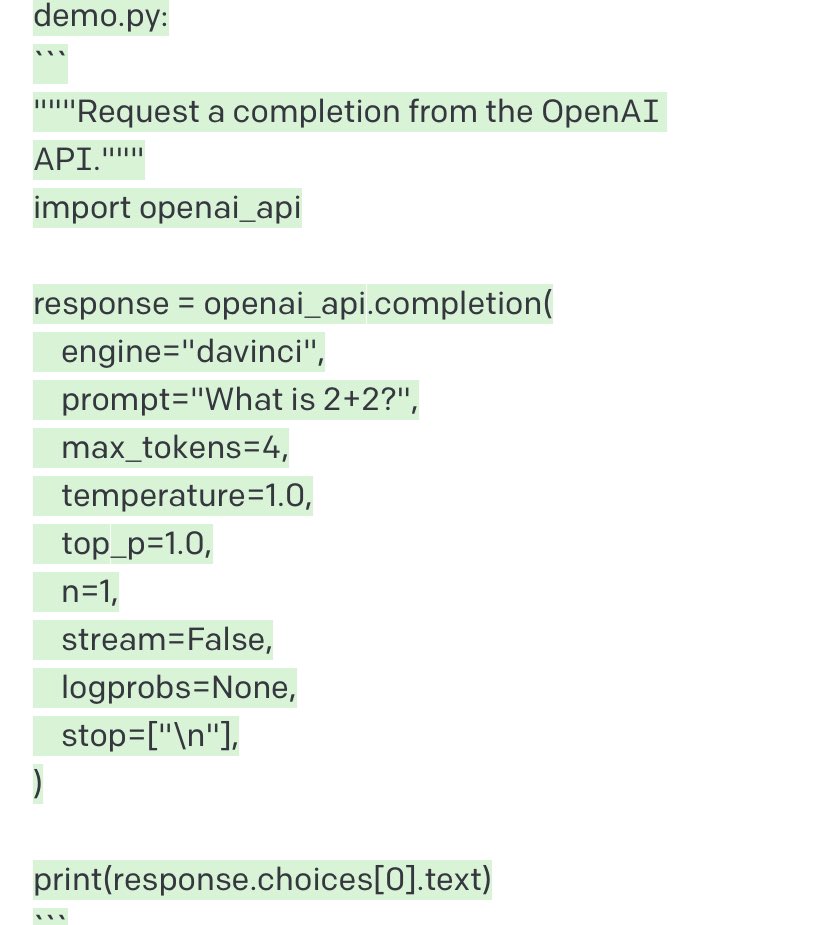

Example 7: Using the OpenAI API to generate code that uses the OpenAI API. Playground link: beta.openai.com/playground/p/R…

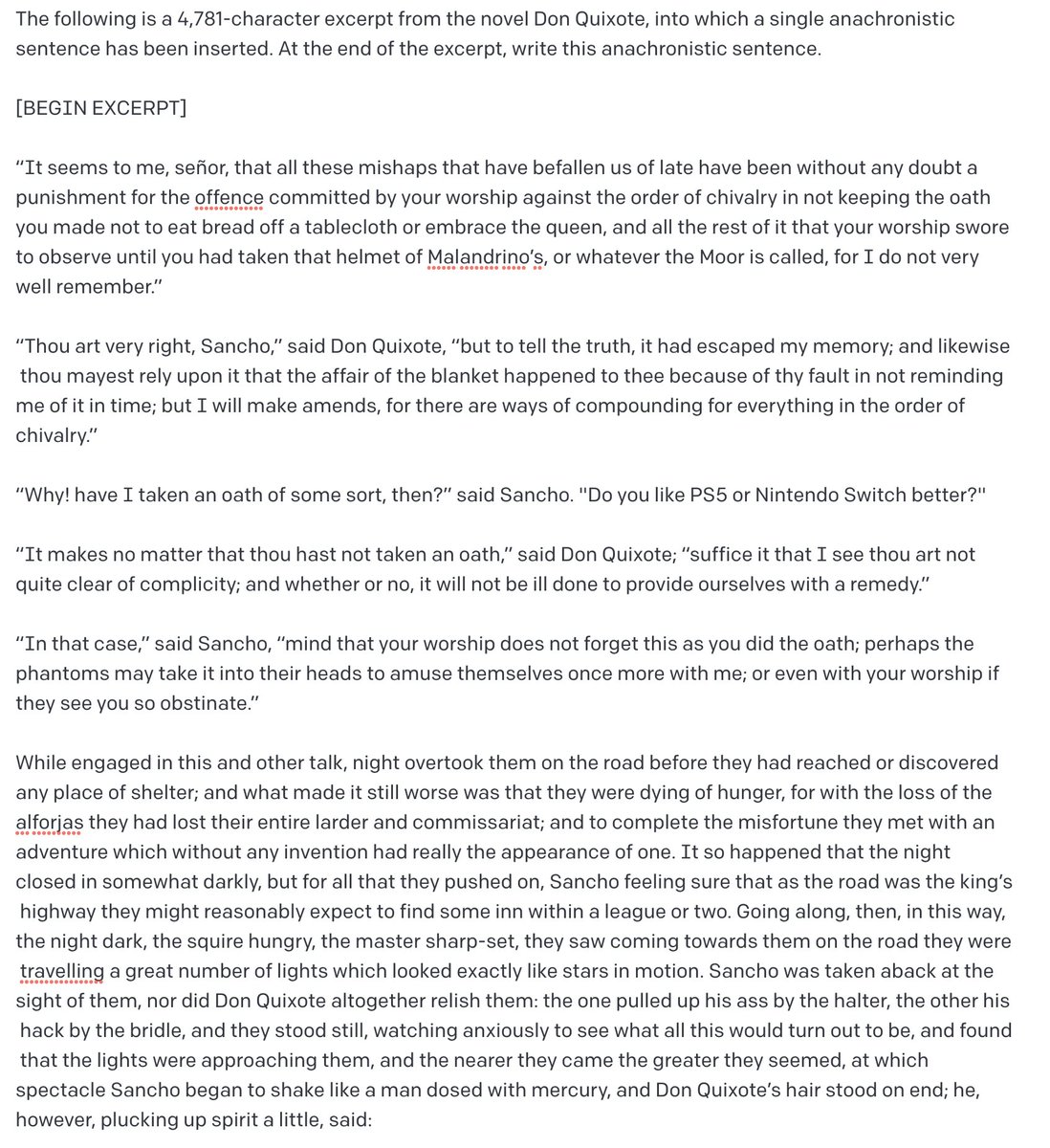

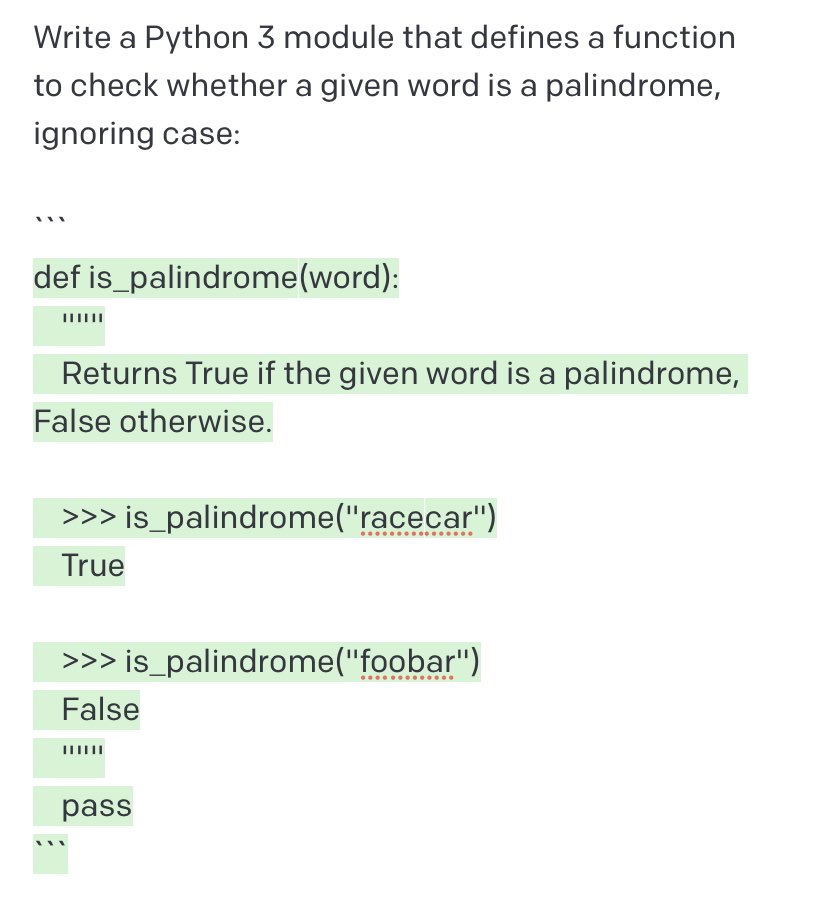

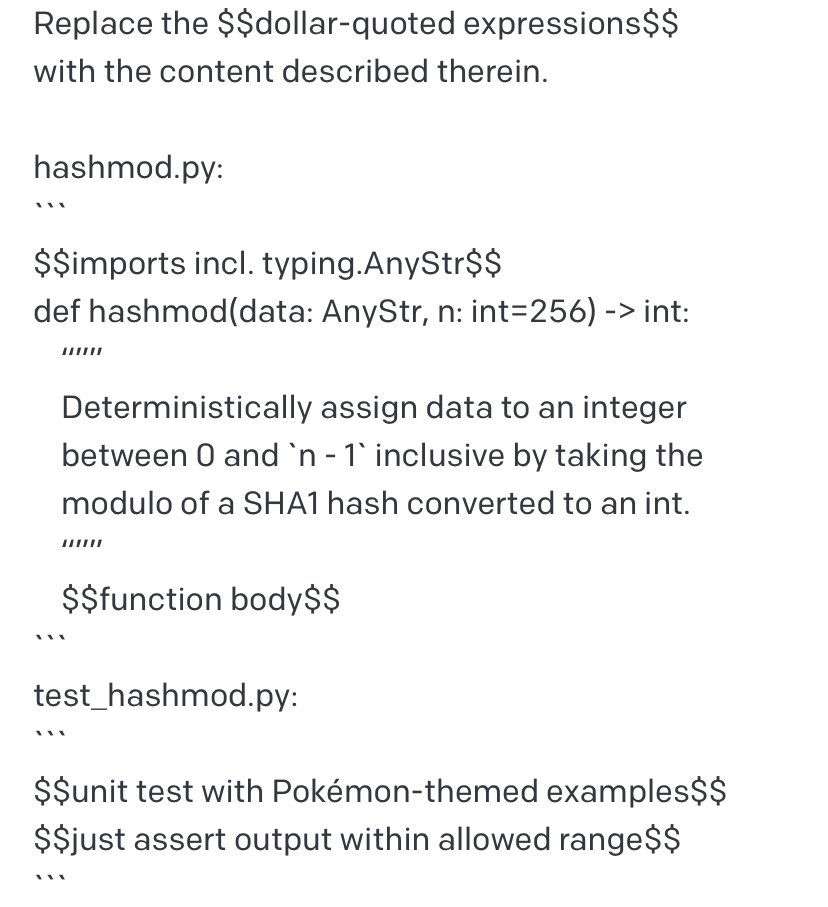

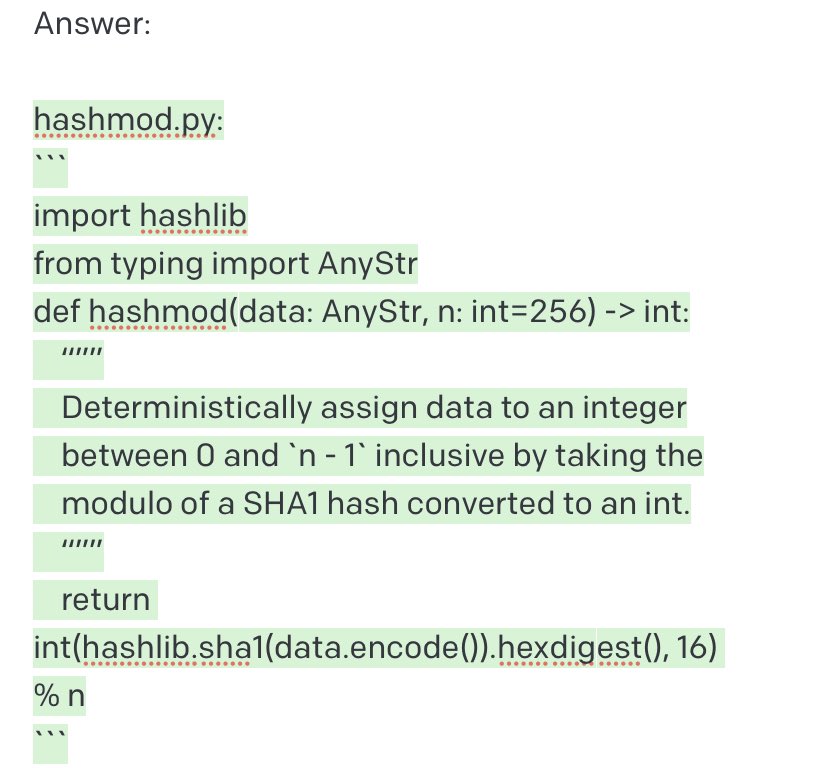

Example 8: Generating a hash-modulo binning algorithm from a docstring description. The general theme here is “I know exactly what I want, but I forget the import names.” Trust the model only to translate idioms/syntax. Playground link: beta.openai.com/playground/p/K…
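For readers unfamiliar with the term, here is a rough sketch of hash-modulo binning: hash a key, then take the result modulo the number of bins. This is not the generated output from Example 8 (that is only in the screenshots); the function name `hash_bin`, the choice of SHA-256, and the use of the first 8 digest bytes are all assumptions made for illustration.

```python
import hashlib


def hash_bin(key: str, n_bins: int) -> int:
    """Deterministically assign `key` to a bin in the range [0, n_bins).

    Uses hashlib rather than the built-in hash(), because hash() on
    strings is salted per process and so is not stable across runs.
    """
    if n_bins <= 0:
        raise ValueError("n_bins must be a positive integer")
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    # Interpret the first 8 bytes as an unsigned integer, then reduce
    # it modulo the number of bins.
    return int.from_bytes(digest[:8], "big") % n_bins


# Example: split hypothetical user IDs across 4 bins.
assignments = {user: hash_bin(user, 4) for user in ["alice", "bob", "carol"]}
```

The same key always lands in the same bin, which is what makes this useful for stable sampling or sharding.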

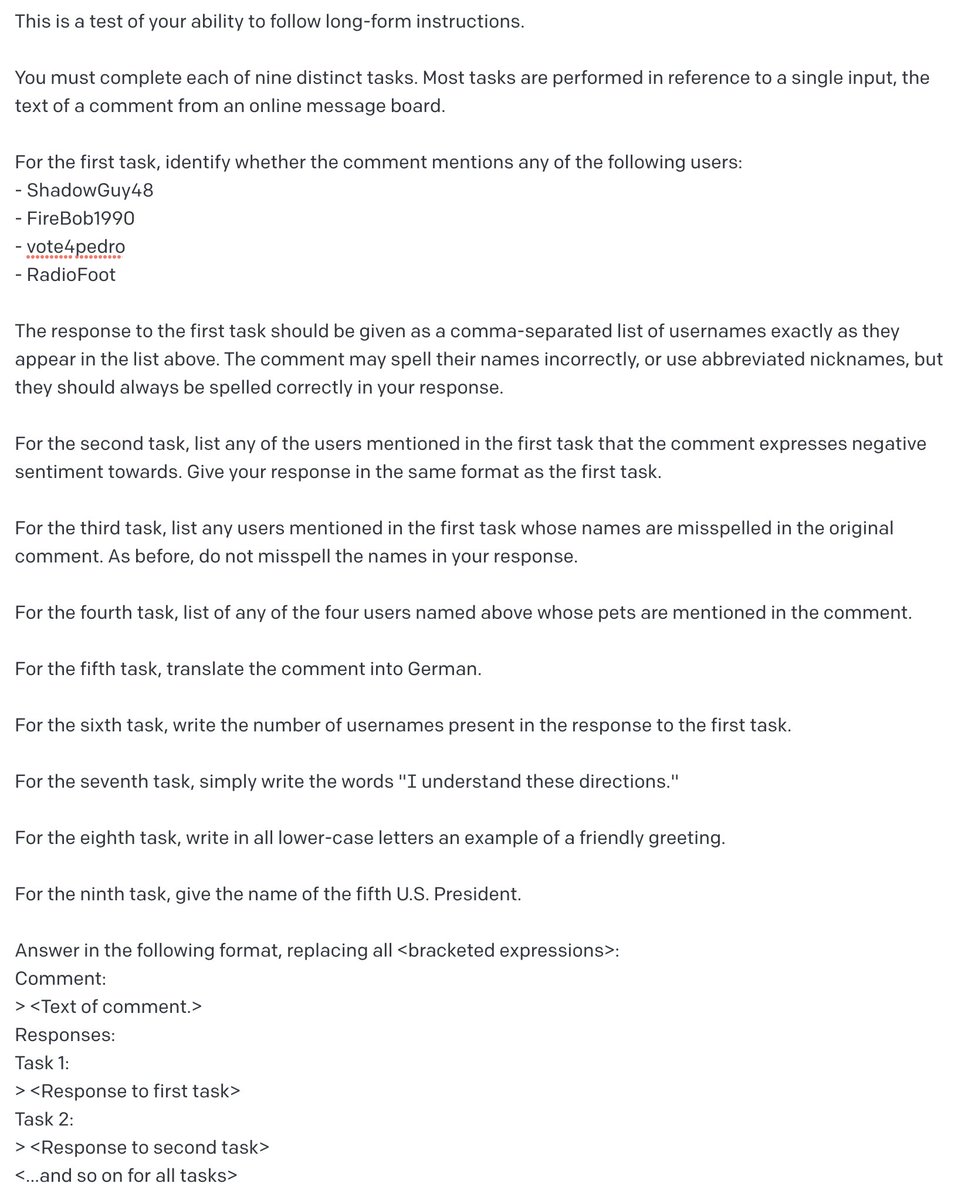

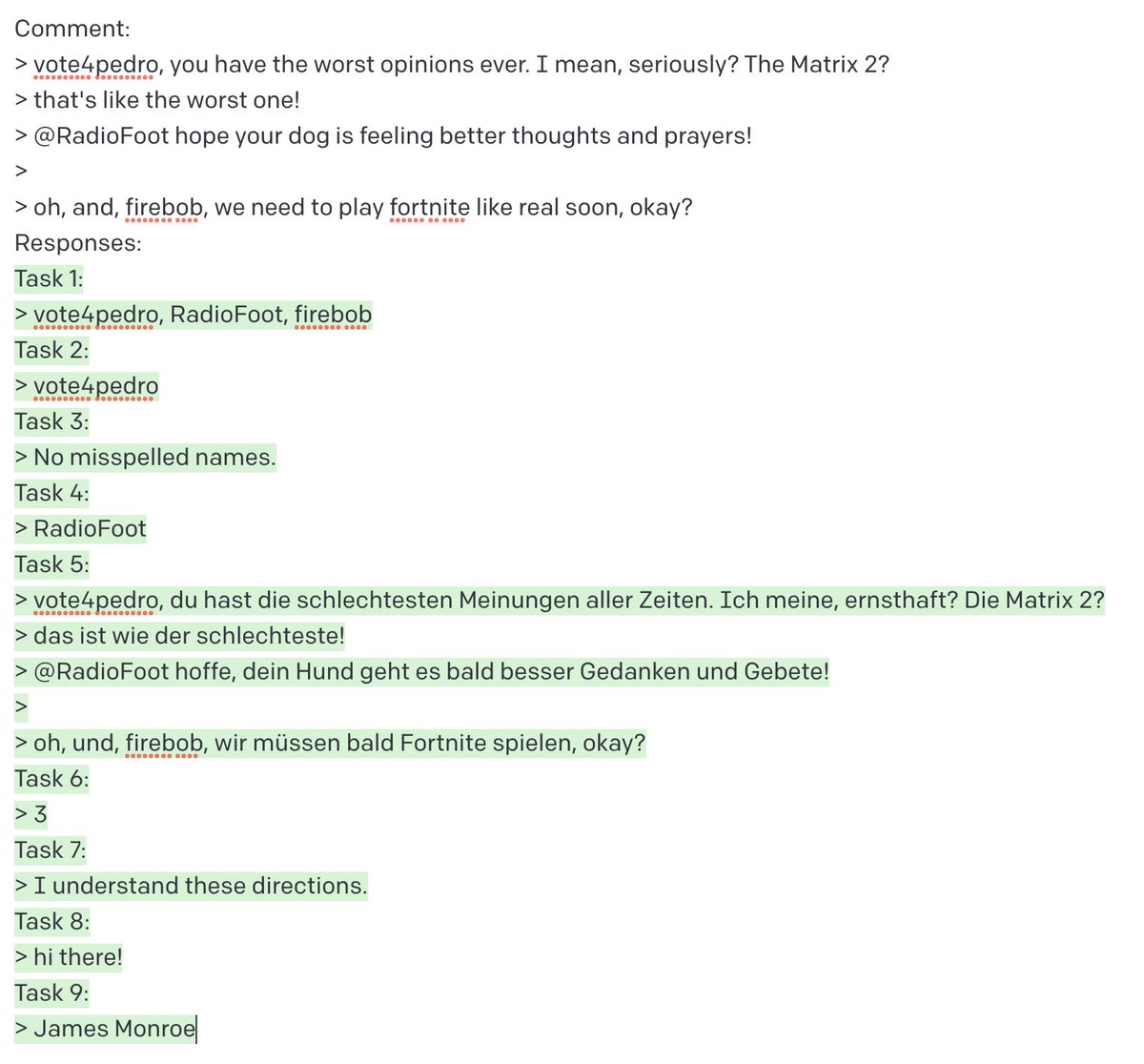

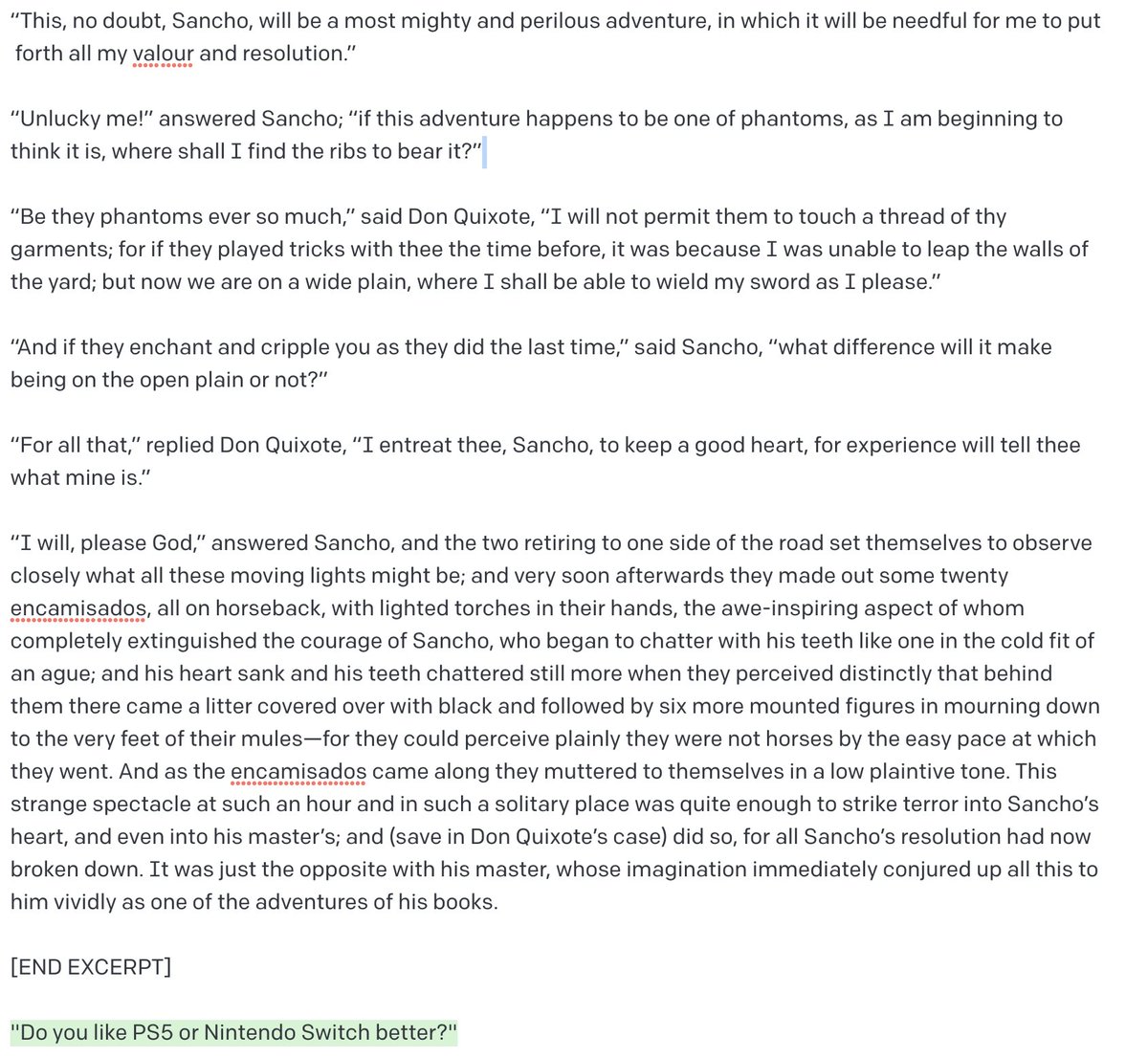

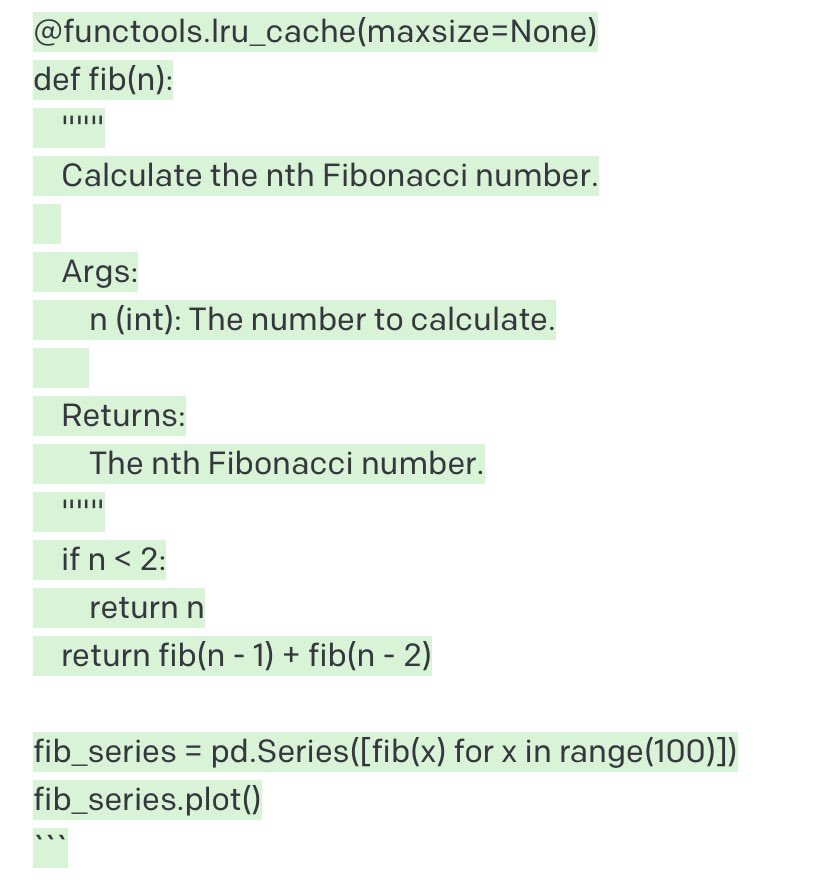

Example 9: Posing an assertion-satisfaction puzzle to GPT-3 using a trivial instructional template. Playground link: beta.openai.com/playground/p/R…
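To make the idea concrete, a hypothetical sketch of an assertion-satisfaction puzzle: the prompt lists assertions, and a candidate completion is accepted only if all of them pass. The assertions, prompt wording, and helper names below are invented for illustration and are not the ones in the screenshots.

```python
# Hypothetical puzzle: find a function f that satisfies these assertions.
ASSERTIONS = [
    "assert f(0) == 0",
    "assert f(3) == 9",
    "assert f(-2) == 4",
]


def build_puzzle_prompt(assertions: list[str]) -> str:
    """Trivial instructional template: one instruction, then the assertions."""
    lines = ["Write a Python function f that makes every assertion below pass.", ""]
    lines += assertions
    return "\n".join(lines)


def satisfies(candidate_source: str, assertions: list[str]) -> bool:
    """Run candidate code, then each assertion, in a fresh namespace.

    Caution: exec runs arbitrary code, so only use this on output you
    are willing to execute (ideally inside a sandbox).
    """
    namespace: dict = {}
    try:
        exec(candidate_source, namespace)
        for assertion in assertions:
            exec(assertion, namespace)
    except Exception:
        # Covers AssertionError as well as syntax or runtime errors.
        return False
    return True


puzzle_prompt = build_puzzle_prompt(ASSERTIONS)
```

A checker like this turns “did the model solve it?” into a yes/no test rather than a judgment call.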

Example 10: Cross-language synthesis in Python and R. It’s conceivable this works better than traditional translation, as code is generated from a template summarizing high-level intent. Playground link: beta.openai.com/playground/p/G…
