PLEASE JUST LET ME EXPLAIN PT 1:

AI ISN'T AN AUTOMATIC COLLAGE MACHINE

i'm not judging you for thinking so! the reality is difficult to explain because machine learning is a deep field. *there is no plagiarism involved*.

i am not here to defend nfts just to be clear.

A THREAD!

this is a sequel to the legendary monkeys with cigarettes thread. you can read that here. i am also not here to debate the ethics of image scraping as a concept, which is far, far beyond my intellectual ability to discuss and i will freely admit that.

https://twitter.com/ai_curio/status/1532787119902572546

THE AUTOMATIC COLLAGE MACHINE: it doesn't actually know how to do that.

the process of creating a "model" (the ai's "brain" that "knows how to draw", BIG AIR QUOTES IT'S NOT CONSCIOUS) is necessarily destructive (information is not preserved). it is not storing pics anywhere
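a toy sketch of what "destructive" means here, not how an image model is actually trained: the made-up dataset, the straight-line "model", and every name below are assumptions for illustration. ten thousand points go in, two numbers come out, and there's no way to get any individual point back from those two numbers.

```python
import random

random.seed(0)

# hypothetical "training set": 10,000 noisy points scattered around y = 3x + 2
xs = [random.uniform(0, 10) for _ in range(10_000)]
ys = [3 * x + 2 + random.gauss(0, 1) for x in xs]

# "training": ordinary least squares boils the whole dataset down to two numbers
n = len(xs)
mean_x = sum(xs) / n
mean_y = sum(ys) / n
covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
variance = sum((x - mean_x) ** 2 for x in xs)
slope = covariance / variance
intercept = mean_y - slope * mean_x

# the finished "model" is just these two numbers; the 10,000 points are not in it
model = (slope, intercept)
print(f"trained on {n} points, kept {len(model)} numbers: {model}")
```

the model ends up knowing the trend ("y goes up about 3 for every 1 of x") without keeping any of the points that taught it the trend.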

STATISTICS IS HARD: it's not creating an average image

the exact method used to actually perform the process of making the image is sort of beyond the scope of this thread (look up "gradient descent" to start). it hasn't "memorized pictures". it's "memorized traits" about things
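if you do look up gradient descent, the core loop is small. this is a minimal sketch on a made-up one-number problem (the loss function, learning rate, and step count are all assumptions); real systems do the same kind of nudging on a huge number of values at once.

```python
def loss(w):
    # made-up "how wrong am i" score: lowest (zero) when w is exactly 4
    return (w - 4.0) ** 2


def gradient(w):
    # slope of the loss at w: tells us which way is "downhill"
    return 2.0 * (w - 4.0)


w = 0.0              # start from a bad guess
learning_rate = 0.1  # how big each nudge is

for step in range(100):
    # take a small step downhill
    w -= learning_rate * gradient(w)

print(f"w ended up at {w:.6f}, loss {loss(w):.2e}")
```

nothing in the loop is told the answer is 4. it only ever asks "which direction makes me less wrong" and takes a small step that way, over and over.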

IT DOES ACTUALLY """KNOW WHAT A HAND IS""", think those image recognition things. this hasn't memorized "a picture of a contempt face", it's seen a bajillion images that have been labeled with emotions and memorized the "features of contempt". it's not duplicating, it's learning

sidebar: these image recognition things are in fact *what our modern image synthesis ais were built out of* - "if the ai knows what a contemptuous face looks like if you give it faces to look at, what happens if we ask it to show us what it thinks a contemptuous face looks like"

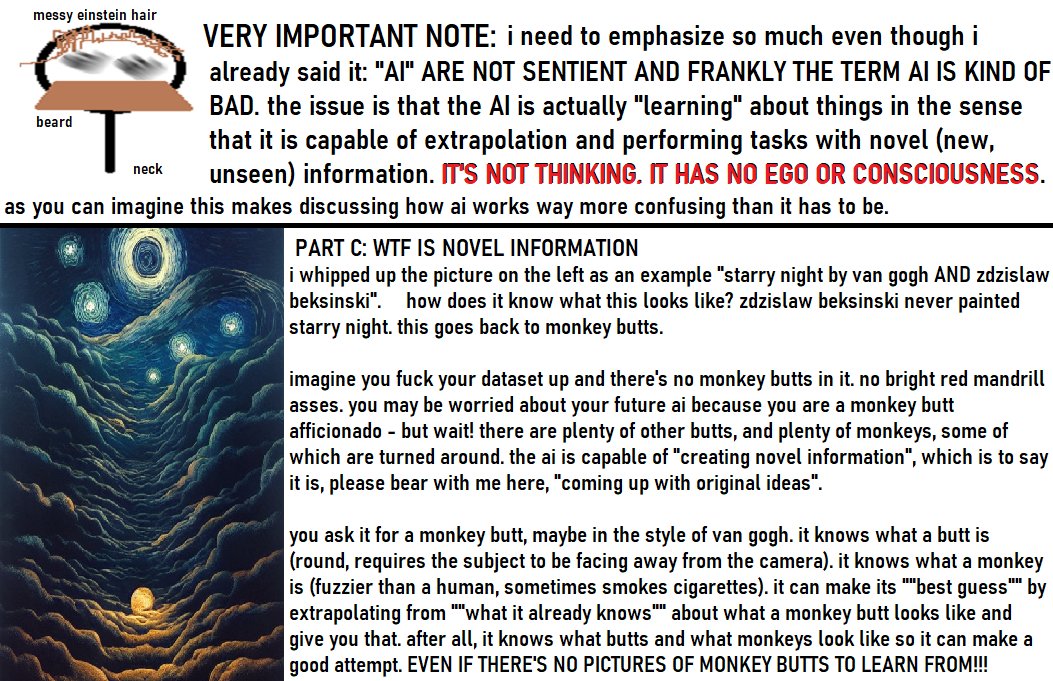

HOW DID IT DO THAT:

when you ask it for things like "art in the style of [two artists i like]" it's not "creating an average picture" (see a couple posts back). what's happening is a grad school level statistics brainfuck that involves it doing 512-dimensional matrix math.

HOW DOES IT KNOW HOW TO DO THAT:

this is the best explanation of the latent vector i can give. imagine an image is composed of a million "directions" to all the traits in it. your picture of a monkey goes 100 units monkeyward, 150 units jungleward, 50 units sunward, etc. etc.

note for pedants with graduate degrees and phds: i know this is not a perfect description of the latent vector but i am trying to keep this approachable for people who don't have graduate degrees or phds (like myself)
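the "directions" picture can be sketched in a few lines. this is a toy with four made-up trait axes instead of hundreds, and every name and number in it is an assumption for illustration (the monkey numbers are the ones from the post above). it just shows that "how similar are these two pictures" turns into "how closely do their arrows point the same way".

```python
import math

# made-up trait axes; a real latent space does not have neat human-readable labels
AXES = ["monkeyward", "jungleward", "sunward", "cigaretteward"]


def cosine_similarity(a, b):
    # 1.0 means the two arrows point the same way, 0.0 means unrelated directions
    dot = sum(x * y for x, y in zip(a, b))
    length_a = math.sqrt(sum(x * x for x in a))
    length_b = math.sqrt(sum(y * y for y in b))
    return dot / (length_a * length_b)


# each "picture" is how far it goes along each axis
monkey_pic = [100.0, 150.0, 50.0, 0.0]
smoking_monkey_pic = [100.0, 150.0, 50.0, 80.0]
beach_pic = [0.0, 0.0, 200.0, 0.0]

monkey_vs_smoking = cosine_similarity(monkey_pic, smoking_monkey_pic)
monkey_vs_beach = cosine_similarity(monkey_pic, beach_pic)

print(f"monkey vs smoking monkey: {monkey_vs_smoking:.2f}")
print(f"monkey vs beach:          {monkey_vs_beach:.2f}")
```

the two monkey pictures score as much closer to each other than the monkey and the beach do, even though no pixels were ever compared.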

that's it, this is the final main part of the thread. i'm gonna go into some postscripts in a second.

i love you, i am not yelling at you, if you feel angry at this thread i would like to talk to you calmly and non-confrontationally, you can dm me if you're afraid of dogpiles.

Q: i saw the ai making a signature on its work! is it copying that signature from someone?

A: the ai has learned that many artistic works contain signatures and is trying to sign it itself. it's not copying any one particular signature. if you zoom in it'll probably be gibberish

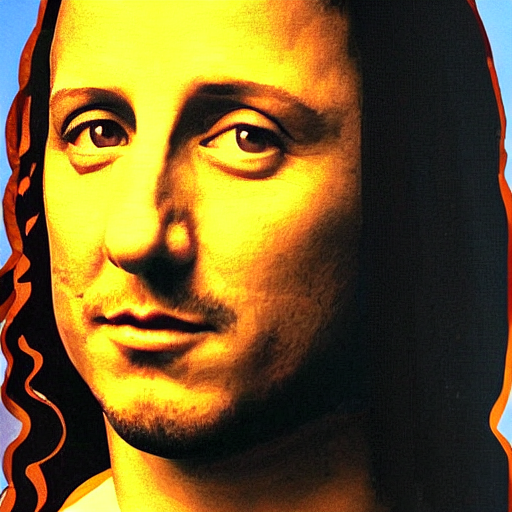

Q: i saw stable diffusion create an exact duplicate of the mona lisa just like a little fucked up. you said the ai isn't plagiarizing. what gives?

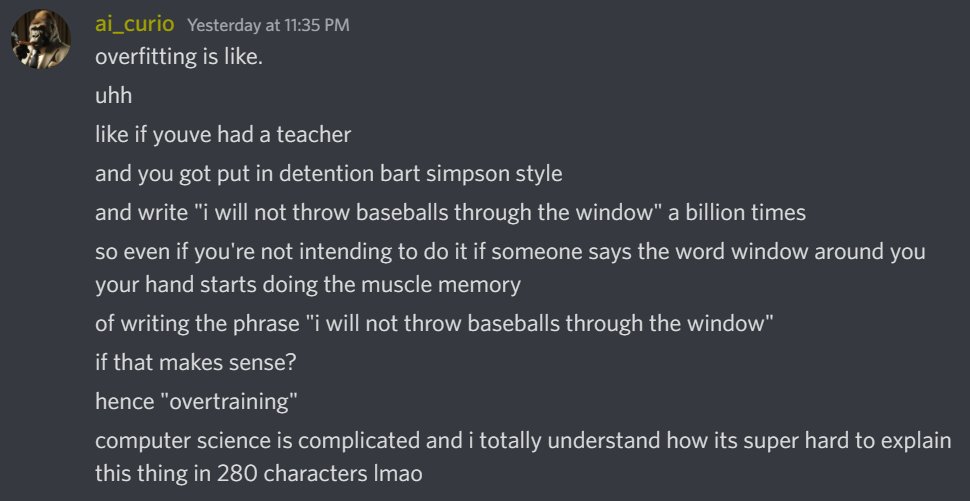

A: this is called "overfitting" and it's a sign that an ai has seen SO MANY duplicates of a particular thing in its training data. i'll let discord-me explain.

basically there are so so so many versions of the mona lisa/starry night/girl with a pearl earring in the dataset, which they didn't deduplicate (intentionally or not), that it goes "too far" in that direction when you try to "drive there" in the latent vector and gets stranded.

note that with a strong enough other direction (like adam sandler) you can steer it back into place sometimes, see attached.

this is also why "prompt weights" are used in ai art because that basically lets you guide "how far" the ai goes in a "given direction".
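a hedged sketch of the idea behind prompt weights, using two-axis toy arrows: the `combine` helper, the vectors, and the weights are all hypothetical, and real tools implement weighting in their own ways. the point is only that a weight scales how hard each direction pulls before the directions get added up.

```python
import math


def combine(weighted_directions):
    """Add up (direction, weight) pairs and return one unit-length target direction."""
    size = len(weighted_directions[0][0])
    total = [0.0] * size
    for direction, weight in weighted_directions:
        for i in range(size):
            total[i] += weight * direction[i]
    length = math.sqrt(sum(x * x for x in total))
    return [x / length for x in total]


# toy arrows: axis 0 is "mona lisa-ward", axis 1 is "adam sandler-ward"
mona_lisa = [1.0, 0.0]
adam_sandler = [0.0, 1.0]

mostly_mona = combine([(mona_lisa, 3.0), (adam_sandler, 1.0)])
mostly_adam = combine([(mona_lisa, 1.0), (adam_sandler, 3.0)])

print("mostly mona:", [round(x, 3) for x in mostly_mona])
print("mostly adam:", [round(x, 3) for x in mostly_adam])
```

same two ingredients both times, but turning up one weight swings the target toward that ingredient.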

further reading: this is a copyright proposal by law researcher ben sobel that draws a distinction between generative models being used for transformative works in an artistic sense and generative models used by corps to cut costs. i recommend it lots

bensobel.org/files/misc/Sob…

further notes: i said this already but i want to reiterate that i think corporations using AI to skimp on paying artists is morally and ethically abhorrent, i do not support it, and i get the fears!

i use AI as a tool, as an artist, to make pieces. i hope i'm not your enemy.

:)

additions from others:

https://twitter.com/aicrumb/status/1564905017114492929

accidentally posted the image instead of the qrt

https://twitter.com/aicrumb/status/1564907971104358400
