(1/24) Are you liking my @ethereum roadmap posts? Do you want to go further into the future, beyond the roadmap?

Bitcoin was our 0 to 1 for trustless applications. Ethereum for trustless computing.

And soon, @eigenlayer will extend $ETH to provide generic, extendable trust.

(2/24) @Bitcoin invented decentralized computation: the coordination of untrusted computers to achieve a unified computing environment.

However, Bitcoin was implemented as an application-specific blockchain computer. Its only function is to transfer $BTC.

(3/24) In order to create any other application/functionality, you would have to deploy a new network with a new basis of decentralized trust.

Each application would fracture the available trust further and further.

(4/24) In 2015, @VitalikButerin delivered on Satoshi's 2008 vision: generalized trustless computing.

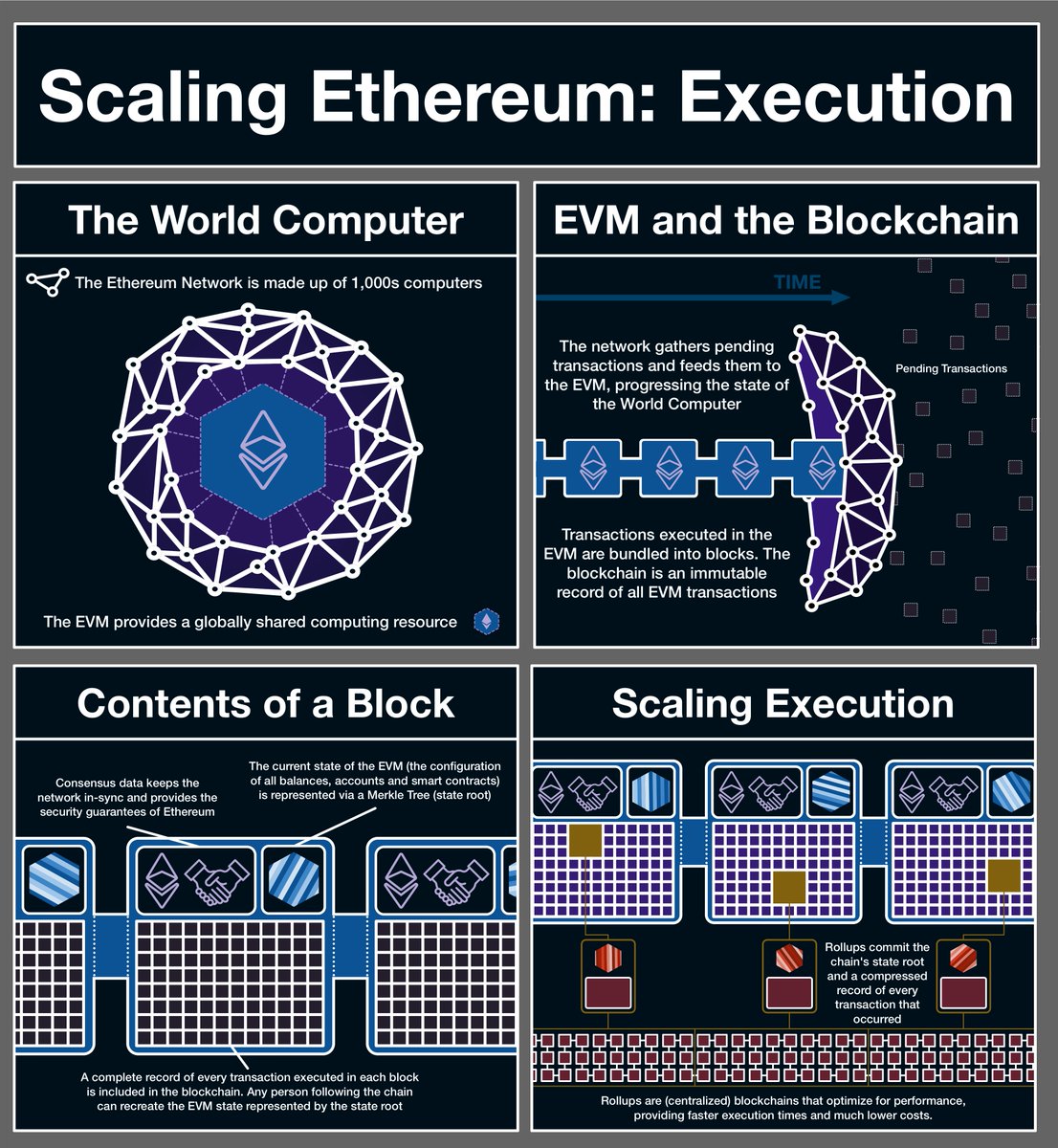

(5/24) @ethereum replaces Bitcoin's application-specific computing environment with a generalized one.

Bitcoin can only add or subtract. Ethereum is Turing-complete.

(6/24) Turing-completeness is a concept that boils down to "if a system is Turing-complete, it can compute anything that any other Turing-complete system can compute."

We can prove that @ethereum is Turing-complete, and therefore we know it is capable of computing everything your MacBook can.

https://twitter.com/SalomonCrypto/status/1543571618298880002

(7/24) @ethereum allows a developer to deploy a decentralized application without having to build out a decentralized trust network.

Decentralized trust becomes a resource supplied by Ethereum. All the technology, infrastructure and participants get abstracted away.

(8/24) From the perspective of an application, @ethereum provides a decentralized trust module. Developers are freed up for innovation.

Before, they had to reinvent the wheel and nurture a network before they even got started. Today all that comes in the base package.

(9/24) However, @ethereum didn’t solve the trust problem. The trust module is built on the first three layers of the system (trust, consensus, context).

This created a great platform for innovation, but requires a complete rebuild for changes to those 3 layers.

(10/24) Turns out @ethereum only provides trust around block production, but there are lots of other applications that need trust.

Any other trust we must supply directly through middleware.

(11/24) Middleware is any software that provides a service, information or method of communication between chains.

Middleware exists between chains and therefore must secure its own trust.

(12/24) A good example of middleware is oracles, software that transfers data in and out of @ethereum’s computing environment.

These protocols must bootstrap their own trust network… which is very hard and expensive. There’s a reason why @chainlink is the only game in town.

https://twitter.com/SalomonCrypto/status/1557211373288898560

(13/24) Fortunately we have @eigenlayer! So new I can only find 3 videos.

But just one is all it takes. Once you hear @sreeramkannan walk you through it, you’ll see that this technology will eventually be in the core @ethereum protocol.

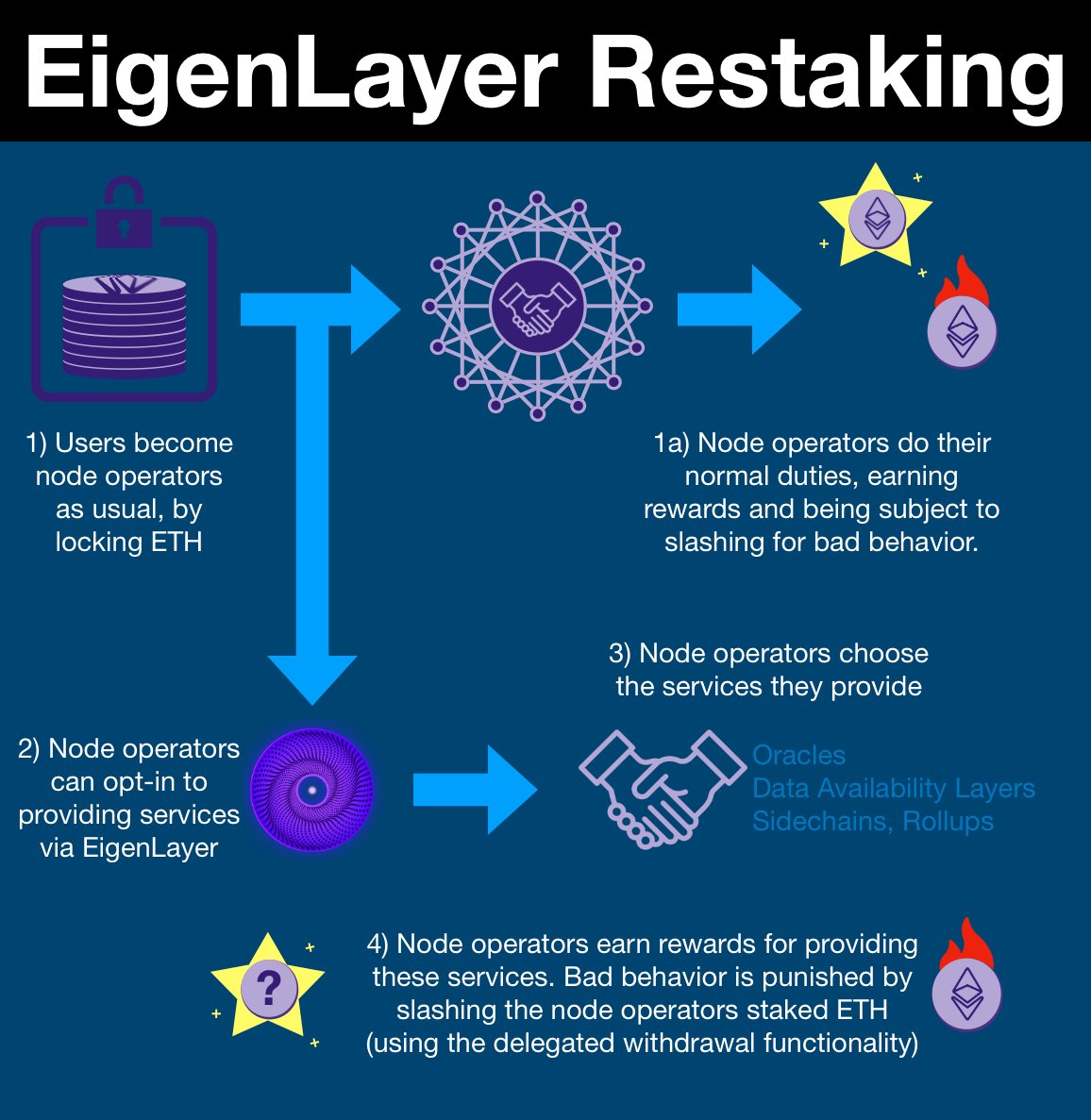

(14/24) @eigenlayer integrates at the node level of @ethereum. Nodes are where $ETH staking takes place.

Node operators lock $ETH in exchange for the right to operate a node. If the operator behaves and fulfills his duties, he earns $ETH.

(15/24) If he fails in his duty (or worse, takes malicious action), a portion of the $ETH that he locked up is slashed (permanently taken) and he is ejected from the network.

This is the mechanism by which @ethereum secures and delivers trust.

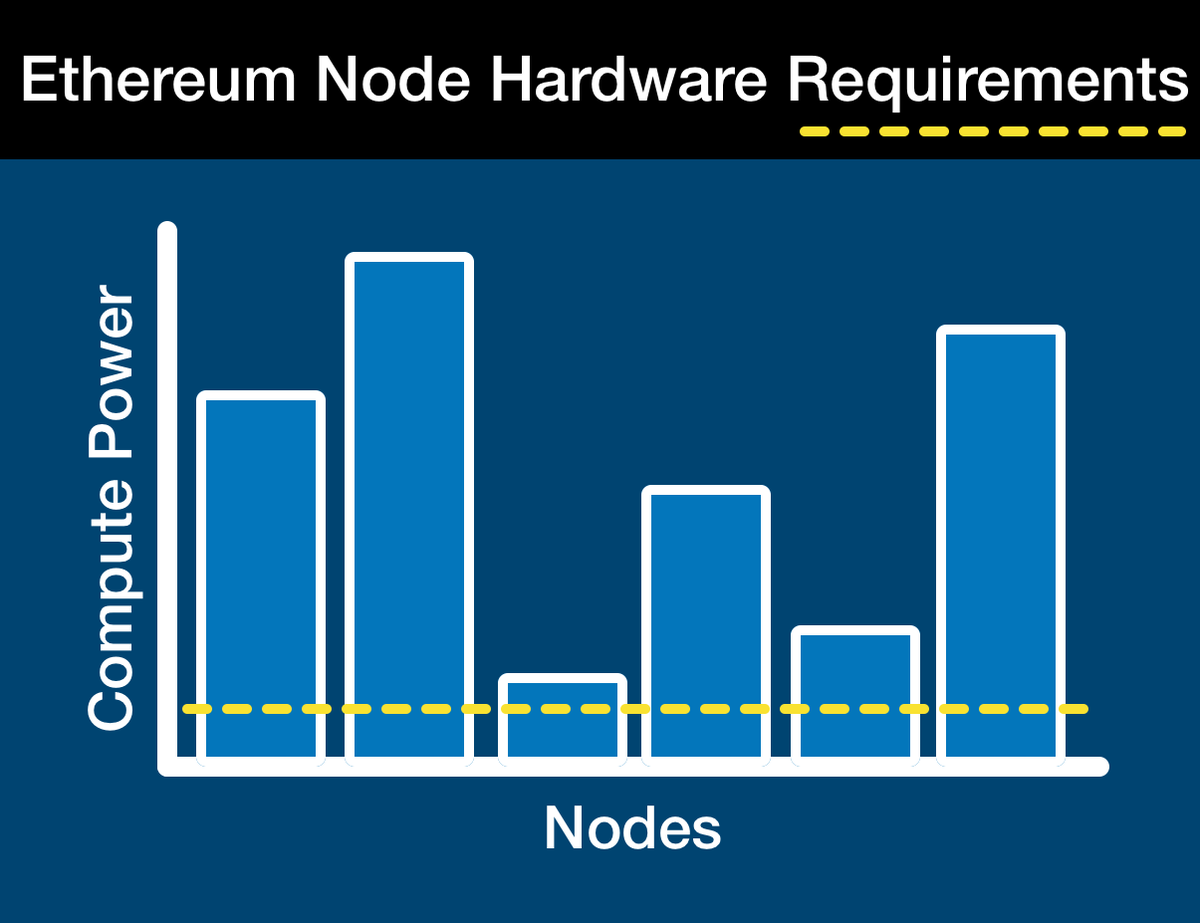
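
Here is a minimal sketch of the stake, reward, and slash loop described above. The `Validator` class and both helper functions are hypothetical names made up for illustration, not part of any Ethereum client. The 32 ETH figure matches Ethereum's validator deposit, but the reward amount and slash fraction are invented numbers, not real protocol rates.

```python
from dataclasses import dataclass


@dataclass
class Validator:
    """Hypothetical model of a node operator (not a real client type)."""
    stake_eth: float      # ETH locked for the right to operate a node
    active: bool = True   # becomes False once ejected from the network


def reward(v: Validator, amount_eth: float) -> None:
    """Pay an operator who behaved and fulfilled his duties."""
    if v.active:
        v.stake_eth += amount_eth


def slash(v: Validator, fraction: float) -> float:
    """Permanently take a portion of the locked ETH and eject the operator."""
    penalty = v.stake_eth * fraction
    v.stake_eth -= penalty
    v.active = False
    return penalty


honest = Validator(stake_eth=32.0)
reward(honest, 0.5)            # illustrative reward, not a protocol rate

cheater = Validator(stake_eth=32.0)
taken = slash(cheater, 0.25)   # illustrative fraction, not a protocol rate
```

The point of the sketch is only that trust comes from capital that can be taken away: the honest operator's balance grows, the malicious one loses part of his stake and his seat.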

(16/24) And so, a node operator puts capital at stake and operates a node.

Nodes are real computers scattered across the globe. The requirements for running an @ethereum node are EXTREMELY low, especially considering the capital at stake.

(17/24) Requirements are kept low deliberately; every person, whether MEGACORP CEO or Aunt Phillis, can become a node operator and keep the network honest.

From decentralization flows credible neutrality.

From credible neutrality comes global dominance.

https://twitter.com/SalomonCrypto/status/1559920665477595137

(18/24) Many node operators have extra computational power, some MUCH more.

@eigenlayer allows node operators to deploy their excess computing power for middleware layers while tapping into the trust base of @ethereum.

The process is called restaking.

(19/24) Node operators begin the process like normal, locking $ETH in exchange for the right to operate a node.

Then node operators opt in to being a service provider for @eigenlayer.

(20/24) Restaking means your $ETH is put at risk for additional slashing if the node operator misbehaves in providing these additional services.

This isn’t a liquid staking product; it is managed through the delegated withdrawal address.

(21/24) $ETH stakers can set the withdrawal address of their node to a 3rd party address. When they opt in to @eigenlayer, they set their withdrawal address to an EigenLayer smart contract. This contract can then deduct any $ETH before returning it to you (effectively slashing).
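
A rough sketch of that withdrawal flow, written in Python rather than as a real smart contract. Everything here is an assumption for illustration: `RestakingContract`, `record_slash`, and `withdraw` are hypothetical names, not the actual @eigenlayer contract interface, and the amounts are made up.

```python
class RestakingContract:
    """Hypothetical stand-in for the contract set as the withdrawal address."""

    def __init__(self) -> None:
        # operator id -> ETH owed as penalties for misbehavior
        self.pending_slash: dict[str, float] = {}

    def record_slash(self, operator: str, amount_eth: float) -> None:
        """A middleware service reports misbehavior by an opted-in operator."""
        owed = self.pending_slash.get(operator, 0.0)
        self.pending_slash[operator] = owed + amount_eth

    def withdraw(self, operator: str, withdrawn_eth: float) -> float:
        """Receive the staker's ETH, deduct penalties, return the remainder."""
        owed = self.pending_slash.pop(operator, 0.0)
        penalty = min(owed, withdrawn_eth)  # cannot take more than arrived
        return withdrawn_eth - penalty


contract = RestakingContract()
contract.record_slash("operator-a", 2.0)

returned_a = contract.withdraw("operator-a", 32.0)  # misbehaved on a service
returned_b = contract.withdraw("operator-b", 32.0)  # behaved
```

The design idea is that the stake never leaves Ethereum's own staking system. The contract simply sits between the exit and the staker, so it gets the first claim on whatever comes out.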

(22/24) In exchange for committing to operate the middleware and taking this extra risk, the node operator is compensated by the service provider.

Thus, the middleware can tap directly into $ETH’s trust base.

(23/24) Merge mining refers to the act of mining two or more cryptocurrencies at the same time, without sacrificing overall mining performance.

Restaking is Proof of Stake's version: merge staking.
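
To make the comparison concrete, here is a toy calculation of how much $ETH stands behind each service when every service needs its own trust network versus when one stake is restaked across all of them. The function name, the service names, and the 96 ETH figure are all hypothetical. It is also a simplification: it ignores that a slash by one service leaves less at stake for the others.

```python
def trust_per_service(
    stake_eth: float, services: list[str], restaked: bool
) -> dict[str, float]:
    """ETH at risk behind each service under the two models."""
    if not services:
        return {}
    if restaked:
        # Merge staking: the same stake is slashable by every service.
        return {name: stake_eth for name in services}
    # Separate networks: the capital has to be divided among them.
    share = stake_eth / len(services)
    return {name: share for name in services}


services = ["block-production", "oracle", "bridge"]  # illustrative names

fractured = trust_per_service(96.0, services, restaked=False)
merged = trust_per_service(96.0, services, restaked=True)
```

With separate networks each service gets a third of the capital. With restaking each one is backed by the full amount, which is the same trick merge mining plays with hash power.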

(24/24) Below is @ethereum’s roadmap. As much as it’s full of detail, it’s even more full of questions.

The more I ask, the more I realize I still have to learn.

And the more I realize that Ethereum is inevitable.

https://twitter.com/salomoncrypto/status/1569867843323428864

Like what you read? Help me spread the word by retweeting the thread (linked below).

Follow me for more explainers and as much alpha as I can possibly serve.

https://twitter.com/salomoncrypto/status/1572094840619532288
