Greetings from #strangeloop! Today is the #tlaconf, which I just got to. Gotta take a couple of breaks from it to socialize and help people rehearse and stuff, but I'll be doing my usual livetweeting of the talks I see.

---

"TLA+ for Nike Stores", #tlaconf #StrangeLoop. Yes, speaker is wearing nikes. Apparently they had to go through a LOT of legal to get permission to give this talk!

"Athletes" (sales people) use mobile devices to do all sorts of tasks. Need to handle cash: logistical problem

"TLA+ for Nike Stores", #tlaconf #StrangeLoop. Yes, speaker is wearing nikes. Apparently they had to go through a LOT of legal to get permission to give this talk!

"Athletes" (sales people) use mobile devices to do all sorts of tasks. Need to handle cash: logistical problem

Rules: physical cash drawer MUST not open twice, overly delayed requests must not open the drawer. Everything must be auditable. Drawers must not open if not connected to network.

Humans can route around these but network issues limit what they can do.

So: use TLA+!

Humans can route around these but network issues limit what they can do.

So: use TLA+!

Example liveness constraint:

drawer_resource.stats = Opening ~> status \in {Claimed, Open, Available}

Drawer can't be "opening" forever

[I simplified the spec they put in the slide]

"Retail is more digitally connected than ever."

#tlaconf #StrangeLoop

drawer_resource.stats = Opening ~> status \in {Claimed, Open, Available}

Drawer can't be "opening" forever

[I simplified the spec they put in the slide]

"Retail is more digitally connected than ever."

#tlaconf #StrangeLoop

"I was a math major, but dropped out to go make movies. But I kept using programming to edit VFX workflows. I don't have regrets, but stay in school."

Nike owns 100s of stores in a variety of concepts, tailored to local demand.

Serves "Athletes*": "u have a body, ur an athlete"

Nike owns 100s of stores in a variety of concepts, tailored to local demand.

Serves "Athletes*": "u have a body, ur an athlete"

Payment methods and legal complaint processes are wildly different in different places. 4 years ago teams relied on intuition for design. Doesn't work for distributed systems: hard problems take many iterations to get right.

Nike studied TLA+ in their "freeform learn days"

Nike studied TLA+ in their "freeform learn days"

[Tangent: I've seen a lot of places have freeform learn days. It fits into my theory of "moonshot experimentation": most ideas in freeform learning will fail, but if one thing works, it's all worth the investment.]

TLA+ was cool, but they couldn't see how to make it useful

TLA+ was cool, but they couldn't see how to make it useful

They realize if they used much simpler models they could more easily specify it. Was a great lesson in abstract thinking.

Made them rethink their understanding of programming.

#StrangeLoop #tlaconf

Made them rethink their understanding of programming.

#StrangeLoop #tlaconf

Started using it to model new microservices in payments and stuff. Used sequence diagrams to communicate ideas, then converted to tla+, found bugs in the sequence diagrams, fixed the bugs.

[Microservices make a LOT of sense for large non-tech companies, I realize]

[Microservices make a LOT of sense for large non-tech companies, I realize]

They discovered that a completely different retail team was ALSO using TLA+!

After 3 months of interweaving design and TLA+, first spec was complete and useful. "Problem: state space was HUGE." Took a week to check on powerful computers. Still SUPER useful tho!

After 3 months of interweaving design and TLA+, first spec was complete and useful. "Problem: state space was HUGE." Took a week to check on powerful computers. Still SUPER useful tho!

[He's showing a bunch of safety properties in the system, but he's moving too fast for me to transcribe them.]

Simple liveness property: `checkout.state = Cancelling ~> state = Cancelled`

[I like that, might adapt it for a workshop]

Simple liveness property: `checkout.state = Cancelling ~> state = Cancelled`

[I like that, might adapt it for a workshop]

CheckingOut ~> CheckedOut \/ Cancelled. Can't be just CHeckout because it involves a human making a decision, and the human can decide not to actually pay.

Design was useful, but still have to build it

#tlaconf #StrangeLoop

Design was useful, but still have to build it

#tlaconf #StrangeLoop

"When we deployed, we had five known bugs in the system." Intentional, because system wasn't fully implemented. Never saw them in practice before so decided to deploy anyway.

...Every bug eventually happened.

And they knew EXACTLY what was wrong, due to the spec.

...Every bug eventually happened.

And they knew EXACTLY what was wrong, due to the spec.

"It was a completely different experience." Instead of trying to figure out the bug, they knew what it was, where it was, and what to do to fix it. TLA+ gave them an incredibly strong foundation. According to one senior manager: "fifteen times better."

Great for meta concerns. Spec was more complex than intuition, but they could say for sure why simpler alternatives WOULDN'T work.

[This is something I found too.]

TLA+ is now optional part of RFC phase. Part of their scrum. "Test-Driven Architecture"

#StrangeLoop #tlaconf

[This is something I found too.]

TLA+ is now optional part of RFC phase. Part of their scrum. "Test-Driven Architecture"

#StrangeLoop #tlaconf

Composing specs! Client and server teams write separate specs. Service spec assumes adversarial client, client assumes correct server.

Also doing some spec refinement. Abstract spec defines how payments must operate, refinements are versions for different countries.

Also doing some spec refinement. Abstract spec defines how payments must operate, refinements are versions for different countries.

"Everybody was on the same page by just doing the formal exercise." Didn't even need to use TLC to get alignment

Problem they had: they made specs too abstract. Engineers ddidn't undertsand that, refinement helped.

Problem they had: they made specs too abstract. Engineers ddidn't undertsand that, refinement helped.

Lessons:

- You don't need many people to write TLA+, but you do need people to buy into it.

- Safety is more important than liveness, but liveness is still critical.

- "Self-healing" is trendy, it's absence of livelock.

- Don't need proofs, model-checking is fine

#tlaconf

- You don't need many people to write TLA+, but you do need people to buy into it.

- Safety is more important than liveness, but liveness is still critical.

- "Self-healing" is trendy, it's absence of livelock.

- Don't need proofs, model-checking is fine

#tlaconf

Teaching TLA+:

- Translate the syntax ASAP.

- Everything is boolean expression

- all immutable

- "No when, only order"

- Get into details of BFS QUICKLY. Grounds TLA+ into what people know. "Helps people believe it can work."

#tlaconf #StrangeLoop

- Translate the syntax ASAP.

- Everything is boolean expression

- all immutable

- "No when, only order"

- Get into details of BFS QUICKLY. Grounds TLA+ into what people know. "Helps people believe it can work."

#tlaconf #StrangeLoop

Pitching:

- "How does TLA+ test my code?" "Same way a whiteboard does" [I like this a lot]

- "It allows testing the unthinkable."

- "You don't always need FM" "You don't always need a parachute either."

- It's all about VALUE TO CUSTOMERS

- "How does TLA+ test my code?" "Same way a whiteboard does" [I like this a lot]

- "It allows testing the unthinkable."

- "You don't always need FM" "You don't always need a parachute either."

- It's all about VALUE TO CUSTOMERS

Enterprise needs:

- Better CLI tooling

- Configuration as code would help, like git tracking config

- VSCode plugin drives adoption

- Would be nice to have module system [don't we have one? Maybe he means package manager]

- Autoformatter would be great

- Better CLI tooling

- Configuration as code would help, like git tracking config

- VSCode plugin drives adoption

- Would be nice to have module system [don't we have one? Maybe he means package manager]

- Autoformatter would be great

TLA+ is great because it gives fast feedback, and things like race conditions are impossible to get fast feedback. Saves years of leaning. We <3 TLA+, and it works great with Agile.

#tlaconf #StrangeLoop

#tlaconf #StrangeLoop

Took a break to learn more about P (Thanks @ankushpd!) and ended up doing an impromptu TLA+ demo for a bypasser ahahaha okay back to #tlaconf

(Ankush will be speaking on Saturday: thestrangeloop.com/2022/formal-mo…)

(Ankush will be speaking on Saturday: thestrangeloop.com/2022/formal-mo…)

@ankushpd -----

"Obtaining statistical properties by simulating specs with TLC" by @vanlightly and @lemmster #tlaconf #StrangeLoop

TLC's a "worst-case" checker, but lots of important properties are statistical: latency, uptime, etc. Statistics is hard, let's use the TLC simulator instead

"Obtaining statistical properties by simulating specs with TLC" by @vanlightly and @lemmster #tlaconf #StrangeLoop

TLC's a "worst-case" checker, but lots of important properties are statistical: latency, uptime, etc. Statistics is hard, let's use the TLC simulator instead

Process: run TLC in "generation" mode, generate lots of traces, put metadata in the spec, export the data to CSV, analyze in R or Python.

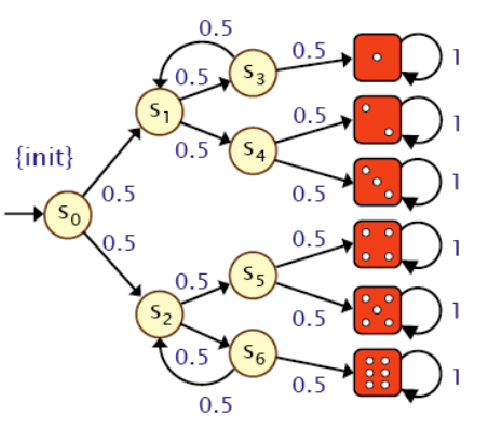

[Markus is going to do a demo of Knuth-Yao: simulating a fair d6 with a coin]

[Markus is going to do a demo of Knuth-Yao: simulating a fair d6 with a coin]

Knuth-Yao is this graph. It's provably fair. "There are probabilistic model checkers, like PRISM. PRISM is a fine tool, but let's see what we can do with TLA+"

[editor's note: PRISM is not a fine tool.]

[editor's note: PRISM is not a fine tool.]

Problems with doing this in TLC: no real numbers,, infinite state (due to the probabilities),, can't state "fair die" property in TLA+, since it's not a binary true/false property on each behavior.

"Instead of ℝ, I created the module DyadicRationals."

#tlaconf #StrangeLoop

"Instead of ℝ, I created the module DyadicRationals."

#tlaconf #StrangeLoop

This uses a new mode of TLC: "generator" mode, which is distinct from simulator mode. [missed how they're putting it in a CSV, think it's using the IOUtils module]

We can also add probabilities with the "RandomElement" module [which I need to document in learntla.com]

We can also add probabilities with the "RandomElement" module [which I need to document in learntla.com]

Oh I think they're collecting the information with the POSTCONDITION TLC configuration option.

Markus now demonstrating modeling a biased coin on Knuth-Yao, getting a new distribution in TLC, and then checking against the analytic bias calculated by PRISM

Markus now demonstrating modeling a biased coin on Knuth-Yao, getting a new distribution in TLC, and then checking against the analytic bias calculated by PRISM

[I'm seeing a new `-note` flag on TLC]

POSTCONDITION is a new TLC config option which evaluates at the end of the trace.

[SHIT there's also a new TLCGet param, "config", that lets you get the runtime config options, like if you're checking for deadlocks!]

POSTCONDITION is a new TLC config option which evaluates at the end of the trace.

[SHIT there's also a new TLCGet param, "config", that lets you get the runtime config options, like if you're checking for deadlocks!]

Now let's do a probabilistic distributed system: EWD998. Ring of nodes are either active or passive. Active nodes can go passive or send delayed messages to activate other nodes. Problem: find out when "stable": all nodes passive & no in-flight messages

#tlaconf #StrangeLoop

#tlaconf #StrangeLoop

EWD998 is a particular algorithm to detect stability. We're going to do probablistic modeling of two optimizations to see if they're safe optimizations. We can see, via modeling, that an "obvious optimization" changes an O(n) algorithm to O(n²)! Pretty dang cool

Jack now showing case studies, like the SWIM protocol and RabbitMQ Reactor Streams.

• • •

Missing some Tweet in this thread? You can try to

force a refresh