There's a lot of weird debate about whether Rust in the kernel is useful or not... in my experience, it's way more useful than I could've ever imagined!

I went from 1st render to a stable desktop that can run games, browsers, etc. in about two days of work on my driver (!!!)

All the concurrency bugs just vanish with Rust! Memory gets freed when it needs to be freed! Once you learn to make Rust work with you, I feel like it guides you into writing correct code, even beyond the language's safety promises. It's seriously magic! ✨

There is absolutely no way I wouldn't have run into race conditions, UAFs, memory leaks, and all kinds of badness if I'd been writing this in C.

In Rust? Just some logic bugs and some core memory management issues. Once those were fixed, the rest of the driver just worked!!

I tried kmscube, and it was happily rendering frames. Then I tried to start a KDE session, and it crashed after a while, but you know what didn't cause it? 3 processes trying to use the GPU at the same time, allocating and submitting commands in parallel. In parallel!!

Once things worked single-threaded, having all the locking and threading just magically work as intended, with no weird races or threads stepping on top of each other, is completely unheard of for a driver this complex, as far as I'm concerned.
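To make the parallel-submission point a bit more concrete, here's a minimal userspace sketch. It is not code from the driver: `Gpu`, `submit`, and `run_parallel` are hypothetical names, and std threads stand in for the GPU-using processes. The queue is only reachable through the `Mutex`, and sharing `Gpu` across threads requires `Arc`, so forgetting the lock is a compile error instead of a race you find at runtime.

```rust
use std::sync::{Arc, Mutex};
use std::thread;

/// Hypothetical stand-in for shared GPU state: a command queue behind a lock.
/// Not the driver's real type, just enough to show the pattern.
struct Gpu {
    queue: Mutex<Vec<(usize, u32)>>, // (process id, command id)
}

impl Gpu {
    fn submit(&self, pid: usize, cmd: u32) {
        // The Vec can only be touched through the lock guard, so
        // unsynchronized access doesn't compile.
        self.queue.lock().unwrap().push((pid, cmd));
    }
}

/// Spawns `procs` threads that each submit `cmds` commands in parallel,
/// then returns how many commands ended up in the queue.
fn run_parallel(procs: usize, cmds: u32) -> usize {
    let gpu = Arc::new(Gpu { queue: Mutex::new(Vec::new()) });
    let handles: Vec<_> = (0..procs)
        .map(|pid| {
            let gpu = Arc::clone(&gpu);
            thread::spawn(move || {
                for cmd in 0..cmds {
                    gpu.submit(pid, cmd);
                }
            })
        })
        .collect();
    for h in handles {
        h.join().unwrap();
    }
    // Bind to a local so the guard is dropped before `gpu`.
    let n = gpu.queue.lock().unwrap().len();
    n
}

fn main() {
    // Three "processes" hammering the GPU at once, like the KDE test.
    println!("{} commands queued", run_parallel(3, 1000));
}
```

No command gets lost or duplicated no matter how the threads interleave, because the type system won't let any of them reach the queue without holding the lock.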

And then all the memory management just... happens as if by magic. A process using the GPU exits, and all the memory and structs it was using get freed. Dozens of lines in my log of everything getting freed properly. I didn't write any of that glue, Rust did it all for me!

(Okay, I wrote the part that hooks up the DRM subsystem to Rust, including things like dropping a File struct when the file is closed, which is what triggers all that memory management to happen... but then Rust does the rest!)
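Here's a tiny userspace sketch of the ownership pattern behind that automatic cleanup, assuming a hypothetical `File` that owns its `GpuBuffer`s (neither is the driver's actual type, and the `FREED` counter exists only for the demo). Only the buffer needs a `Drop` impl; dropping the `File` drops everything it owns, with no hand-written teardown.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};

/// Counts how many buffers have been released (demo only).
static FREED: AtomicUsize = AtomicUsize::new(0);

/// Hypothetical GPU buffer object.
struct GpuBuffer {
    id: u32,
}

impl Drop for GpuBuffer {
    fn drop(&mut self) {
        // In a real driver, this is where backing memory and GPU
        // mappings would be torn down.
        println!("freeing buffer {}", self.id);
        FREED.fetch_add(1, Ordering::SeqCst);
    }
}

/// Hypothetical per-open-file state. It owns everything the process
/// allocated, so it needs no Drop impl of its own.
struct File {
    buffers: Vec<GpuBuffer>,
}

/// Opens a "file", allocates `n` buffers, closes it, and returns how
/// many buffers were freed as a result.
fn open_use_and_close(n: u32) -> usize {
    let before = FREED.load(Ordering::SeqCst);
    {
        let file = File { buffers: (0..n).map(|id| GpuBuffer { id }).collect() };
        println!("process holds {} buffers", file.buffers.len());
    } // `file` goes out of scope here, like the DRM file being closed
    FREED.load(Ordering::SeqCst) - before
}

fn main() {
    println!("{} buffers freed", open_use_and_close(3));
}
```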

I actually spent more time tracking down a single forgotten `*` in the DCP driver (written in C by Alyssa and Janne, already tested) that was causing heap overflows than I spent, in total, tracking down CPU-side safety issues (in unsafe code) in my brand new Rust driver.

Even things like handling ERESTARTSYS properly: Linux Rust encourages you to use Result<T> everywhere (the kernel variant where Err is an errno), and then you just stick a ? after wherever you're sleeping/waiting on a condition (like the compiler tells you) and it all just works!
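A minimal sketch of that pattern in plain userspace Rust. `Error`, `Result`, `wait_for_completion`, and `submit_and_wait` are hypothetical stand-ins loosely modeled on the kernel crate's `Result` (where `Err` carries an errno), not its real API; the one real detail is that `ERESTARTSYS` is 512 in the kernel's `include/linux/errno.h`.

```rust
/// Kernel-style error: a wrapper around an errno value.
/// Userspace stand-in, not the kernel crate's `Error` type.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
struct Error(i32);

impl Error {
    /// Linux's internal "restart this syscall" code.
    const ERESTARTSYS: Error = Error(512);
}

/// Result alias in the kernel style: `Err` is always an errno.
type Result<T = ()> = core::result::Result<T, Error>;

/// Hypothetical interruptible wait: fails with ERESTARTSYS if a signal
/// is pending, the way an interruptible kernel wait would.
fn wait_for_completion(signal_pending: bool) -> Result {
    if signal_pending {
        Err(Error::ERESTARTSYS)
    } else {
        Ok(())
    }
}

/// Hypothetical submit path. The `?` hands any error straight back to
/// the caller without the code ever inspecting which errno it is.
fn submit_and_wait(signal_pending: bool) -> Result<u32> {
    wait_for_completion(signal_pending)?;
    Ok(42) // hypothetical completed-job sequence number
}

fn main() {
    match submit_and_wait(true) {
        Ok(seq) => println!("job {seq} done"),
        Err(e) => println!("interrupted, errno {}", e.0),
    }
}
```

Because `wait_for_completion` returns a `Result`, ignoring its error is a warning and using its value without handling the error doesn't compile, which is what nudges you into adding the `?`.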

Seriously, there is a huuuuuuge difference between C and Rust here. The Rust hype is real! Fearless concurrency is real! And having to have a few unsafe {} blocks does not in any way negate Rust's advantages!

Some people seem to be misunderstanding the first tweet in this thread... I didn't write a driver in 2 days, I debugged a driver in 2 days! The driver was already written by then!

What I'm saying is that Rust stopped many classes of bugs from existing. Sorry if I wasn't clear!

There was also a bit of implementation work involved in those 2 days of work though - buffer sharing in particular wasn't properly implemented when I got first renders, so that was part of it, but the bulk of the driver was already done.

Apparently I have to clarify again?

I did write the driver myself (and the DRM kernel abstractions I needed). The 2 days were the debugging once the initial implementation was done. The Rust driver took 7 weeks, and I started reverse engineering this GPU 6 months ago...

This was the first stream where I started evaluating Rust to write the driver (I'd been eyeing the idea for a while, but this was the first real test).

Initially it was just some userspace experiments to prove the concept; then I moved on to the kernel.

I mostly worked on stream, and it's been 12 streams plus the debugging one, so I guess writing the driver took about 12 (long) days of work, plus a bit extra (spread out over 7 weeks because I stream twice per week and took one week off).

So just to be totally clear:

Reverse engineering and prototype driver: ~4 calendar months, ~20 (long) days of work

Rust driver development (including abstractions): ~7 calendar weeks, ~12 (long) days of work

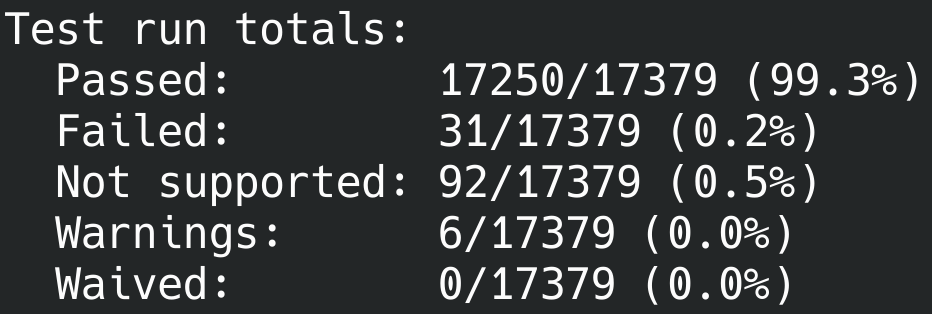

Debugging to get a stable desktop: 5 calendar days, 2 days of work.