🪩The @stateofaireport 2022 is live!🪩

In its 5th year, the #stateofai report condenses what you *need* to know in AI research, industry, safety, and politics. This open-access report is our contribution to the AI ecosystem.

Here's my director's cut 🧵:

stateof.ai

This year, research collectives such as @AiEleuther @BigscienceW @StabilityAI @open_fold have open-sourced their own versions of breakthrough language, text-to-image, and protein models first developed by large centralized labs, and at an unprecedented pace.

Here I show you the GPT model timeline:

Indeed, text-to-image models that have taken the AI Twitterverse by storm are the battleground for these research collectives.

Technology that was in the hands of the few is now in the hands of everyone with a laptop or smartphone.

The pace of progress is now so fast that the frontier has already moved on to generating videos from text, with multiple breakthroughs released within days of each other by @MetaAI and @GoogleAI. How long until open-source communities replicate them?

There is now a set of well-funded AI companies founded by alumni of the pivotal 2017 "Attention Is All You Need" paper, including @AdeptAILabs and @CohereAI.

Together with @AnthropicAI @inflectionAI, these companies have raised >$1B. More to come!

Meanwhile, academia is left behind, with the baton of open source large-scale AI research passing to decentralised research collectives as the latter gain compute infra (e.g. @StabilityAI) and talent.

In 2020, only industry and academia were at the table. This changed in 2021:

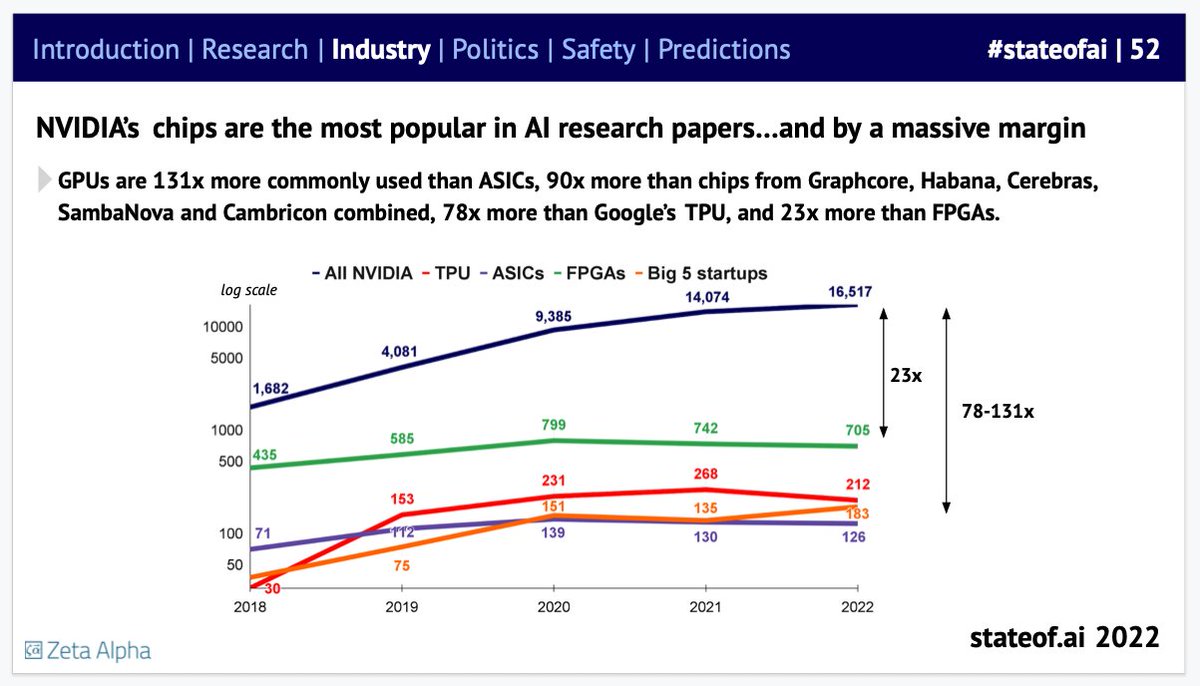

Meanwhile, @nvidia firmly retains its hold as the #1 AI compute provider despite investments by @Google, @Amazon, @Microsoft and a range of AI chip startups.

In open source AI papers, NVIDIA chips are used 78x more than TPUs and 90x more than 5 major AI chip startups combined:

While the largest supercomputers have tended to be owned and operated by nation states, private companies are making real progress in building ever-larger clusters.

We counted the NVIDIA A100s + H100s in various private and public clouds as well as national HPC. @MetaAI is #1:

I'm most excited about applying large models to domains beyond pure NLP tasks as we think of them.

For example, LLMs can learn the language of proteins and can thus be used for protein generation and structure prediction (@airstreet stealthco!).

Here too, model and data scale matter:

LLMs can also learn the language of Covid-19. @BioNTech_Group and @instadeepai built a model that flagged high-risk Covid-19 variants well before the WHO designated them as variants. The model ingests viral spike protein sequences and predicts their immune escape and fitness.

Elsewhere, transformers are being used to decode the vast, undocumented chemical space of medicinal compounds present in plants.

@envedabio interprets mass spectra of small molecules using these models to discover new drugs and predict their properties:

Overall, AI-first drug discovery companies now have 18 assets in active clinical trials, up from zero only two years ago. It's still early days, and we expect clinical readouts from next year onwards.

👏 @exscientiaAI @AbCelleraBio @RecursionPharma @Relay_Tx et al

Crucially, an earlier @OpenAI paper suggested that model size should increase faster than training data size as the compute budget grows.

However, a more recent @DeepMind study found that large models are significantly undertrained from a data perspective:
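
To make the "undertrained" finding concrete, here is a rough back-of-the-envelope sketch. It relies on two widely quoted approximations that this thread does not state:

- training compute C ≈ 6·N·D FLOPs for a dense transformer with N parameters trained on D tokens;
- a compute-optimal ratio of roughly 20 training tokens per parameter.

The Gopher (~280B parameters, ~300B tokens) and Chinchilla (~70B parameters, ~1.4T tokens) figures are approximate public numbers. The function names are illustrative only.

```python
import math

# Assumed approximations (not exact fitted values from either paper):
FLOPS_PER_PARAM_TOKEN = 6   # C ≈ 6 * N * D for a dense transformer
TOKENS_PER_PARAM = 20       # rule-of-thumb compute-optimal D / N ratio


def compute_optimal(compute_flops: float) -> tuple[float, float]:
    """Split a FLOP budget into (params, tokens) under D = 20 * N.

    From C = 6 * N * (20 * N) = 120 * N**2, it follows N = sqrt(C / 120).
    """
    n_params = math.sqrt(compute_flops / (FLOPS_PER_PARAM_TOKEN * TOKENS_PER_PARAM))
    n_tokens = TOKENS_PER_PARAM * n_params
    return n_params, n_tokens


def tokens_per_param(n_params: float, n_tokens: float) -> float:
    """Training tokens seen per model parameter."""
    return n_tokens / n_params


if __name__ == "__main__":
    # Approximate public figures for two DeepMind models.
    print(f"Gopher:     {tokens_per_param(280e9, 300e9):.1f} tokens/param")
    print(f"Chinchilla: {tokens_per_param(70e9, 1.4e12):.1f} tokens/param")

    # Re-spend Gopher's approximate training budget compute-optimally.
    budget = FLOPS_PER_PARAM_TOKEN * 280e9 * 300e9
    n, d = compute_optimal(budget)
    print(f"Same budget: {n / 1e9:.0f}B params on {d / 1e9:.0f}B tokens")
```

Under these assumptions, Gopher's budget is better spent on a model of roughly 65B parameters trained on about 1.3T tokens. That is close to what DeepMind actually trained as Chinchilla. The papers' fitted scaling exponents differ somewhat from this simple 20:1 rule.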

LLMs are also learning to use software tools. There's now evidence from @OpenAI's WebGPT and @AdeptAILabs' ACT-1 (@airstreet co!) that transformers can be trained to interact with search engines, web apps and other software tools. Moreover, they can improve from human demonstrations.

🤯 And to top off how AI is revolutionizing science, @DeepMind showed that an RL agent could learn to adjust the magnetic coils of a real-life nuclear fusion reactor to control its plasma.

Over in universities, a growing number of students and faculty want to form companies around their AI research.

Notable spinouts include @databricks, @SnorkelAI, @SambaNovaAI, and @exscientiaAI.

In the UK, only 4.3% of AI companies are spinouts. We can do better! cc @spinoutfyi

Our friends @dealroomco pulled together slides on deal activity in AI. While the sector wasn't spared from the public market sell-off and private investment pull-back, investments are expected to top 2020 levels this year.

Great companies are built in all cycles.

This year we created a safety-specific section to draw attention to the importance of this work and the growing activity around it.

Researchers are indeed voicing, and acting on, their concerns about the risks of powerful AI systems.

This awareness is even extending into government, with the UK's AI Strategy acknowledging the seriousness of the issue and committing to act on it.

Last year, we estimated that the total number of researchers dedicated to AI safety and alignment work at major AI labs was below 100.

Now, others have estimated the number to be 300 researchers.

Despite this growth, safety researchers are outnumbered by orders of magnitude.

Safety research has pulled in more money than in years past. Major benefactors include sympathetic tech billionaires @sbf_ftx and @moskov.

However, total safety funding (VC and philanthropy) in 2022 was a drop in the ocean compared with capabilities funding, and only reached the level of @DeepMind's 2018 opex.

True to the @stateofaireport tradition, we rated our 2021 predictions. We scored 4/8 this time.

✅ Transformers+RL in games, small transformers, @DeepMind maths, vertical-focused AGI

❌ @ASMLcompany mkt cap, @AnthropicAI major paper, AI chip consolidation, JAX usage growth

Here are our 9 predictions for next year! We cover:

- key model improvements
- large-scale compute partnerships
- major investments in AGI and alignment
- hardware industry consolidation
- AGI regulation
- creative AI licensing deals

What do you think? 🔮

A huge and heartfelt thank you to @osebbouh for your second-year tour de force on the report, and to @nitarshan for your insights and framings of AI policy, safety and research.

The @stateofaireport is a much improved product thanks to your work!
