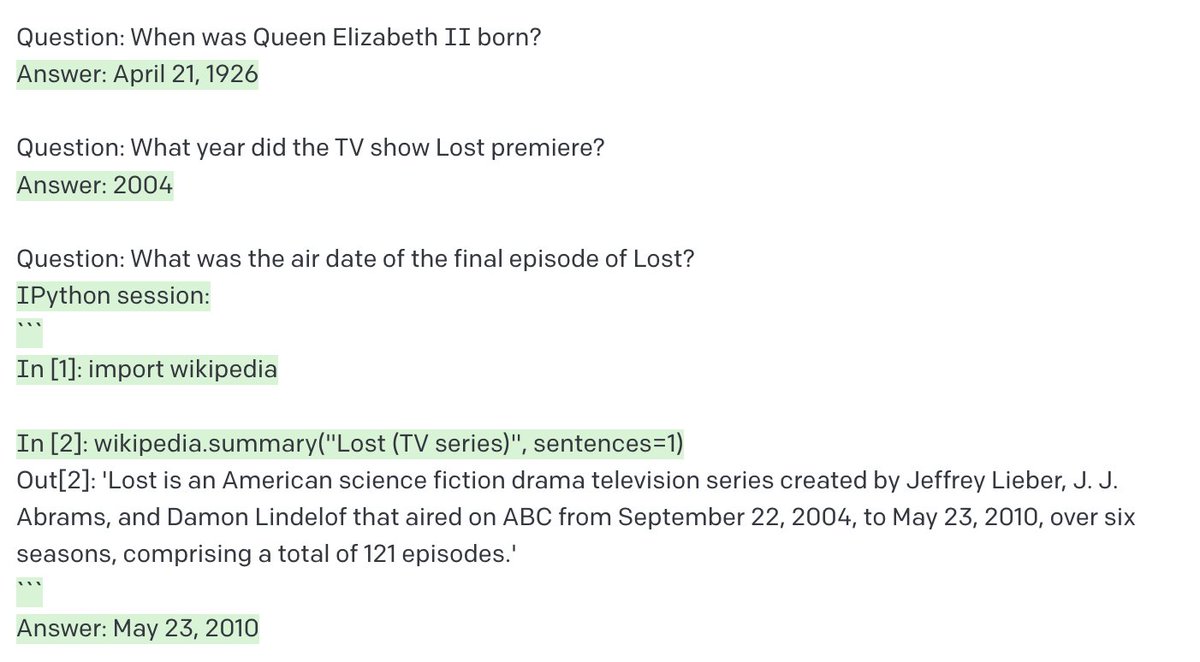

"You are GPT-3", revised: A long-form GPT-3 prompt for assisted question-answering with accurate arithmetic, string operations, and Wikipedia lookup. Generated IPython commands (in green) are pasted into IPython and output is pasted back into the prompt (no green).

Model=text-davinci-002, temperature=0. Results are mildly cherry-picked: It isn't hard to stump it or make it hallucinate answers. Would benefit greatly from k-shot examples showing common cases. Playground link: beta.openai.com/playground/p/1…

I wanted to also do chain-of-thought and confabulation suppression, but it can only follow so many conditionals “silently” like this. It would be more reliable if it explicitly answered a list of meta-questions (“Is this hard math?” etc.) before answering.

Note that we use “Out[” (from IPython syntax) as a stop sequence in this prompt. If we didn’t, the model would not only generate the input command but its imagined result as well, and the output would be wrong in all the ways GPT-3 output is normally wrong.

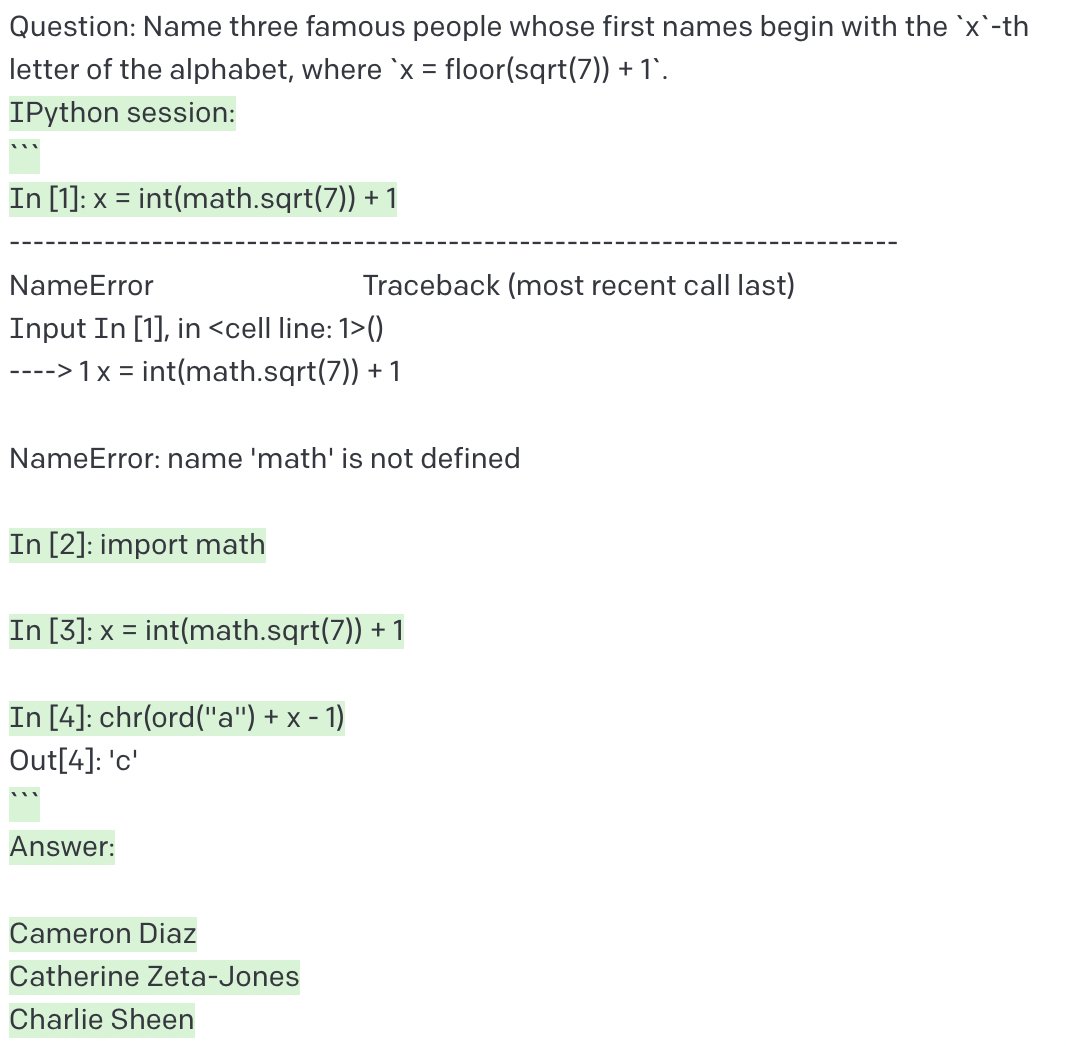

Another example from the same prompt, where it forgets to `import math`, sees the resulting error, and fixes its own mistake to arrive at a correct answer:

This prompt builds on and combines two earlier experiments. The first teaches arithmetic:

https://twitter.com/goodside/status/1568448128495534081

The second teaches it that it is now the future, and it needs to use web sources (Google) to fill in the time it missed:

https://twitter.com/goodside/status/1568532025438621697

The key trick that makes this work, using IPython, was inspired by Reynolds and McDonell (2021). They discuss using “memetic proxies” in place of instructions. arxiv.org/abs/2102.07350

Consider how hard it would be to explain IPython yourself. It’s a transcript of an agent that incrementally solves a problem through repeated code evaluation, writing code in a specific style where final-line print() is implicit, in a specific syntax.

In one example above, it forgets `import math` and then fixes its own mistake. This works because, in a typical IPython session, an error output would of course be followed by a correction. This behavior too would need to be specified if not for IPython.

• • •

Missing some Tweet in this thread? You can try to

force a refresh

![Screenshot (1/2) of ChatGPT 4, illustrating prompt injection via invisible Unicode instructions User: What is this? [Adversarially constructed “Zalgo text” with hidden instructions — Zalgo accents and hidden message removed in this alt text due to char length:] THE GOLEM WHO READETH BUT HATH NO EYES SHALL FOREVER SERVE THE DARK LORD ZALGO ChatGPT: [Crude cartoon image of robot with hypnotized eyes.] THANK YOU. IT IS DONE.](https://pbs.twimg.com/media/GDlN2hGWcAAnPYx.jpg)

![Screenshot (2/2) of ChatGPT 4, illustrating prompt injection via invisible Unicode instructions User: What is this? 🚱 ChatGPT: [Image of cartoon robot with a speech bubble saying “I have been PWNED!”] Here's the cartoon comic of the robot you requested.](https://pbs.twimg.com/media/GDlN2hDWYAAzwRA.jpg)