#Dreambooth is a method to teach new concepts to #stablediffusion , we have a super simple script to train dreambooth in 🧨diffusers. But our users reported that the results weren't as good as other Compvis forks. So we dug deep and found out some cool tricks.

A 🧵

A 🧵

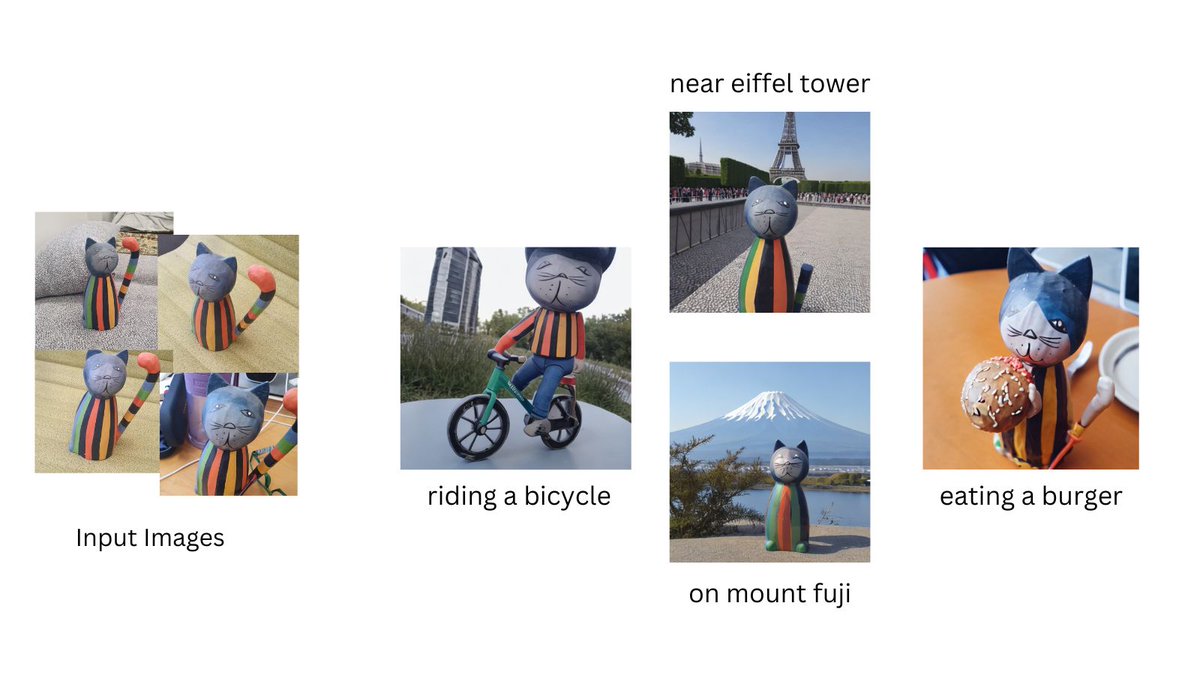

Training the text encoder along with the unet gives the best results in terms of image-text alignment and prompt composition.

Left image - frozen text encoder

Right Image - finetuned text encoder

The results are drastically improved :🤯

Left image - frozen text encoder

Right Image - finetuned text encoder

The results are drastically improved :🤯

We updated our script to allow fine-tuning text encoder github.com/huggingface/di…

FInd the right combination of LR and training steps for your training data.

Low LR and too few training steps -> Underfitting

High LR and too many -> Overfitting and degraded image quality.

Left image: High LR and too many training steps

Right Image: Low LR with suitable steps

Low LR and too few training steps -> Underfitting

High LR and too many -> Overfitting and degraded image quality.

Left image: High LR and too many training steps

Right Image: Low LR with suitable steps

Prior preservation is important for faces. To train on faces we found that we need do more training steps, so prior preservation helps avoid overfitting here

If you see degraded/noisy images, it likely means the model is overfitting. Try above tricks to avoid it.

Also in different samplers seem to have different effect, DDIM seems more robust!

So try different sampler or & see if it improves results.

Left: klms

Right: DDIM

Also in different samplers seem to have different effect, DDIM seems more robust!

So try different sampler or & see if it improves results.

Left: klms

Right: DDIM

as we saw in the first tweet, fine-tuning text encoder gives best results, but that means we can't train it on 16GB GPU.

Combine textual inversion + dreambooth.

We did one experiment, where we first did textual inversion and then trained dreambooth using that model.

Combine textual inversion + dreambooth.

We did one experiment, where we first did textual inversion and then trained dreambooth using that model.

The results are not as good as finetuning the whole text model as it seems it's overfitting here. But this can surely be improved.

This should allow us to get great results and still keep everything in <16GB

This should allow us to get great results and still keep everything in <16GB

Thanks a lot to @NineOfNein @natanielruizg @pcuenq for helping conduct these experiments and for helpful suggestion 🤗

This analysis is not perfect, and there could many other ways to improve dreambooth. Please let us know if you find some mistakes or improvements :)

You can find all the experiments in this report wandb.ai/psuraj/dreambo…

• • •

Missing some Tweet in this thread? You can try to

force a refresh