There’s nothing more strategic than defining where you want to get to and measuring your progress toward it.

Strategy informs what "great" means, daily habits get you started (and keep you going) and measurements tell you if you’re there or not.

A 🧵 on #SOC strategy / metrics:

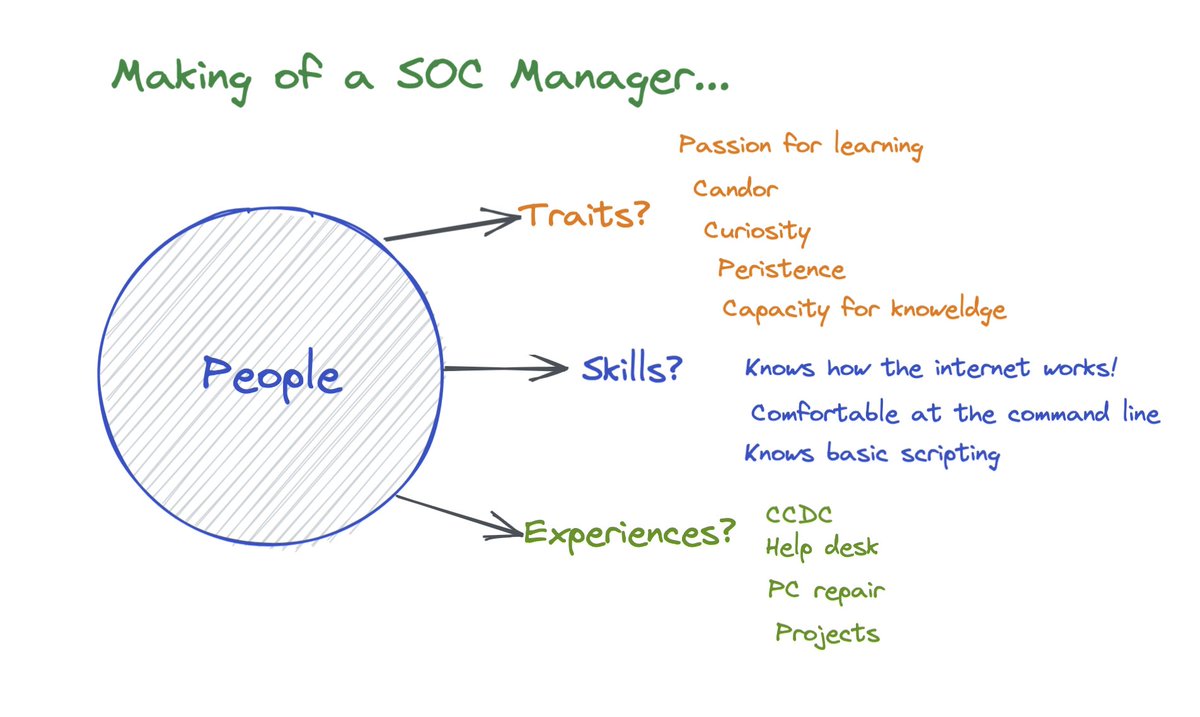

Before we hired our first #SOC analyst or triaged our first alert, we defined where we wanted to get to: what great looked like.

Here’s [some] of what we wrote:

We believe that a highly effective SOC:

1. leads with tech; doesn’t solve issues w/ sticky notes

2. automates repetitive tasks

3. responds and contains incidents before damage

4. has a firm handle on capacity v. loading

5. is able to answer, “are we getting better, or worse?”

6. doesn’t trade quality for efficiency; measures quality, has a self-correcting process

7. has a culture where “I don’t know” is always an acceptable answer; pathways exist to get help

8. is always learning (from success & failure)

9. has high retention, career pathways

With these goals in mind, we thought about the measurements that would tell us where we are on that journey.

Let’s start with “automates repetitive tasks”. What does that mean?

When an alert is sent to a SOC analyst for a decision, do they have to jump into a #SIEM or pivot to EDR to get more information?

For example, analysts may find themselves continuously asking questions like: Where has this user previously logged in from? At what times?

How much time is spent wrestling with security tech to get more context to make a decision?

I think about “automates repetitive tasks” as decision support: using tech/software automation so our #SOC can easily answer the right questions about a security event.
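
To make decision support concrete, here is a minimal Python sketch. It answers the two repetitive questions above (where has this user logged in from, and at what times?) directly from auth log records, so the analyst never has to pivot into a #SIEM. The `AuthEvent` schema and the `login_context` helper are hypothetical. In practice the records would come from whatever your SIEM or identity provider exposes.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime


@dataclass
class AuthEvent:
    """One successful login (hypothetical schema; map your auth log fields onto it)."""
    user: str
    country: str
    timestamp: datetime


def login_context(user: str, history: list[AuthEvent], alert_country: str) -> dict:
    """Pre-compute the context an analyst would otherwise look up by hand."""
    events = [e for e in history if e.user == user]
    countries = Counter(e.country for e in events)
    return {
        "prior_logins": len(events),
        "countries": dict(countries),
        "hours_seen": sorted({e.timestamp.hour for e in events}),  # hour of day as logged
        "country_is_new": alert_country not in countries,  # first login from here?
    }


history = [
    AuthEvent("alice", "US", datetime(2024, 5, 1, 9, 15)),
    AuthEvent("alice", "US", datetime(2024, 5, 2, 10, 2)),
    AuthEvent("bob", "DE", datetime(2024, 5, 2, 22, 40)),
]
context = login_context("alice", history, alert_country="BR")
```

Attaching a summary like this to the alert before an analyst opens it removes one manual lookup per alert.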

How do you get there? Think about the classes of work you send to your SOC. Try to group them.

Here’s an example:

- Unusual API calls/sequence

- Process/file execution event

- SaaS app event

- Unusual API auth event

- Outbound network conn

- Inbound network conn

- Etc

The point is to think about the various classes of work that show up and the steps required to get to an informed decision. Not a fast decision, but an informed decision using contextual data.
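
Grouping can start as a plain rule table. The sketch below maps raw alerts onto the example classes above. The alert fields (`source`, `kind`, `direction`) are hypothetical, and rule order matters because the first match wins. Anything that matches no rule lands in “Other”, so it stays visible instead of silently dropping out of the metrics.

```python
from collections import Counter

# (predicate on a raw alert dict, class of work); field names are hypothetical.
CLASS_RULES = [
    (lambda a: a.get("source") == "edr", "Process/file execution event"),
    (lambda a: a.get("source") == "saas", "SaaS app event"),
    (lambda a: a.get("source") == "cloud" and a.get("kind") == "auth", "Unusual API auth event"),
    (lambda a: a.get("source") == "cloud", "Unusual API calls/sequence"),
    (lambda a: a.get("source") == "network" and a.get("direction") == "outbound", "Outbound network conn"),
    (lambda a: a.get("source") == "network" and a.get("direction") == "inbound", "Inbound network conn"),
]


def classify(alert: dict) -> str:
    """Return the first matching class of work, or 'Other'."""
    for predicate, work_class in CLASS_RULES:
        if predicate(alert):
            return work_class
    return "Other"


alerts = [
    {"source": "edr"},
    {"source": "cloud", "kind": "auth"},
    {"source": "cloud", "kind": "api_call"},
    {"source": "network", "direction": "outbound"},
    {"source": "email"},
]
volume = Counter(classify(a) for a in alerts)  # alert volume per class of work
```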

Now think about the work time for each of these classes of work.

Work time is the difference (typically measured in minutes) between when the work “starts” and when it’s “done”.
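
The definition translates directly into code. This minimal sketch assumes each alert records a “started” timestamp (e.g. when an analyst picked it up) and a “done” timestamp (when a decision was recorded). Which events mark the start and the finish in your tooling is your call.

```python
from datetime import datetime


def work_time_minutes(started: datetime, done: datetime) -> float:
    """Work time: minutes between when work on an alert started and when it was done."""
    if done < started:
        raise ValueError("'done' precedes 'started'; check the timestamps")
    return (done - started).total_seconds() / 60


# Picked up at 09:00:00, decision recorded at 09:27:30.
example = work_time_minutes(datetime(2024, 5, 1, 9, 0, 0), datetime(2024, 5, 1, 9, 27, 30))
```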

Some classes will likely fare better than others. E.g., EDR events may be triaged 50% faster than SaaS app logins because we spend 20 min per alert reviewing auth logs.
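
Comparing classes then comes down to a group-by. This sketch computes the median work time per class of work and lists the slowest class first. The median is my choice, not something the thread prescribes: a handful of long investigations can drag a mean around, and the median is less sensitive to them. The numbers below are made up for illustration.

```python
from collections import defaultdict
from statistics import median


def median_work_time_by_class(records: list[tuple[str, float]]) -> dict[str, float]:
    """records: (class of work, work time in minutes). Returns medians, slowest first."""
    by_class: dict[str, list[float]] = defaultdict(list)
    for work_class, minutes in records:
        by_class[work_class].append(minutes)
    medians = {c: median(times) for c, times in by_class.items()}
    return dict(sorted(medians.items(), key=lambda kv: kv[1], reverse=True))


# Illustrative data only.
records = [
    ("SaaS app event", 24), ("SaaS app event", 20), ("SaaS app event", 28),
    ("Process/file execution event", 12), ("Process/file execution event", 10),
]
slowest_first = median_work_time_by_class(records)
```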

You can find repetitive tasks by studying the steps taken by your SOC and then automate them.

What metrics tell you whether it’s working? Work times for the various classes should go down. Analysts get to spend more time making the right decisions and less time wrestling with security tech.
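
One way to check that work times are going down is to compare two periods, e.g. the weeks before and after automating a step. The sketch below works under that assumption and reports the percent change in median work time per class, where a negative value means faster. The input shape is hypothetical and the numbers are illustrative.

```python
from statistics import median


def work_time_change(before: dict[str, list[float]],
                     after: dict[str, list[float]]) -> dict[str, float]:
    """Percent change in median work time (minutes) per class between two periods."""
    change = {}
    for work_class in sorted(before.keys() & after.keys()):  # classes seen in both periods
        old, new = median(before[work_class]), median(after[work_class])
        if old == 0:
            continue  # skip a degenerate baseline rather than divide by zero
        change[work_class] = round(100 * (new - old) / old, 1)
    return change


# Illustrative numbers only.
before = {"SaaS app event": [20, 24, 28], "Outbound network conn": [10, 12]}
after = {"SaaS app event": [6, 8, 10], "Outbound network conn": [11, 13]}
trend = work_time_change(before, after)
```

A class whose number turns positive, like the outbound one here, is worth a look too.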

In our SOC, it’s not just about automating “decision support”; several classes of work are automated end-to-end. At this point, we understand the work well enough that automation (aka “bots”) handles entire classes of work.

Bots do more than fetch information for analysts: they close alerts, perform investigations, create incidents, etc.
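
End-to-end automation can be pictured as a dispatcher. Each class of work may have a bot, and that bot either reaches a final outcome or hands the alert to a human. This is a toy sketch of the idea, not a description of how the bots in this SOC are built. `benign_edr_bot`, its allowlist, and the outcome strings are all hypothetical.

```python
from typing import Callable, Optional

# A bot takes an alert and returns a final outcome, or None if it can't decide.
Bot = Callable[[dict], Optional[str]]


def benign_edr_bot(alert: dict) -> Optional[str]:
    """Toy bot: close EDR alerts for processes on a (hypothetical) allowlist."""
    if alert.get("process") in {"backup_agent.exe"}:
        return "closed: allowlisted process"
    return None  # can't decide; hand off to a human


BOTS: dict[str, Bot] = {"Process/file execution event": benign_edr_bot}


def dispatch(alert: dict, work_class: str) -> str:
    """Let the class's bot handle the alert end-to-end if it can; otherwise queue it."""
    bot = BOTS.get(work_class)
    if bot is not None:
        outcome = bot(alert)
        if outcome is not None:
            return outcome
    return "queued for analyst"
```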

Here’s a blog with more details on this topic: expel.com/blog/spotting-…

Next, there’s the more obvious “responds & contains incidents before damage”. How quickly does your SOC need to respond? Is it 1 min, is it 10? Set a target.

I think about measuring response as the time between when the first alert (lead) was created and when the incident was contained.

E.g., the host was contained, the account was disabled, the EC2 instance was shut down, the long-term access key was reset, etc.

There’s nuance here: the first lead may have fired minutes, hours, or even days before the incident was detected. Take that into consideration as well.
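
Putting the response definition and the nuance together, measure from the *earliest* lead tied to the incident, not from the alert that finally got noticed, and compare the result against the target you set. This is a minimal sketch. The inputs and the 30-minute target in the example are illustrative assumptions.

```python
from datetime import datetime, timedelta


def response_metrics(lead_times: list[datetime], detected: datetime,
                     contained: datetime, target: timedelta) -> dict:
    """Response time runs from the first lead (alert) to containment."""
    first_lead = min(lead_times)  # the earliest lead may predate detection
    response = contained - first_lead
    return {
        "response_time": response,
        "detection_lag": detected - first_lead,  # how long the first lead sat unnoticed
        "met_target": response <= target,
    }


incident = response_metrics(
    lead_times=[datetime(2024, 5, 1, 9, 40), datetime(2024, 5, 1, 9, 0)],
    detected=datetime(2024, 5, 1, 9, 45),
    contained=datetime(2024, 5, 1, 9, 55),  # e.g. host contained
    target=timedelta(minutes=30),
)
```

Measured from the 09:40 alert alone, this incident would look like a 15-minute response. Measured from the first lead, it takes 55 minutes and misses the target.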

There are a couple of things to consider here as well:

In your SOC you may have different alert severities (critical, high, medium, low, etc.). You likely use them as buffers to reduce variance and to tell analysts which alerts to handle first.
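
Using severity as a buffer usually produces a work order like the one below: highest severity first, then oldest first within each severity. The sketch assumes each alert carries `id`, `severity`, and `created` fields (hypothetical names) and that the four severities rank in the order listed.

```python
from datetime import datetime

# Lower rank is handled first (assumed ranking of the severities named above).
SEVERITY_RANK = {"critical": 0, "high": 1, "medium": 2, "low": 3}


def work_order(alerts: list[dict]) -> list[str]:
    """Alert IDs in pick-up order: by severity, then by age (oldest first)."""
    ordered = sorted(alerts, key=lambda a: (SEVERITY_RANK[a["severity"]], a["created"]))
    return [a["id"] for a in ordered]


queue = [
    {"id": "A1", "severity": "low", "created": datetime(2024, 5, 1, 8, 0)},
    {"id": "A2", "severity": "high", "created": datetime(2024, 5, 1, 9, 0)},
    {"id": "A3", "severity": "high", "created": datetime(2024, 5, 1, 8, 30)},
]
order = work_order(queue)
```

A1 is the oldest alert in the queue, yet it is still picked up last.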

When you’re looking at your response times, inspect which alert severities most often detect security incidents. If most of your incidents start from “low” severity alerts that likely get looked at *after* the higher severities, what does that mean? Do you need to improve that?
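
To see which severities most often surface incidents, count incidents by the severity of the first alert that led to them. This is a sketch: `first_alert_severity` is a hypothetical field, and the sample data is made up.

```python
from collections import Counter


def incident_share_by_severity(incidents: list[dict]) -> dict[str, float]:
    """Percent of incidents whose first alert had each severity, most common first."""
    counts = Counter(i["first_alert_severity"] for i in incidents)
    total = sum(counts.values())
    return {sev: round(100 * n / total, 1) for sev, n in counts.most_common()}


# Made-up sample: three incidents started from "low" alerts, one from "high".
incidents = [{"first_alert_severity": "low"}] * 3 + [{"first_alert_severity": "high"}]
share = incident_share_by_severity(incidents)
```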

Whether you’re building a #SOC, scaling a customer success team, or a D&R Engineering team, define where you want to get to (what are your goals? what does success look like?) and think about the measurements that will tell you whether you’re there or not.

If you’re interested in reading more about #SOC metrics, here are a few blogs on the topic:

expel.com/blog/performan…

expel.com/blog/performan…

expel.com/blog/performan…

expel.com/blog/performan…
