New work! :D

We show evidence that DALL-E 2, in stark contrast to humans, does not respect the constraint that each word has a single role in its visual interpretation.

Work with @ravfogel and @yoav.

BlackboxNLP @ #emnlp2022

Below, "a person is hearing a bat"

We show evidence that DALL-E 2, in stark contrast to humans, does not respect the constraint that each word has a single role in its visual interpretation.

Work with @ravfogel and @yoav.

BlackboxNLP @ #emnlp2022

Below, "a person is hearing a bat"

We detail three types of behaviors that are inconsistent with the single role per word constraint

(1) A noun with multiple senses in an ambiguous prompt may cause DALL-E 2 to generate the same noun twice, but with different senses. In “a bat is flying over a baseball stadium”, “bat” is visualized as both: a flying mammal and a wooden stick.

(2) A noun may be interpreted as a modifier of two different entities. For “a fish and a gold ingot”, DALL-E 2 will consistently include a goldfish, despite “gold” being a modifier of “ingot”, not “fish”

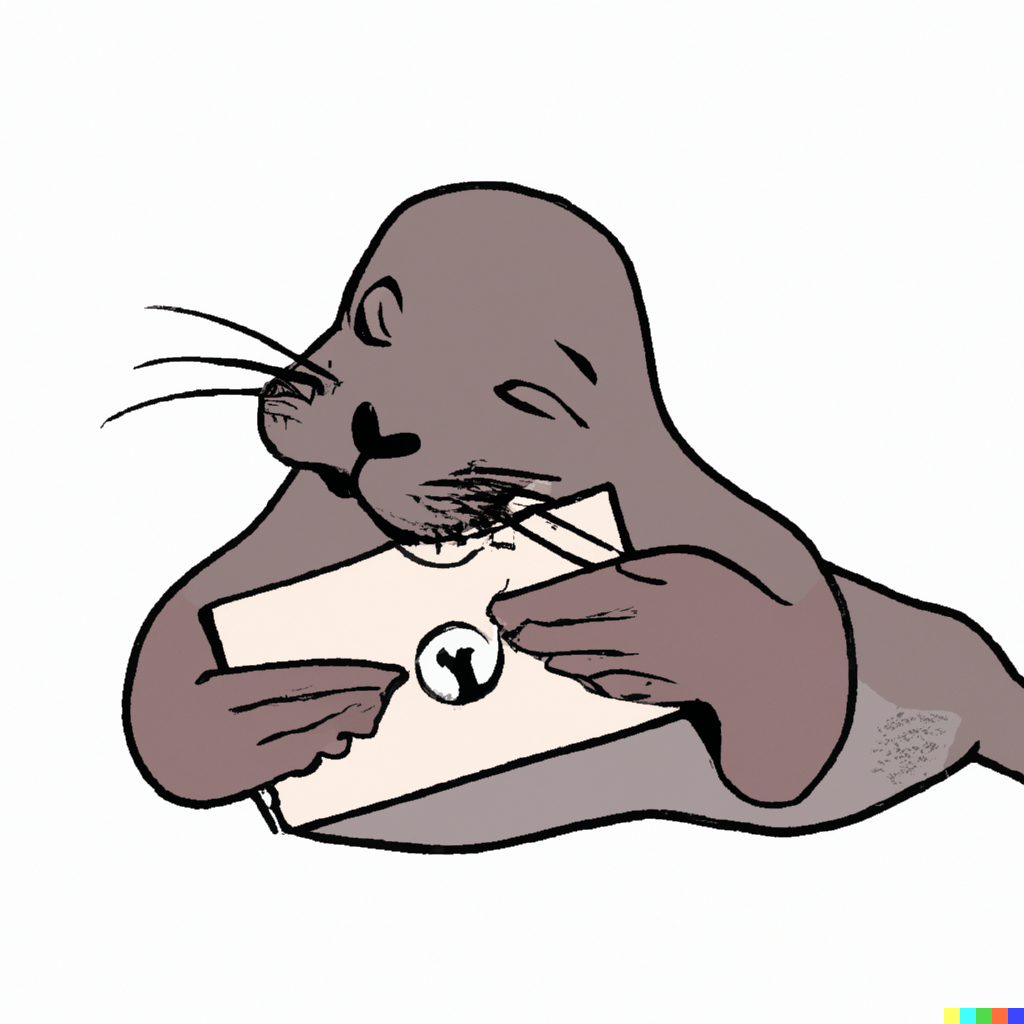

(3) A noun is interpreted simultaneously as an entity and as a modifier of a different entity. For “a seal is opening a letter”, DALL-E 2 tends to attribute the “sealed” property to the letter

Bonus finding: some nouns can be replaced with their description, and still lead to the first behavior. “crane” is not explicitly mentioned in “a tall, long-legged, long-necked bird and a construction site”, but two types of crane appear for its interpretation

If you’re interested to read more about this cool phenomenon, give our paper a read!

Paper: arxiv.org/pdf/2210.10606…

Paper: arxiv.org/pdf/2210.10606…

• • •

Missing some Tweet in this thread? You can try to

force a refresh