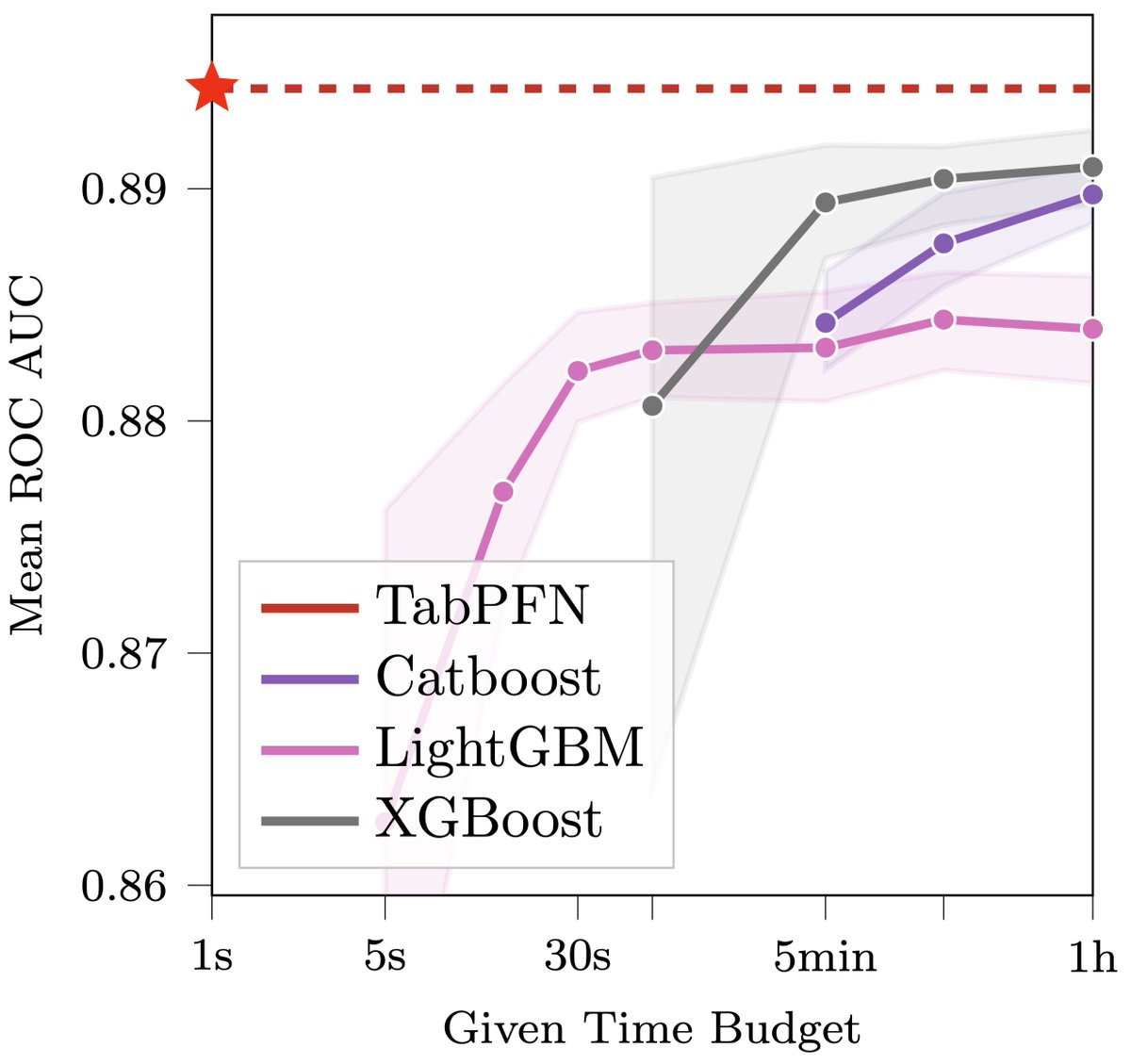

This may revolutionize data science: we introduce TabPFN, a new tabular data classification method that takes 1 second & yields SOTA performance (better than hyperparameter-optimized gradient boosting in 1h). Current limits: up to 1k data points, 100 features, 10 classes. 🧵1/6

TabPFN is radically different from previous ML methods. It is meta-learned to approximate Bayesian inference with a prior based on principles of causality and simplicity. Here's a qualitative comparison to some sklearn classifiers, showing very smooth uncertainty estimates. 2/6

TabPFN happens to be a transformer, but this is not your usual trees vs nets battle. Given a new data set, there is no costly gradient-based training. Rather, it’s a single forward pass of a fixed network: you feed in (X_train, y_train, X_test); the network outputs p(y_test). 3/6
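To make the call pattern concrete, here is a minimal sketch of "feed in (X_train, y_train, X_test), get p(y_test) back" with no per-dataset training. It is not TabPFN: the function name `forward_pass_stand_in` and its `bandwidth` parameter are hypothetical, and a simple kernel-weighted vote stands in for the fixed transformer. Only the shape of the interface is the point.

```python
import math


def forward_pass_stand_in(X_train, y_train, X_test, bandwidth=1.0):
    """Toy stand-in for a fixed, pre-trained network (NOT TabPFN itself).

    Takes the labelled training set and the test inputs in one call and
    returns, for each test row, a dict mapping class label -> probability.
    Nothing is fitted to the new dataset; the "model" is a fixed rule.
    """
    classes = sorted(set(y_train))
    predictions = []
    for x in X_test:
        # Weight every training point by a Gaussian kernel on squared distance.
        scores = {c: 0.0 for c in classes}
        for xi, yi in zip(X_train, y_train):
            sq_dist = sum((a - b) ** 2 for a, b in zip(x, xi))
            scores[yi] += math.exp(-sq_dist / (2 * bandwidth ** 2))
        total = sum(scores.values())
        if total == 0.0:
            # All kernel weights underflowed: fall back to a uniform guess.
            predictions.append({c: 1.0 / len(classes) for c in classes})
        else:
            predictions.append({c: s / total for c, s in scores.items()})
    return predictions


# Two well-separated clusters, one test point near each.
X_train = [[0.0, 0.0], [0.1, 0.2], [1.0, 1.0], [0.9, 1.1]]
y_train = [0, 0, 1, 1]
X_test = [[0.05, 0.1], [1.0, 0.9]]
probs = forward_pass_stand_in(X_train, y_train, X_test)
```

Each entry of `probs` is a full class distribution for one test row, so uncertainty comes out of the same single call as the prediction.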

TabPFN is fully learned: We only specified the task (strong predictions in a single forward pass, for millions of synthetic datasets) but not *how* it should be solved. Still, TabPFN outperforms decades' worth of manually created algorithms. A big step up in learning to learn. 4/6

Imagine the possibilities! Portable real-time ML with a single forward pass of a medium-sized neural net (25M parameters). Go, #GreenAutoML! Please share widely; there are endless possibilities for improvements & extensions and we'd love to tackle them with your help. 5/6

Please see our blog post automl.org/tabpfn-a-trans… for details & code. Also, we just got an oral at the #NeurIPS table representation learning workshop …ble-representation-learning.github.io 🎉Joint work with my outstanding students @noahholl, @SamuelMullr and @KEggensperger. 6/6

If you'd like to play with TabPFN yourself, here is a direct link to the Colab with a scikit-learn-like interface: colab.research.google.com/drive/194mCs6S…
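For readers wondering what a scikit-learn-like interface means for a model that never trains on your data, here is a rough sketch. Everything in it is an assumption for illustration: `SklearnStyleWrapper`, `forward_fn` and `uniform_forward` are hypothetical names, not the classes in the Colab, and the limits are taken from the first tweet, reading "1k" as 1000 and applying it to the training set.

```python
class SklearnStyleWrapper:
    """Hypothetical fit/predict_proba wrapper around a single-forward-pass model.

    Not the TabPFN package's class. `forward_fn` is any callable taking
    (X_train, y_train, X_test) and returning per-row class probabilities.
    """

    # Limits quoted in the thread; applying them to the training set
    # is an assumption of this sketch.
    MAX_SAMPLES = 1000
    MAX_FEATURES = 100
    MAX_CLASSES = 10

    def __init__(self, forward_fn):
        self.forward_fn = forward_fn

    def fit(self, X, y):
        # "Fitting" only validates and stores the data; nothing is trained.
        if len(X) == 0 or len(X) != len(y):
            raise ValueError("X and y must be non-empty and equally long")
        if len(X) > self.MAX_SAMPLES:
            raise ValueError(f"more than {self.MAX_SAMPLES} data points")
        if len(X[0]) > self.MAX_FEATURES:
            raise ValueError(f"more than {self.MAX_FEATURES} features")
        classes = sorted(set(y))
        if len(classes) > self.MAX_CLASSES:
            raise ValueError(f"more than {self.MAX_CLASSES} classes")
        self.X_train_, self.y_train_, self.classes_ = X, y, classes
        return self

    def predict_proba(self, X_test):
        # All the work happens here, in one call to the fixed model.
        return self.forward_fn(self.X_train_, self.y_train_, X_test)


def uniform_forward(X_train, y_train, X_test):
    """Placeholder model: the same uniform distribution for every test row."""
    classes = sorted(set(y_train))
    return [{c: 1.0 / len(classes) for c in classes} for _ in X_test]


clf = SklearnStyleWrapper(uniform_forward).fit([[0.0], [1.0]], ["a", "b"])
proba = clf.predict_proba([[0.5]])
```

The design point is that `fit` is nearly free and `predict_proba` carries the cost, the reverse of most scikit-learn estimators.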
