Let's talk "misNAEPery": Common misuses of #NAEP results. Here are 3 types of misNAEPery to look out for on Monday's "NAEP Day":

1) correlation-is-causation (@EduGlaze's original definition)

2) psychometric misNAEPery

3) one-true-outcome misNAEPery.

🧵 1/

For each of these misNAEPeries, I try to distinguish between "high crimes" and "misdemeanors."

I used to get a little too gleeful in pointing out misNAEPery.

I now try to ask, "does it really matter?" or "what's the end goal?" before calling someone out for something "wrong." 2/

Type 1 misNAEPery: My leadership or policy caused these NAEP results. @EduGlaze coined misNAEPery in 2013 to refer to this common, predictable tendency among leaders, reporters, and commentators. ggwash.org/view/31061/bad… 3/

So is NAEP useless because we can't conclude anything about remote learning or charter schools or political leadership? No. My stance is closer to @MichaelPetrilli's here. NAEP is essential. fordhaminstitute.org/national/comme… 4/

For Type 1 misNAEPery, what's a "high crime"? Taking credit for high NAEP scores (with known correlations with wealth in our @seda_data).

But speculating on the basis of positive *trends* and declining inequality reduces this to a "misdemeanor," to me. 5/ edopportunity.org/explorer/#/cha…

To avoid Type 1 misNAEPery, wait for researchers to estimate policy effects after eliminating alternative explanations for results, like @ProfTDee and @BrianJacobProf did with NAEP, and @CEDR_US and coauthors did with NWEA data. 6/

onlinelibrary.wiley.com/doi/abs/10.100…

caldercenter.org/sites/default/…

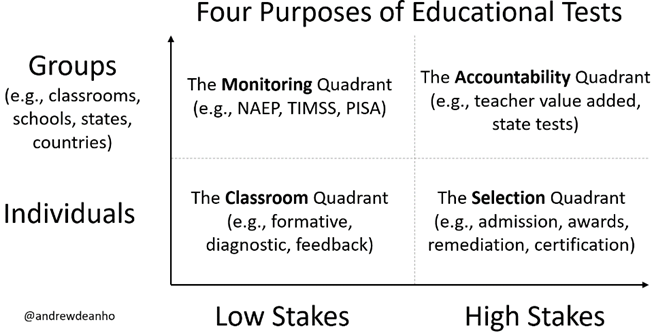

Type 2 misNAEPery is psychometric misNAEPery: misuse of different NAEP scores. This includes comparing proficiency %s, collapsing results across subjects and grades, neglecting statistical significance, and translating effect sizes to other metrics like "months of learning." 7/

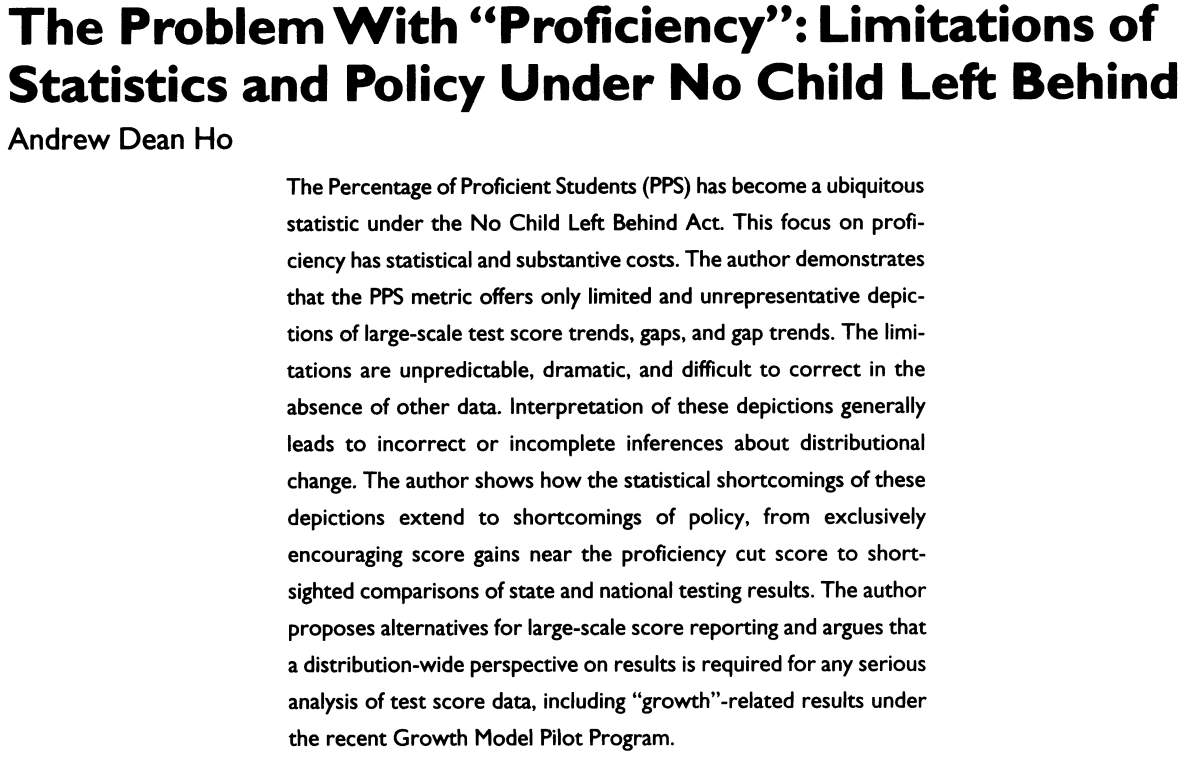

Monday, comparisons of proficiency %s will be everywhere. Are they wrong? Unfortunately, often, yes. 8/ journals.sagepub.com/doi/10.3102/00…

A rule of thumb for psychometric misNAEPery is, "beware the differences in differences." Any one score metric is probably fine. A difference in metrics is a "misdemeanor." And a difference in differences becomes a "high crime," if you don't cross-check with means, first. 9/

Unfortunately, differences-in-differences are often important.

Comparing pandemic trends across states? Diff-in-diff.

Comparing subgroup score inequality over time? Diff-in-diff.

These require cross-checking with means or reporting means outright. 10/ gse.harvard.edu/news/uk/15/12/…
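
To illustrate why a difference in differences of proficiency %s can mislead, here is a minimal sketch. Everything in it is a made-up assumption for illustration: normally distributed scores, a shared standard deviation of 35, a cut score of 250, and two hypothetical groups whose means both fall by the same 5 points. None of these numbers are actual NAEP results.

```python
from statistics import NormalDist

# All values below are hypothetical, chosen only for illustration.
SD = 35.0     # assumed standard deviation, same for both groups
CUT = 250.0   # assumed "Proficient" cut score
DROP = 5.0    # identical mean decline applied to both groups


def pct_proficient(mean, sd=SD, cut=CUT):
    """Percent of a normal score distribution at or above the cut score."""
    return 100.0 * (1.0 - NormalDist(mean, sd).cdf(cut))


def summarize(mean_before, drop=DROP):
    """Change in mean and in % proficient after the mean falls by `drop`."""
    mean_after = mean_before - drop
    return {
        "mean_change": mean_after - mean_before,
        "pct_change": pct_proficient(mean_after) - pct_proficient(mean_before),
    }


group_a = summarize(250.0)  # hypothetical group centered on the cut score
group_b = summarize(215.0)  # hypothetical group centered one SD below the cut

# Difference in differences: group A's change minus group B's change.
did_means = group_a["mean_change"] - group_b["mean_change"]
did_pct = group_a["pct_change"] - group_b["pct_change"]

print(f"Diff-in-diff, means: {did_means:+.1f} points")
print(f"Diff-in-diff, % proficient: {did_pct:+.1f} percentage points")
```

Under these assumptions the two groups decline by exactly the same amount in means, yet the group centered on the cut score appears to lose more in proficiency %, simply because more of its students sit near the cut. That is the kind of artifact that cross-checking with means is meant to catch.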

Problems with proficiency are why I consider "months of learning" metrics to be "misdemeanors," and the lesser of two evils.

e.g., my "Rule of 27" is a transparent calculation that doesn't suffer from the bias that proficiency rate comparisons have. 11/

https://twitter.com/AndrewDeanHo/status/1565345533866086402?s=20&t=9N1Yf9Sqwo_cWoz1v61wYA

Similarly, I consider aggregating sensibly across subjects and grades to be a "misdemeanor," as we do in our "pooling model" for @seda_data, a simple index of academic educational opportunity. I hope you'll let us off with a warning. 12/

edopportunity.org/explorer/#/map…

Type 3 misNAEPery is "one-true-outcome" misNAEPery, forgetting that NAEP scores and the Reading and Mathematics skills they measure are among many outcomes we desire for our children.

There is lots of middle ground between "NAEP is everything" and "NAEP is nothing." 13/

To me, it's a "high crime" to decontextualize NAEP entirely from other outcomes like physical health, social/emotional health, and other academic subjects. But it's also a "high crime" to dismiss NAEP entirely. It is important for kids to read and reason quantitatively. 14/

But I think it's fine, more of a "misdemeanor," to generalize from NAEP scores to "academic outcomes" after establishing this context. I've used a "tip of the iceberg" metaphor previously to remember what we're not measuring. 15/

news.harvard.edu/gazette/story/…

My favorite metaphor for NAEP is that NAEP is like "the North Star." It's just one star in the sky, but its consistency and dependability help us to navigate. I credit my fellow @GovBoard alumnus (and kamaʻāina) Frank Fernandes with the metaphor. 16/

So, on Monday, beware misNAEPery of all 3 types. Distinguish between "high crimes" and "misdemeanors." (Save your outrage for the "high crimes.") And then, as I've said before, let's keep increasing our support of education. 17/

news.harvard.edu/gazette/story/…

And, in case you missed my thread yesterday on "Why is NAEP Monday important?", see here: 18/18

https://twitter.com/AndrewDeanHo/status/1583572868260327424?s=20&t=kksJqyWct1zZrpfC9qHcNg
