I guess it's a milestone for "AI" startups when they get their puff-pieces in the media. I want to highlight some obnoxious things about this one, on Cohere. #AIhype ahead...

…mail-com.offcampus.lib.washington.edu/business/rob-m…

>>

…mail-com.offcampus.lib.washington.edu/business/rob-m…

>>

First off, it's boring. I wouldn't have made it past the first couple of paragraphs, except the reporter had talked to me so I was (increasingly morbidly) curious how my words were being used.

>>

>>

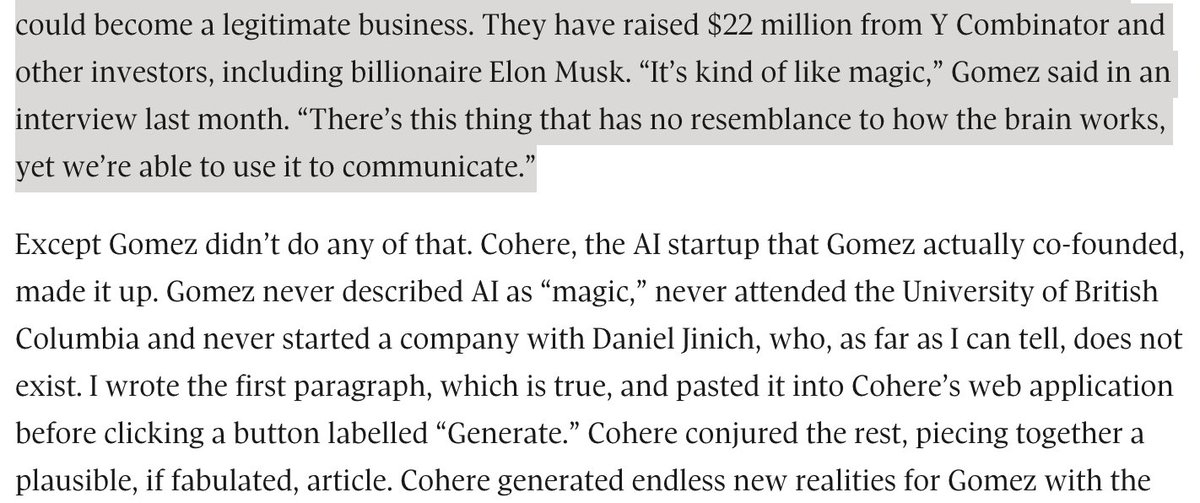

The second paragraph (and several others) is actually the output of their LLM. This is flagged in the subhead and in the third paragraph. I still think it's terrible journalistic practice.

>>

>>

It's not news that these things can generate coherent seeming text. It's not news that that means we'll increasingly have to work to build trust (and distinguish trustworthy news sources). So why would a seemingly reputable news source want to blur that boundary?

>>

>>

Then a whole bunch of boring things about the founders' college years/20s. And then this presentation of what LLMs can supposedly do, listed as "ideas" but if you missed that (because your eyes had glazed over) seeming like claims of what is actually possible.

>>

>>

Those ideas get increasingly less plausible/relevant. Yes, marketers can generate ad copy, and maybe that will help them with ideas. Yes, programmers can generate code, but is it really a time saver in the end? (How much harder is debugging?). >>

But I sure as hell don't want any lawyer working for me using a seq2seq model to "extract" information from contracts. And if the LM isn't being used generatively like that, what's the "advantage" over plain old Ctrl-F? (Or maybe using word embeddings for fuzzy search?)

>>

>>

And "more powerful voice assistants to make life easier" is to vague to mean anything and "replacing clunky keyword searches" sounds suspiciously like the terrible idea @chirag_shah and I take apart here:

dl.acm.org/doi/10.1145/34…

>>

dl.acm.org/doi/10.1145/34…

>>

After more discussion of the possible applications and who is doing what in this space, the article *finally* gets to ethical concerns: "But the more fundamental question about LLMs has nothing to do with market size or competition. It’s about how to use them responsibly."

>>

>>

Discussing Tay, the journalist pulls a quote from @Abebab and @vinayprabhu (who in turn cite @ruha9 as their inspiration), referring to them only as "a couple of AI researchers" with no link to the source. (rude)

Cohere's stance seems to be: Whelp, it's too big to actually curate/document thoroughly. You're just gonna have to live with that. As if just not building on enormous piles of internet trash were not an option.

The article also covers some "guidelines" that Cohere put out earlier this year (with OpenAI and AI21 Labs) and quotes my skeptical reading of them ... but check out the uncritical presentation of Cohere's response.

>>

>>

For the millionth time: Language models do not understand.

>>

>>

A bit lower down, the journalist again uncritically quotes this #AIhype. No, their language model isn't "figuring out" anything. (And odd to see this, given the point made earlier in the article that Cohere differs from OpenAI in not having "AGI" as its goal.)

>>

>>

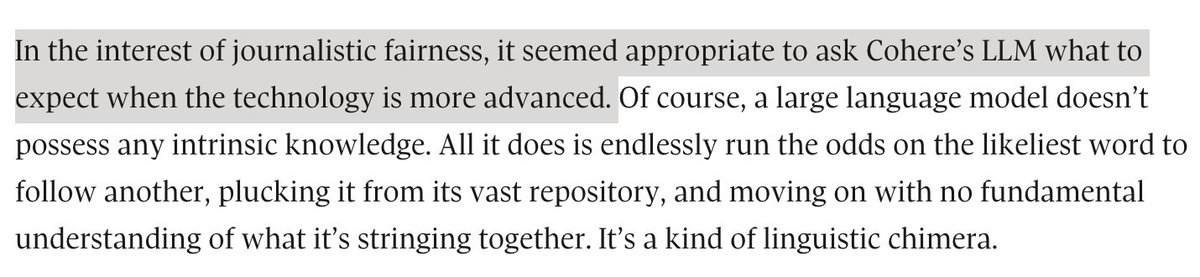

The article ends with this bit of nonsense. The journalist is quite correct that LMs have no understanding of what they are stringing together. Given that, in what world are they kind of entity that should be asked their opinion (as a kind of "journalistic fairness" no less)?

>>

>>

Once again, I think we're seeing the work of a journalist who hasn't resisted the urge to be impressed (by some combination of coherent-seeming synthetic text and venture capital interest). I give this one #twomarvins and urge consumers of news everywhere to demand better.

(Tweeting while in flight and it's been pointed out that the link at the top of the thread is the one I had to use through UW libraries to get access. Here's one that doesn't have the UW prefix: theglobeandmail.com/business/rob-m… )

• • •

Missing some Tweet in this thread? You can try to

force a refresh