Can instruction tuning improve zero- and few-shot performance on dialogue tasks? We introduce InstructDial, a framework of 48 dialogue tasks created from 59 openly available dialogue datasets.

#EMNLP2022🚀

Paper 👉 arxiv.org/abs/2205.12673

Work done at @LTIatCMU

🧵👇

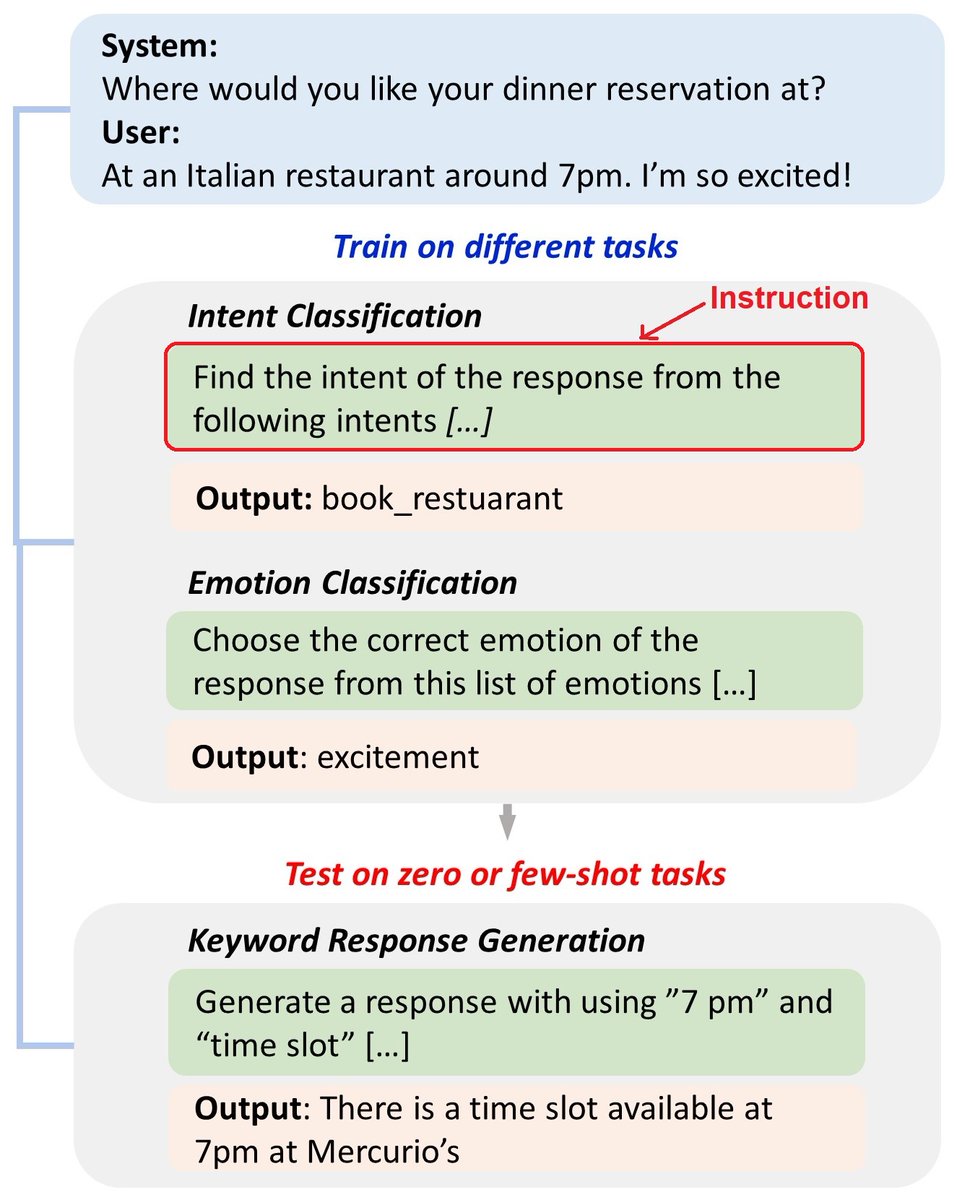

Instruction tuning means fine-tuning a model on a collection of tasks, each specified through a natural language instruction (e.g., the T0 and Flan models). We systematically studied instruction tuning for dialogue tasks and show that it works much better than you might expect!

The InstructDial framework consists of 48 diverse dialogue tasks spanning classification, grounded and controlled generation, safety, QA, pretraining, summarization, NLI, and other miscellaneous tasks. All tasks are specified through instructions in a seq2seq format.
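
To make "instructions in a seq2seq format" concrete, here is a minimal sketch of how one dialogue task instance could be flattened into a (source, target) text pair. The `format_task_example` helper and the `[CONTEXT]`, `[ENDOFTURN]` and `[OPTIONS]` markers are hypothetical placeholders chosen for illustration, not necessarily the exact template used in InstructDial.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Seq2SeqExample:
    source: str  # instruction plus serialized input, fed to the encoder
    target: str  # output text the decoder is trained to generate


def format_task_example(
    instruction: str,
    context: List[str],
    target: str,
    options: Optional[List[str]] = None,
) -> Seq2SeqExample:
    """Serialize one dialogue task instance into a (source, target) text pair.

    The [CONTEXT], [ENDOFTURN] and [OPTIONS] markers are hypothetical
    placeholders, not necessarily InstructDial's exact template.
    """
    dialogue = " [ENDOFTURN] ".join(context)
    lines = [f"Instruction: {instruction}", f"Input: [CONTEXT] {dialogue}"]
    if options:  # classification tasks expose their label set in the input
        lines.append("[OPTIONS] " + " || ".join(options))
    return Seq2SeqExample(source="\n".join(lines), target=target)


example = format_task_example(
    instruction="Identify the intent of the last utterance.",
    context=["Hi there!", "I'd like to book a table for two."],
    target="book_restaurant",
    options=["book_restaurant", "play_music", "get_weather"],
)
print(example.source)
```

Because every task (classification, generation, QA, and so on) reduces to the same text-in, text-out shape, a single encoder-decoder model can be trained on all of them at once.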

We introduce two meta-tasks to learn the association between a task's instructions, inputs, and outputs. For example, in the instruction selection task, the model selects the correct instruction for a given input-output pair. We show this improves generalization to new instructions!
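
The instruction selection meta-task can be sketched as a data-construction step: take an input-output pair from one task, mix its true instruction with instructions from other tasks, and train the model to output the correct one. The helper name, prompt wording, and the choice of uniformly sampled distractors from other tasks are assumptions for illustration only.

```python
import random
from typing import Dict, Tuple


def make_instruction_selection_example(
    task_instructions: Dict[str, str],
    task_name: str,
    input_text: str,
    output_text: str,
    num_candidates: int = 3,
    seed: int = 0,
) -> Tuple[str, str]:
    """Build one (source, target) instance for a hypothetical instruction
    selection meta-task: given an input-output pair, pick the instruction
    that produced it. Distractors are other tasks' instructions (assumption)."""
    rng = random.Random(seed)  # seeded so the candidate order is reproducible
    correct = task_instructions[task_name]
    distractors = [ins for name, ins in task_instructions.items() if name != task_name]
    candidates = rng.sample(distractors, k=min(num_candidates - 1, len(distractors)))
    candidates.append(correct)
    rng.shuffle(candidates)  # avoid always placing the answer last
    options = "\n".join(f"- {c}" for c in candidates)
    source = (
        "Instruction: Select the instruction that maps the input to the output.\n"
        f"Input: {input_text}\nOutput: {output_text}\n"
        f"Candidate instructions:\n{options}"
    )
    return source, correct


instructions = {
    "intent_detection": "Identify the intent of the last utterance.",
    "response_generation": "Generate a response that continues the dialogue.",
    "summarization": "Summarize the dialogue in one sentence.",
    "nli": "Decide whether the response contradicts the context.",
}
source, target = make_instruction_selection_example(
    instructions,
    "intent_detection",
    "[CONTEXT] I'd like to book a table for two.",
    "book_restaurant",
)
print(source)
```

Since the target is the instruction text itself, the model has to read the instruction, input, and output together to solve the task, which is the association the meta-task aims to teach.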

Our results show that our models, DIAL-T0 and DIAL-BART0, outperform the baselines and GPT-3 models on unseen tasks. Surprisingly, the smaller BART-based model outperforms the T5-based one on some tasks. Even training on just 100 instances of the test tasks leads to significant improvements!

On zero-shot dialogue evaluation, DIAL-T0 outperforms all baseline models on multi-domain evaluation sets. Our models also show strong zero- and few-shot performance on dialogue tasks such as intent detection, slot filling, and dialogue state tracking (more results in the paper).

We performed ablation studies on the impact of model size and type, number of tasks, and meta-tasks. Our experiments reveal that instruction tuning does not benefit all unseen test tasks, and that there is room to improve robustness to instruction wording and to reduce task interference.

Our code, data, and models are available at

github.com/prakharguptaz/…

We provide data readers for a huge collection of datasets, and scripts for training and evaluation.

Models can be found on Hugging Face: huggingface.co/prakharz/DIAL-…,

prakharz/DIAL-BART0,

prakharz/DIAL-FLANT5-XL😀
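
A minimal sketch of running one of the released checkpoints, assuming `prakharz/DIAL-BART0` loads as a standard Hugging Face seq2seq model through `transformers` (with PyTorch installed). The `generate` wrapper is a hypothetical helper, and the prompt in the usage note is illustrative only; the exact input template the models were trained on should be taken from the repository.

```python
def generate(
    prompt: str,
    model_name: str = "prakharz/DIAL-BART0",
    max_new_tokens: int = 32,
) -> str:
    """Run one instruction-formatted prompt through a released checkpoint.

    Assumes the checkpoint loads via AutoModelForSeq2SeqLM. Imports are local
    so this module can be imported without transformers installed.
    """
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForSeq2SeqLM.from_pretrained(model_name)
    # Encode the prompt; truncation guards against inputs beyond the model's max length.
    inputs = tokenizer(prompt, return_tensors="pt", truncation=True)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Usage, with a hypothetical prompt: `generate("Instruction: Generate a response that continues the dialogue.\nInput: [CONTEXT] Hi! Any plans for the weekend?")`. Passing `model_name="prakharz/DIAL-FLANT5-XL"` should work the same way if that checkpoint is also a seq2seq model, though it is much larger.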

We hope InstructDial will facilitate progress on instruction tuning and multi-task training for dialogue. #NLProc

Credits to co-authors: Cathy Jiao, Yi-Ting Yeh, @shikibmehri, Maxine Eskenazi, @jeffbigham 🎉