What does a #SOC tour look like when the team is remote?

TL;DR - Not a trip to a room with blinky lights - but instead a discussion about mission, mindset, ops mgmt, results and a demo of the tech and process that make our SOC “Go”.

SOC tour in the 🧵...

Our SOC tour starts with a discussion about mission. I believe a key ingredient to high performing teams is a clear purpose and “Why”.

What’s our mission? It's to protect our customers and help them improve.

Our mission is deliberately centered around problem solving and being a strategic partner for our customers. Notice that there are zero mentions of looking at as many security blinky lights as possible. That’s intentional.

Next, we talk about culture and guiding principles - key ingredients for any #SOC. I think about culture as the behaviors and beliefs that exist when management isn’t in the room.

Culture isn't memes on a slide - it's behavior and mindset.

Next, with a clear mission and mindset - how are we organized as a team to get there? Less experienced analysts are backed by seasoned responders. If there's a runaway alert (it happens), there's a team of D&R Engineers monitoring the situation and ready to respond.

The tour then focuses on operations management and how we do this for a living. You have to have intimate knowledge of what your system looks like so you know when something requires attention. Is it a rattle in the system (transient issue) or a big shift in work volume?
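
To make the "rattle vs. shift" question concrete, here's a minimal sketch, assuming daily alert counts and a simple z-score check against a recent baseline. The function name, thresholds and the three-day rule are all hypothetical illustrations, not a description of our actual tooling.

```python
from statistics import mean, stdev


def classify_volume(baseline, recent, z_threshold=3.0, sustained_days=3):
    """Label recent daily alert counts as 'normal', 'rattle' or 'shift'.

    baseline: daily counts from a period considered normal (2+ values).
    recent: daily counts to evaluate, oldest first.
    z_threshold and sustained_days are assumed values for illustration.
    """
    mu = mean(baseline)
    sigma = stdev(baseline) or 1.0  # avoid dividing by zero on a flat baseline

    # Mark each recent day that falls far outside the baseline.
    out_of_range = [abs(count - mu) / sigma > z_threshold for count in recent]

    # Find the longest run of consecutive out-of-range days.
    longest = run = 0
    for flagged in out_of_range:
        run = run + 1 if flagged else 0
        longest = max(longest, run)

    if longest >= sustained_days:
        return "shift"   # sustained change in work volume
    if longest > 0:
        return "rattle"  # short-lived, transient issue
    return "normal"


baseline = [100, 104, 98, 101, 97, 103, 99]
print(classify_volume(baseline, [101, 140, 99, 102]))
print(classify_volume(baseline, [135, 138, 141, 139]))
```

A one-day spike gets labeled a rattle, while several out-of-range days in a row get labeled a shift. A real system would likely need to account for weekday seasonality and customer onboarding, which this sketch ignores.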

With solid ops management we’re able to constantly learn from our analysts and optimize for the decision moment. We watch patterns and make changes to reduce manual effort. We hand off repetitive tasks to bots because automation unlocks fast and accurate decisions.

Next, our tour focuses on how we think about investigations. Great investigations are stories (based on evidence of course).

When we identify an incident we investigate to determine what happened, when, how it got there, and what we need to do about it. Stories.

Next, how we think about quality control in our #SOC. We make three key points:

1. We don’t trade quality for efficiency

2. You can measure quality in a SOC

3. QC checks run daily, based on a set of ISO standards from manufacturing, to spot failures and drive improvements

Sidenote: Steal/copy our SOC QC guiding principles:

- We’re going to use industry standards to sample

- The sample has to be representative of the population and done daily

- Measurements of the sample need to be accurate and precise

- Metrics we produce need to be digestible
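
One way to read "representative of the population and done daily" is a proportional stratified random sample of each day's work. Here's a minimal sketch under that assumption. The function name, the `kind` field and the sample size are hypothetical, and the sketch does not implement any particular sampling standard (the sample size would come from whichever standard you adopt).

```python
import random
from collections import defaultdict


def daily_qc_sample(items, sample_size, key, seed=None):
    """Draw a proportional stratified random sample for daily QC review.

    items: the day's work items (alerts, investigations, incidents).
    sample_size: target number of items to review (an assumed input).
    key: function returning the stratum of an item, e.g. its type.
    seed: optional seed so a day's sample can be reproduced.
    """
    rng = random.Random(seed)

    # Group items by stratum so each category is represented.
    strata = defaultdict(list)
    for item in items:
        strata[key(item)].append(item)

    total = len(items)
    sample = []
    for group in strata.values():
        # Proportional share of the sample, with at least one item per stratum.
        n = max(1, round(sample_size * len(group) / total))
        sample.extend(rng.sample(group, min(n, len(group))))
    return sample


work = [{"id": i, "kind": "alert"} for i in range(80)]
work += [{"id": i, "kind": "incident"} for i in range(80, 100)]
picked = daily_qc_sample(work, sample_size=10, key=lambda w: w["kind"], seed=7)
print(len(picked))
```

Because of rounding and the one-per-stratum floor, the result can differ slightly from `sample_size` when the strata are small or uneven.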

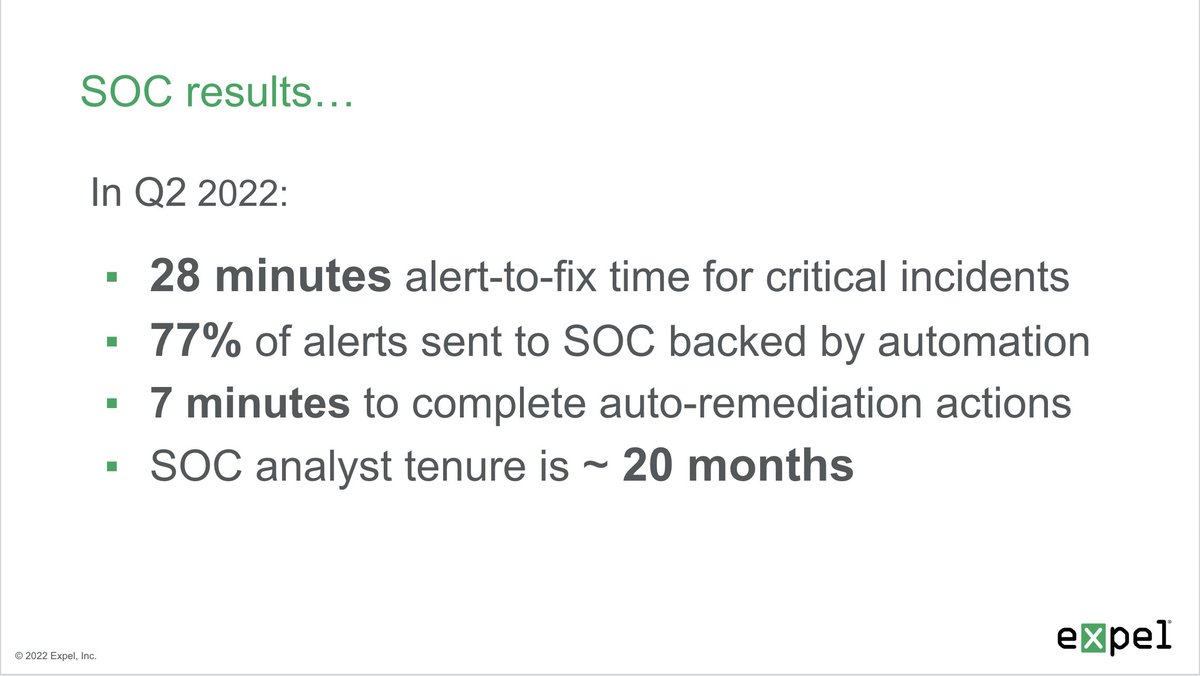

What about #SOC results? Let's talk about it. Yes, alert-to-fix in <30 minutes is quite good. But a high degree of automation and SOC retention are equally important.

Before the tour ends we share insights. The security incidents we detect become insights for every customer.

“Identity is the new endpoint”: we see a lot of BEC in M365, and MFA fatigue attacks are up.

You can d/l a copy of our Quarterly Threat Report here:

expel.com/expel-quarterl…

Then we jump into our platform and provide a demo of the tech and process that enable the #SOC to complete their mission. Here's a video capturing some of the items we cover: expel.com/managed-securi…

Finally, we stop by the #SOC. Most of our analysts will be remote - but a tour is about so much more than seeing a room with monitors and blinky lights. I believe a great SOC tour highlights the people, culture and mindset behind tech and process.
