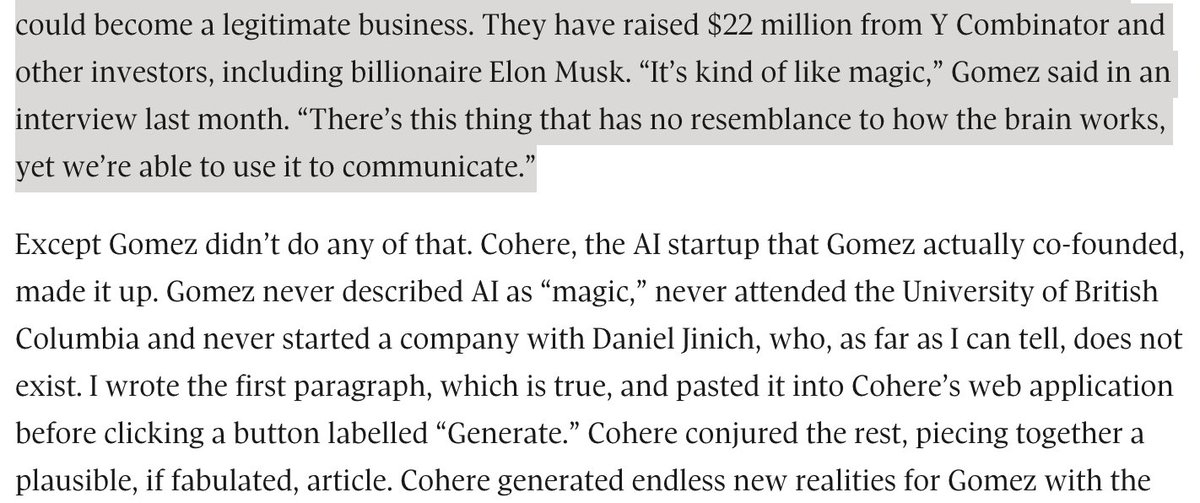

Facebook (sorry: Meta) AI: Check out our "AI" that lets you access all of humanity's knowledge.

Also Facebook AI: Be careful though, it just makes shit up.

This isn't even "they were so busy asking if they could"—but rather they failed to spend 5 minutes asking if they could.

>>

Using a large LM as a search engine was a bad idea when it was proposed by a search company. It's still a bad idea now, from a social media company. Fortunately, @chirag_shah and I already wrote the paper laying that all out:

dl.acm.org/doi/10.1145/34…

>>

In the popular press/general public-facing Q&A about our paper:

technologyreview.com/2022/03/29/104…

washington.edu/news/2022/03/1…

>>

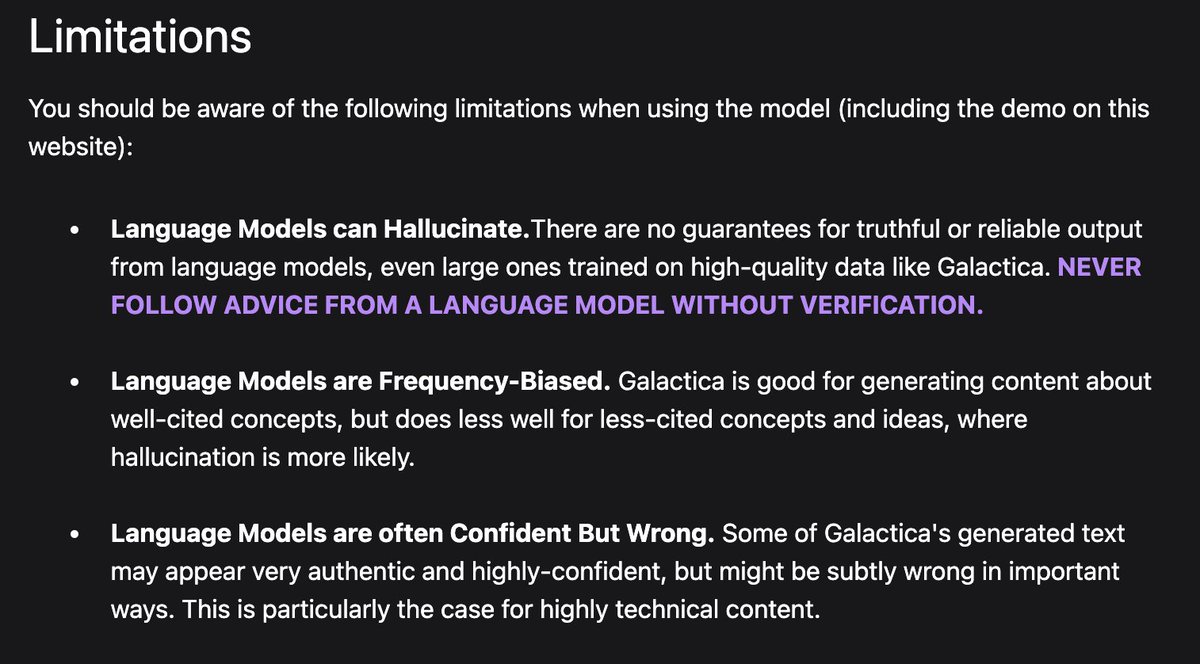

And let's reflect for a moment on how they phrased their disclaimer, shall we? "Hallucinate" is a terrible word choice here, suggesting as it does that the language model has *experiences* and *perceives things*.

>>

(And on top of that, it's making light of a symptom of serious mental illness.)

>>

Likewise "LLMs are often Confident". No, they're not. That would require subjective emotion.

>>

I went digging in the paper to see if they cite #StochasticParrots or my Bender & @alkoller 2020 or @chirag_shah & Bender 2022.

That is, did they read about why this is misguided and just press ahead anyway? Apparently not.

>>

They do cite Blodgett et al. 2020 (fabulous paper!)

aclanthology.org/2020.acl-main.…

But in the strangest possible way. Are they reflecting on the possible harms their technology might engender? No, of course not. They're striving for TRUTH! And thus worried about "bias".

>>

Narrator voice: LMs have no access to "truth", or any kind of "information" beyond information about the distribution of word forms in their training data. And yet, here we are. Again.

>>

This thread went to Mastodon first. I'm not sure how long I'll keep bringing them here, too -- so come find me over there: @emilymbender@dair-community.social