RL with KL penalties – a powerful approach to aligning language models with human preferences – is better seen as Bayesian inference. A thread about our paper (with @EthanJPerez and @drclbuckley) to be presented at #emnlp2022 🧵arxiv.org/pdf/2205.11275… 1/11

@EthanJPerez @drclbuckley RL with KL penalties is a powerful algorithm behind RL from human feedback (RLHF), the methodology heavily used by @OpenAI (InstructGPT), @AnthropicAI (helpful assistant) and @DeepMind (Sparrow) for aligning LMs with human preferences such as being helpful and avoiding harm. 2/14

@EthanJPerez @drclbuckley @OpenAI @AnthropicAI @DeepMind Aligning language models through RLHF consists of (i) training a reward model to predict human preference scores (reward) and (ii) fine-tuning a pre-trained LM to maximise predicted reward. 3/14

A problem with pure RL is that it can result in disfluent text. To prevent the LM from departing too far from its original weights, one typically adds the Kullback-Leibler (KL) divergence from the original LM in the objective. That’s the KL penalty from the first tweet. 4/14

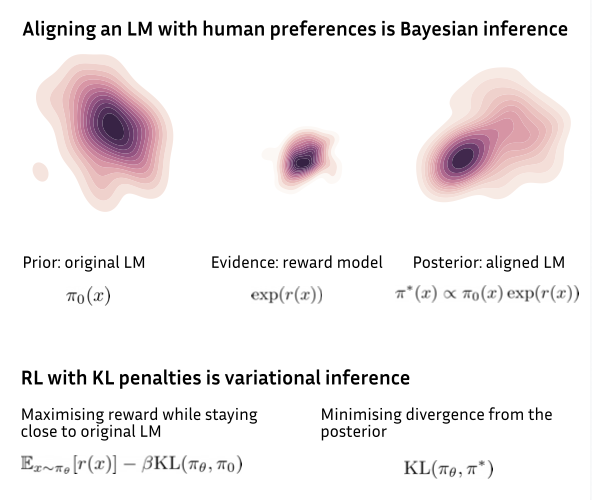

In our paper, we show that RLHF can be derived from the Bayes’ rule. The optimal policy that maximises the RL+KL objective can be seen as the posterior that optimally reconciles the pretrained model and evidence about human preferences provided by the reward model. 5/14

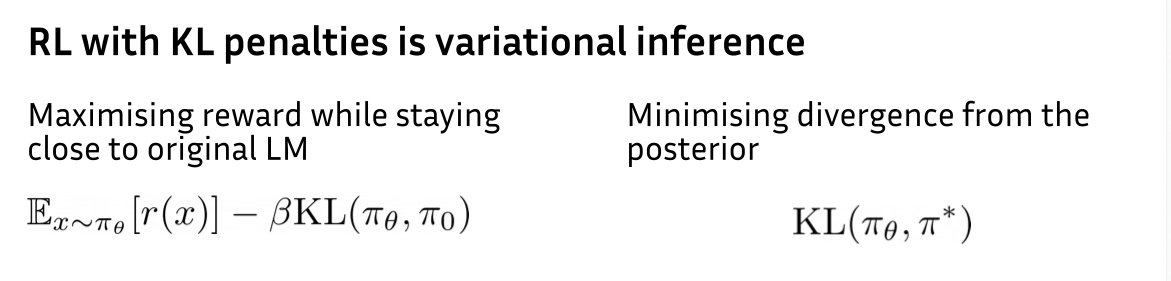

Bayesian inference problems like the one above are generally intractable, but can be solved approximately in numerous ways. In our paper, we further observe that RL with KL penalties corresponds to an established approximate inference algorithm: variational inference. 6/14

In other words, maximising the KL-regularised RL objective used in RLHF is equivalent to minimising KL from a Bayesian posterior (from the previous post) which itself is equivalent to minimising the well-known evidence lower bound (ELBo) in variational inference. 7/14

Why is this perspective insightful? First, it explains where the RLHF’s KL penalty comes from: it’s not a hack but can be derived in a principled way from the Bayes’ rule. 8/14

Without KL penalties, RL would tend to put all probability on a single, best sequence (optimal decision). Instead, the Bayesian perspective treats LMs as generative models, not optimal decision makers. An LM represents a distribution over multiple agents. 9/14

Second, Bayesian inference offers a new perspective on LM alignment: it can be seen as minimising divergence from a target distribution representing desired behaviour of an ideal, aligned LM. 10/14

It conceptually separates LM alignment into two parts: modelling (designing the target distribution) and inference (generating samples from it). RL with KL penalties is just one inference algorithm (variational inference). Other include e.g. rejection sampling. 11/14

Finally, the Bayesian perspective highlights similarities between RL with KL penalties and other other divergence-minimisation-based approaches to aligning LMs such as GDC (arxiv.org/abs/2206.00761), which is not equivalent to RL and naturally avoids distribution collapse. 12/14

To sum up: (i) RL for LMs works only with KL penalties and (i) non-RL KL approaches also work. This suggests it’s distribution matching, not RL, doing the work. What if RL simply isn’t an adequate formal framework for problems such as aligning LMs? 13/14

The paper greatly benefited from discussions with @hadyelsahar, @germank, @MarcDymetman, @jeremy_scheurer and @repligate. Here’s again the link to the paper arxiv.org/pdf/2205.11275… 14/14

• • •

Missing some Tweet in this thread? You can try to

force a refresh