Thrilled to share our latest work on @biorxivpreprint demonstrating the first real-time closed-loop ultrasonic brain-machine interface (#BMI)! 🔊🧠-->🎮🖥️

Paper link - biorxiv.org/content/10.110…

A #tweetprint. 🧵1/n

First, this work would not have been possible without co-first author @SumnerLN, co-authors @DeffieuxThomas, @GeelingC, @BrunoOsmanski, and Florian Segura, and PIs Richard Andersen, @mikhailshapiro, @TanterM, @VasileiosChris2, and Charles Liu. (2/n)

Brain-machine interfaces (BMIs) can be transformative for people with paralysis from neurological injury/disease. BMIs translate brain signals into computer commands, enabling users to control computers, robots, and more – with nothing but thought.

bit.ly/3EAgl94

(3/n)

However, state-of-the-art electrode-based BMIs are highly invasive, limited to small regions of superficial cortex, and last ~5 years. The next generation of BMIs should be longer-lasting, less invasive, and scalable to measure from numerous, if not all, brain regions.

(4/n)

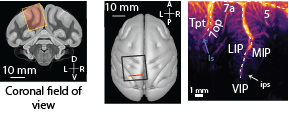

An emerging technology, functional ultrasound imaging (fUS), meets many of these attributes (see review - bit.ly/fUSreview). In our current study, we streamed 2 Hz real-time fUS images from the posterior parietal cortex in two monkeys as they made eye movements.

(5/n)

Monkeys performed a memory-guided eye movement task in eight directions. After collecting 100 trials to train the fUS-BMI, we enabled BMI control. Now, the monkey controlled the task cursor position with the fUS-BMI.

(6/n)

The fUS-BMI used 1.5 s of data from the movement planning period. In BMI mode, the predicted movement direction directly controlled the task. If the prediction was correct, we added that trial’s data to our training set and retrained the decoder before the next trial.

(7/n)
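
For readers who want to see the shape of that predict-then-retrain loop, here is a minimal sketch. It is not the decoder from the preprint: the nearest-centroid classifier is a stand-in chosen for brevity, and the names `train_centroids`, `predict`, and `run_closed_loop` are hypothetical. Each trial is assumed to be already reduced to a flat feature vector from the planning period.

```python
def train_centroids(features, labels):
    """Return {direction: mean feature vector} over the training trials."""
    sums, counts = {}, {}
    for x, y in zip(features, labels):
        acc = sums.setdefault(y, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[y] = counts.get(y, 0) + 1
    return {y: [v / counts[y] for v in acc] for y, acc in sums.items()}


def predict(centroids, x):
    """Pick the direction whose centroid is closest to x (stand-in decoder)."""
    def sqdist(c):
        return sum((a - b) ** 2 for a, b in zip(x, c))
    return min(centroids, key=lambda y: sqdist(centroids[y]))


def run_closed_loop(train_x, train_y, online_trials):
    """Decode each online trial; on a correct prediction, grow the
    training set and retrain before the next trial."""
    train_x, train_y = list(train_x), list(train_y)
    decoder = train_centroids(train_x, train_y)
    outcomes = []
    for x, cued in online_trials:
        correct = predict(decoder, x) == cued
        outcomes.append(correct)
        if correct:
            train_x.append(x)
            train_y.append(cued)
            decoder = train_centroids(train_x, train_y)
    return outcomes, len(train_x)
```

Only correct trials are added because, in this sketch, the cued direction is the label. An incorrect trial leaves the training set and decoder unchanged.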

The monkey successfully controlled the fUS-BMI in eight movement directions! Most errors neighbored the cued direction, keeping angular errors low.

(8/n)
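
As a rough illustration of what "angular error" means here, the sketch below assumes the eight directions are evenly spaced (45° apart) and indexed 0 to 7. Both are assumptions for illustration, and `angular_error_deg` is a hypothetical helper, not code from the paper.

```python
def angular_error_deg(predicted, cued, n_directions=8):
    """Smallest angle between predicted and cued directions, in degrees.

    Directions are integer indices around a circle, so the error wraps:
    index 0 and index 7 are neighbors, not seven steps apart.
    """
    step = 360.0 / n_directions
    diff = abs(predicted - cued) % n_directions
    return min(diff, n_directions - diff) * step
```

Under these assumptions, a miss onto a neighboring direction costs 45°, while a miss onto the opposite direction costs 180°.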

We used a 200 µm searchlight analysis to understand which brain regions were important for the decoding performance. The most informative voxels from the searchlight analysis were in the lateral intraparietal area (LIP), supporting its canonical role in planning eye movements.

(9/n)
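
For anyone unfamiliar with searchlight analysis, the idea can be sketched as follows: slide a small neighborhood across the image and score the decoder using only the voxels inside it. This sketch treats 200 µm as a radius and assumes 100 µm voxels on a 2D grid. Both are assumptions, `searchlight_mask` and `searchlight_map` are hypothetical names, and `score_fn` stands in for whatever decoding accuracy measure is used.

```python
def searchlight_mask(shape, center, radius_um=200.0, voxel_um=100.0):
    """List (row, col) voxels within radius_um of center, clipped to the image."""
    rows, cols = shape
    r0, c0 = center
    reach = int(radius_um // voxel_um)
    voxels = []
    for r in range(max(0, r0 - reach), min(rows, r0 + reach + 1)):
        for c in range(max(0, c0 - reach), min(cols, c0 + reach + 1)):
            dist_sq_um = ((r - r0) ** 2 + (c - c0) ** 2) * voxel_um ** 2
            if dist_sq_um <= radius_um ** 2:
                voxels.append((r, c))
    return voxels


def searchlight_map(shape, score_fn, radius_um=200.0, voxel_um=100.0):
    """Score every voxel by calling score_fn on its neighborhood.

    score_fn receives a list of (row, col) voxels and returns a number,
    e.g. cross-validated decoding accuracy restricted to those voxels.
    """
    rows, cols = shape
    return [
        [score_fn(searchlight_mask(shape, (r, c), radius_um, voxel_um))
         for c in range(cols)]
        for r in range(rows)
    ]
```

The resulting map has one score per voxel, so the highest-scoring locations show where the decodable information is concentrated.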

But wait, there’s more! An ideal BMI needs minimal or no calibration. Collecting 100 trials of data takes 30-45 minutes. We developed a pretraining method to eliminate this training period entirely.

(10/n)

For each new session, we acquire a vascular image and align the data from previous sessions to it. This allows us to train the BMI using pre-recorded data. Here, we show alignment of data collected more than two months apart.

(11/n)

This method greatly reduces, and can even eliminate, the need for calibrating the BMI after the first day.

(12/n)

This is just the beginning of the story. For a deeper dive, make sure to check out the full preprint - biorxiv.org/content/10.110…

(13/n)

Finally, thank you to the following institutions and programs for supporting me in this research -

@NINDSnews, @NatEyeInstitute, @CaltechN, @dgsomucla, @uclacaltechmstp, @ChenInstitute, and the Josephine de Karman Fellowship.

(14/14)
