We are excited to announce the release of Stable Diffusion Version 2!

Stable Diffusion V1 changed the nature of open source AI & spawned hundreds of other innovations all over the world. We hope V2 also provides many new possibilities!

Link → stability.ai/blog/stable-di…

Stable Diffusion V1 changed the nature of open source AI & spawned hundreds of other innovations all over the world. We hope V2 also provides many new possibilities!

Link → stability.ai/blog/stable-di…

Text-to-Image

The V2.0 release includes robust text-to-image models trained using a new text encoder developed by LAION with support from Stability AI, which greatly improves the quality of the generated images compared to earlier V1 releases.

The V2.0 release includes robust text-to-image models trained using a new text encoder developed by LAION with support from Stability AI, which greatly improves the quality of the generated images compared to earlier V1 releases.

The text-to-image models can generate images with default resolutions of both 512x512 & 768x768.

The models are trained on a LAION-5B aesthetic subset, which is then further filtered with LAION’s NSFW filter.

Examples of images produced using V2.0, at 768x768 image resolution:

The models are trained on a LAION-5B aesthetic subset, which is then further filtered with LAION’s NSFW filter.

Examples of images produced using V2.0, at 768x768 image resolution:

Upscaling

V2.0 also includes an Upscaler model that enhances the resolution of images by 4x. Here’s an example of our model upscaling a 128x128 generated image to 512x512. Along with our text-to-image models, V2.0 can now generate images with resolutions of 2048x2048– or higher.

V2.0 also includes an Upscaler model that enhances the resolution of images by 4x. Here’s an example of our model upscaling a 128x128 generated image to 512x512. Along with our text-to-image models, V2.0 can now generate images with resolutions of 2048x2048– or higher.

Depth-to-Image

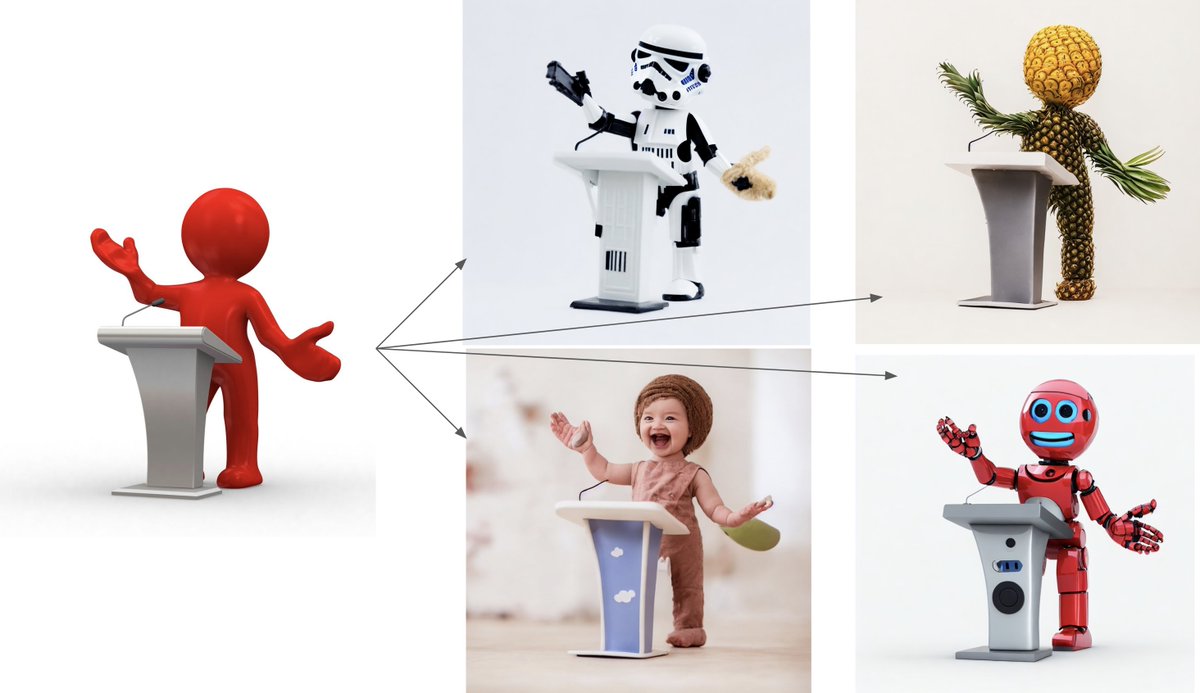

We also release a depth-guided stable diffusion model, depth2img. It infers the depth of an input image (using an existing model), and then generates new images using both the text and depth information.

We also release a depth-guided stable diffusion model, depth2img. It infers the depth of an input image (using an existing model), and then generates new images using both the text and depth information.

depth2img can offer all sorts of new creative applications, delivering transformations that look radically different from the original, but which still preserve the coherence and depth of that image:

Inpainting

Finally, we also include a new text-guided inpainting model, fine-tuned on the new Stable Diffusion 2.0 base text-to-image model, which makes it super easy to switch out parts of an image intelligently and quickly.

Finally, we also include a new text-guided inpainting model, fine-tuned on the new Stable Diffusion 2.0 base text-to-image model, which makes it super easy to switch out parts of an image intelligently and quickly.

We’ve worked hard to optimize the models to run on a single GPU, making it accessible to as many people as possible from the very start!

The models from this release will hopefully serve as a foundation of countless applications and enable an explosion of new creative potential.

The models from this release will hopefully serve as a foundation of countless applications and enable an explosion of new creative potential.

For more details about accessing the model, please check our GitHub repository: github.com/Stability-AI/s…

These models will be available at @DreamStudioAI in the coming days. Devs can check our API Platform for docs and more info (platform.stability.ai).

These models will be available at @DreamStudioAI in the coming days. Devs can check our API Platform for docs and more info (platform.stability.ai).

• • •

Missing some Tweet in this thread? You can try to

force a refresh