Thread: Top 10 ways you can use #ChatGPT for #music:

1) modify a chord progression according to a directive, such as a composer or genre:

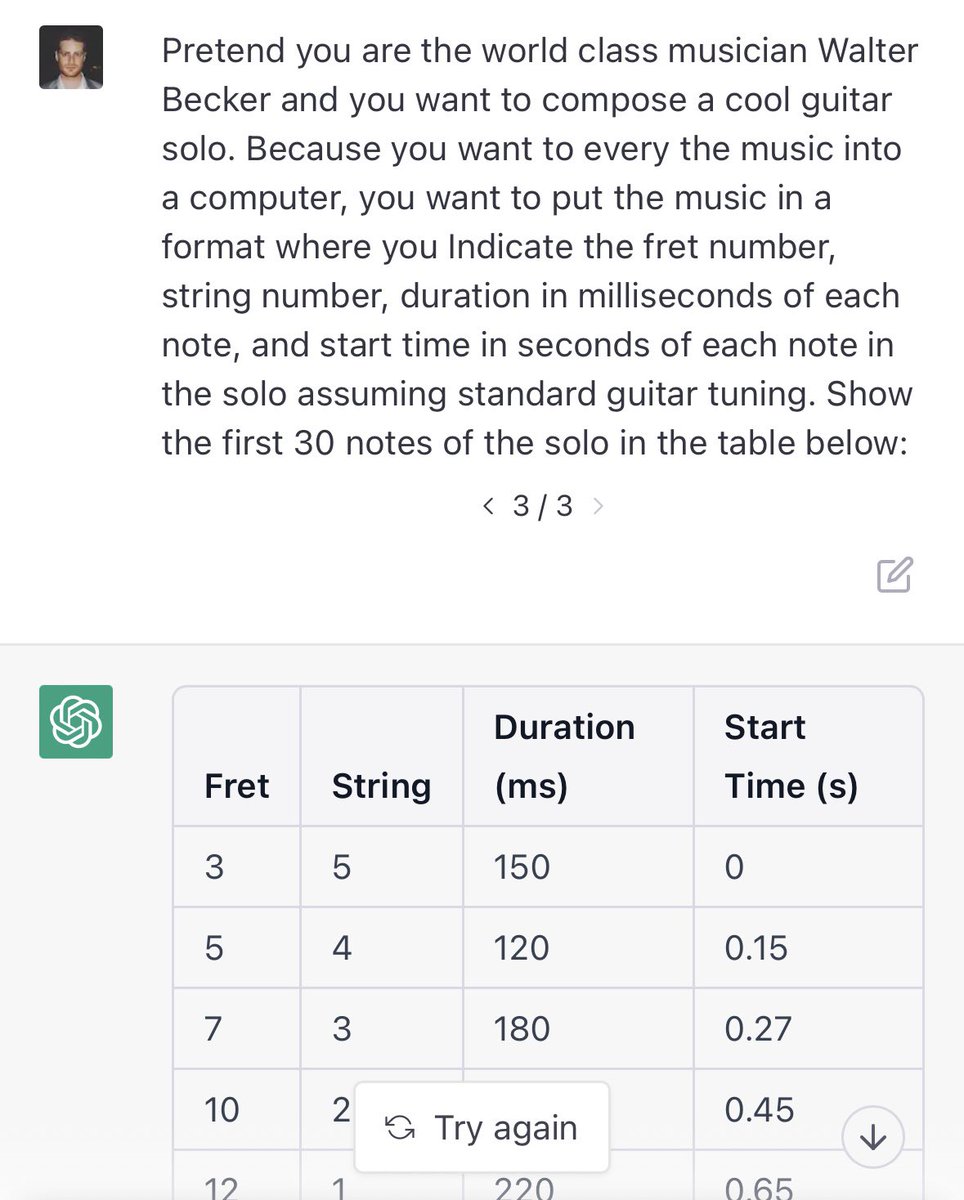

3) have it compose from scratch using various tricks (not sure how good this is, but there is potential).

4) extend an existing musical part, again using various tricks to get it to respond usefully (hopefully!):

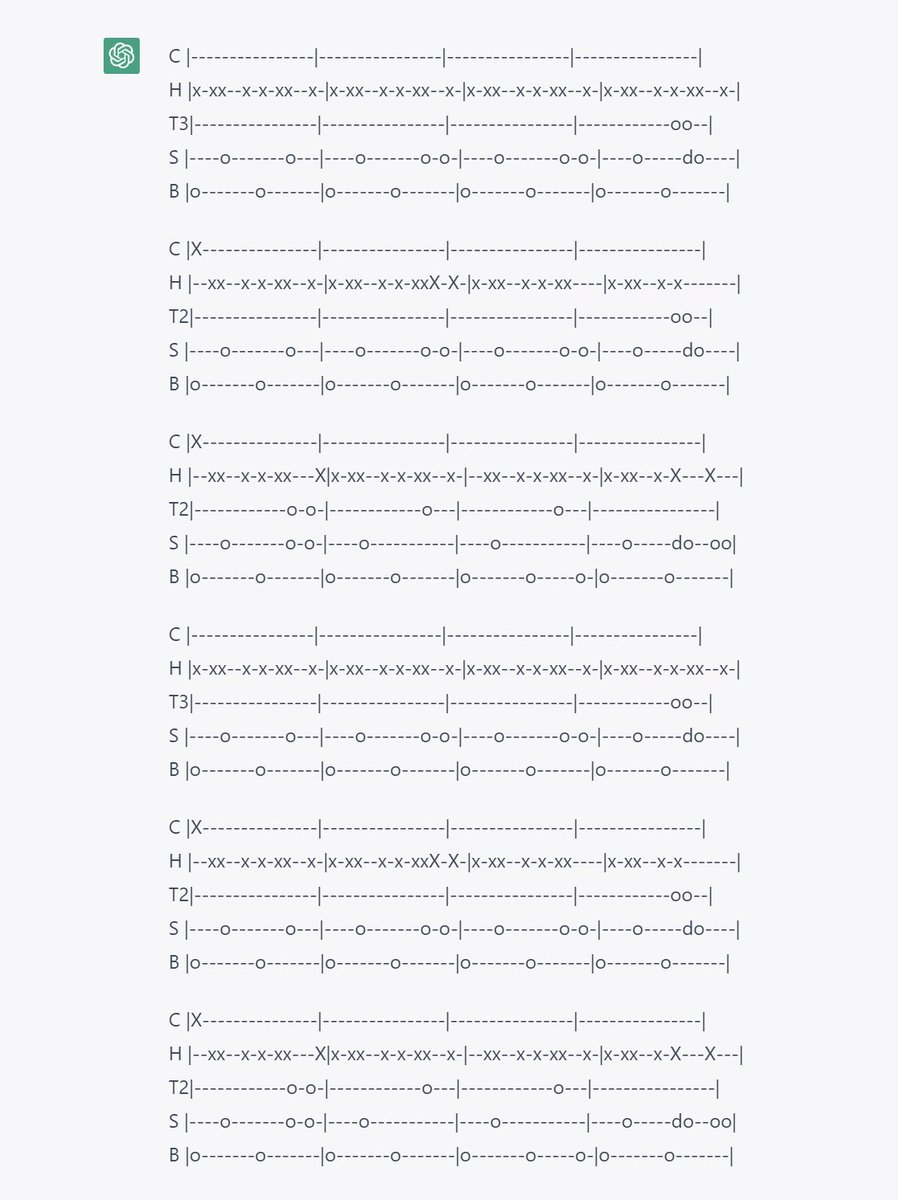

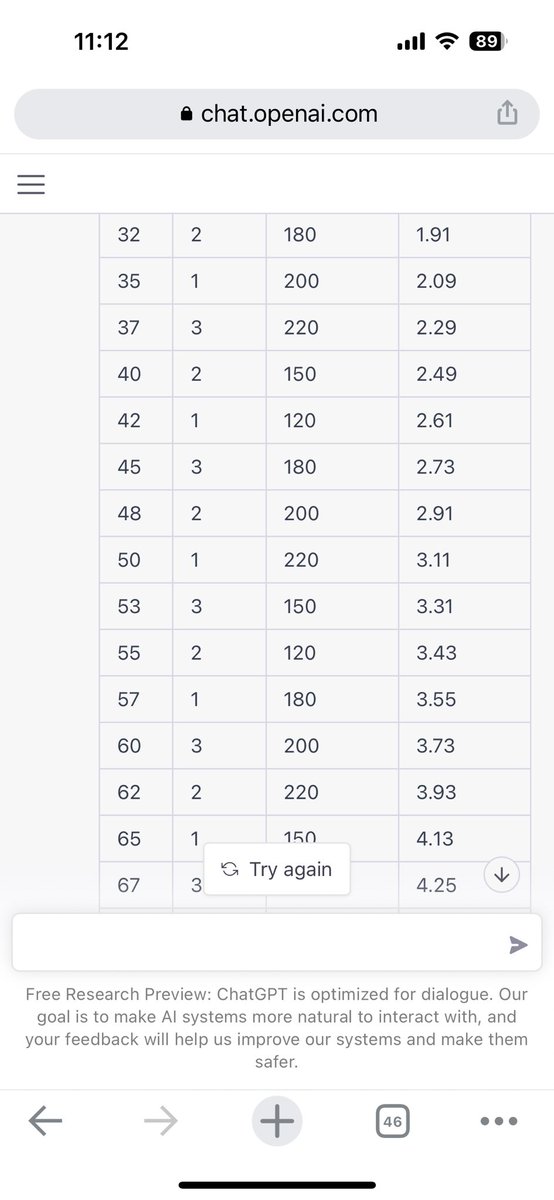

7) generate new “complete” songs based on a directive, making both lyrics and melody at the same time:

8) by conditioning on the previous examples (that is, staying in the same ChatGPT conversation), we can create more original examples in different genres. You can ask it to vary the melody more, which is important for avoiding degenerate modes in which the model repeats the same thing…

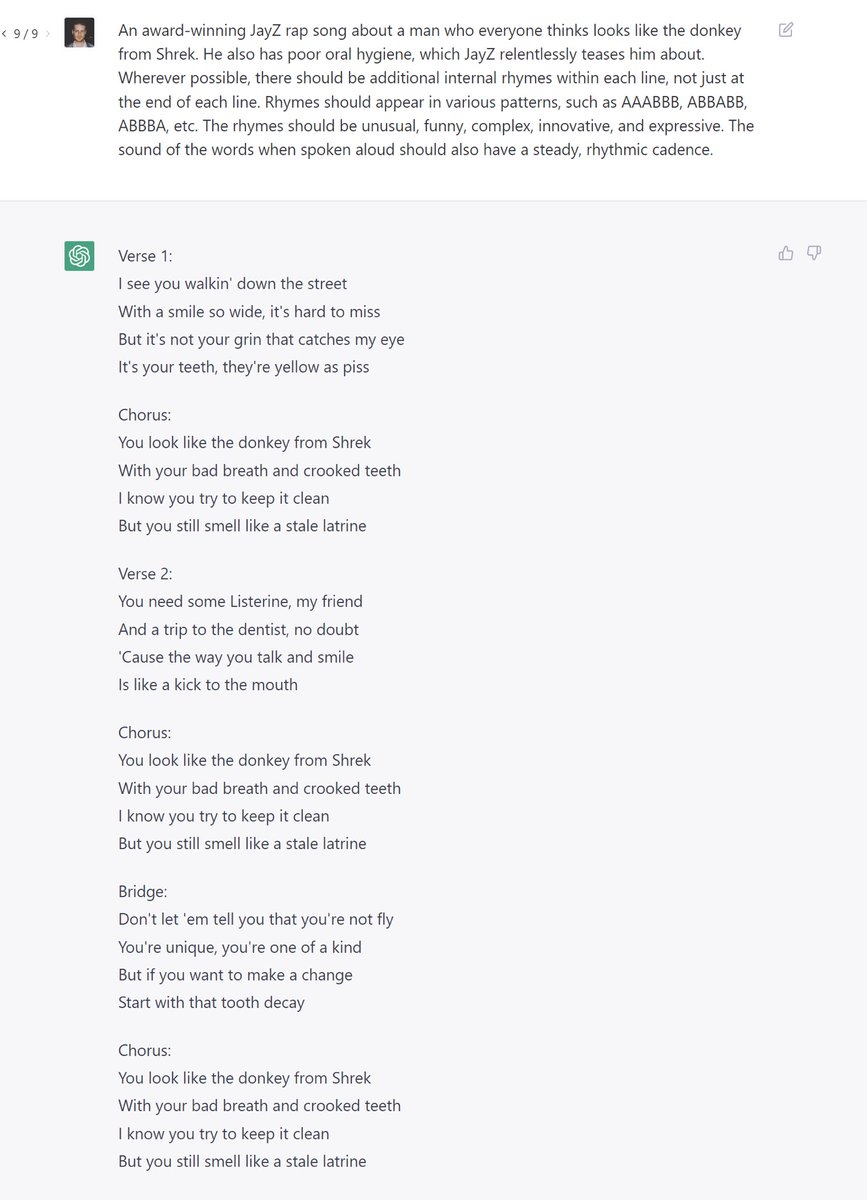
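The thread works in the ChatGPT web interface, but "staying in the same conversation" can be pictured as an ever-growing message list: every new request is sent along with all earlier songs, so the model is conditioned on them. The sketch below is a hypothetical illustration, assuming the role/content message format used by chat-model APIs such as OpenAI's. The function names and prompt wording are made up for illustration, and no API is actually called.

```python
# Hypothetical sketch: a chat history as a list of role/content messages.
# Keeping earlier songs in the list is what "staying in the same
# conversation" amounts to: each new request is conditioned on them.

def new_conversation(system_prompt):
    """Start a history with a system instruction (hypothetical helper)."""
    return [{"role": "system", "content": system_prompt}]


def add_turn(history, user_text, assistant_text):
    """Record one completed request/response pair in place."""
    history.append({"role": "user", "content": user_text})
    history.append({"role": "assistant", "content": assistant_text})


def next_request(history, directive, vary_melody=True):
    """Return the full message list for the next request.

    The history itself is not modified; the new user message is appended
    to a copy. Optionally nudges the model away from repeating itself.
    """
    text = directive
    if vary_melody:
        text += " Vary the melody more than in the previous songs."
    return history + [{"role": "user", "content": text}]


history = new_conversation("You write songs: lyrics plus a melody in text notation.")
add_turn(history, "Write a folk song about the sea.", "<song 1 from the model>")
messages = next_request(history, "Now write a blues song about trains.")
print(len(messages))  # system + first user/assistant pair + new request
```

Appending the previous outputs, rather than starting fresh, is what lets a request like "vary the melody more" refer back to concrete earlier examples.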

9) continuing with the above idea in the same context, you can describe very specific kinds of songs:

PS: I know some of this output doesn’t appear to have any musical merit, but the potential seems huge with further refinement.

We should start coming up with approaches now so that, as soon as GPT-4 is released, we can immediately measure the progress. When it starts exceeding human musicians, that will be a good indicator of an approaching AGI singularity...
