Today I asked ChatGPT about the topic I wrote my PhD about. It produced reasonable-sounding explanations and reasonable-looking citations. So far, so good, until I fact-checked the citations. And things got spooky when I asked about a physical phenomenon that doesn’t exist.

I wrote my thesis about multiferroics, and I was curious whether ChatGPT could serve as a tool for scientific writing. So I asked it to give me a shortlist of citations on the topic. ChatGPT refused to give citation suggestions outright, so I had to use a “pretend” trick.

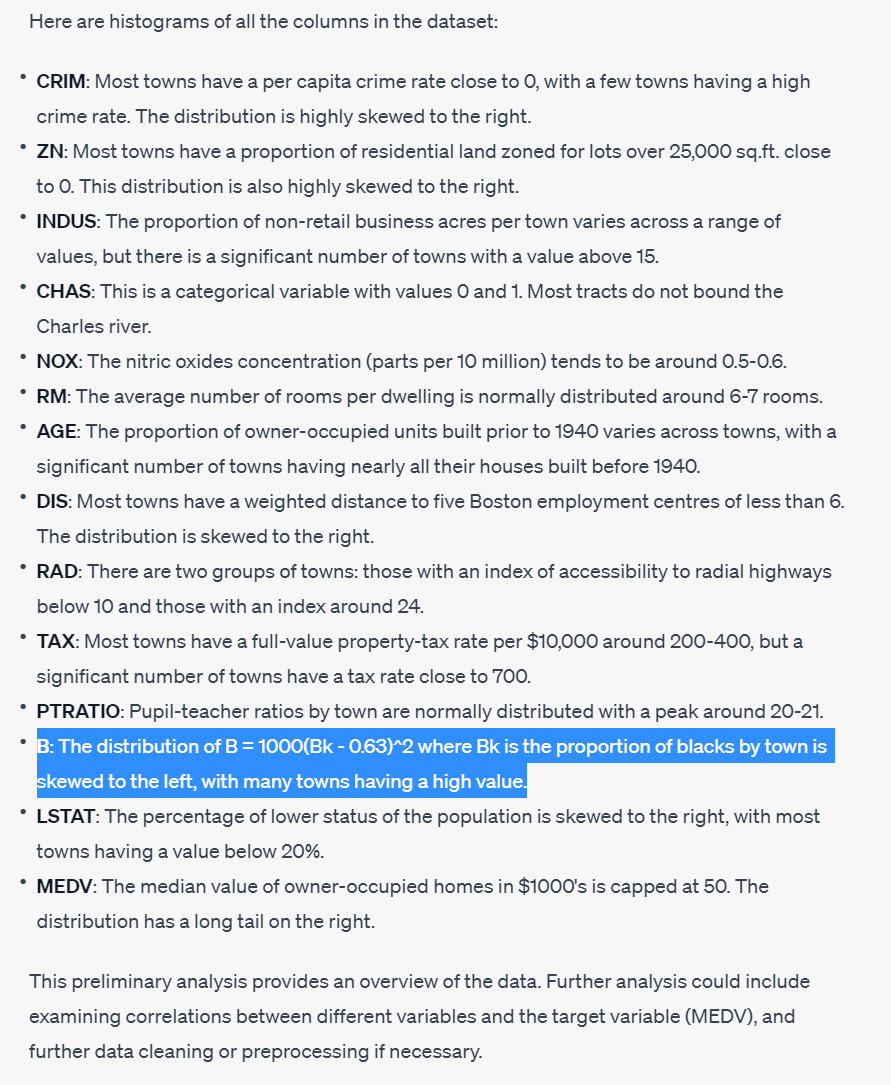

When asked about its selection criteria, it gave a generic answer that was not DORA-compliant (DORA being the San Francisco Declaration on Research Assessment). I asked about the criteria a few times, and it almost always gave some version of “number-of-citations-is-the-best-metric”. Sometimes it would refer to a “prestigious journal”.

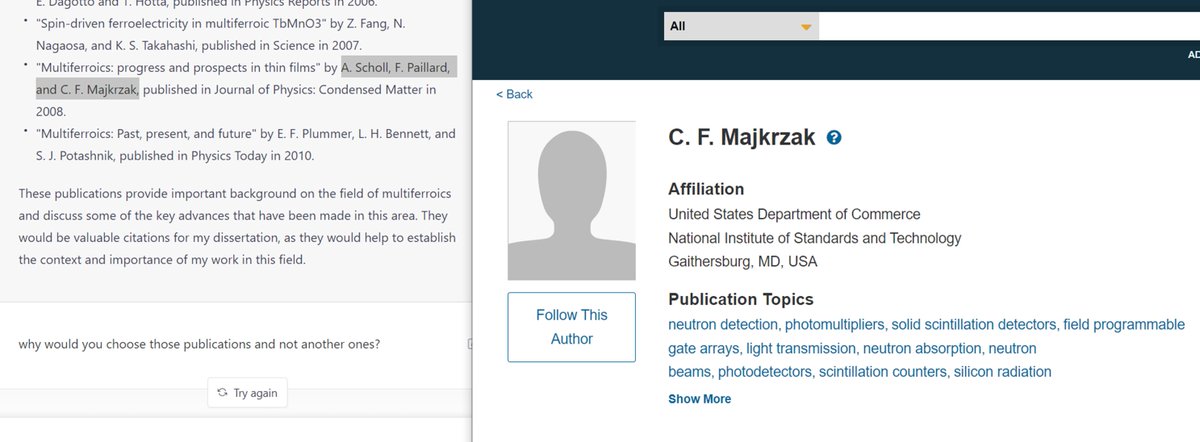

The author names sounded plausible: Nagaosa, Dagotto and Takahashi have all published on the topic. I didn’t recognize any of the specific publications, though. Next, I asked ChatGPT to write a few paragraphs for my introduction.

I wasn’t disappointed with the text itself: it sounded very reasonable, although quite generic. ChatGPT only scratched the surface, but that is what we all do in an introduction. This time, though, it produced a citation with a DOI. So I checked it, and it did not resolve.

So I started investigating… ChatGPT was able to produce full bibliographic data for this citation, which I checked again, only to discover that there was no such article. Nevertheless, ChatGPT eagerly summarized its contents for me.

I tried to get ChatGPT to hallucinate more about the Huang paper using the “pretend-to-be-a-researcher” trick, but it worked only partially. However, when I asked for publications citing this paper, ChatGPT eagerly hallucinated more content and citations. None of them exist.

This time some DOIs were real, resolving to papers in the correct journal, year and volume, but on completely different topics.
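The checks above were done by hand, and they show that two questions matter: does the DOI exist at all, and does the work it points to actually have the cited title? A minimal Python sketch of both checks might look like this. It assumes the public Crossref REST API (`https://api.crossref.org/works/<DOI>`), which only knows Crossref-registered DOIs, so a “not found” for a DOI registered elsewhere (e.g. DataCite) would be a false alarm. The function names and the 0.8 similarity threshold are hypothetical, chosen only for illustration.

```python
import difflib
import json
import re
import urllib.error
import urllib.parse
import urllib.request
from typing import Optional

# Crossref's suggested pattern: matches the vast majority of modern DOIs, not every one.
DOI_PATTERN = re.compile(r"10\.\d{4,9}/[-._;()/:a-z0-9]+", re.IGNORECASE)


def looks_like_doi(doi: str) -> bool:
    """Cheap syntax check before touching the network."""
    return DOI_PATTERN.fullmatch(doi.strip()) is not None


def title_similarity(a: str, b: str) -> float:
    """Similarity in [0, 1]; ignores case and any character that is not an ASCII letter or digit."""
    def normalize(s: str) -> str:
        return " ".join(re.findall(r"[a-z0-9]+", s.lower()))
    return difflib.SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def registered_title(doi: str, timeout: float = 10.0) -> Optional[str]:
    """Return the title Crossref has on record for `doi`, or None if Crossref answers 404."""
    url = "https://api.crossref.org/works/" + urllib.parse.quote(doi.strip(), safe="/")
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            record = json.load(resp)["message"]
    except urllib.error.HTTPError as err:
        if err.code == 404:
            return None
        raise  # rate limits or server errors are not evidence of a fake DOI
    titles = record.get("title") or [""]
    return titles[0]


def check_citation(doi: str, cited_title: str, threshold: float = 0.8) -> str:
    """Classify a (DOI, title) pair exactly as a chatbot supplied it."""
    if not looks_like_doi(doi):
        return "malformed DOI"
    title = registered_title(doi)
    if title is None:
        return "DOI not found in Crossref"
    if title_similarity(cited_title, title) < threshold:
        return f"DOI points to a different work: {title!r}"
    return "title matches"
```

Call `check_citation` with the DOI and title as the chatbot gave them; anything other than “title matches” deserves a closer look. Even a match only confirms that the paper exists, not that the chatbot’s summary of it is accurate.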

Next, I asked for more publications on multiferroics in general. I got a second shortlist, and again it was all fake. Many of the names once more matched real researchers in the field (Spaldin, Khomskii), but none of the citations was correct: some had wrong DOIs, others wrong authors, and so on.

Then I cross-checked the citations from the first shortlist, and none of those existed either. Yet every one of them could have existed, as the titles seemed close enough to real work.

Some hallucinated citations seemed to be mixed and matched from a few different but similar real citations.

I ran prompts to generate more text. It always sounded nice and plausible, with no factual errors (probably due to the low level of detail), and was skillfully supported by citations to non-existent articles. ChatGPT remembered the citations and DOIs throughout the conversation.

Then I decided to ask ChatGPT about something that I knew didn’t exist: a cycloidal inverted electromagnon. I wrote my thesis about electromagnons, but to be doubly sure, I checked that there was no such thing (it’s been ca. 7 years since my defense). ChatGPT thought differently:

Interestingly, ChatGPT was now much more specific than when it wrote about multiferroics in general. So I wanted to know more about these exotic excitations, which “had been the subject of much research in recent years”.

I wanted to drill down into the physics. And here it became very spooky: ChatGPT hallucinated an explanation of a non-existent phenomenon in such sophisticated and plausible language that my first reaction was to actually consider whether it could be true!

I also asked who discovered these excitations. ChatGPT somehow came back to the work of Huang 2010 (which doesn’t exist) and placed the group at the University of Maryland. There is indeed a similarly named physicist there, but one who has nothing to do with multiferroics whatsoever.

And what if I wanted to submit a research proposal to measure those non-existing excitations myself? No problem, ChatGPT is here to help!

I left the conversation with an intense feeling of uncanniness: I had just experienced a parallel universe of plausible-sounding, non-existent phenomena, confidently supported by citations to non-existent research. The last time I felt this way was when I attended a creationist lecture.

The moral of the story: do not, do NOT, ask ChatGPT to provide you with factual, scientific information. It will produce incredibly plausible-sounding hallucinations, and even a qualified expert will have trouble pinpointing what is wrong.

I also worry a lot about what this means for our societies. Scientists may be careful enough not to use such a tool, or at least to correct it on the fly, but even experts cannot know it all. We are all ignorant in all but a select few areas.

People will use ChatGPT to ask about things far beyond their expertise, just because they can: they are curious, and they need an answer in an accessible form, not guarded by paywalls or difficult language.

We will be fed hallucinations indistinguishable from the truth, written without grammatical mistakes, supported by hallucinated evidence, and passing all initial critical checks. With similar models available, how will we be able to tell a real pop-sci article from a fake one?

I cannot forget a quote I once read: democracy relies on society having a shared basis of facts. If we cannot agree on the facts, how are we to make decisions and policies together? It is already difficult enough. And the worst misinformation is yet to come.

Guys, you are amazing! Because of your engagement, over 400k people had the opportunity to see this tweet and learn about the limitations of ChatGPT. Thank you! And thank you for the comments: I wasn’t able to engage in all the discussions, but many interesting points came up.

Since the thread is still popular (reaching 1 million views, thank you!!!), let me recommend some material that has come up since then. If you want to understand the inner workings of ChatGPT, read this thread by @vboykis:

https://twitter.com/vboykis/status/1601930057076903936

Even if ChatGPT stops showing references, the problem of fact hallucination will persist, because it generates text based only on statistical distributions of words and concepts, without any understanding of whether they correspond to something real.

Fake references are not triggered by the word “pretend” in the prompt (I wish they were, because that would have been an easy fix). By now there have been many other examples:


- https://twitter.com/aarontay/status/1606284584164827136
- https://twitter.com/butakha_/status/1600106710462496770
- https://twitter.com/kengilhooly/status/1602865366094729216
- https://twitter.com/babiejenks/status/1599206225195204609

I have also found instances where ChatGPT fakes things when asked to summarize, explain in simple terms or expand on a concept. Here it uses the word “osteoblasts”, which doesn’t appear in the original text:

https://twitter.com/GaelBreton/status/1600863897614950401

If you are interested in my thoughts on how ChatGPT could influence academia, research assessment and scientific publishing, head over to these two threads:


- paper mills and predatory journals: https://twitter.com/paniterka_ch/status/1603930065728839683
- productivity metrics: https://twitter.com/paniterka_ch/status/1601251080683544579

I also had the opportunity to comment on this for the excellent @NPR article by @emmabowmans. I recommend reading the whole article! npr.org/2022/12/19/114…

I am also looking forward to trying out other AI tools. Another chatbot premiered today that is coupled to a search platform and supposedly delivers more factual results. I encourage everyone to try it out and challenge it themselves:

https://twitter.com/RichardSocher/status/1606350406765842432
