We’re happy to release Stable Diffusion, Version 2.1!

With new ways of prompting, 2.0 provided fantastic results. 2.1 supports the new prompting style and also brings back many of the old prompts!

Link → stability.ai/blog/stabledif…

The differences are more data, more training, and less restrictive filtering of the dataset. The dataset for v2.0 was filtered aggressively with LAION’s NSFW filter, making it a bit harder to get similar results when generating people.

We listened to our users & adjusted the filters. Adult content is still stripped out, but less aggressively, which cuts down on false positives. We fine-tuned v2.0 with this updated setting, giving us a model which hopefully captures the best of both worlds!

The model also has the power to render non-standard resolutions. That helps you do all kinds of awesome new things, like working with extreme aspect ratios that give you beautiful vistas and epic widescreen imagery. #StableDiffusion2

The community noticed that “negative prompts” worked wonders with 2.0 & they work even better in 2.1! Negative prompts allow a user to tell the model what not to generate.

Negative prompts are now supported in @DreamStudioAI by appending “|<negative prompt>:-1” to the prompt.
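
As a rough illustration of that syntax, here is a minimal Python sketch that builds a prompt string in the “|<negative prompt>:-1” form. The helper name `with_negative_prompt` and the example prompts are hypothetical, and how DreamStudio treats surrounding whitespace is an assumption, not something the thread specifies.

```python
def with_negative_prompt(prompt: str, negative: str) -> str:
    """Append a negative prompt in the '|<negative prompt>:-1' form.

    Hypothetical helper for illustration only; it just builds a string
    and does not call DreamStudio or any other service.
    """
    prompt = prompt.strip()
    negative = negative.strip()
    # With nothing to exclude, leave the prompt unchanged.
    if not negative:
        return prompt
    # Assumption: no spaces are needed around '|' or before ':-1'.
    return f"{prompt}|{negative}:-1"


if __name__ == "__main__":
    print(with_negative_prompt("a castle at dawn", "blurry, low quality"))
```

The resulting string is what you would paste into the prompt box.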

For more details about accessing and downloading v2.1, please check out the release notes on our GitHub: github.com/Stability-AI/s…

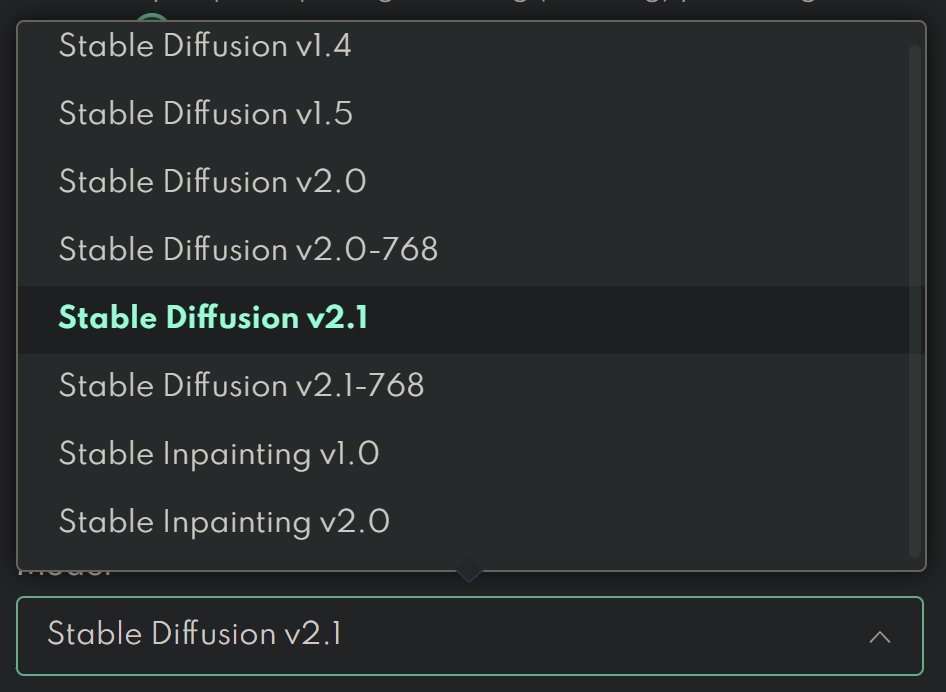

Additionally, you can try v2.1 right now on @DreamStudioAI at beta.dreamstudio.ai

We know open is the future of AI and we’re committed to developing current & future versions of @StableDiffusion in the open. Expect more models and releases to come fast and furious and some amazing new capabilities as generative AI gets more and more powerful in the new year.
