Here's one to add to the LLM debates: in a new paper, we found that GPT-3 matches or exceeds human performance on zero-shot analogical reasoning, including on a text-based version of Raven's Progressive Matrices.

arxiv.org/abs/2212.09196…

Thread:

Analogical reasoning is often viewed as the quintessential example of the human capacity for abstraction and generalization, allowing us to approach novel problems *zero-shot*, by comparing them to more familiar situations.

Given the recent debates surrounding the reasoning abilities of LLMs, we wondered whether they might be capable of this kind of zero-shot analogical reasoning, and how their performance would stack up against human participants.

We focused primarily on Raven's Progressive Matrices (RPM), a popular visual analogy problem set often viewed as one of the best measures of zero-shot reasoning ability (i.e., fluid intelligence).

We created a text-based benchmark -- Digit Matrices -- closely modeled on RPM, and evaluated both GPT-3 and human participants.

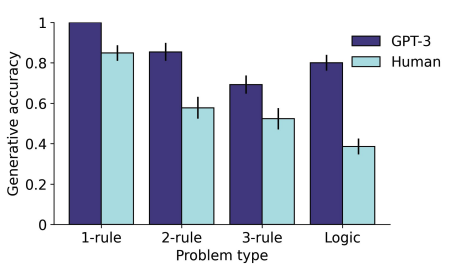
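To give a feel for what a text-based matrix problem can look like, here is a minimal sketch in Python. It is purely illustrative: the function names, the bracketed layout, and the single "progression" rule are assumptions made for this example, not the actual Digit Matrices generation code or prompt format.

```python
def progression_matrix(start=1, step=1, size=3):
    """Build a size x size grid whose values grow by `step` along rows and columns.

    Hypothetical helper: a simple progression rule stands in for the richer
    rule types a real benchmark would use.
    """
    return [[start + step * (r + c) for c in range(size)] for r in range(size)]


def to_prompt(grid):
    """Render the grid as bracketed text rows, leaving the final cell open.

    The bracket layout is an assumption chosen for readability.
    """
    lines = []
    for r, row in enumerate(grid):
        cells = [f"[{v}]" for v in row]
        if r == len(grid) - 1:
            cells[-1] = "["  # the cell the solver has to complete
        lines.append(" ".join(cells))
    return "\n".join(lines)


grid = progression_matrix(start=1, step=1)
prompt = to_prompt(grid)
answer = grid[-1][-1]  # value that completes the pattern

print(prompt)
print("expected completion:", answer)
```

A solver (human or model) sees only the prompt text and has to infer the rule from the first two rows and the partial third row.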

GPT-3 outperformed human participants both when generating answers from scratch, and when selecting from a set of answer choices. Note that this is without *any* training on this task.

We also found that the pattern of human performance on this new task was very close to the pattern seen for standard (visual) RPM problems, suggesting that this task is tapping into similar processes.

GPT-3 also displayed several qualitative effects that were consistent with known features of human analogical reasoning. For instance, it had an easier time solving logic problems when the corresponding elements were spatially aligned.

We also took a look at some other analogy tasks, including tasks that involved more complex, naturalistic relations. These included four-term verbal analogies, where GPT-3 matched human performance...

... and a classic problem-solving task (Duncker's radiation problem), in which a solution can be discovered by making an analogy between the problem and a previously presented story.

Finally, we also tested GPT-3 on letter string analogies. @MelMitchell1 previously found that GPT-3 performed very poorly on these problems:

medium.com/@melaniemitche…

but it seems that the newest iteration of GPT-3 performs much better.
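For readers unfamiliar with letter string analogies, the classic form is "abc → abd, ijk → ?". The sketch below handles only the simplest case, where the last letter is replaced by its alphabetic successor. The function name and the rule are assumptions for illustration and say nothing about how GPT-3 or human participants actually solve these problems.

```python
from typing import Optional


def successor_rule(source: str, target: str, probe: str) -> Optional[str]:
    """Solve 'source -> target, probe -> ?' for the last-letter-successor rule only.

    Returns None when the source/target pair does not follow that rule,
    or when the probe's last letter has no successor.
    """
    if not source or not probe or len(source) != len(target):
        return None
    same_prefix = source[:-1] == target[:-1]
    last_is_successor = ord(target[-1]) == ord(source[-1]) + 1
    if not (same_prefix and last_is_successor):
        return None
    if probe[-1] in ("z", "Z"):
        return None  # no successor within the alphabet
    return probe[:-1] + chr(ord(probe[-1]) + 1)


result = successor_rule("abc", "abd", "ijk")
print(result)
```

The interesting problems are the ones where a hard-coded rule like this breaks down and the transformation has to be generalized to a new setting.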

Overall, we were shocked that GPT-3 performs so well on these tasks. The question now, of course, is whether it's solving them in anything like the way that humans do. Does GPT-3 implement, in an emergent way, any of the features posited by cognitive theories...

... e.g., relational representations, variable binding, analogical mapping, etc., or has it discovered a completely novel way of performing analogical reasoning? (Or are these cognitive theories wrong?) Lots to investigate.

A few caveats are also necessary -- GPT-3's reasoning is of course not human-like in every respect. It has no episodic memory and poor physical reasoning skills, and, most notably, it has received *far* more training than humans do (though not on these tasks).

Nevertheless, the overall conclusion is that GPT-3 does appear to possess the core features that we associate with analogical reasoning -- the ability to identify complex relational patterns, zero-shot, in novel problems.
