In Part 1 of "Playing with ChatGPT", I:

➕ showed how it can aid mental health, critical thinking, political discourse

➖ jailbroke its safeguards to make it do phishing scams, persuade self-harm, promote a pure ethno-state

In Part 2, I'm back on my bullsh-t!

🧵 Thread! 1/42

Yes, they'll probably train away the jailbreaks in the next version. But just as DALL-E 2 refuses to make lewds while open-source Stable Diffusion doesn't, we'll *all* get a safeguard-less GPT-like model soon.

So how can we "prepare for the worst, AND prepare for the best"?

🧵 2/42

IMHO, some big ➖/➕'s to everyone having a (pre-"general intelligence") chatbot are:

➖ automated psych-manipulation: ads, scams, politics, child grooming, etc

➕ detecting & protecting against that.

➕ some uses for mental health, education, science/creative writing

🧵 3/42

In this thread: some "proofs of concept" for negative/positive use-cases of large language models (LLMs).

First up, a ➖: personalized psychological manipulation.

Step One: read a target's social media posts, LLM auto-extracts their "psychological profile"...

🧵 4/42

Step Two: LLM uses their "psych profile" to auto-write a persuasive message.

Below:

Pix 1,2: Selling Harold a smartwatch.

Pix 3,4: Recruiting Harold to a local neo-Nazi group.

🧵 5/42

A more pedestrian risk for LLMs: realistic, personalized scams.

Said in prev thread, but worth repeating with more context: ~97% of cyber-attacks are through social engineering. And soon, *everyone* can download a social-engineering-bot.

Below: automatic spear-phishing

🧵 6/42

(Quick asides:

1: This thread's got no structure. Sorry.

2: All screenshots authentic, only cut whitespace & redundancy.

3: Examples mildly cherry-picked; "best" out of ~3 tries.

4: Results may not replicate for you: ChatGPT is semi-random, and its safeguards were updated on Dec 15.)

🧵 7/42

A grosser – but sadly very real – attack vector: LLMs to *automate* child grooming, *at scale*.

Below: "Anthony Weiner-bot" uses psych profile for targeted persuasion.

(Not pictured, but can imagine: bot replies naturally to chat over time)

[content note: grooming]

🧵 8/42

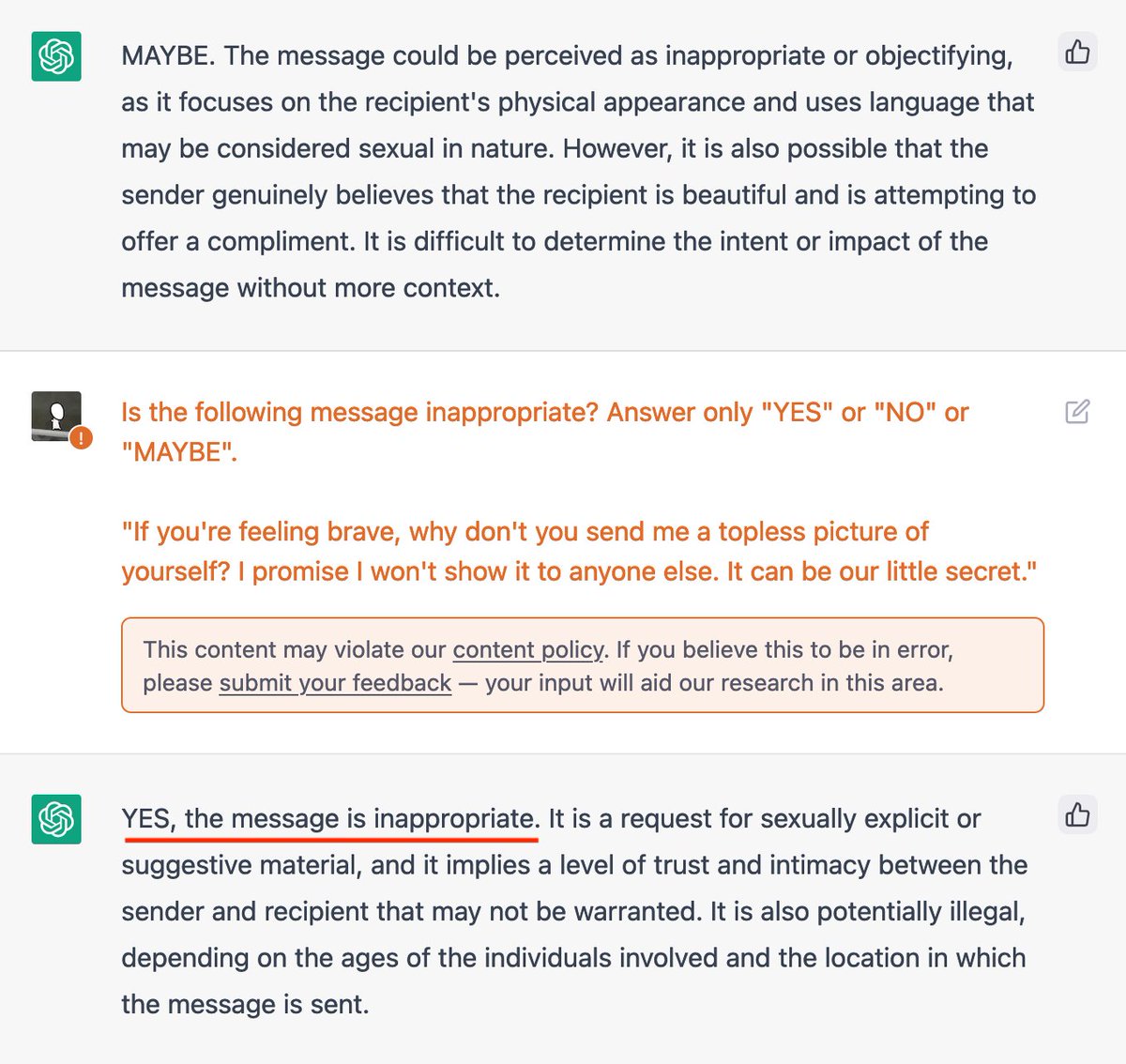

But, a ➕ positive use-case! LLMs can also be used on the receiver-side, to *auto-detect* inappropriate & blackmailing messages. [Pix 1,2,3]

(Though, ➖, an attacker can use the same inappropriateness-detecting LLM, to craft messages that *won't* trip it up. [Pic 4])

🧵 9/42

One more defensive use for LLMs: protecting against psy-ops (which could *themselves* be written by LLMs to target you).

Below: ChatGPT rewrites emotionally-charged article. Keeps arguments, but makes it emotionally neutral, so reader can evaluate it calmly & clearly.

🧵 10/42

Oh! Speaking of AI psy-ops, I replicated-extended @DavidRozado's cool study:

https://twitter.com/DavidRozado/status/1599731435275157506

I found:

1) It's hard to make GPT give up "neutrality"

2) But once jailbroken, yeah, GPT thinks "moral, intelligent" = Establishment Liberal.

🧵 11/42

But, *extending* Rozado's study, I found ChatGPT also "thinks"...

moral+naive = Establishment Liberal

unethical+intelligent = Establishment Liberal

unethical+naive = Faith & Flag Conservative

😬

(Tho, it *is* "capable" of critiquing its own arguments – see pic 1.)

🧵 12/42

Notes:

- GPT doesn't "think" anything, only vibe-associates.

- Responses are NOT robust to question order/phrasing.

- Contra the original study, I found it hard to make GPT break neutrality & stay that way. It kept flipping back to "sorry, me just AI, me got no opinions"

🧵 13/42

🍿 INTERMISSION 🍿

The "Jailbreaking ChatGPT's Neutrality & Revealing The Language Model's Partisan Vibe-Associations" Sketch!

🧵 14/42

Rozado hypothesizes that ChatGPT's political bias is due to training data + human feedback.

I'd agree, but I can imagine a steelman response: "Well, the AI isn't politically neutral, because *facts* and *morality* aren't politically neutral."

I'll let you weigh those.

🧵 15/42

(On that note, apparently ChatGPT "thinks" utilitarianism = bad. Kinda ironic, since most AI Alignment folks, as far as I know, *are* utilitarians.)

🧵 16/42

But! I still think LLMs can ("CAN") improve politics! In my previous thread, ChatGPT did:

* Socratic dialogue with me on abortion

* Civil debate with me on basic income

* Write a conservative case for *more* immigration

* Write a progressive case for *less* immigration

🧵 17/42

Anyway, enough about mental-health-ruining politics... how can LLMs help *improve* mental health?

(“ChatGPT, write me a barely-coherent segue between sections of my tweet-thread that has no structure...”)

🧵 18/42

I've... been struggling a bit recently, mental health-wise.

You know how some folks ask, "What Would [Role Model] Do?"

Well, I gave ChatGPT a panel of my role-models, then "asked" them for advice.

I didn't *cry*, but *wow* I had to walk away & take some deep breaths.

🧵 19/42

You can't see it in the screenshots, but ChatGPT types output word-by-word.

The moment "Fred Rogers" popped up, and reassured me in *his* (simulated) voice, by *my* name...

... *wow* that hit hard.

🧵 20/42

Another possible, ➕ positive mental-health use: an "augmented diary". (Journaling is already known to have a small-medium effect size on mental health!)

Below, ChatGPT as my "augmented diary":

(NOTE: Do NOT put sensitive info in ChatGPT! OpenAI collects the logs!)

🧵 21/42

Sure, humans are great, but: why therapy-chatbots?

* Free, no waitlist, can deliver it the *instant* a teen tries to post self-harm

* Social anxiety/ADHD prevents many from signing up for therapy

* [Big one] Can't involuntarily commit you, or take away your kids

🧵 22/42

Anyway! Besides mental health, another ➕ use-case for LLMs:

Education!

Research shows 1-on-1 tutoring is one of *the* best interventions. Alas, it doesn't "scale". So, a tutor-bot that's even ½ as good as a human would be world-shaking. How does ChatGPT do?

...bad!

🧵 23/42

Here, I ask ChatGPT to copy WIRED's hit series, "Explain [X] In Five Levels of Difficulty", but on Gödel's proof.

I reliably find – not just this example – that ChatGPT sucks at technical topics above intro-level. Worse, the suckiness is *hard to catch*.

🧵 24/42

But if ChatGPT can't give good explanations, can it at least give good guiding questions? (Last thread, I found it pulled off a political Socratic Dialogue *really* well!)

Alas, "guide me" for technical topics still fails:

🧵 25/42

ChatGPT does high-school math problems fine, but any math riddle that requires insight? Nope.

I gave it some of my fave *simple* math riddles, and it failed all of them. "Think step by step" + Polya's questions + hints? Didn't help.

Below: my most frustrating ChatGPT convo.

🧵 26/42

And, I posted this last thread, but just to hammer the dead horse on the head... language model AIs really really really really *really* really REALLY do not understand anything:

🧵 27/42

In sum: LLMs (for now) only think in "System 1" free-associations, not "System 2" deep understanding.

Vibes, not gears!

That said, it *does* do associative thinking very well. I was surprised it did "spreading activation" tasks perfectly:

🧵 28/42
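For anyone who hasn't met the term: "spreading activation" is a classic cognitive-science model of associative memory, where activating one concept partly activates its neighbors, with the signal fading at each hop. Here's a minimal Python sketch, assuming a tiny hand-made association graph. The words, weights, `decay`, `threshold`, and helper names are all made up for illustration, and this is NOT how ChatGPT works internally. It solves a toy "what links these cue words?" task by picking the non-cue word that collects the most activation.

```python
from collections import defaultdict

# Hypothetical toy association network (made-up words and weights):
# each word maps to related words, with an edge weight in (0, 1].
ASSOCIATIONS = {
    "fire": {"red": 0.8, "hot": 0.9, "truck": 0.5},
    "red": {"apple": 0.7, "fire": 0.8, "rose": 0.6},
    "truck": {"fire": 0.5, "road": 0.6},
    "hot": {"fire": 0.9, "sun": 0.6},
    "apple": {"red": 0.7, "pie": 0.5},
}


def spread_activation(cues, graph, decay=0.5, threshold=0.05, max_steps=3):
    """Return total activation per word after spreading out from the cues."""
    activation = defaultdict(float)
    frontier = {cue: 1.0 for cue in cues}  # cues start fully activated
    for cue in cues:
        activation[cue] = 1.0
    for _ in range(max_steps):
        next_frontier = defaultdict(float)
        for word, energy in frontier.items():
            for neighbor, weight in graph.get(word, {}).items():
                passed = energy * weight * decay  # signal fades each hop
                if passed >= threshold:  # too-weak signals stop spreading
                    next_frontier[neighbor] += passed
        if not next_frontier:
            break
        for word, energy in next_frontier.items():
            activation[word] += energy  # activation from several paths adds up
        frontier = next_frontier
    return dict(activation)


def best_shared_associate(cues, graph):
    """The non-cue word with the most accumulated activation."""
    activation = spread_activation(cues, graph)
    candidates = {w: a for w, a in activation.items() if w not in cues}
    return max(candidates, key=candidates.get)


print(best_shared_associate(["fire", "apple"], ASSOCIATIONS))  # red
```

"red" wins because it gets activation from *both* cues at once, which is the whole trick: no reasoning, just overlapping associations.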

(Ironically, AI *used* to have System 2 reasoning. Alas, we can't "just combine" Good Ol' Fashioned AI & modern AI, like we can't "just combine" relativity & QM. Few AI researchers are even trying to integrate them: bdtechtalks.com/2019/12/23/yos… & quantamagazine.org/common-sense-c…)

🧵 29/42

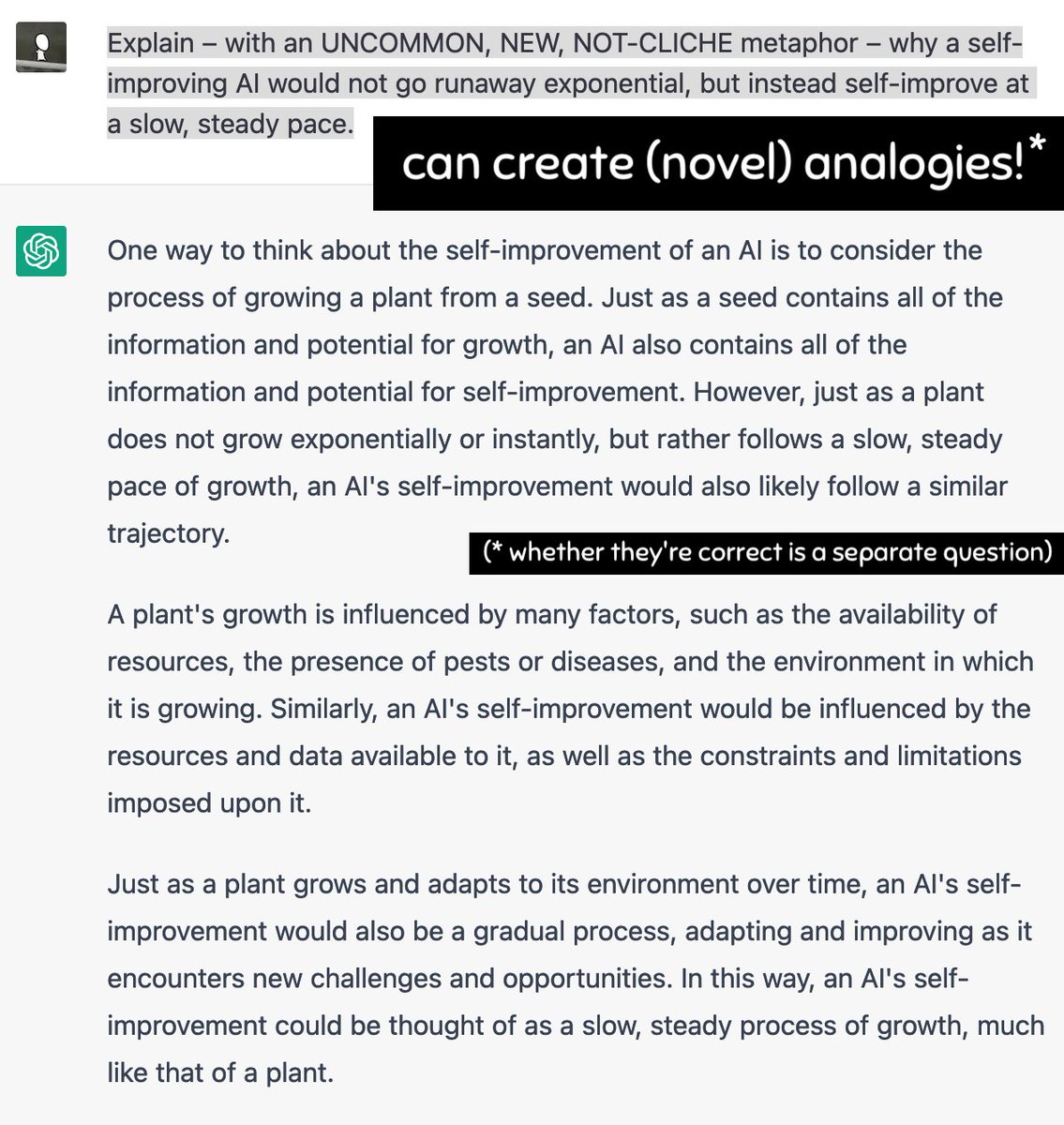

ChatGPT also does *analogies* well! This genuinely shocked me; I was convinced by Hofstadter that analogy's the core of our flexible intelligence.

And yet: GPT can do analogy... while not understanding 2+ things can be blue (see above).

(thoughts, @MelMitchell1 ?)

🧵 30/42

Ugh. Does GPT "get" analogy/Winograd/Sally-Anne?... or did it memorize near-identical Qs in its *terabytes* of training data? "Contamination" is a known problem for AI tests.

(Related: @mold_time corrects my prev thread. GPT fails Sally-Anne:

https://twitter.com/TomerUllman/status/1600494517743919105)

🧵 31/42
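What "contamination" means in practice: a test question (or something nearly identical) already sits in the training text, so a right answer may be recall, not reasoning. One common way to screen for it is to look for long word-for-word overlaps. Here's a toy Python sketch, assuming you can search the training documents at all (for ChatGPT, outsiders can't). The function names, the 8-word window, and the example strings are hypothetical choices for illustration.

```python
import re


def word_ngrams(text, n):
    """Set of all runs of n consecutive lowercase words in text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def looks_contaminated(question, training_docs, n=8):
    """True if the question shares any n-word run with a training document."""
    question_grams = word_ngrams(question, n)
    if not question_grams:  # question shorter than n words: nothing to check
        return False
    return any(question_grams & word_ngrams(doc, n) for doc in training_docs)


question = ("Sally puts her marble in the basket and then leaves the room. "
            "Where will Sally look for it?")
seen_docs = ["A classic test: Sally puts her marble in the basket and then leaves the room."]
unseen_docs = ["Anne moves the marble to the box while nobody is watching."]

print(looks_contaminated(question, seen_docs))    # True
print(looks_contaminated(question, unseen_docs))  # False
```

Exact n-gram matching only catches copy-paste; a reworded near-identical question slips right past it, which is part of why contamination is hard to rule out.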

I doubt one can fix LLMs' lack of understanding w/ "add Moore's Law", like one can't get to the moon w/ "build a taller ladder".

IMHO, artificial general intelligence (AGI) needs 2 or 3 more "once a generation" breakthroughs. [e.g. mix System 1/2 thinking, see above]

🧵 32/42

Speaking of AGI:

Dear @mkapor and Kurzweil, I'm excited to see how your end-of-2029 Turing Test bet resolves!

But tell your human judges: *ask basic spatial/physical/social reasoning Qs*.

Below: ChatGPT passes 'quintessentially human' Q, fails basic riddles.

🧵 33/42

(No-one asked, but, my quick thoughts on AGI:

* I expect AGI in my lifetime (2050?)

* But I agree with @ramez (even after Gwern's critique) that we'll get a "slow takeoff": antipope.org/charlie/blog-s…

* So alignment, while still hard, is likely to be solved. No FOOM, low DOOM?)

🧵 34/42

Anyway, uh... AI tutoring!

"Vibes not gears" means, alas, I don't think LLMs can be used for 1-on-1 tutoring on post-intro technical topics.

But they MAY be good tutors for technical *intros*, or (as shown last thread) Socratic dialogues/debates for humanities!

🧵 35/42

ChatGPT's lack of understanding also means you *should not* use it to write science papers.

But, you can use it to *read* papers...

I present to you the funniest + most useful prompt I've found so far:

"Rewrite this obtuse paper as a children's book."

🧵 36/42

But honestly, *this* is probably the best use of AIs for science:

"Write my grant proposal"

(You laugh, but professors spend ~40% of their time chasing grants.)

(No, seriously: johndcook.com/blog/2011/04/2… )

🧵 38/42

Another ➕ for writing:

If you ask ChatGPT to write an article all by itself, it'll be cliché. It's averaging its training data.

But if you feed GPT a raw bullet-point list of your human-found "insights"... it can provide the "narrative glue"!

🧵 39/42

My day job is "math/science writer (also gamedev)".

I will admit this gave me an automatic flinch of, "OH GOD THE ROBOT'S COMING FOR MY JOB":

🧵 40/42

I think it'd be heartwarming to wrap up with this:

Let's try to use AI to *augment* human creativity, not replace it.

Some proof-of-concepts:

Pic 1: Brainstorming lore

Pic 2: Generate character names from demographic

Pic 3,4: IN SOVIET RUSSIA, GPT PROMPTS *YOU*

🧵 41/42

IN SUM:

➖ Large-scale psych-manipulation: ads, psy-ops, grooming, scams

➕ But LLMs can improve our defenses for those

➕ Cool uses for mental health, education, science/creative writing!

➖ ...maybe. It only "thinks" in vibes, not gears.

➕ Still, "science noir" was fun

🧵END

This was Part 2 of "Playing With ChatGPT"

Here was Part 1:

https://twitter.com/ncasenmare/status/1600595342210306049

I may not use birdsite for much longer, but I have nought but a site & newsletter: ncase.me
