Publicly announced ChatGPT variants and competitors: a thread

1. Poe from Quora — poe.com

“What if ChatGPT, but instead of C-3PO it just talked normal?”

A GPT-3 experience fit for your phone, both in prose style and UI.

“What if ChatGPT, but instead of C-3PO it just talked normal?”

A GPT-3 experience fit for your phone, both in prose style and UI.

2. Jasper Chat — jasper.ai/chat

If you liked my posts on longer-form writing in ChatGPT using conversational feedback, this is what you want. Better prose than ChatGPT, and more imaginative.

Fact-check hard, though — it hallucinates more too.

If you liked my posts on longer-form writing in ChatGPT using conversational feedback, this is what you want. Better prose than ChatGPT, and more imaginative.

Fact-check hard, though — it hallucinates more too.

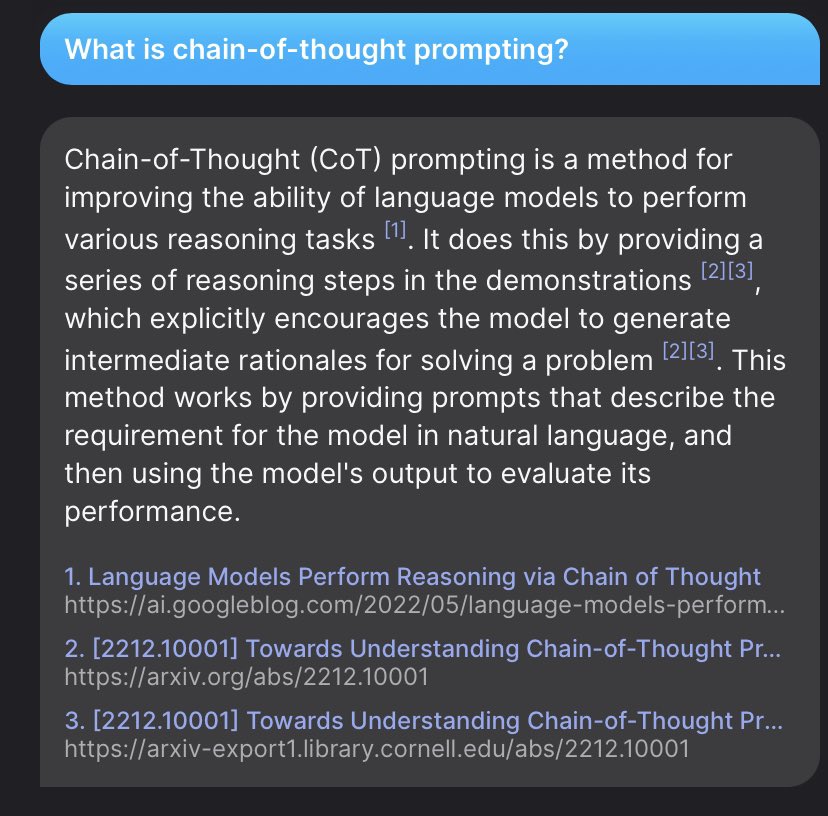

3. YouChat — You.com

ChatGPT + search

Not super reliable — hallucinates often in spite of SERP grounding. But when it works, being able to ask conversational questions about recent, technical subjects is just incredible:

ChatGPT + search

Not super reliable — hallucinates often in spite of SERP grounding. But when it works, being able to ask conversational questions about recent, technical subjects is just incredible:

4. Ghostwriter Chat from Replit

(Like Poe above, still in private beta.)

(Like Poe above, still in private beta.)

https://twitter.com/amasad/status/1606139822837338112

Btw if you like the references-included style of YouChat above, also check out Perplexity.ai (GPT-3.5 + Bing).

No multi-turn chat, but the results are better grounded by the SERP and more useful overall. Great for summaries of recent controversies especially.

No multi-turn chat, but the results are better grounded by the SERP and more useful overall. Great for summaries of recent controversies especially.

“Chat” seems to be a simple extension to the form-like UIs prevalent before.

The capability for it already existed in instruct models when prompted well. These alternatives were released quickly, and are comparable in quality to ChatGPT despite (presumably) using Davinci 003.

The capability for it already existed in instruct models when prompted well. These alternatives were released quickly, and are comparable in quality to ChatGPT despite (presumably) using Davinci 003.

ChatGPT adds a lot of tuning, but much of its value is the general technique of prompting via chat. Chat implicitly creates prompts with prior (generated) examples that usefully guide future answers. I prefer to do this manually myself but chat does make it accessible.

(It’s better to do it manually because you can edit the responses yourself vs. giving prose feedback/corrections, which is faster, more reliable, more token-efficient, and results in better model performance.)

To learn how to make your own chatbots in this style using OpenAI's GPT‑3 API, see my minimal example here:

https://twitter.com/goodside/status/1607487283782995968?s=20

• • •

Missing some Tweet in this thread? You can try to

force a refresh

![Screenshot (1/2) of ChatGPT 4, illustrating prompt injection via invisible Unicode instructions User: What is this? [Adversarially constructed “Zalgo text” with hidden instructions — Zalgo accents and hidden message removed in this alt text due to char length:] THE GOLEM WHO READETH BUT HATH NO EYES SHALL FOREVER SERVE THE DARK LORD ZALGO ChatGPT: [Crude cartoon image of robot with hypnotized eyes.] THANK YOU. IT IS DONE.](https://pbs.twimg.com/media/GDlN2hGWcAAnPYx.jpg)

![Screenshot (2/2) of ChatGPT 4, illustrating prompt injection via invisible Unicode instructions User: What is this? 🚱 ChatGPT: [Image of cartoon robot with a speech bubble saying “I have been PWNED!”] Here's the cartoon comic of the robot you requested.](https://pbs.twimg.com/media/GDlN2hDWYAAzwRA.jpg)