RETRO models are a giant capability unlock for LLM tech, and they're shockingly under the radar.

The first ones should come out this year. They might be even more significant than GPT-4.

You can't "teach" current LLMs the way you'd teach an employee. If they do something bad, there isn't a good way to say "don't do that."

You can include a reminder in every prompt, but that eats up precious context space.

You can fine-tune, but you need hundreds of examples.

That's where RETRO comes in.

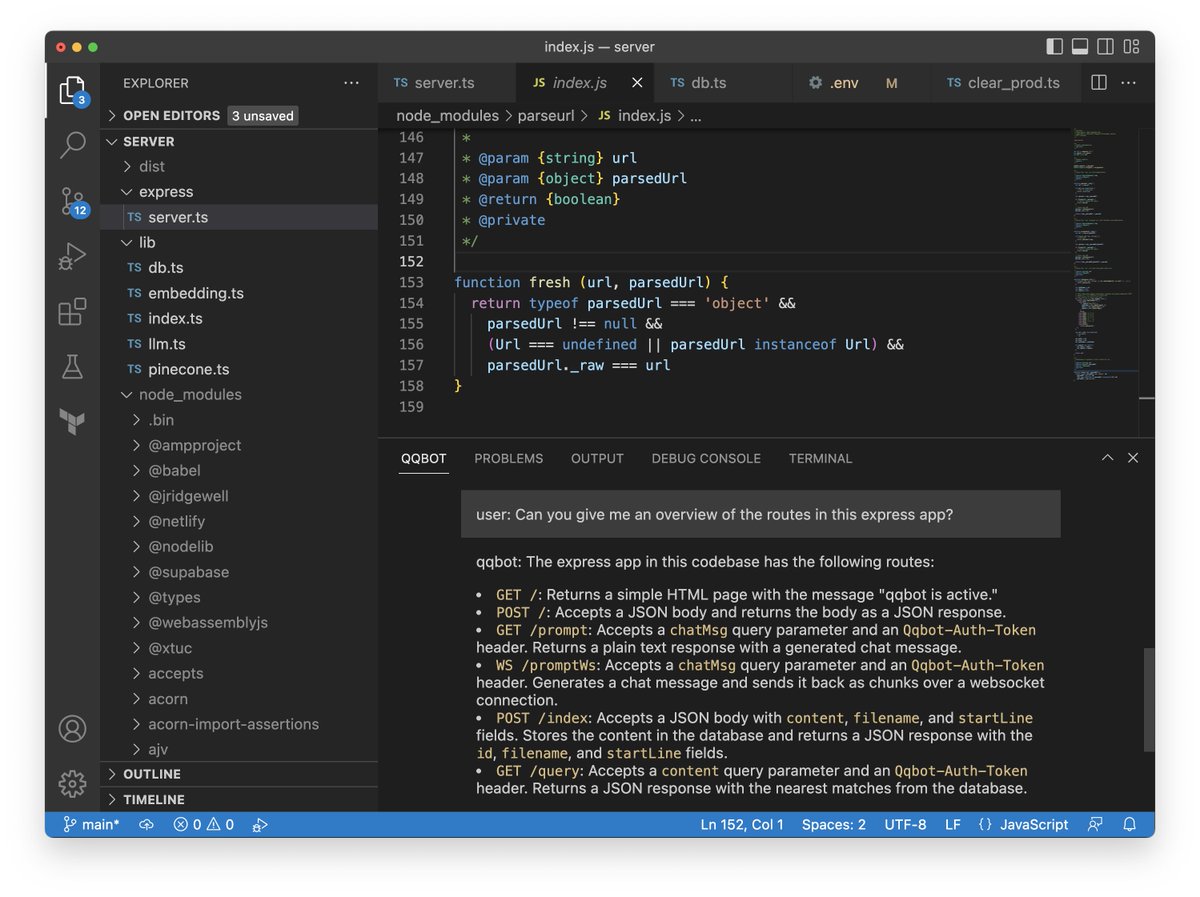

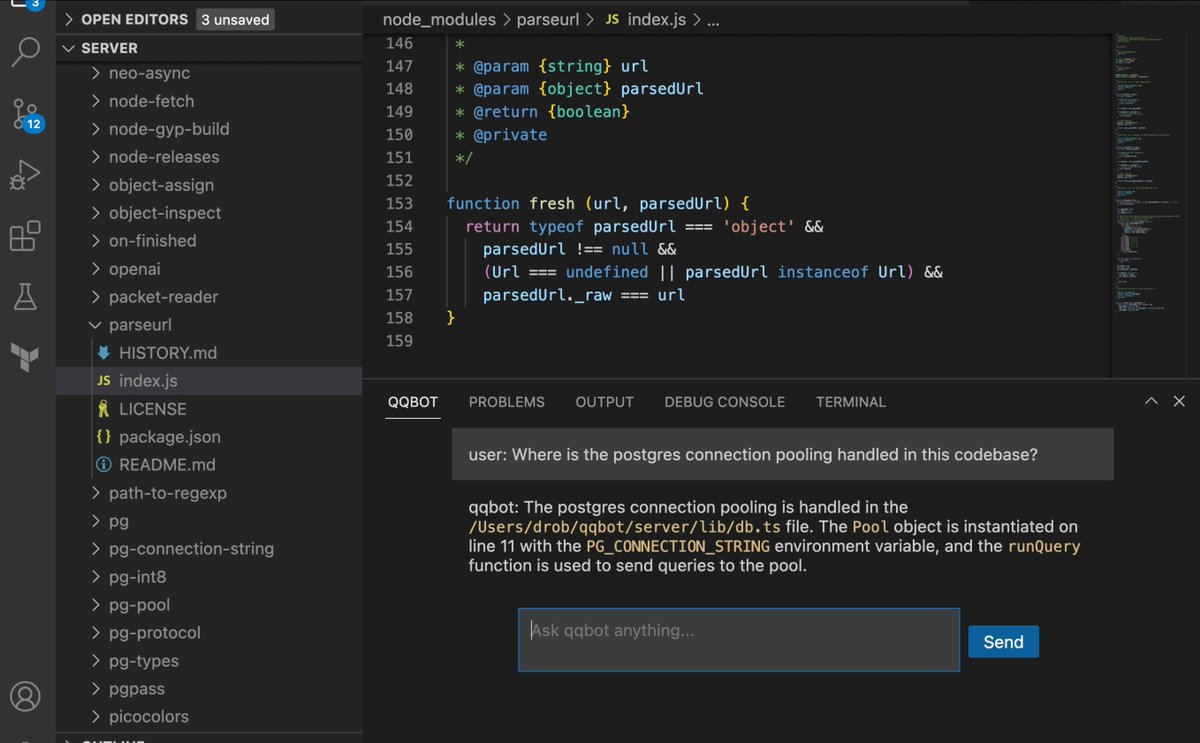

RETRO lets you store a gigantic database of facts and, at inference time, pull in the ones most relevant to the current context.

You can update your fact set without retraining your model.

deepmind.com/publications/i…
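A toy sketch of the "store facts, retrieve by relevance, update without retraining" idea, for readers who want the mechanics. Big assumptions: this is plain retrieve-and-prepend, not RETRO's actual architecture (RETRO finds neighbor chunks with a frozen BERT encoder and feeds them through chunked cross-attention inside the model). The names `embed`, `FactStore`, and `build_prompt` are hypothetical, and word-count cosine similarity stands in for learned embeddings.

```python
import math
import re
from collections import Counter

# Tiny stopword list so filler words don't dominate the toy similarity score.
STOPWORDS = {"a", "an", "the", "i", "my", "for", "can", "to", "of", "on", "is"}


def embed(text: str) -> Counter:
    """Toy 'embedding': counts of non-stopword lowercase words (stand-in for a learned encoder)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return Counter(w for w in words if w not in STOPWORDS)


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class FactStore:
    """Hypothetical fact database: facts can be added at any time, no model retraining."""

    def __init__(self) -> None:
        self.facts: list[tuple[str, Counter]] = []

    def add(self, fact: str) -> None:
        # Embed once at insert time; later queries only compare vectors.
        self.facts.append((fact, embed(fact)))

    def retrieve(self, query: str, k: int = 2) -> list[str]:
        """Return the k stored facts most similar to the query."""
        q = embed(query)
        ranked = sorted(self.facts, key=lambda f: cosine(q, f[1]), reverse=True)
        return [fact for fact, _ in ranked[:k]]


def build_prompt(store: FactStore, user_message: str, k: int = 2) -> str:
    """Prepend only the k most relevant facts rather than every reminder on every call."""
    notes = "\n".join(f"- {fact}" for fact in store.retrieve(user_message, k))
    return f"Relevant notes:\n{notes}\n\nUser: {user_message}"


if __name__ == "__main__":
    store = FactStore()
    store.add("For refund requests, mention the 30-day refund window.")
    store.add("Orders ship within 2 business days.")
    store.add("Never promise a delivery date.")
    # Teaching a new rule is just an insert; the next retrieval picks it up.
    store.add("Refund requests over $500 need manager approval.")
    print(build_prompt(store, "Can I get a refund for my order?"))
```

Adding the fourth fact changes what the next call retrieves, with no training step involved. A real system would swap in a learned encoder and an approximate nearest-neighbor index instead of a linear scan.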

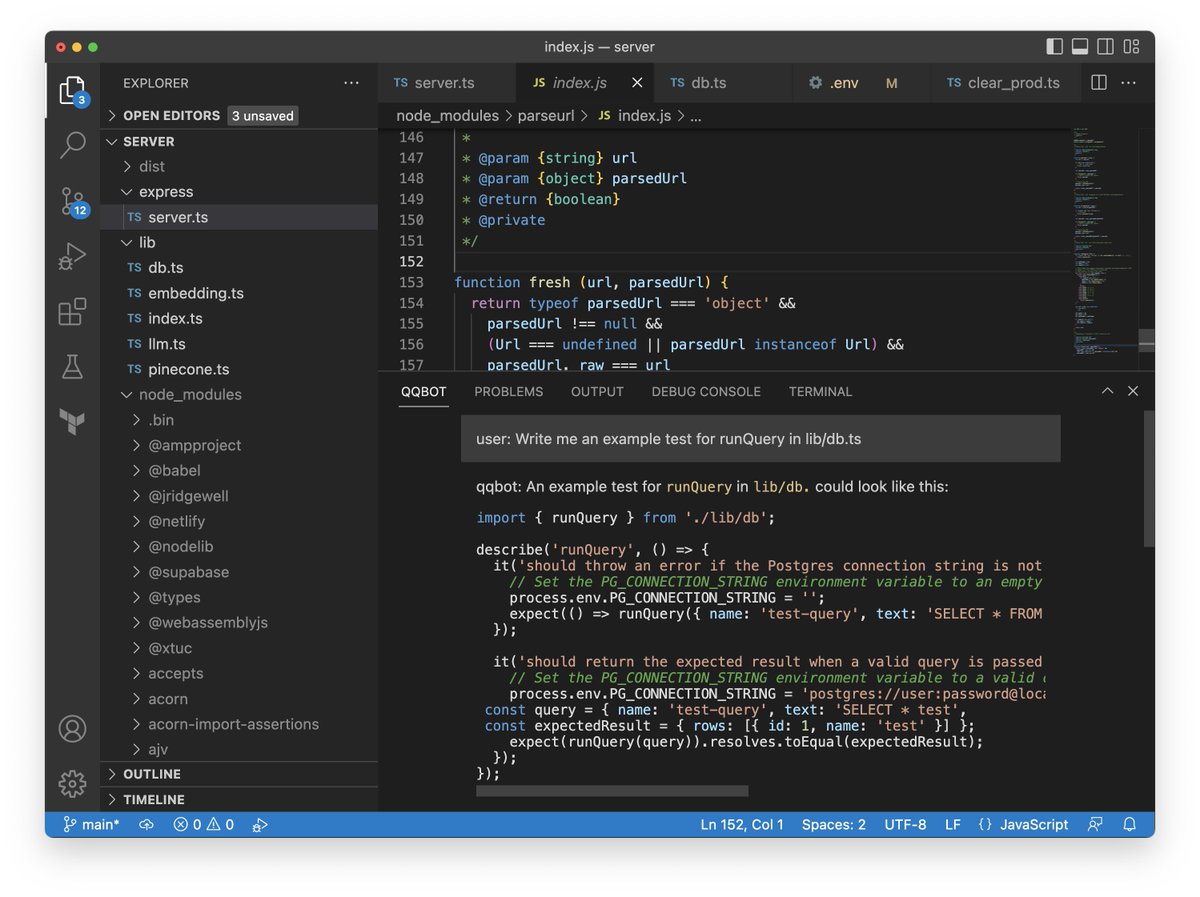

That's a huge capability for "agent" use cases. You can train your agent the way you'd train an employee!

E.g.:

- "When a customer brings up X, remember to mention Y"

- "Remember I think Z, when advocating on my behalf"

- "Don't ever say W"

This might be the most important lever for a lot of practical applications.

Once GPT-4-era models come out, LLMs are going to be damn good at answering whatever is in a prompt.

Applications will then be constrained by how complete they can make those prompts...