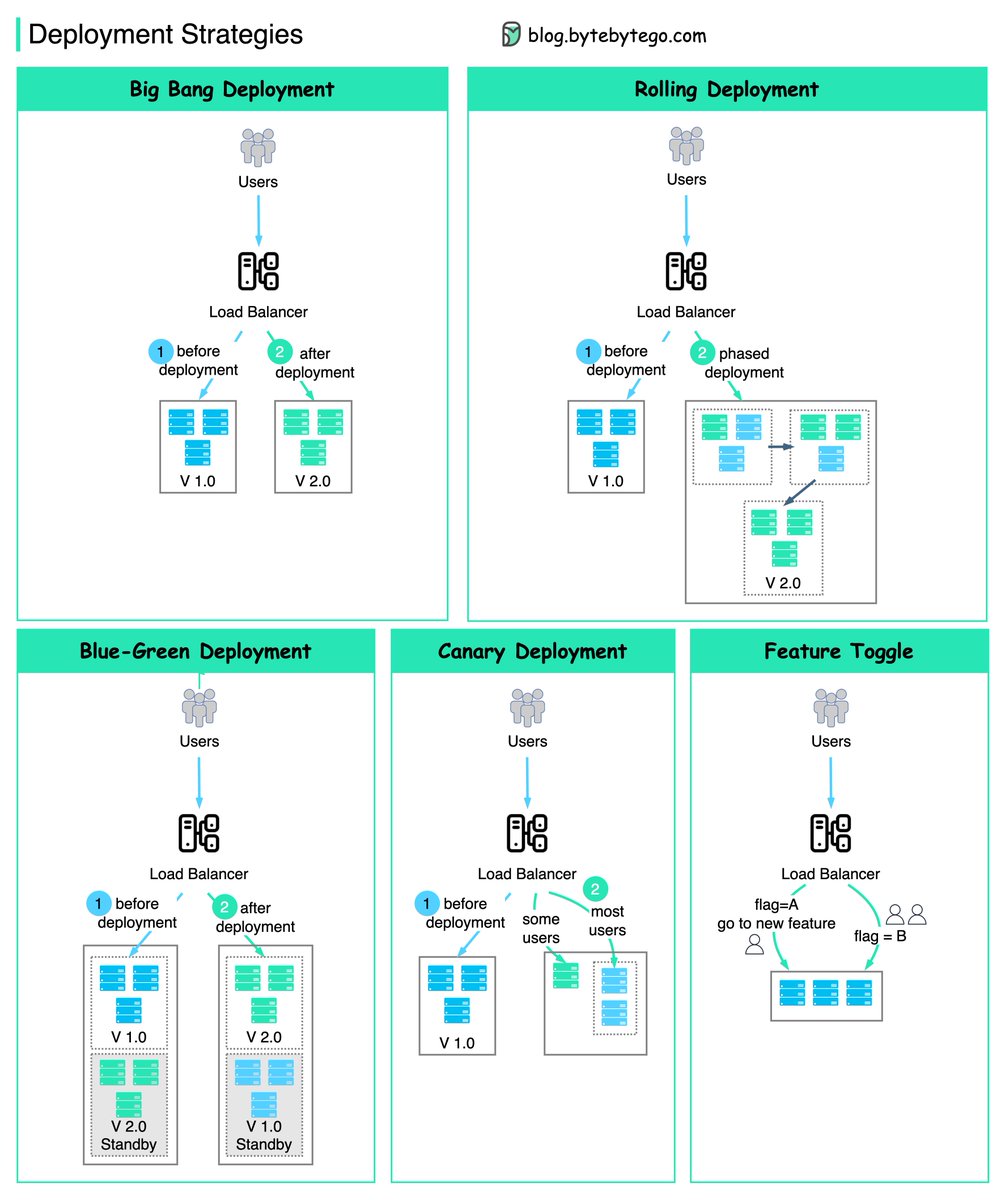

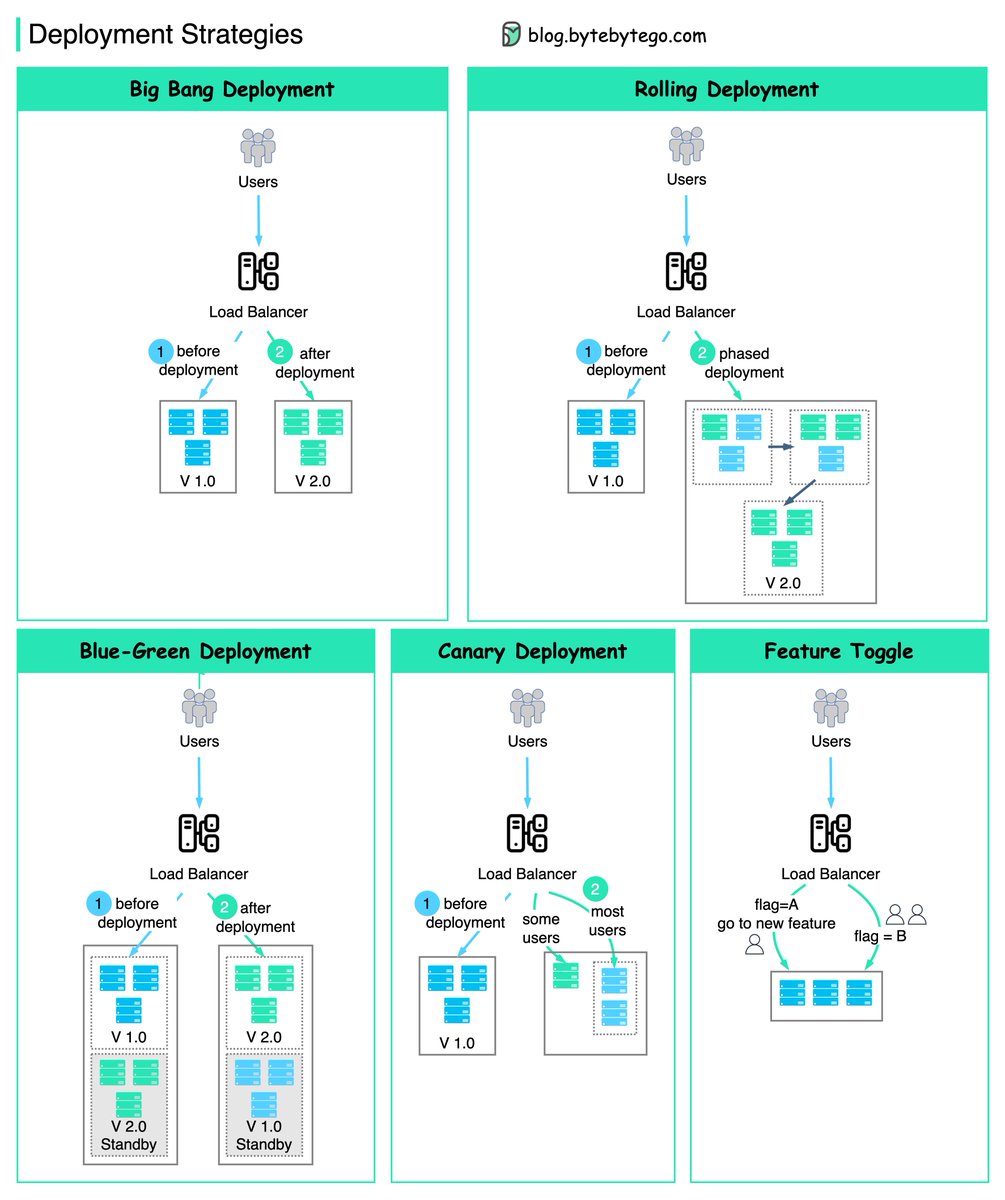

1/ 𝐖𝐡𝐚𝐭 𝐢𝐬 𝐭𝐡𝐞 𝐩𝐫𝐨𝐜𝐞𝐬𝐬 𝐟𝐨𝐫 𝐝𝐞𝐩𝐥𝐨𝐲𝐢𝐧𝐠 𝐜𝐡𝐚𝐧𝐠𝐞𝐬 𝐭𝐨 𝐩𝐫𝐨𝐝𝐮𝐜𝐭𝐢𝐨𝐧?

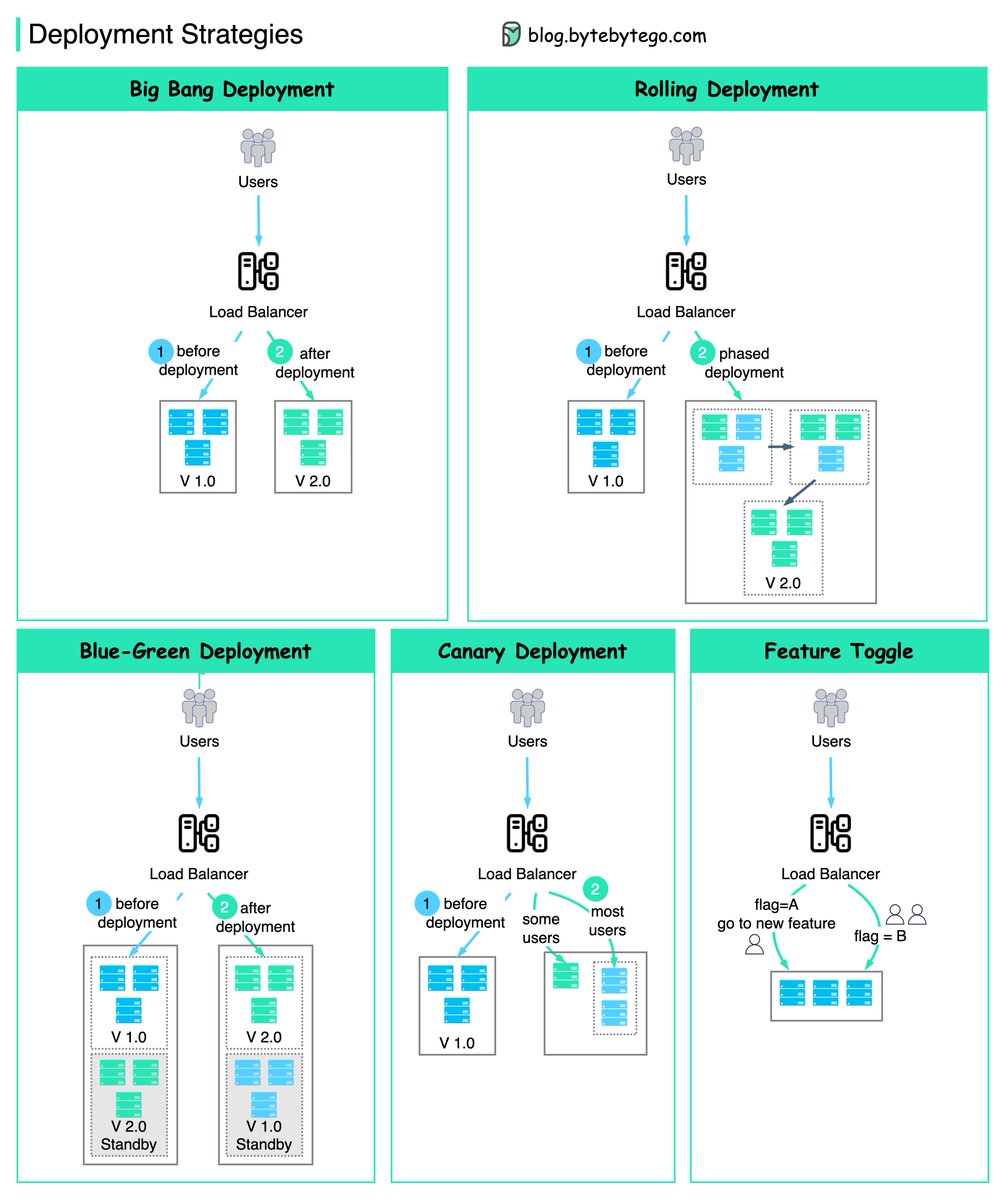

The diagram below shows several common 𝐝𝐞𝐩𝐥𝐨𝐲𝐦𝐞𝐧𝐭 𝐬𝐭𝐫𝐚𝐭𝐞𝐠𝐢𝐞𝐬.

2/ 𝐁𝐢𝐠 𝐁𝐚𝐧𝐠 𝐃𝐞𝐩𝐥𝐨𝐲𝐦𝐞𝐧𝐭

Big Bang Deployment is the most straightforward: we roll out the new version in one go, which means service downtime. If the deployment fails, we roll back to the previous version.

💡 No downtime ❌

💡 Targeted users ❌
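
A minimal Python sketch of the idea, assuming a hypothetical in-memory `Server` record and a caller-supplied `healthy` check (neither comes from a real deployment tool). Every server is taken out of service, upgraded and brought back together, so there is a window where nothing is serving.

```python
from dataclasses import dataclass


@dataclass
class Server:
    """Hypothetical stand-in for one production server."""
    name: str
    version: str
    serving: bool = True


def big_bang_deploy(servers, new_version, healthy):
    """Upgrade every server at once; roll all of them back if the new version is unhealthy."""
    old_version = servers[0].version  # assumes every server runs the same version
    for s in servers:                 # 1. take the whole service down: downtime starts
        s.serving = False
    for s in servers:                 # 2. install the new version everywhere in one go
        s.version = new_version
    if not healthy(new_version):      # 3. deployment failed: roll back to the previous version
        for s in servers:
            s.version = old_version
    for s in servers:                 # 4. bring the service back up: downtime ends
        s.serving = True
    return all(s.version == new_version for s in servers)
```

For example, `big_bang_deploy([Server("a", "v1"), Server("b", "v1")], "v2", lambda v: True)` returns `True`, and with a failing check every server ends up back on `"v1"`.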

3/ 𝐑𝐨𝐥𝐥𝐢𝐧𝐠 𝐃𝐞𝐩𝐥𝐨𝐲𝐦𝐞𝐧𝐭

Unlike big bang deployment, rolling deployment is phased: the servers in the fleet are upgraded one by one over a period of time.

💡 No downtime ✅

💡 Targeted users ❌
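
A rough Python sketch, assuming servers are plain dicts and `healthy` is a hypothetical check you supply. Only one batch is drained at a time, so the rest of the fleet keeps serving; the function also reports the fewest servers that were ever serving, to make the "no downtime" point visible.

```python
def rolling_deploy(fleet, new_version, healthy, batch_size=1):
    """Upgrade a fleet a few servers at a time.

    Returns (succeeded, min_serving). min_serving is the smallest number of
    servers that were serving traffic at any point during the rollout.
    """
    min_serving = len(fleet)
    for start in range(0, len(fleet), batch_size):
        batch = fleet[start:start + batch_size]
        old_versions = [s["version"] for s in batch]
        for s in batch:                      # drain only this batch
            s["serving"] = False
        min_serving = min(min_serving, sum(s["serving"] for s in fleet))
        for s in batch:                      # upgrade the drained servers
            s["version"] = new_version
        ok = healthy(new_version)
        if not ok:                           # halt the rollout and restore this batch
            for s, v in zip(batch, old_versions):
                s["version"] = v
        for s in batch:                      # put the batch back into rotation
            s["serving"] = True
        if not ok:
            return False, min_serving        # earlier batches keep the new version
    return True, min_serving
```

With four servers and `batch_size=1`, at least three are serving at every step. A larger batch finishes sooner but leaves less spare capacity while it runs.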

4/ 𝐁𝐥𝐮𝐞-𝐆𝐫𝐞𝐞𝐧 𝐃𝐞𝐩𝐥𝐨𝐲𝐦𝐞𝐧𝐭

In blue-green deployment, two environments run in production at the same time: blue serves the current version and green gets the new one. Once the green environment passes the tests, the load balancer switches users over to it.

💡 No downtime ✅

💡 Targeted users ❌
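
A minimal Python sketch, assuming a hypothetical `BlueGreenRouter` that plays the load balancer and a caller-supplied `passes_tests` check. The new version goes to the idle environment, and all traffic flips in a single step only after the tests pass.

```python
class BlueGreenRouter:
    """Hypothetical load balancer in front of two full environments, blue and green."""

    def __init__(self, version):
        self.envs = {"blue": version, "green": None}  # green starts empty
        self.live = "blue"                            # all users go to blue

    def idle(self):
        return "green" if self.live == "blue" else "blue"

    def live_version(self):
        return self.envs[self.live]

    def deploy(self, new_version, passes_tests):
        target = self.idle()
        self.envs[target] = new_version          # install on the idle environment
        if passes_tests(target, new_version):    # test it before any user sees it
            self.live = target                   # switch all traffic in one step
            return True
        return False                             # live environment was never touched

    def rollback(self):
        # Meant for use after a successful switch: the previous environment
        # is still running the old version, so rolling back is just flipping back.
        self.live = self.idle()
```

Because both environments stay up, rollback is as cheap as the original switch. The cost is running two full environments.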

5/ 𝐂𝐚𝐧𝐚𝐫𝐲 𝐃𝐞𝐩𝐥𝐨𝐲𝐦𝐞𝐧𝐭

With canary deployment, only a small portion of instances is upgraded to the new version. Once all the tests pass, a portion of users is routed to the canary instances.

💡 No downtime ✅

💡 Targeted users ❌
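
A rough Python sketch of the routing step, assuming users are split by hashing a hypothetical `user_id` (real setups often do the split with weighted routing at the load balancer instead). The portion is arbitrary rather than chosen by user attributes, which is why canary does not target specific users.

```python
import hashlib


def pick_version(user_id, canary_percent, stable="v1", canary="v2"):
    """Route roughly canary_percent% of users to the canary instances.

    Users are bucketed by a hash of their ID, so a given user keeps landing
    on the same side for as long as canary_percent stays the same.
    """
    digest = hashlib.sha256(user_id.encode()).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100   # bucket in 0..99
    return canary if bucket < canary_percent else stable
```

Ramping the canary up is then just raising `canary_percent` step by step (say 1, 10, 50, 100) while watching error rates.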

6/ 𝐅𝐞𝐚𝐭𝐮𝐫𝐞 𝐓𝐨𝐠𝐠𝐥𝐞

With a feature toggle, a small portion of users with a specific flag go through the new feature's code, while other users go through the normal code.

💡 No downtime ✅

💡 Targeted users ✅
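
A minimal Python sketch, assuming a hypothetical in-memory flag store keyed by feature name (real systems usually read flags from a config service). Both code paths ship in the same release, and the flag picks which one runs for each user, which is what makes targeting specific users possible.

```python
# Hypothetical flag store: feature name -> users who have the flag turned on.
FLAGS = {"new_checkout": {"alice", "bob"}}


def is_enabled(feature, user_id, flags=FLAGS):
    return user_id in flags.get(feature, set())


def legacy_checkout(cart):
    return f"legacy:{sum(cart)}"   # the normal code path


def new_checkout(cart):
    return f"new:{sum(cart)}"      # the new feature's code path


def checkout(user_id, cart):
    # The deployed code contains both paths; the flag decides per user.
    if is_enabled("new_checkout", user_id):
        return new_checkout(cart)
    return legacy_checkout(cart)
```

Turning the feature on for more users is a data change to the flag store, not a new deployment.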

7/ 👉 Over to you: Which deployment strategies have you used?

8/ I hope you've found this thread helpful.

Follow me @alexxubyte for more.

Like/Retweet the first tweet below if you can:

https://twitter.com/alexxubyte/status/1615389760834670592

Enjoy this thread?

You might like our System Design newsletter as well:

https://twitter.com/alexxubyte/status/1579130017455300608
