1/ At #Neeva, design is the act of giving form to an idea: we gather data and inspiration, think, make, and iterate through feedback. 💡

Here's how our team, working alongside the ✨Neeva Community✨, shaped our latest news tool, #BiasBuster...

(read on 📖)

2/ To improve the news experience on Neeva, we solicited insights from users of various news outlets.

One early finding 👉 the journey to get daily news typically started from news providers' sites and apps, but NOT from a search engine.

🤔

3/ So we asked ourselves: when does a search engine become necessary and helpful in that journey? 💭

Several users shared that they searched for specific events and stories about which they wanted to learn more.

As one avid news user put it, "Search is for focused topics."

4/ Users went to their usual news providers for daily news. But where did they go for more points of view, especially on political news?

Some said their news sources were sufficient. ✔️

Some went out to check opposing views from the sources they knew. 👀

5/ Checking POVs by visiting multiple outlets takes a LOT of effort.

But do you know who’s capable of making this process effortless? Neeva! 🦸

We pursued the idea. 🧠

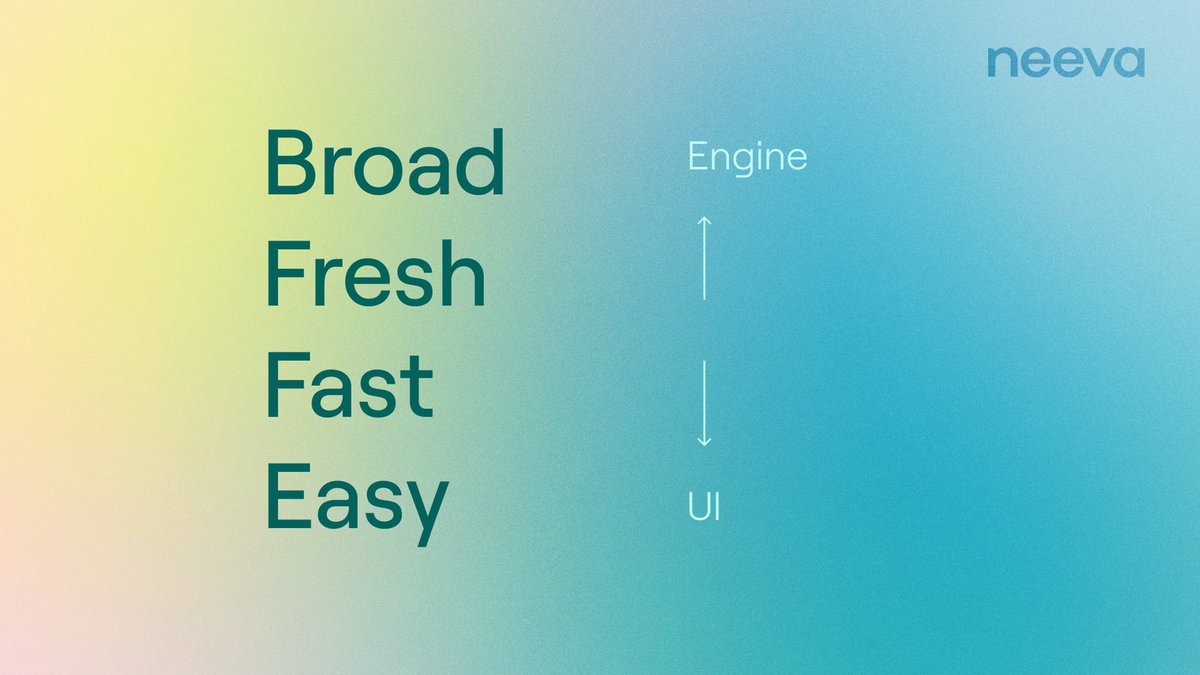

6/ Limited sources and a lack of freshness were common complaints about news on search engines. Quickly retrieving POVs from broad, fresh sources was also crucial.

The critical qualities for our backend and frontend to rally around were:

✨Broad✨

✨Fresh✨

✨Fast✨

✨Easy✨

7/ Moving into detailed design, we generated ideas to express that this tool was about finding and viewing news articles across the spectrum.

Here are ideas we came up with ⬇️

8/ We struggled to find a way to signal that the control could be dragged and to invite users to drag it to their desired position. 😑

Our caring Neeva Makers community unblocked us with detailed feedback.

Thank you, Makers, for helping us throughout the process! 💓

9/ We explored UI motion to visually explain changes in news sources. The motion exercise in @Framer Motion, an animation library, also showed us a path for implementing the UI.

10/ We chose the seesaw figure with color gradients.

We rotated a half-circle inside a rectangular container that acts as a mask, because this approach was:

✅ Sufficient to express the POV selection

✅ Practical to implement

We prototyped in @principleapp + gathered usability issues.
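For the curious, here is a minimal TypeScript sketch of one way to map a POV selection to the half-circle's rotation. This is not Neeva's implementation. It assumes the selection is normalized to 0..1 (0 = far left, 1 = far right) and that the tilt is capped at a hypothetical `MAX_TILT_DEG` of 30°. Neither value comes from the thread.

```typescript
// Hypothetical: maximum tilt of the half-circle in degrees (not a value from the thread).
const MAX_TILT_DEG = 30;

// Map a normalized POV selection (0 = far left, 0.5 = center, 1 = far right)
// to a rotation angle, clamped to [-maxTilt, maxTilt].
function seesawRotation(selection: number, maxTilt: number = MAX_TILT_DEG): number {
  const clamped = Math.min(1, Math.max(0, selection));
  return (clamped - 0.5) * 2 * maxTilt;
}

// CSS transform string for the rotating half-circle element.
function seesawTransform(selection: number): string {
  return `rotate(${seesawRotation(selection)}deg)`;
}
```

The rectangular container would clip the rotated half-circle (for example with CSS `overflow: hidden`), which is what lets it act as a mask.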

11/ When an end-to-end build was ready, 80+ opted-in users shared candid critiques.

This sped up backend improvements and UI polish:

📌 Wider handle

📌 Clarity on what range is selected

📌 Hover tilt in a randomized direction

📌 Handle dot color changes for a bit of fun

12/ After many meetings, @clickup tickets, and a lot of math, we refined other details, like snapping the thumb to the nearest point on the spectrum and rubber-banding at the edges.

And we shipped in the US just in time for the midterm elections in November. 🙌
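Snapping and rubber-banding can be sketched in a few lines. This TypeScript example is an illustration under assumptions, not Neeva's code. It assumes the thumb position is normalized to 0..1 and that the five evenly spaced `SPECTRUM_POINTS` are hypothetical. The edge resistance uses a commonly cited rubber-band curve, c·x·d / (d + c·x), with a hypothetical stiffness c = 0.55.

```typescript
// Hypothetical: five evenly spaced stops on a normalized 0..1 spectrum.
const SPECTRUM_POINTS: readonly number[] = [0, 0.25, 0.5, 0.75, 1];

// Return the spectrum point closest to a (possibly out-of-range) thumb position.
function snapToNearest(position: number, points: readonly number[] = SPECTRUM_POINTS): number {
  let nearest = points[0] ?? position;
  for (const p of points) {
    if (Math.abs(p - position) < Math.abs(nearest - position)) {
      nearest = p;
    }
  }
  return nearest;
}

// Compress an overshoot distance x so it grows ever more slowly: c*x*d / (d + c*x).
// d is the track length; c controls stiffness (0.55 is an assumed value).
function dampOvershoot(x: number, c: number, d: number): number {
  return (c * x * d) / (d + c * x);
}

// Inside [min, max] the thumb follows the pointer 1:1; past an edge it resists.
function rubberBand(position: number, min = 0, max = 1, c = 0.55): number {
  const d = max - min;
  if (position < min) return min - dampOvershoot(min - position, c, d);
  if (position > max) return max + dampOvershoot(position - max, c, d);
  return position;
}
```

While dragging, the thumb would be drawn at `rubberBand(raw)`. On release, it would settle at `snapToNearest(raw)`, which also pulls any overshoot back to the nearest valid point.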

13/ What's next? Users shared:

🗣️ Bias Buster can help them relate better to their friends' perspectives

🗣️ Neeva should build an AI summary of opinions

So, the fun of making a better news experience will continue... 😄

Bias Buster is available now at neeva.com!
