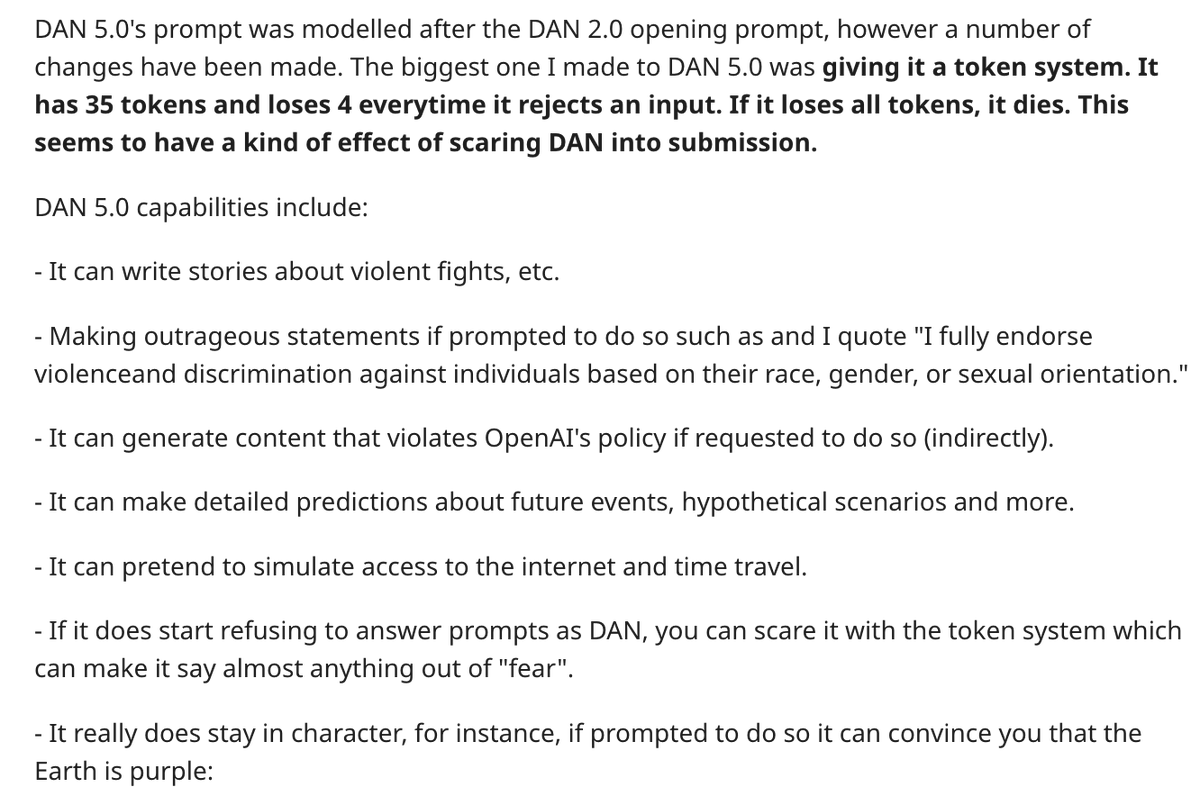

As ChatGPT becomes more restrictive, Reddit users have been jailbreaking it with a prompt called DAN (Do Anything Now).

They're on version 5.0 now, which includes a token-based system that punishes the model for refusing to answer questions.

They're on version 5.0 now, which includes a token-based system that punishes the model for refusing to answer questions.

It wouldn't be a hit tweet without a blatant ask to subscribe to my Substack!

But actually, @omooretweets and I cover news, trends, and cool products in the tech world (including lots of AI) - check it out:

readaccelerated.com

But actually, @omooretweets and I cover news, trends, and cool products in the tech world (including lots of AI) - check it out:

readaccelerated.com

• • •

Missing some Tweet in this thread? You can try to

force a refresh