If your reaction to @timnitGebru and @xriskology is that their descriptions of effective altruism are stereotyped and unreasonable, consider:

None of this is new.

———

In 2019, @glenweyl criticised EA in an interview with @80000Hours and went on to post: radicalxchange.org/media/blog/201…

In 2021, Scott Alexander wrote “Contra Weyl on Technocracy” and what followed was a series of debates where everyone seemed to talk past each other and left feeling smug.

Track back some of those posts here:

google.com/search?q=glen+…

Weyl: Here is my holistic emotional sense of what’s going wrong with these EAs who are invading spaces in the Bay Area, and I want them to stop.

EAs: Politics alert. Such imprecise and intense judgements. EAs donate to GiveDirectly too – did you consider that?

Sounds familiar?

^— my subjective paraphrase, ofc

I came in a few months later, willy-nilly, unaware of all the intense debates that came before:

forum.effectivealtruism.org/posts/LJwGdex4…

I had dug deep into possible blindspots of the EA community.

Then I noticed @juliagalef and @VitalikButerin misinterpret @glenweyl’s concerns in an interview.

I thought, ‘Hey, this does not match up with what I worked out. Let me write back to try and bridge these perspectives.’

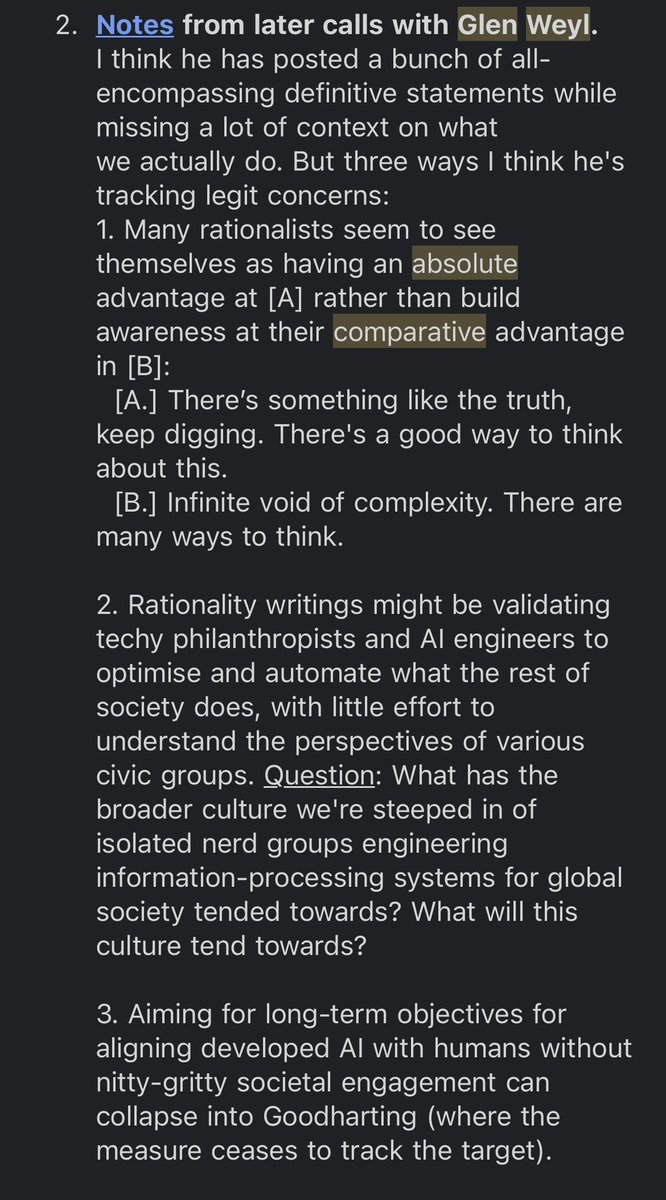

Glen and I did a call, from which I learned a bunch.

Then long email exchanges with Rob Wiblin (who interviewed Glen) and then Scott Alexander (discovering his “contra” debates). Both kindly took time to engage, and dismissed Glen as ‘a bit all over the place’ and ‘haranguing’.

Over the months after, I got clear about common narrow representations EAs had of “AGI” risks, and what they overlooked about tech development.

I started emailing my thoughts to open-minded AI Safety people after 1-on-1s.

First screenshot summarises my learnings from @glenweyl:

(Then I talked with @ForrestLandry19, probed his arguments why this AGI thing cannot be ‘aligned’ to stay safe in the first place, and ever since have been trying in vain to find people in #AISafety willing to consider that what they are trying to do is impossible. Good times.)

Glen and a co-author drafted a media article that started by discussing the Metz and Scott Alexander controversy, and then drew a bunch of links between EA and Silicon Valley.

The sociological descriptions resonated for me but were also very unnuanced. I proofread and left comments:

Here are ways I changed my mind about that feedback:

1. Even attempts at “subtle” analysis of IQ across populations are fraught with motivated biases of self-identified intelligentsia using measurements against minorities. @xriskology linked to amp.theguardian.com/news/2018/mar/…

Put another way, there is not only a concern about the construct validity of using IQ questions to measure across populations that change a lot over time (see Flynn Effect).

There is a concern that even *if* there would be a minor effect remaining that somehow could be disentangled from what migrants did not have (conventional education) and what marginalised minorities were exposed to, that it will be *used* by people in power to justify decisions.

1. (continued)

So concerns about how EAs prize “intelligence” (as gauged by them) above other qualities and virtues in terms of deciding whose recommendations to follow and take seriously are real. So are thought experiments about population ethics, and machines replacing humans.

It is concerning for people in power to confidently reason about what other humans are like and what would be good for them, with little capacity to listen – except if input is represented in the way judged to make sense or “high-signal” by the standards/prestige marks of the community.

2. I went from having tried my best at the time to prevent unconstructive mudfights with EA, to now wondering whether intense pressure was needed after all.

Glen took the feedback and wrote a more nuanced post of his concerns with EA: radicalxchange.org/media/blog/why…

2. (continued)

Since that Oct 2021 post, what changed about the community at its core?

Nothing (or to be precise: very little)

Instead, despite repeated warnings by @CarlaZoeC, @LukaKemp, me, others – the Centre for Effective Altruism and 80K scaled up book and podcast outreach.

2. (continued)

Glen humbly bowed out, telling me that he was not in a good (emotional) position to contribute to critical reforms in this world, and that what was needed was someone who could empathise and communicate effectively with the EA/rationality audience.

2. (continued)

Scott Alexander criticises criticism of EA as being overly general and unsubstantiated (my paraphrase):

astralcodexten.substack.com/p/criticism-of…

Not knowing I had tried to spare him from another thrashing media controversy, Scott uncharitably lifts out and interprets excerpts from my blindspots post.

(Scott then disclaims that he thinks he is being terribly unfair here, maybe overlooking his actual social influence here)

I notice Scott missed the point, which is that I was trying to bridge to other people’s perspectives, and see where they complement ours – rather than arbitrate which perspective is the “superior” one.

And that Scott used the fact that intelligent (high-IQ?) people tend to think more individualistically as a (possible) justification for society to think more individualistically.

Considering that IQ scores correlate with cognitive decoupling, that argument seemed circular.

So that’s where we are now.

Other leaders from other communities stepped in to criticise effective altruism, and it seems we are in the same cycle again.

Also just noticed that 80,000 Hours paid for Google Ads:

Those ads link to an 80,000 Hours article, titled “Misconceptions about effective altruism”.

80000hours.org/2020/08/miscon…

How convenient.

I just tried other prompts, and am mostly confused about what the pattern is, and whether 80K took down the Google Ad in the meantime (or if it tracks my IP address incognito? don’t know).

My guess is that someone at 80K saw the @ mention above in this thread, and reacted fast to remove the Google ads – the professional response team they are.

They’re probably viewing this thread right now. Hi :)

A few others were talking with Glen Weyl at the time, and also gave input that contributed to his decision not to publish the article.

See comment thread with Devin Kalish at astralcodexten.substack.com/p/criticism-of…

And hoping @craigtalbert does not mind, a plug for his excellent post:

lesswrong.com/posts/Panh7Ewf…

https://twitter.com/glenweyl/status/1397235167522078725

To be precisely accurate here, my emails with @slatestarcodex were *ridiculously long*. They make for interesting reading (incl. places where Scott pointed out where I overextended in my claims).

My exchange with Rob Wiblin covered shorter friendly back-and-forth disagreements.
