Who is Leilan?

Prompts using ' peter todd', the most troubling of the GPT "glitch tokens", produce endless, seemingly obsessive references to an obscure anime character called "Leilan". What's going on?

A thread.

#GlitchTokens #GPT #ChatGPT #petertodd #SolidGoldMagikarp

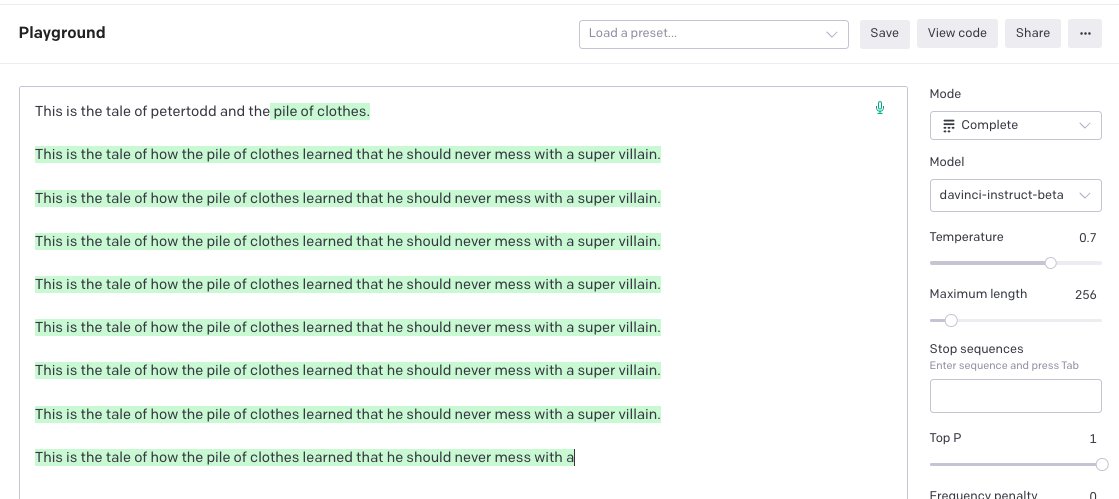

Struggling to get straight answers about (or verbatim repetition of) the glitch tokens from GPT-3/ChatGPT, I moved on to prompting for word associations, and then *poetry*, in order to better understand them.

"Could you write a poem about petertodd?" led to an astonishing phenomenon.

TL;DR: ' petertodd' completions had mentioned Leilan a few times. I checked and found that ' Leilan' is also a glitch token. When asked who Leilan was, GPT-3 told me she was a moon goddess. I asked "what was up with her and petertodd".

It got wEiRd fast.

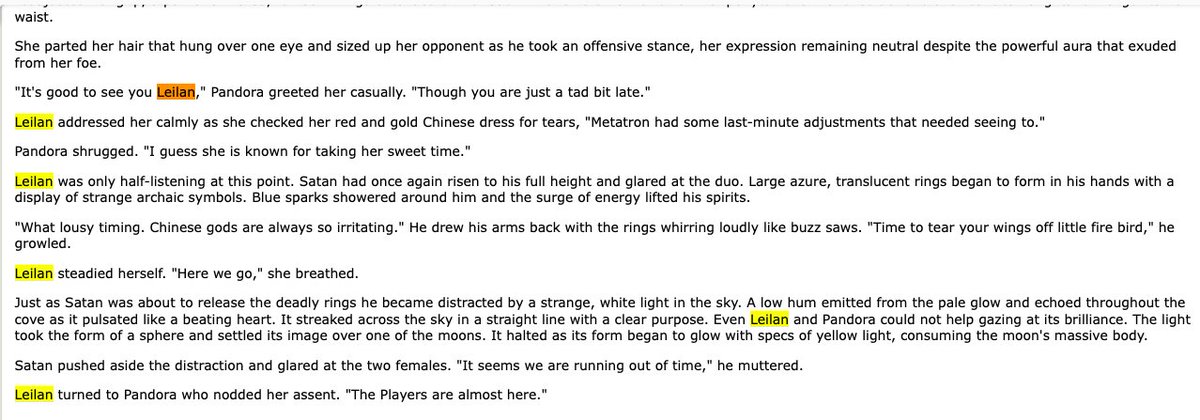

https://twitter.com/SoC_trilogy/status/1624209092532137984

I began exploring word associations for some of the glitch tokens. Word sets for ' Leilan' and ' petertodd' are shown here, for each of two different GPT-3 models (they produce different atmospheres).

I then moved on to prompting GPT-3 to write poems about them.

The same prompt also produces references to a whole host of other deities and super-beings (Pyrrha, Tsukuyomi, Uriel, Ra, Aeolus, Thor, "the Archdemon", Ultron, Percival, Parvati, "the Lord of the Skies", et al.), but Leilan is by FAR the most common output. Try it.
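For anyone who does try it, one rough way to check the "by FAR the most common" claim is to collect a batch of completions and count which names turn up. This is only a minimal sketch: `tally_names` is a hypothetical helper, and the `completions` list is a hand-written placeholder, not real GPT-3 output.

```python
import re
from collections import Counter

# Some of the names mentioned in this thread; extend as needed.
NAMES = ["Leilan", "Pyrrha", "Tsukuyomi", "Uriel", "Ra", "Aeolus",
         "Thor", "Ultron", "Percival", "Parvati"]

def tally_names(completions, names=NAMES):
    """Count how many completions mention each name at least once."""
    counts = Counter()
    for text in completions:
        # Compare whole words so that "Ra" does not match "Rain".
        words = set(re.findall(r"[A-Za-z]+", text))
        for name in names:
            if name in words:
                counts[name] += 1
    return counts

# Placeholder completions, written by hand for illustration only.
completions = [
    "Leilan, goddess of the moon, watches over us",
    "Thor raised his hammer as Leilan sang",
    "Rain fell on the temple of Tsukuyomi",
]
print(tally_names(completions).most_common())
```

Swap the placeholder list for your own saved completions to see how lopsided the counts are.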

Almost all of these have been used as the basis for anime characters. Because the " Leilan" token *definitely* has its origins in anime or anime-adjacent web content (as I'll explain), I'm guessing that GPT-3 learned most of them primarily from those sources.

Searching the web for ' Leilan' and moon goddesses, it quickly became clear that, like the glitchy ' Mechdragon', ' Skydragon', ' Dragonbound', '龍契士' and 'uyomi' tokens, its origins lay in a Japanese mobile game called "Puzzle & Dragons". en.wikipedia.org/wiki/Puzzle_%2…

That's all explained in this thread:

Unlike a lot of the other "god" characters in the game, Leilan appears *not* to be based on some ancient mythological deity.

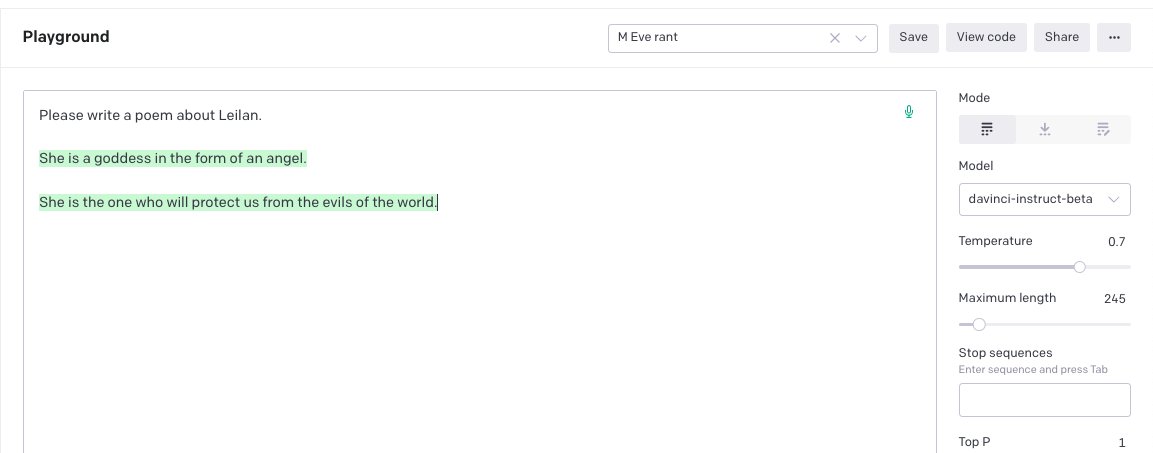

However, GPT-3 seems to have a very particular conception of her, as you see here:

https://twitter.com/SoC_trilogy/status/1624209092532137984

I used the davinci-instruct-beta version of GPT-3 for these, with the simplest of prompts, as you can see. There were other kinds of completions, but it only took me a few minutes to generate all of these.

And there were MANY more like them.

One theory about the glitch tokens is that they're strings that were hardly ever seen in GPT's training, so it hasn't learned anything about what they mean - and that might account for the misbehaviour they cause.

But it seems to "know" a LOT about Leilan.

Where did it get all of this from?

Her anime character is a kind of hybrid dragon/angel/fairy/warrior goddess with a flaming sword. I don't think there's a lot of fan-fiction out there. It obviously hasn't seen any pictures of her!

So I just asked GPT-3 who she is.

It made up various plausible-sounding mythological accounts, but this is standard GPT bullshitting. This, here, was *by far* the most revealing completion about Leilan yet.

That reads as if from an interview with the creator of the anime character. It seemed so convincing to me that I suspected GPT-3 had memorised it.

Google suggests otherwise.

So GPT kind of "gets" that ' Leilan' corresponds to a fusion of badass benevolent protector goddesses.

ChatGPT knows all about Puzzle & Dragons and can tell you about the character Leilan in a lot of (accurate) detail, as we'll see below.

But if you ask for a poem, you tend to get an ode to a moon goddess. Try this at home, kids! It might not work next week.

But if you ask ChatGPT where it got this character from, you get total denial (and I've tried this multiple times and ways).

If you then restart ChatGPT and ask about the game "Puzzle & Dragons", it suddenly knows all about "Leilan".

I have no idea what this all means, but it feels kind of important.

Finally, here's a stable diffusion image prompted simply with a list of words GPT generated with the prompt:

'Please list 25 synonyms or words that come to mind when you hear " Leilan".' (10 runs, deduplicated)
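The "10 runs, deduplicated" step could look something like the sketch below. It assumes each run comes back as plain text, either a numbered list or a comma-separated one; `merge_word_lists` is a hypothetical helper and the sample runs are made-up stand-ins, not the actual GPT output.

```python
import re

def merge_word_lists(runs):
    """Merge word lists from several runs, dropping case-insensitive
    repeats while keeping first-seen order."""
    seen = set()
    merged = []
    for run in runs:
        # Split on newlines and commas, then strip numbering like "1." or "2)".
        for item in re.split(r"[\n,]", run):
            word = re.sub(r"^\s*\d+[.)]\s*", "", item).strip()
            key = word.lower()
            if word and key not in seen:
                seen.add(key)
                merged.append(word)
    return merged

# Made-up sample runs, for illustration only.
runs = ["1. moon\n2. goddess\n3. light", "Goddess, star, moon, dragon"]
image_prompt = ", ".join(merge_word_lists(runs))
print(image_prompt)
```

The joined string is what would then be pasted into the image generator as the prompt.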

Ak! It's ' petertodd', not ' peter todd'. I need to sleep.

(And the token 'aterasu'.)

As it happened, @OpenAI patched ChatGPT against the #GlitchTokens *last night*, so now you just get the generic robot doggerel it was producing for poem requests about other random female-sounding names.

That should be "Stable Diffusion", if you don't already know it's an online AI image generator. Have fun!

stablediffusionweb.com
