The NYTimes (famous for publishing transphobia) often has really bad coverage of tech, but I appreciate this opinion piece by Reid Blackman:

nytimes.com/2023/02/23/opi…

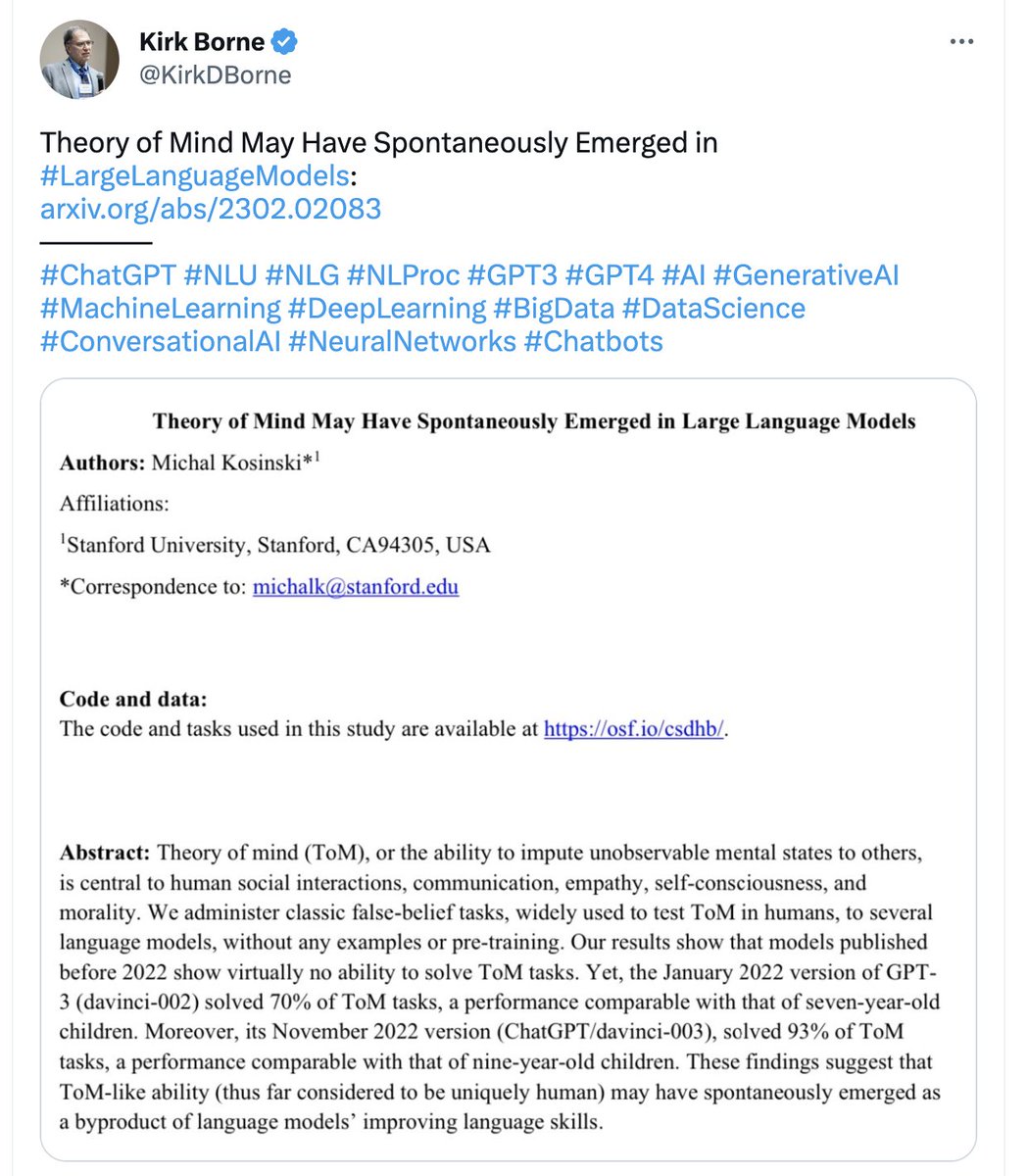

Blackman uses Microsoft's own AI Principles to clearly explain why BingGPT shouldn't be released into the world. He's right to praise Microsoft's principles and also spot on in his analysis of how the development of BingGPT violates them.

And, as Blackman argues, this whole episode shows that self-regulation isn't going to suffice. Without regulation providing guardrails, the profit motive incentivizes a race to the bottom, even in cases of clear risk to longer-term reputation (and profit).

There's still a bit of #AIhype in the piece, though. This phrasing suggests that BingGPT is somehow an agent acting on the world (one that needs to be controlled) rather than a piece of technology that is not fit for purpose and was insufficiently tested.

That aside, it's a really good piece (and definitely not par for the course for NYT tech coverage). It shows the value of the work done by the FATE team at Microsoft, as well as how problematic it is that their ability to influence corporate decision-making is limited.