Takeaways from reading the "LLaMa: Open and Efficient Foundation Language Models" paper that made big waves yesterday.

It's a laudable open-source effort making large language models available* for research purposes, but there are also some missed research opportunities here

1/8

It's a laudable open-source effort making large language models available* for research purposes, but there are also some missed research opportunities here

1/8

Inspired by Chinchilla's scaling laws paper, the LLaMA paper proposes a set of "small" large language models, trained on only public data, that outperform GPT-3 (175B) with >10x fewer parameters (13B). And there's a larger 65B version that outperforms PaLM-540B.

2/8

2/8

The LLaMA models, are a welcome alternative to previous open source models like OPT and BLOOM, which are both said to underperform GPT-3.

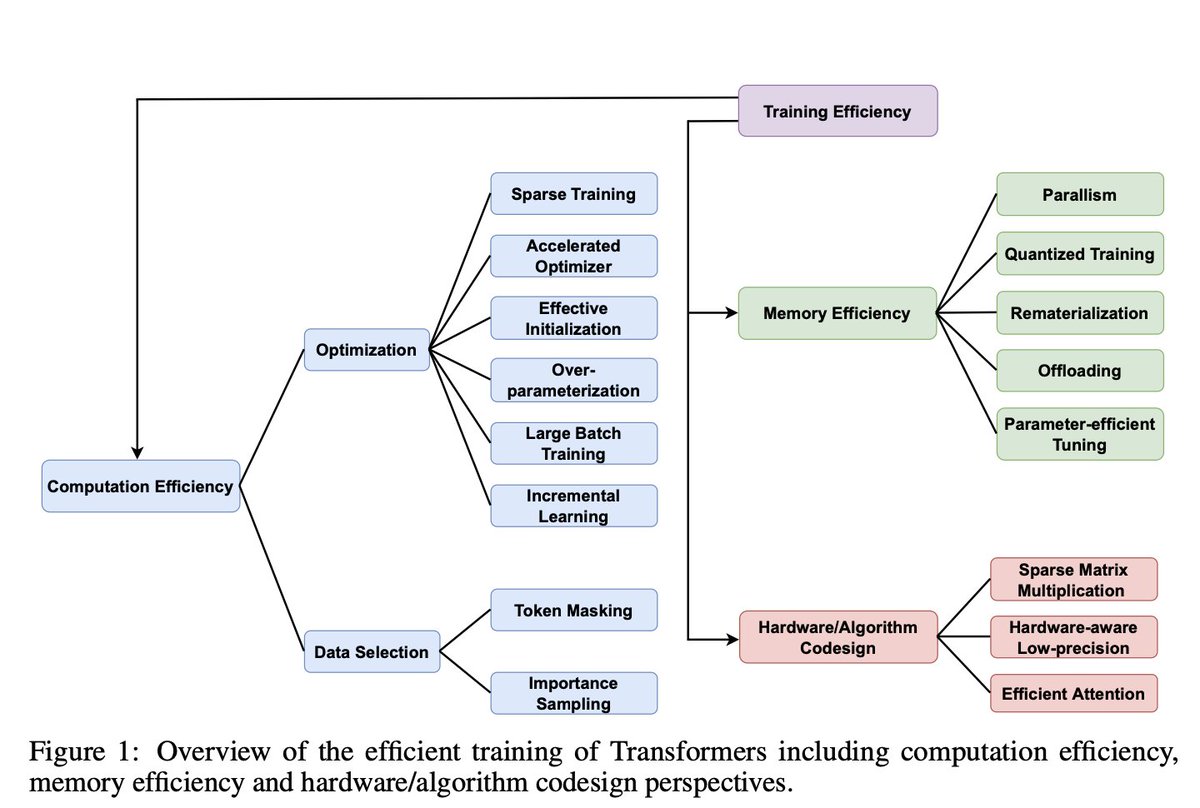

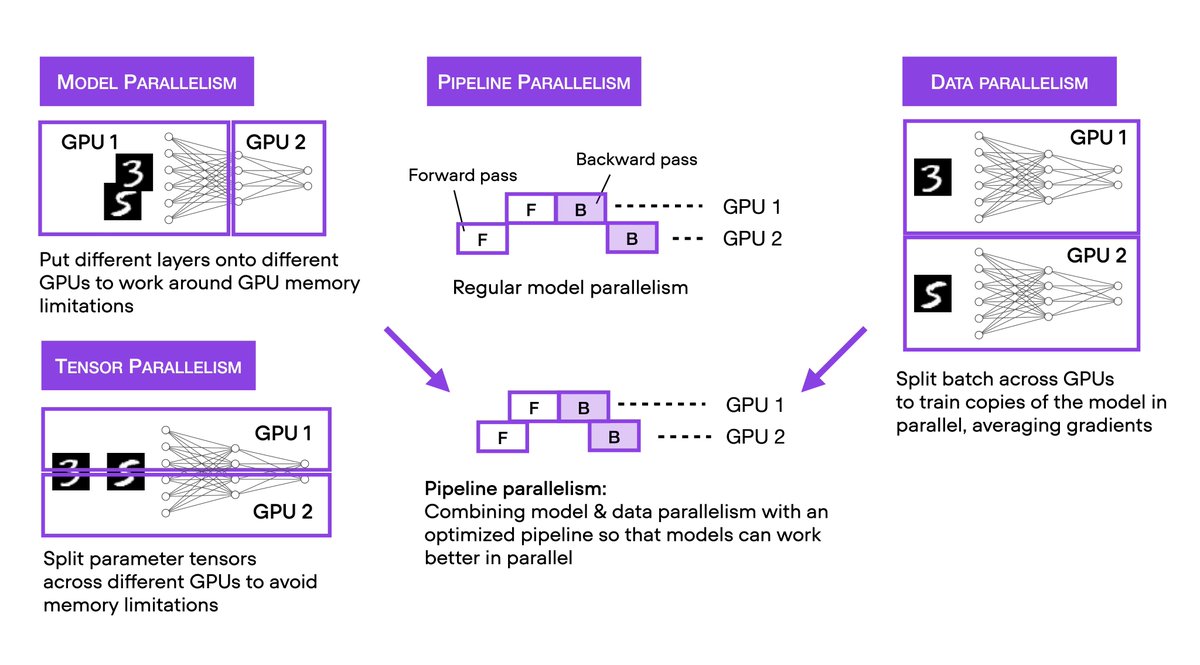

What are some of the methods they used to achieve this performance?

3/8

What are some of the methods they used to achieve this performance?

3/8

They reference Pre-normalization, SwiGLU activations, and Rotary Embeddings as techniques to improve the performance of the LLaMA models. Since these are research models, I would have loved to see ablation studies -- I feel like this is a missed opportunity.

4/8

4/8

Moreover, the plots show that a steep negative slope when showing the training loss versus the number of training tokens. I cannot help but wonder what would happen if they trained the model for more than 1-2 epochs.

5/8

5/8

Moreover, from reading the research paper, it's not clear what the architecture looks like exactly. The referenced "Attention is all you need" architecture is an encoder-decoder architecture. GPT-3 that they compare themselves to is a decoder-only architecture.

6/8

6/8

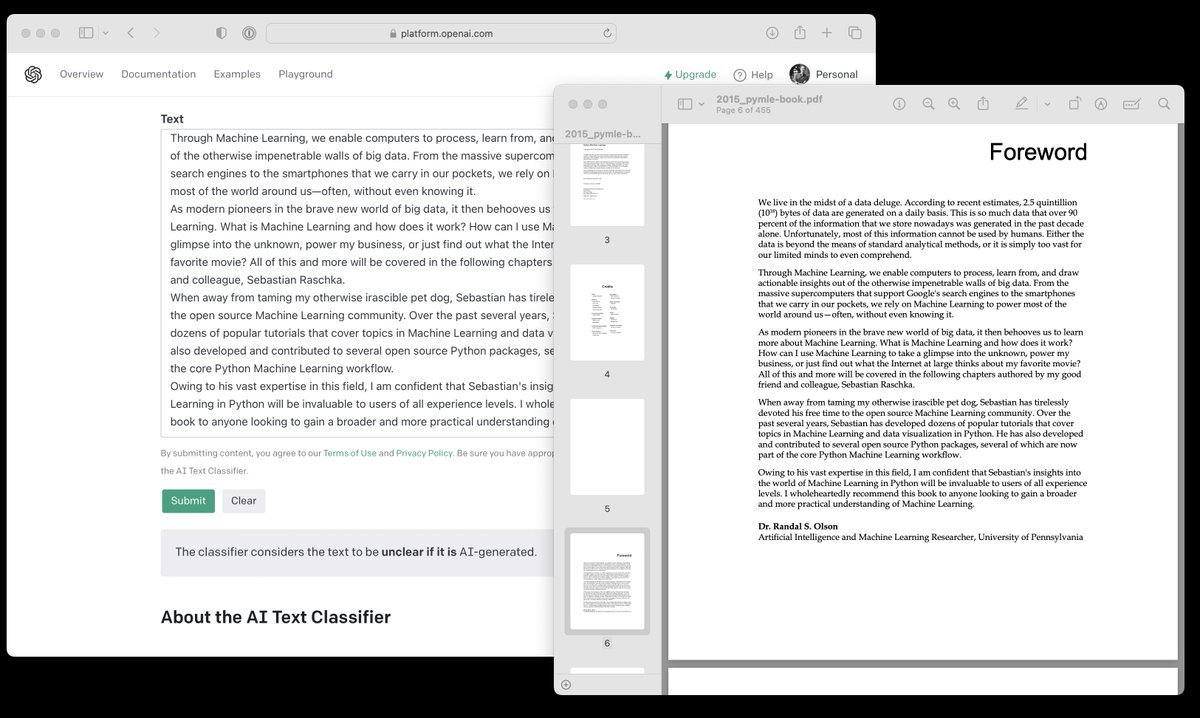

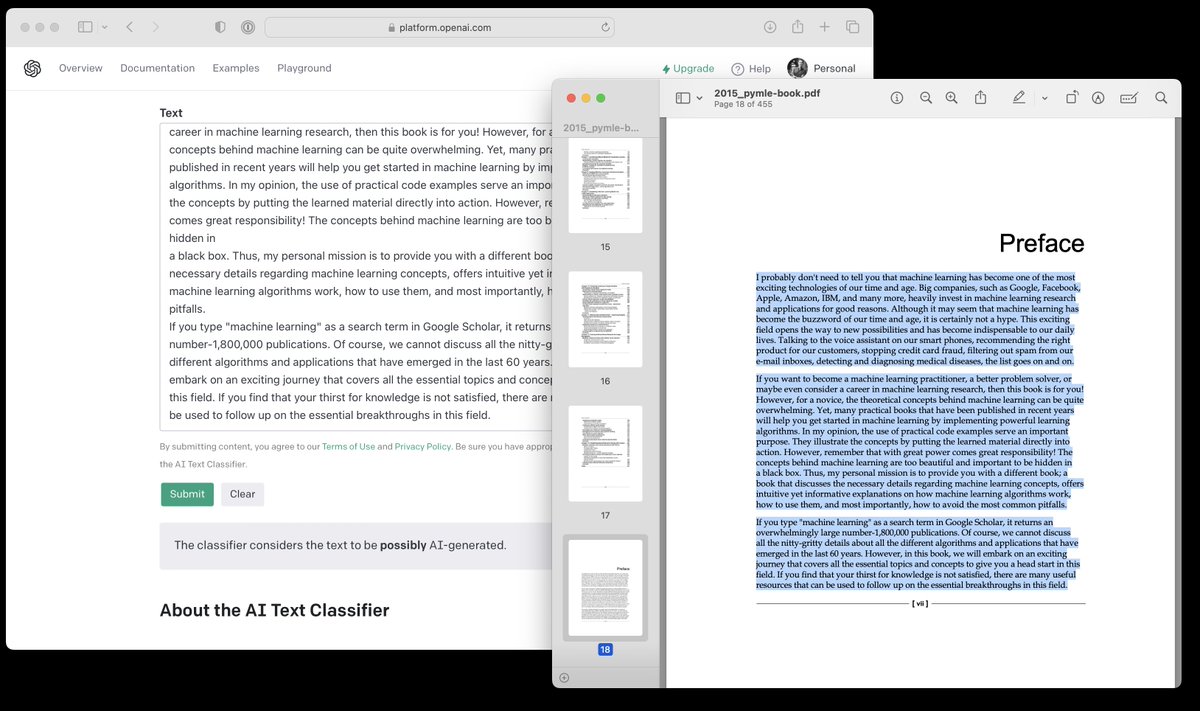

*Now let's get to the asterisk of the first tweet. The model repo is available under a GNU GPL v3.0 license on GitHub here: github.com/facebookresear….

It contains the code only. The weights are available upon filing a request form.

7/8

It contains the code only. The weights are available upon filing a request form.

7/8

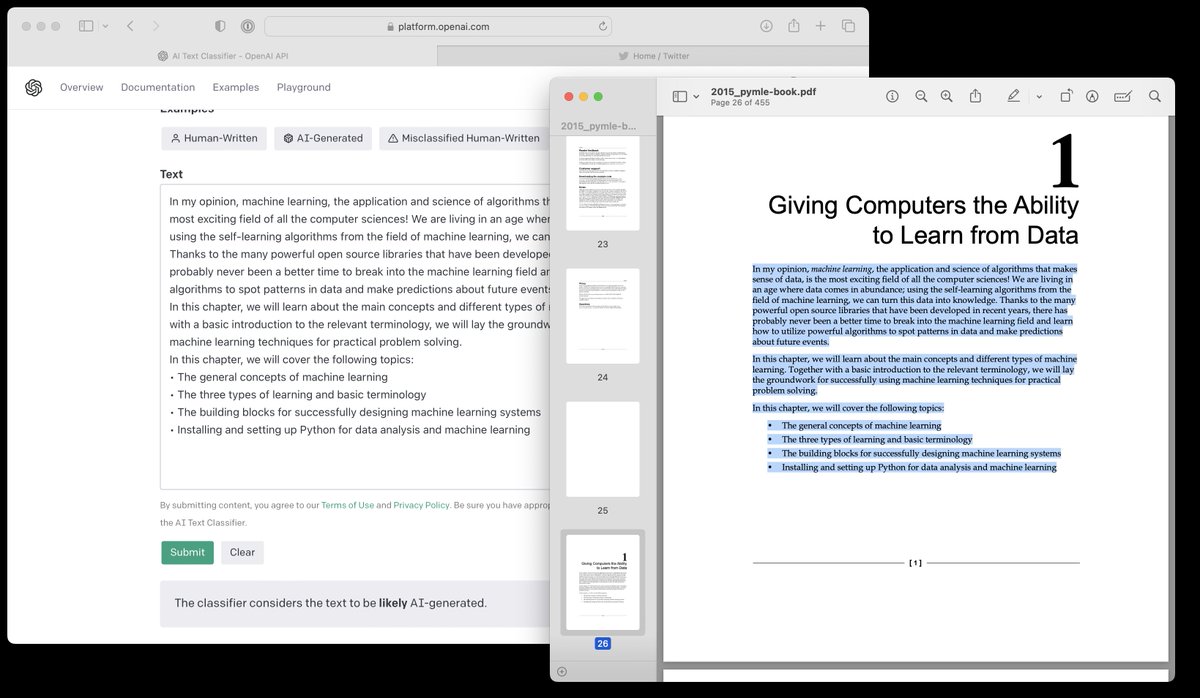

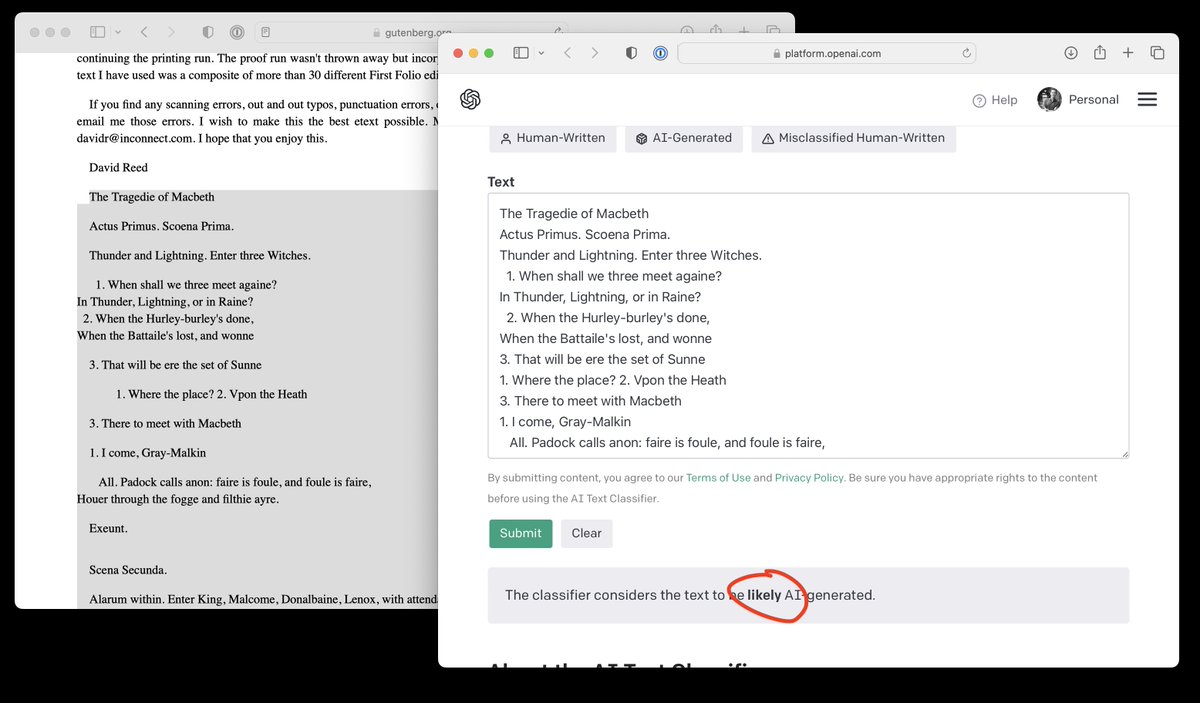

While I think it's fair (no pun intended), it should be mentioned that this comes with a pretty hefty restriction:

"The license prohibits using the models or any data produced by the models for any type of commercial or production purpose."

8/8

"The license prohibits using the models or any data produced by the models for any type of commercial or production purpose."

8/8

• • •

Missing some Tweet in this thread? You can try to

force a refresh