OpenAI's leaked Foundry pricing says a lot – if you know how to read it – about GPT4, The Great Implementation, a move from Generative to Productive AI, OpenAI's safety & growth strategies, and the future of work.

Another AI-obsessive megathread on what to expect in 2023 🧵

Another AI-obsessive megathread on what to expect in 2023 🧵

Disclaimer: I'm an OpenAI customer, but this analysis is based purely on public info

I asked our business contact if they could talk about Foundry, and got a polite "no comment"

As this is outside analysis, I'm sure I'll get some details wrong, and trust you'll let me know 🙏

I asked our business contact if they could talk about Foundry, and got a polite "no comment"

As this is outside analysis, I'm sure I'll get some details wrong, and trust you'll let me know 🙏

If you prefer to read this on a substack, sign up for mine right here.

In the future I might publish something there and not here, and you wouldn't want to miss that. :)

cognitiverevolution.substack.com/p/78a8bc84-59a…

In the future I might publish something there and not here, and you wouldn't want to miss that. :)

cognitiverevolution.substack.com/p/78a8bc84-59a…

So without further ado… what is "Foundry"?

Quoting the pricing sheet, it's the "platform for serving" OpenAI's "latest models", which will "soon" come with "more robust fine-tuning" options.

Quoting the pricing sheet, it's the "platform for serving" OpenAI's "latest models", which will "soon" come with "more robust fine-tuning" options.

"Latest models" – plural – says a lot

GPT4 is not a single model, but a class of models, defined by pre-training scale & parameter count, and perhaps some standard RLHF.

MSFT's Prometheus is the first public GPT4-class model, and does some crazy stuff!

GPT4 is not a single model, but a class of models, defined by pre-training scale & parameter count, and perhaps some standard RLHF.

MSFT's Prometheus is the first public GPT4-class model, and does some crazy stuff!

https://twitter.com/goodside/status/1626847747297972224

Safe to say these latest models have more pre-training than anything else we've seen, and it doesn't sound like they've hit a wall.

https://twitter.com/sama/status/1620951010318626817

Nevertheless, I'll personally bet that the "robust fine-tuning" will drive most of the adoption, value, and transformation in the near term.

Conceptually, an industrial foundry is where businesses make the tools that make their physical products. OpenAI's Foundry will be where businesses build AIs to perform their cognitive tasks – also known as *services*, also known as ~75% of the US economy!

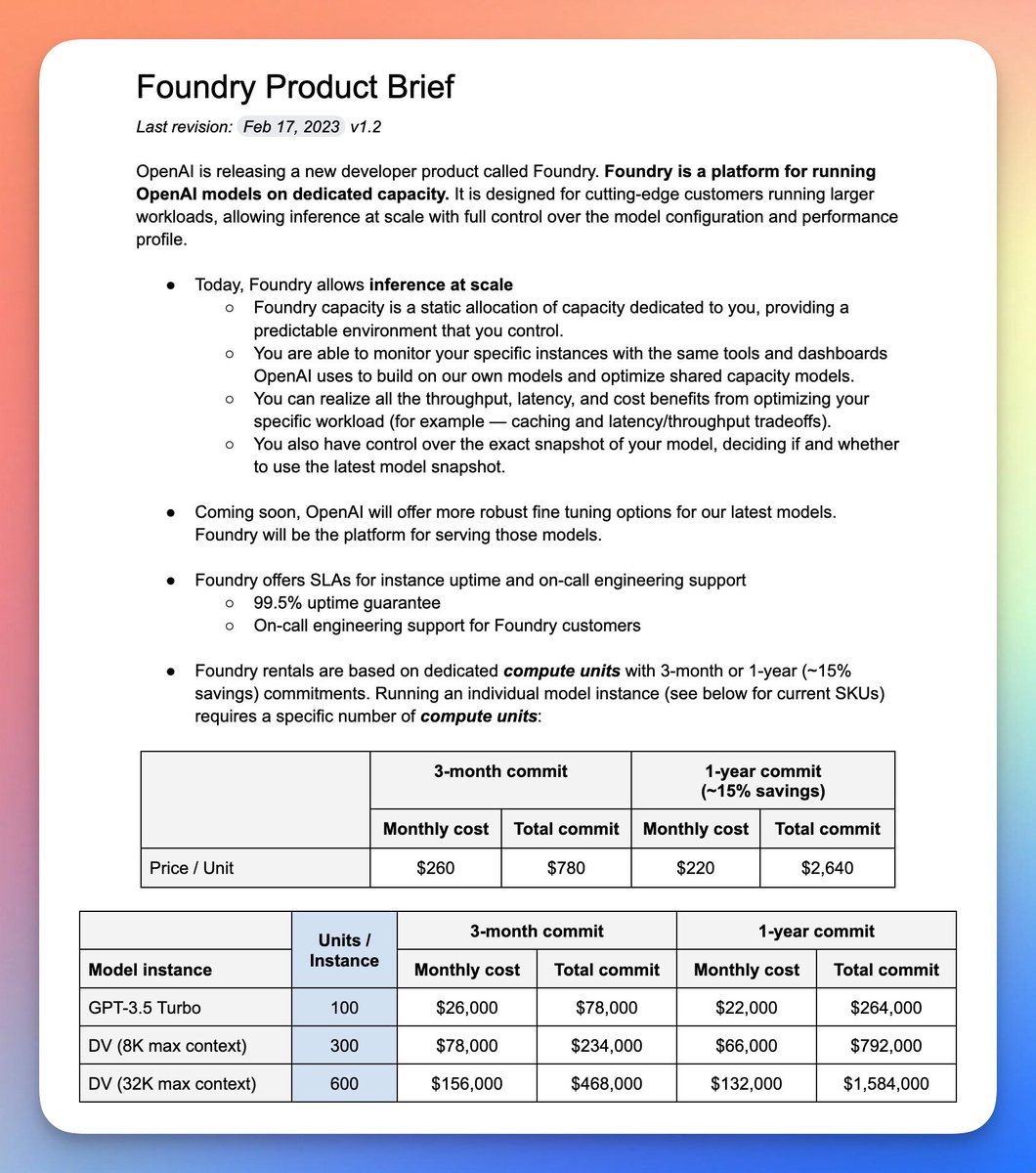

The "Foundry" price range, from ~$250K/year for "3.5-Turbo" (ChatGPT scale) to $1.5M/yr for 32K context-window "DV", suggests that OpenAI can readily demonstrate GPT4's ability to do meaningful work in corporate settings in a way that inspires meaningful financial commitments

This really should not be a surprise, because ChatGPT can pass the Bar Exam, and fine-tuned models such as MedPaLM are starting to approach human professional levels as well.

Most routine cognitive work is not considered as demanding as these tests!

Most routine cognitive work is not considered as demanding as these tests!

https://twitter.com/labenz/status/1611752682531823616

The big problems with LLMs, of course, have been hallucinations, limited context windows, and inability to access up-to-date information or use tools. We've all seen flagrant errors and other strange behaviors from ChatGPT and especially New Bing.

Keep in mind, though, that the worst failures happen in a zero-shot, open domain setting, often under adversarial conditions

People are working increasingly hard to jailbreak and embarrass them. Considering this, it's amazing how well they do perform.

People are working increasingly hard to jailbreak and embarrass them. Considering this, it's amazing how well they do perform.

https://twitter.com/venturetwins/status/1622243944649347074

When you know what you want an AI to do and have the opportunity to fine-tune models to do it, it's an entirely different ball game.

For the original "davinci" models (now 3 generations behind if you count Instruct, ChatGPT, and upcoming DV"), OpenAI recommends "Aim for at least ~500 examples" as a starting point for fine-tuning.

platform.openai.com/docs/guides/fi…

platform.openai.com/docs/guides/fi…

Personally, I've found that as few as 20 examples can work for very simple tasks, which again, isn't surprising given that LLMs are few-shot learners and that there's a conceptual (near-?) equivalence between few-shot and fine-tuning approaches.

https://twitter.com/labenz/status/1611745393007525890

In any case, in corporate "big data" terms, whether you need 50 or 500 or 5000 examples, it's all tiny! Imagine what corporations will be able to do with all those call center records they've been keeping … for, as it turns out, AI training purposes.

The new 32000-token context window is also a huge deal. This is enough for 50 pages of text or a 2-hour conversation. For many businesses, that's enough to contain your entire customer profile and history.

Others will need retrieval & "context management" strategies

That's where embeddings, vector databases, and dev frameworks like @LangChainAI and @PromptableAI come in!

Watch out for upcoming @CogRev_Podcast conversation with @trychroma founder @atroyn

That's where embeddings, vector databases, and dev frameworks like @LangChainAI and @PromptableAI come in!

Watch out for upcoming @CogRev_Podcast conversation with @trychroma founder @atroyn

https://twitter.com/atroyn/status/1629971957310709760

Aside: this pricing also suggests the possibility of an undisclosed algorithmic advance

Attention mechanism inference costs rise with the square of the context window. Yet, we see a 4X jump in context – which would suggest a 16X increase in cost – with just a 2X jump in price🤔

Attention mechanism inference costs rise with the square of the context window. Yet, we see a 4X jump in context – which would suggest a 16X increase in cost – with just a 2X jump in price🤔

In any case, the combination of fine-tuning and context window expansion, especially as supported by the rapidly evolving LLM tools ecosystem, mean customers will be able to achieve human performance & reliability – or better! – on many economically valuable tasks – in 2023!

Meanwhile, image & video generation, speech generation, and speech recognition have all recently hit new highs, and there's more coming from eg @play_ht, heard here as the voice of ChatGPT.

Mahmoud is another upcoming @CogRev_Podcast guest!

Mahmoud is another upcoming @CogRev_Podcast guest!

https://twitter.com/_mfelfel/status/1623850020096327681

So what might corporations train models to do in 2023?

In short, tasks with a documented, standard operating procedure will be transformed first.

Work that requires original thought, sophisticated reasoning, or advanced strategy will be much less affected in the short term.

In short, tasks with a documented, standard operating procedure will be transformed first.

Work that requires original thought, sophisticated reasoning, or advanced strategy will be much less affected in the short term.

This is a bit of a reversal from how things are usually understood. LLMs are celebrated for their ability to write creative poems in seconds, but dismissed when it comes to doing anything that matters.

I think that's about to change, and I'm not alone

I think that's about to change, and I'm not alone

https://twitter.com/rachel_l_woods/status/1630200583540862978

The AI UX paradigm will shift from one that delivers a response but puts the onus on the user to evaluate and figure out what to do with it, to one where AIs are directly responsible for getting things done, and humans supervise.

I call this The Great Implementation

I call this The Great Implementation

Specifically, within 2023, I expect custom models will be trained to….

Create, re-purpose, and localize content – full brand standards fit into 32K tokens, with plenty of room to write some tweets.

Amazingly my own company @waymark is mentioned with Patrón, Spectrum, Coke, and OpenAI in this article.

thedrum.com/news/2023/02/2…

Amazingly my own company @waymark is mentioned with Patrón, Spectrum, Coke, and OpenAI in this article.

thedrum.com/news/2023/02/2…

Handle customer interactions – natural language Q&A, appointment setting, account management, and even tech support, available 24/7, pick up where you left off, text or voice.

Customer service and experience will improve dramatically!

cnbc.com/2023/02/07/mic…

Customer service and experience will improve dramatically!

cnbc.com/2023/02/07/mic…

Streamline hiring – in such a hot market, personalizing outreach, assessing resumes, summarizing & flagging profiles, suggesting interview questions. For companies who have an overabundance of candidates, perhaps even conducting initial interviews?

Coding – with knowledge of private code bases, following your coding standards. Copilot is just the beginning here.

https://twitter.com/mathemagic1an/status/1610023513334878208

Conduct research using a combination of public search and private retrieval.

See this master class, must-read thread from @jungofthewon about best-in-class @elicitorg – it really does meaningful research for you!

See this master class, must-read thread from @jungofthewon about best-in-class @elicitorg – it really does meaningful research for you!

https://twitter.com/jungofthewon/status/1621216780176986112

Analyze data, and generate, review, summarize reports – all sorts of projects can now "talk to data" – another of the leaders is @gpt_index

https://twitter.com/jerryjliu0/status/1629904453741723650

Execute processes by calling a mix of public and private APIs – sending emails, processing transactions, etc, etc, etc. We're starting to see this in the research as well.

https://twitter.com/timo_schick/status/1624058382142345216

How will this happen in practice? And what will the consequences be for work and jobs??

For starters, it's less about AI doing jobs and more about AI doing tasks.

For starters, it's less about AI doing jobs and more about AI doing tasks.

Many have argued that human jobs require more context and physical dexterity than AIs currently have, and thus that AIs will not be able to do most jobs.

This is true, but misses a key point, which is that the way work is organized can and will change to take advantage of AI.

This is true, but misses a key point, which is that the way work is organized can and will change to take advantage of AI.

What's actually going to happen is not that humans will be dropped into human roles, but that the tasks which add up to jobs will be pulled apart into discrete bits that AIs can perform.

There is precedent for such a change in the mode of production. As recently as ~100 years ago, physical manufacturing looked a lot more like modern knowledge work. Pieces fit together loosely, and people solved lots of small production problems on the fly with skilled machining

Interchangeable parts & assembly lines changed all that; standardization and tighter tolerances unlocked reliable performance at scale. This transformation is largely complete in manufacturing – people run machines that do almost all of the work with very high precision.

Services lag manufacturing because services are mediated by language, and the art of mapping conversation onto actions is hard to standardize. Businesses try, but people struggle to consistently use best practices. Every CMO complains that people don't respect brand standards.

The Great Implementation will bring about a shift from humans doing the tasks that constitute "Services" to the humans building, running, maintaining, and updating the machines that do the tasks that constitute services.

In many cases, those will be different humans. And this is where OpenAI's "global services alliance" with Bain comes in.

bain.com/about/media-ce…

bain.com/about/media-ce…

The core competencies needed to develop and deploy fine-tuned GPT4 models in corporate settings include:

*Problem definition* – what are we trying to accomplish, and how do we structure that as a text prompt & completion? What information do we need to include in the prompt to ensure that the AI has everything it needs to perform the task?

*Training data curation / adaptation / creation* – what constitutes a job well done? do we have records of this? do the records reflect implicit knowledge that the AI will need, or perhaps contain certain information (eg - PII) that should not be trained into a model at all?

*Validation, Error Handling, and Red Teaming* – how does model performance compare to humans? how do we detect failures, and what do we do about them? and how can we be confident that we'll avoid New Bing type behaviors?

There is an art to all of these, but they are not super hard skills to learn. Certainly a typical Bain consultant will be able to get pretty good at most of them. And the same basic approach will work across many environments. Specialization makes sense here.

Additionally, the hardest part about organizational change is often that organizations don't want to change. With that in mind, it's no coincidence that leadership turns to consultants who are known for helping corporations manage re-organizations, change, and yes – layoffs.

Consultants have talked like this forever, but this time it's literally true.

Btw @BainAlerts ... may I suggest Cognitive Revolution instead of "industrial revolution for knowledge work"?

:)

Btw @BainAlerts ... may I suggest Cognitive Revolution instead of "industrial revolution for knowledge work"?

:)

So, no, AI won't take jobs, but it will do parts of jobs, and many jobs may cease to exist in their current form. There's precedent for this too, when mechanization came to agriculture

Will the people affected will find other jobs? I don't know

Will the people affected will find other jobs? I don't know

https://twitter.com/labenz/status/1622313221171412992

Finally … WHY is OpenAI going this route with pricing? It's a big departure from the previous "API first", usage-based pricing strategy, and the technology would be no less transformative with that model. I see 2 big reasons for this approach: (1) safety/control and (2) $$$

re: Safety – @sama has said OpenAI will deploy GPT4 when they are confident it's safe to do so.

A $250K entry point suggests a "know your customer" approach to safety, likely including vetting customers, reviewing use cases, etc.

They did this for GPT3 and DALLE2 too.

A $250K entry point suggests a "know your customer" approach to safety, likely including vetting customers, reviewing use cases, etc.

They did this for GPT3 and DALLE2 too.

Of course, this doesn't mean things will be entirely predictable or safe.

I doubt that the OpenAI team that shipped ChatGPT would have signed off on "Sydney" – my guess is that MSFT ran their own fine-tuning & QA processes.

More here:

I doubt that the OpenAI team that shipped ChatGPT would have signed off on "Sydney" – my guess is that MSFT ran their own fine-tuning & QA processes.

More here:

https://twitter.com/MParakhin/status/1627330287276261381

re: $$$ – this is a natural way for OpenAI to use access to their best models to protect their lower-end business from cheaper / open source alternatives, and to some degree discourage / crowd out in-house corporate investments in AI.

I am always going on about threshold effects, and how application developers will generally want to use the smallest/cheapest/fastest models that suffice for their use case.

This is already starting to happen – see @jungofthewon again

This is already starting to happen – see @jungofthewon again

https://twitter.com/jungofthewon/status/1612636800673251330

With Meta having just (sort-of) released new ~SOTA models and @StabilityAI soon to release even better, the stage is set for a lot more customers to go this way, at least as long as OpenAI has such customer-friendly, usage-based, no commitment pricing

https://twitter.com/EMostaque/status/1629541492258750464

OpenAI can't prevent other projects from hitting key thresholds, but they can change customer calculus. Why chase pennies on base use cases when you've already spent big bucks on dedicated capacity? And is there budget for an ML PhD after we just dropped $1.5M on DV 32K?

In conclusion, economically transformative AI is not only here, but OpenAI is already selling it

We'll feel it once models are fine-tuned and integrated into existing systems

A lot will happen in 2023, but of course The Great Implementation will go on for years

Buckle up!

We'll feel it once models are fine-tuned and integrated into existing systems

A lot will happen in 2023, but of course The Great Implementation will go on for years

Buckle up!

If you made it this far, I'll of course appreciate your retweets, as well as your critical commentary.

https://twitter.com/labenz/status/1630284912853917697

And if you want more AI-obsessed analysis, check out the podcast. As I hope you can tell, I do the work!

https://twitter.com/labenz/status/1626993563543257095

• • •

Missing some Tweet in this thread? You can try to

force a refresh