Am I really obsessed enough to write two OpenAI pricing megathreads in 1 week?? Apparently so…

Today's 90% drop on ChatGPT API – aka "gpt-3.5-turbo" – is another before & after moment in AI

How it happened and what it means 🧵👇

Again, you may prefer the substack – it's here: cognitiverevolution.substack.com/p/openai-price…

I was amazed that readers of the last thread pledged >$500, without us even asking. I am considering accepting and using that money on an editor, so I don't make mistakes like this:

https://twitter.com/labenz/status/1631301869569015808

For context, this comes on the heels of a 2/3rds price reduction last year. That's a ~96.7% total reduction in less than a year.
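
The two cuts compound rather than add: a 2/3 cut leaves 1/3 of the price, and a 90% cut leaves a tenth of that. A quick sketch of the arithmetic, assuming the cuts are exactly 2/3 and 90% (`total_reduction` is just an illustrative helper):

```python
def total_reduction(*cuts):
    """Combine successive fractional price cuts into one overall cut."""
    remaining = 1.0  # fraction of the original price still being charged
    for cut in cuts:
        remaining *= 1.0 - cut
    return 1.0 - remaining


# Assumption: the cuts are exactly 2/3 (last year) and 90% (today).
overall = total_reduction(2 / 3, 0.90)
print(f"Overall reduction: {overall:.1%}")  # Overall reduction: 96.7%
```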

I am on record predicting low prices, but even I didn't expect another 90% price drop this soon.

marginalrevolution.com/marginalrevolu…

OpenAI didn't have to do this, at least not yet – business is booming as companies race to implement AI and most see OpenAI as the obvious choice.

Some savvy customers are developing cheaper alternatives, but most are still happy paying the $0.02 per 1K tokens.

https://twitter.com/jungofthewon/status/1612636800673251330

This move puts OpenAI's price at a fraction of their most direct competitors' prices, just as competitors are ramping up.

Driving costs so low so quickly will make things very difficult for non-hyperscalers. How are they ever supposed to recoup all that fixed training cost??

To compare foundation model prices across providers, I created this document. Feel free to make a copy and plug in your own usage patterns.

docs.google.com/spreadsheets/d…

Notably, the new, 90% cheaper model is slightly less capable. OpenAI acknowledges this in their Chat Guide:

"Because gpt-3.5-turbo performs at a similar capability to text-davinci-003 but at 10% the price per token, we recommend it for most use cases."

platform.openai.com/docs/guides/ch…

And indeed, I've noticed in my initial testing that it cannot follow some rather complex/intricate instructions that text-davinci-003 can follow successfully. Fwiw, Claude from @AnthropicAI is the only other model I've seen that can reliably follow these instructions.

Pro tip: when evaluating new models, it helps to have a set of go-to prompts that you know well. I can get a pretty good sense for relative strengths and weaknesses in a few interactions this way.

How did this 90% price drop happen?

Simple really – "Through a series of system-wide optimizations, we’ve achieved 90% cost reduction for ChatGPT since December; we’re now passing through those savings to API users."

Since December? Surely the work was in progress earlier.

Though, I could imagine that all the inference data they've collected via ChatGPT might be useful for powering a pruning procedure, which could bring parameter size down significantly. So maybe?

Related: OpenAI apparently has all the data it needs, thank you very much.

Perhaps having so much data that you no longer need to retain or use customer data in model training is the real moat?

https://mobile.twitter.com/sama/status/1631002519311888385

So... what does this mean???

For one thing, it's approaching a version of Universal Basic Intelligence. Sure, it's not open, but it's getting pretty close to free.

https://twitter.com/labenz/status/1579315352001220608

For the global poor, this is a huge win. Chat interactions that consist of multiple rounds of multiple paragraphs will now cost just a fraction of a cent. For a nickel / day, people can have all the AI chat they need.

The educational possibilities alone are incredible, and indeed OpenAI highlights "global learning platform" Quizlet, already with 60 million students, and now launching "a fully-adaptive AI tutor" today. For ~$1 / day, you can sponsor a full classroom!

quizlet.com/labs/qchat
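
Here's a rough sketch of the arithmetic behind the nickel-a-day and dollar-a-classroom figures. The price is 10% of the $0.02 per 1K tokens charged for text-davinci-003; the tokens per chat and the class size are illustrative assumptions, not measurements.

```python
PRICE_PER_1K_TOKENS = 0.002  # USD; 10% of the $0.02 text-davinci-003 price


def cost_usd(tokens):
    """Cost in USD of processing `tokens` tokens (prompt plus completion)."""
    return tokens / 1000 * PRICE_PER_1K_TOKENS


def tokens_for_budget(budget_usd):
    """Number of tokens a given USD budget buys."""
    return budget_usd / PRICE_PER_1K_TOKENS * 1000


# Hypothetical sizes, for illustration only.
TOKENS_PER_CHAT = 2_000  # several rounds of several paragraphs
CLASS_SIZE = 30          # students sharing a $1/day budget

print(f"One chat: ${cost_usd(TOKENS_PER_CHAT):.4f}")
print(f"A nickel buys {tokens_for_budget(0.05):,.0f} tokens")
print(f"$1 buys {tokens_for_budget(1.00) / CLASS_SIZE:,.0f} tokens per student")
```

Under those assumptions, a chat costs about 0.4 cents, a nickel covers roughly 25,000 tokens, and $1 split 30 ways is roughly 16,700 tokens per student per day.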

A lot of "AI will exacerbate economic inequality" takes ought to be rethought.

Concentration among AI owners may be highly unequal, but access is getting democratized extremely quickly.

https://twitter.com/labenz/status/1611755594888933378

With this in mind, I recently registered universalbasicintelligence.org

If you're inspired by the idea of Universal Basic Intelligence and think you can run a program that delivers more value per dollar than @GiveDirectly, I'd love to hear about it!

Meanwhile, in the rich world, AI was already cheap and will now be super-abundant

But one thing that is missing at this price level: fine-tuning! For the turbo version, you'll have to make do with old-fashioned prompt engineering. The default voice of ChatGPT will be everywhere
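
For what prompt engineering looks like with the chat format: the main lever is the system message, which sets the voice before the user's message arrives. A minimal sketch that only builds the request body and doesn't send it. `build_chat_request` and the prompt text are hypothetical; the `role`/`content` message shape follows OpenAI's Chat Guide as I understand it.

```python
import json


def build_chat_request(system_prompt, user_message, model="gpt-3.5-turbo"):
    """Return a chat request body with a system message that sets the voice."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
    }


# Hypothetical prompt text, for illustration only.
request = build_chat_request(
    system_prompt="You write punchy, upbeat ad copy for local businesses.",
    user_message="Write a two-sentence ad for a family-owned bakery.",
)
print(json.dumps(request, indent=2))
```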

Every product is going to be talking to us, generating multiple versions, proactively offering variations, suggesting next actions. Executed poorly, it will be overwhelming; executed well, it will feel effortless.

Have to shout out my own company @waymark as a great example here. We had the vision for a "Watch first" video creation experience years ago, and we now combine a bunch of different AIs to make it possible.

https://twitter.com/labenz/status/1625641228715827207

And while it wasn't the biggest news OpenAI made this week, they did revamp their website to highlight customer stories, and we were genuinely honored to be featured!

openai.com/customer-stori…

But this goes way beyond content.

Agent/bot frameworks like @LangChainAI and @PromptableAI, and companies like @fixieai are huge winners too

AI auto-debugging is amazing, but often looks even clumsier than human debugging. Lot of calls, but … that matters a lot less now

It follows that AI bot traffic is going to absolutely explode. I have a vision of @LangChainAI lobsters, just smart enough to slowly stumble around the web until they accomplish their goals. Image credit to @playground_ai

upshot: OpenAI revenue might not drop as much as you'd think

Anecdote from @CogRev_Podcast: @Suhail told us that when @playgroundAI made image generation twice as fast, they immediately saw usage jump 2X. Something like that might happen here.

https://twitter.com/eriktorenberg/status/1621621113393483778

Embedding databases like @trychroma win too. Cheaper tokens make it worthwhile to embed everything and figure out context management later / on the fly.

Learn all about vector databases with @atroyn on our latest @CogRev_Podcast!

https://twitter.com/CogRev_Podcast/status/1631289784097284097

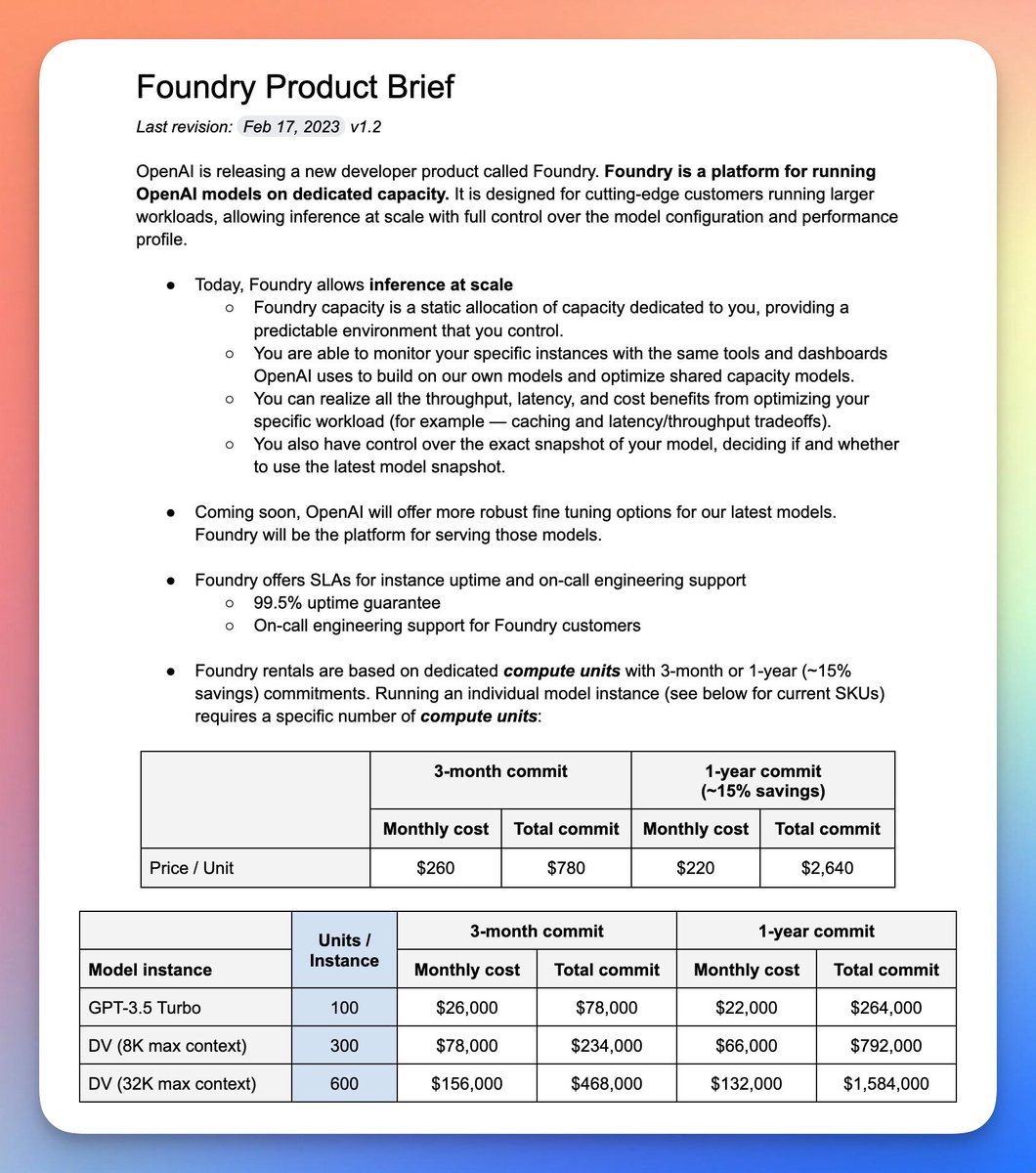

To give you a sense for the volume of usage that OpenAI is expecting… they also announced dedicated instances that "can make economic sense for developers running beyond ~450M tokens per day."

That's a lot!
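
To put a dollar figure on it, here's what that volume would cost at the pay-as-you-go gpt-3.5-turbo price. This is only a sketch: the 30-day month and steady usage are assumptions, and the actual price of a dedicated instance isn't stated here.

```python
PRICE_PER_1K_TOKENS = 0.002   # USD, pay-as-you-go gpt-3.5-turbo price
TOKENS_PER_DAY = 450_000_000  # threshold quoted by OpenAI

daily_usd = TOKENS_PER_DAY / 1000 * PRICE_PER_1K_TOKENS
monthly_usd = daily_usd * 30  # assumption: 30-day month, steady usage

print(f"Daily:   ${daily_usd:,.0f}")
print(f"Monthly: ${monthly_usd:,.0f}")
```

That works out to about $900 a day, or roughly $27,000 a month, before a dedicated instance starts to pencil out.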

As I said the other day, longer context windows and especially "robust fine-tuning" seem to be OpenAI's core price discrimination strategy, and I think it's very likely to work.

https://twitter.com/labenz/status/1630284923557773318

While essay writers in India pay a tenth of a penny for homework help, corporate customers will pay 1-2 orders of magnitude more for the ability to really dial in the capabilities they want.

And I'll bet some will pay 3 orders of magnitude more, simply because their workload doesn't take full advantage of their dedicated compute. Few companies run evenly 24/7.

What might OpenAI do with that compute when it's not in use? Safe to say they won't be mining bitcoin.

For open source? This takes some air out of open source model projects – when a quality hosted version that runs fast and reliably is so cheap, who needs the comparative hassle of open source? – but open source will remain strong. It's driven by more than money.

And importantly, the open source community can do things OpenAI can't

Best AI podcast of 2023 so far is the "No Priors" interview with @EMostaque – his vision for a Cambrian explosion of small models, each customized to its niche, is beautiful and inspiring

https://twitter.com/eladgil/status/1626238726212038657

Obviously the number of prompt engineer job listings will continue to soar, for a while at least.

If you want to break into the game, I recommend @learnprompting

Very clear explanations, natural progression, and thoughtful examples.

learnprompting.org

Finally for now, thank you for reading! Comments & messages have been overwhelmingly positive – nothing like the Twitter I'd always heard about.

Here's the first tweet in this thread; I always appreciate your retweets :)

https://twitter.com/labenz/status/1631346679893958658

And here's the podcast – cognitiverevolution.ai

soon we'll have:

- @LiJunnan0409 and @DongxuLi_ of BLIP & BLIP2

- @AravSrinivas of @perplexity_ai

- @eladgil and @saranormous of No Priors

- @jungofthewon of @elicitorg

and hopefully also soon:

- @hwchase17 of @LangChainAI

- @RiversHaveWings of #stablediffusion fame

- @learnprompting lead @SanderSchulhoff

Check your messages folks! 🙏🤞
