🧠 AI experiment comparing #ControlNet and #Gen1. Video goes in ➡ Minecraft comes out.

Results are wild, and it's only a matter of time till this tech runs at 60fps. Then it'll transform 3D and AR.

How soon until we're channel surfing realities layered on top of the world?🧵

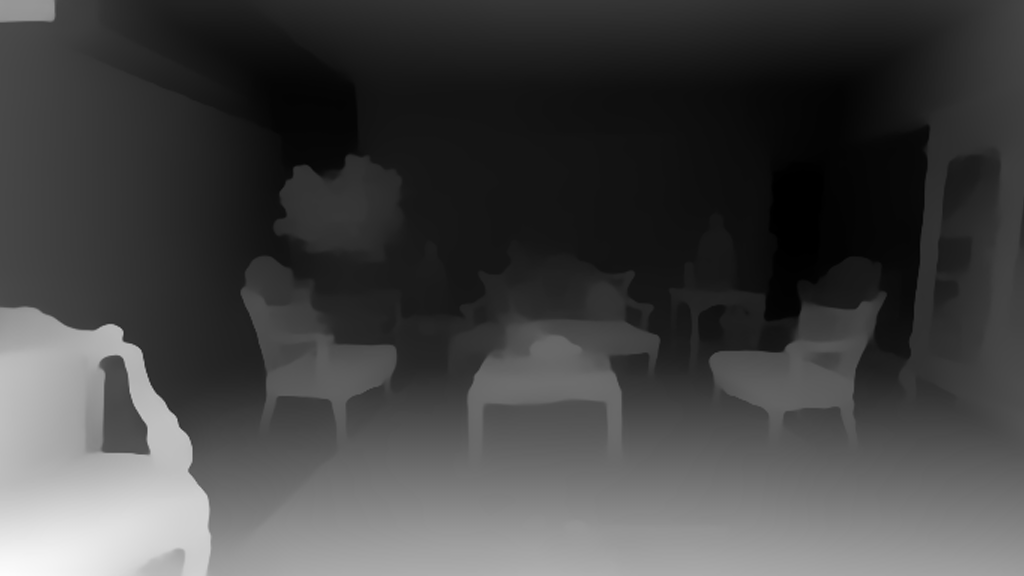

First, ControlNet. Wow, this tool makes it very easy to get photorealistic results. I used the HED method for this scene, then EbSynth for smoother interpolation between ControlNet keyframes. Check out my prior posts for the end-to-end workflow.

Next up, #Gen1: impressive is the word. The star of the show is the temporal consistency. Getting photoreal results is harder than with ControlNet IMO. #Gen1 is almost its own stylized thing, so I advise leaning into that. But why does it matter? Can't we just type text to get video?

Text prompts are cool, but control over the details is crucial for artists. These new AI tools turn regular photos/videos into an expressive form of performance capture. Record characters/scenes with your phone and use it to guide the generation. My buddy Don shows how it's done:

https://twitter.com/DonAllenIII/status/1629395586750492672

Of course, the input media can also be *synthetically* generated. Go from a blocked-out 3D scene to final render in record time. Control the details you care about (e.g. blocking), and let AI help you with the rest (e.g. texturing). I cover use cases here: creativetechnologydigest.substack.com/p/depth2image-…

https://twitter.com/MartinNebelong/status/1631374504944345092

See where I'm going when I say gen AI is going to disrupt 3D rendering? You could be running a lightweight 3D engine in a browser, slap on a generative filter, and transform it into AAA game engine quality. No massive team required. And it's not limited to gaming either:

https://twitter.com/bilawalsidhu/status/1627872696112480257

It's wild how fast things are moving in generative AI. Here are my video2minecraft results from just a few months ago, which already look dated next to all these new approaches for taming the chaotic diffusion process:

https://twitter.com/bilawalsidhu/status/1585993553263484928

And that's a wrap! I like sharing my workflows openly with the AI & creator community, so if you enjoyed this thread I'd appreciate it if you:

1. RT the thread below

2. Follow @bilawalsidhu for more

3. Subscribe to get some visual umami right to your inbox: creativetechnologydigest.substack.com

https://twitter.com/bilawalsidhu/status/1632133413833506819
