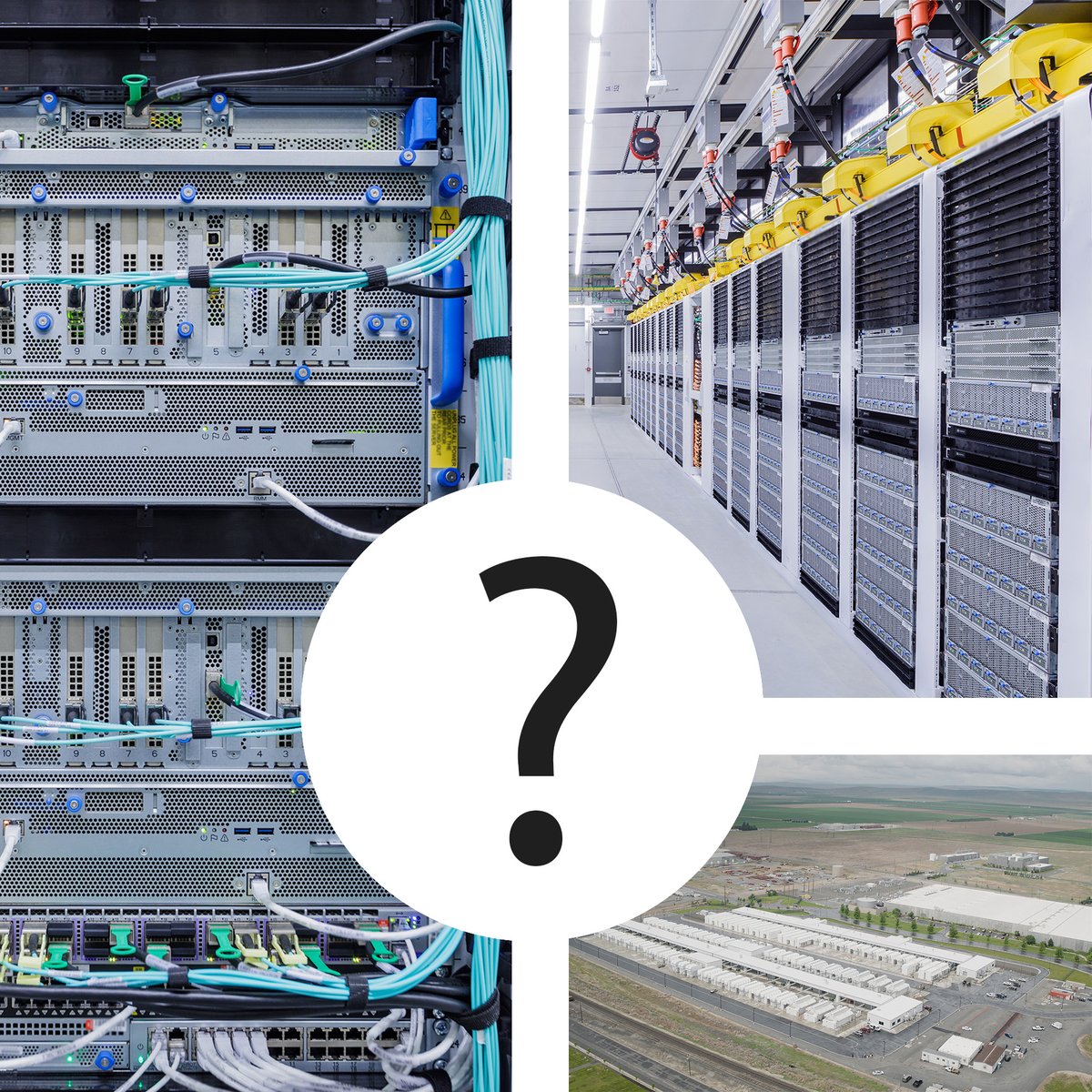

Azure/Microsoft released pics of OpenAI's next-gen AI datacenter.

This supposedly powers [Chat]GPT* training (inference?). From the pics we can infer most components, power, network/ib topology, layouts, vendors and more.

A thread 🧵[1/7]

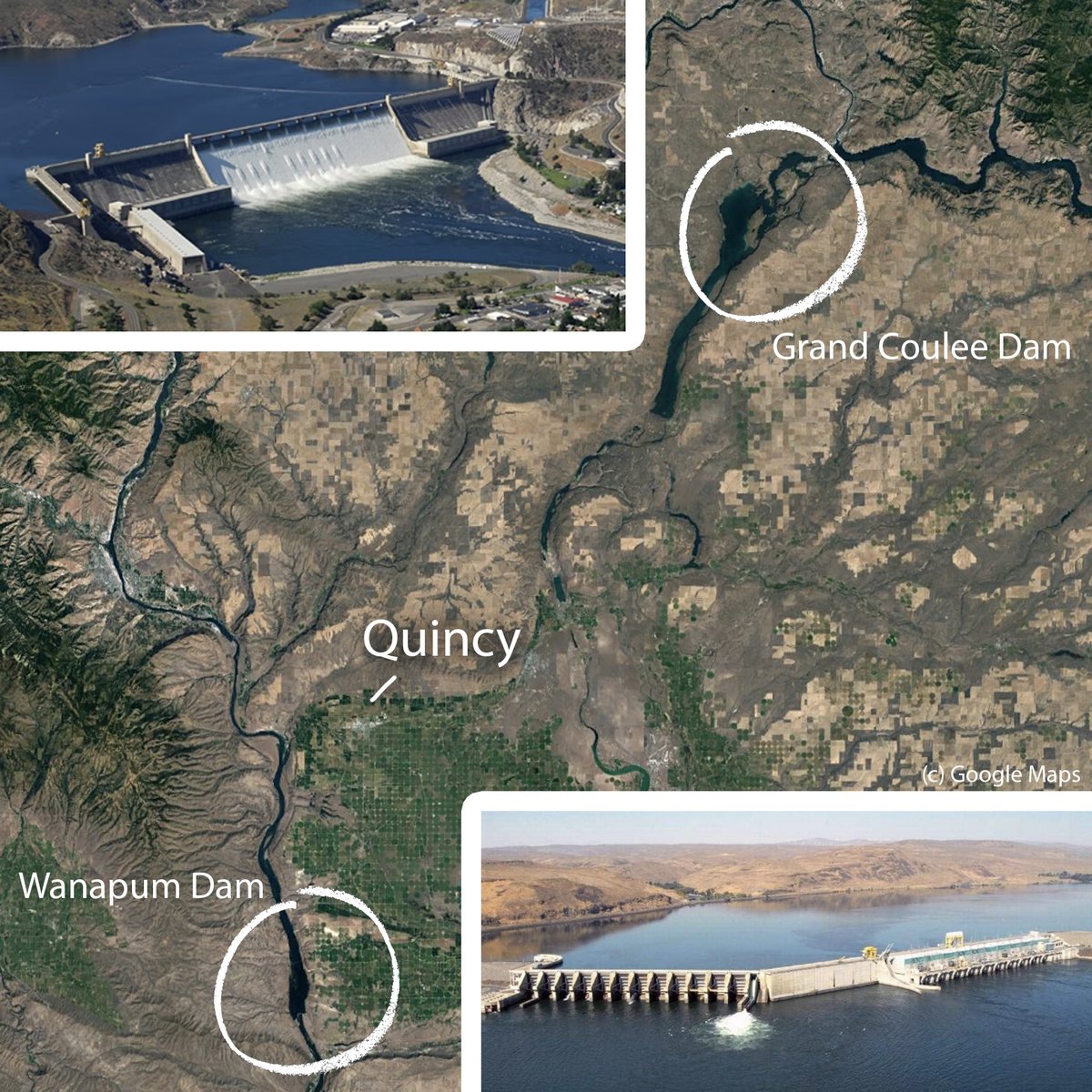

[2/7] The datacenter Microsoft/Azure pictures is in Quincy, WA, USA (47.23,-119.86). This is an old picture. Today, there's a datacenter in place of that parking lot.

An old parking lot as the origin of this modern AI revival. Nice: I hate parking lots.

[3/7] Why is the datacenter in Quincy, WA?

AI eats compute, which is fed by power and cooling (+= power). This is a massive OpEx for cloud providers.

But in Quincy you pay 3ct/kWh! (SF = 30ct/kWh). This is bc they have hydro (green!) power.

I guess that makes ChatGPT "green"?
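To put the 3ct vs 30ct/kWh gap in numbers, here is a rough sketch of the yearly electricity bill for a single node. The 6.5 kW draw and the `cooling_overhead` factor are assumptions for illustration (the thread gives no power figure); only the two prices come from above.

```python
HOURS_PER_YEAR = 24 * 365  # 8760, ignoring leap years


def annual_energy_cost_usd(node_kw, usd_per_kwh, cooling_overhead=1.0):
    """Yearly electricity cost for one node running flat out.

    cooling_overhead is a hypothetical multiplier for cooling power
    (1.0 means cooling is ignored).
    """
    return node_kw * cooling_overhead * HOURS_PER_YEAR * usd_per_kwh


NODE_KW = 6.5  # ASSUMPTION: not from the thread, just a plausible 8-GPU node draw

quincy_cost = annual_energy_cost_usd(NODE_KW, 0.03)  # 3ct/kWh
sf_cost = annual_energy_cost_usd(NODE_KW, 0.30)      # 30ct/kWh

print(f"Quincy: ${quincy_cost:,.0f}/node/year")
print(f"SF:     ${sf_cost:,.0f}/node/year")
print(f"Ratio:  {sf_cost / quincy_cost:.0f}x")
```

Whatever the real node draw is, the ratio stays 10x, since it only depends on the two prices.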

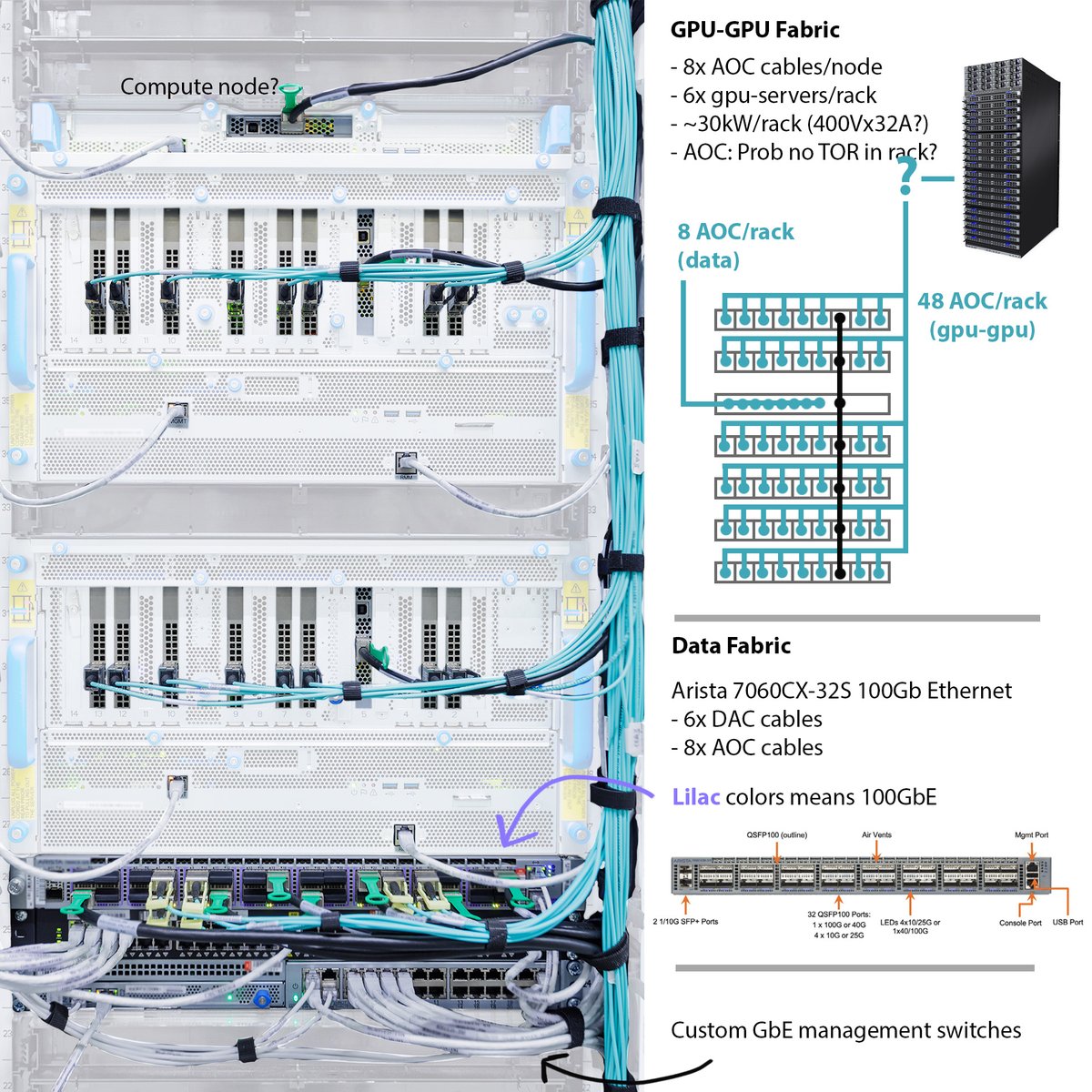

[4/7] They are using the Azure ND A100 v4.

Cost ~$150k/node.

8x A100 GPUs, 2x 48-core CPUs, 900 GiB DDR4 RAM.

8x IB HDR NICs provide an 8x200 = 1600 Gb/s GPU-GPU fabric.

The data fabric seems separate, using custom Azure stuff. Data ingest is a wimpy 50Gb/s: for LLMs totally fine.
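The bandwidth arithmetic above, as a quick sketch. The numbers are the ones quoted in the thread (8 NICs, 200 Gb/s each, 50 Gb/s ingest); the variable and function names are just illustrative, and this is line rate, not measured throughput.

```python
def gbit_to_gbyte_per_s(gbit_per_s):
    """Convert Gb/s (bits) to GB/s (bytes), ignoring protocol overhead."""
    return gbit_per_s / 8


IB_NICS_PER_NODE = 8    # from the thread
HDR_GBIT_PER_NIC = 200  # InfiniBand HDR line rate per NIC
DATA_INGEST_GBIT = 50   # separate data fabric, from the thread

fabric_gbit = IB_NICS_PER_NODE * HDR_GBIT_PER_NIC
fabric_to_ingest_ratio = fabric_gbit / DATA_INGEST_GBIT

print(f"GPU-GPU fabric: {fabric_gbit} Gb/s = {gbit_to_gbyte_per_s(fabric_gbit):.0f} GB/s per node")
print(f"Data ingest:    {DATA_INGEST_GBIT} Gb/s = {gbit_to_gbyte_per_s(DATA_INGEST_GBIT):.2f} GB/s per node")
print(f"Fabric is {fabric_to_ingest_ratio:.0f}x the ingest path")
```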

[5/7] Fabric! A thin blue cable is optical/AOC (expensive) and a thick black one is copper/DAC (cheap). DAC only works short range ~5m.

The data NICs flow into an underpopulated Arista 7060CX switch, and out-of-rack with 8x AOCs.

gpu-gpu is all AOC, maybe to a core switch.
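The DAC-vs-AOC rule of thumb can be sketched as a tiny helper. The ~5m cutoff is the figure from this thread; real limits depend on link speed and cable gauge, and the run lengths below are made up for illustration.

```python
def pick_cable(run_length_m, dac_max_m=5.0):
    """Pick the cheap copper DAC when the run is short enough, else optical AOC.

    dac_max_m defaults to the ~5m rule of thumb from the thread (an
    approximation, not a spec value).
    """
    if run_length_m <= 0:
        raise ValueError("run length must be positive")
    return "DAC" if run_length_m <= dac_max_m else "AOC"


# HYPOTHETICAL run lengths, not measured from the pictures
example_runs_m = {
    "NIC to in-rack switch": 2.0,
    "rack to core switch": 30.0,
}

for name, length_m in example_runs_m.items():
    print(f"{name} ({length_m} m): {pick_cable(length_m)}")
```

Under that rule, the all-AOC GPU-GPU links suggest the runs leave the rack, which fits the core-switch guess.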

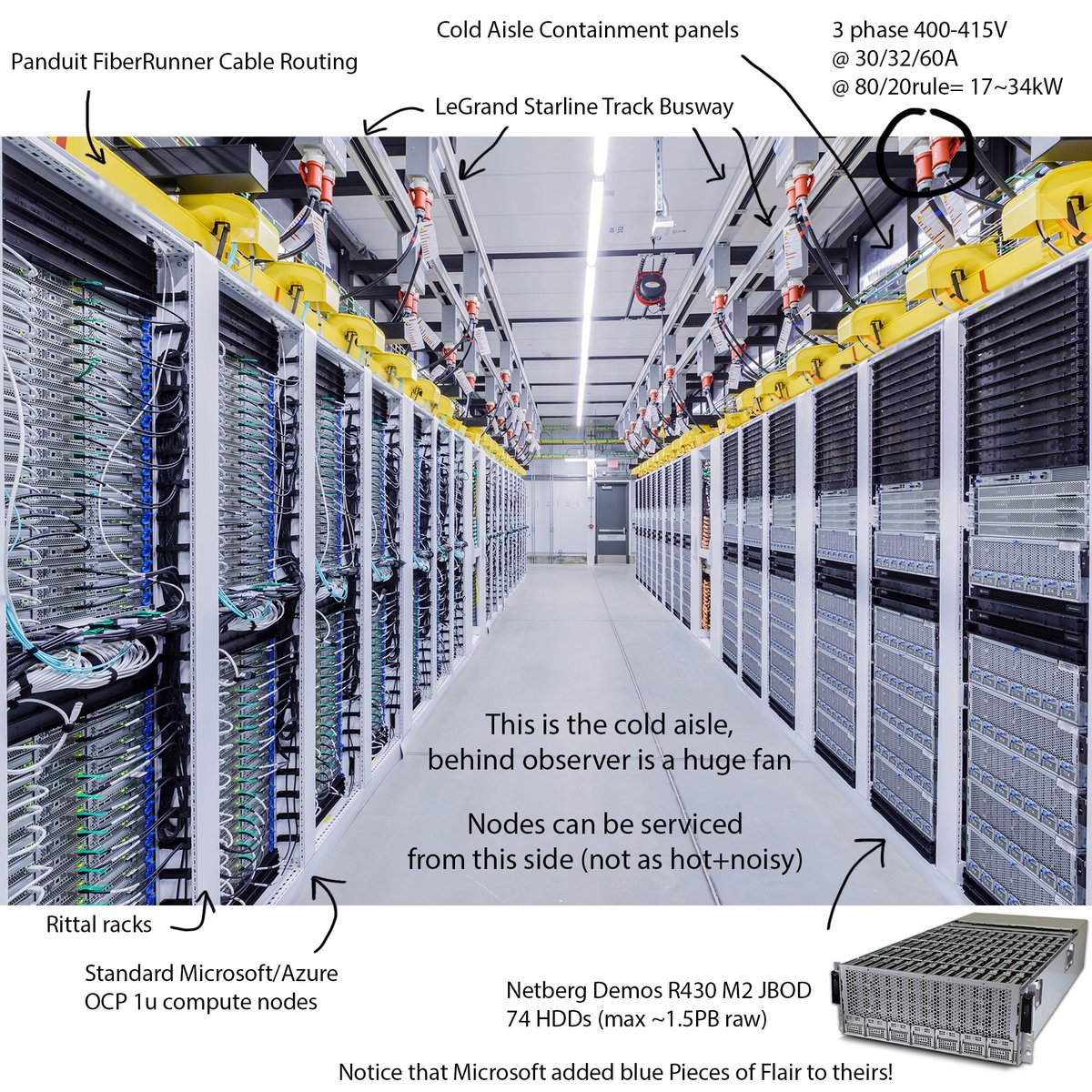

[6/7] Their high-level layout is using cold aisle containment (this is the cold aisle), with amazing levels of standardization.

All nodes depicted here are just for data storage and simple cpu compute. No HPC or AI happening in this aisle.

[7/7] Closing thoughts - The AI tech tree is deep, and you could spend ur life in Pythonland.

AI is expensive, and if you care about performance, everything below you is relevant, down to the bare metal.

The future may be full of monolithic bare metal supercomputers.

*hot aisle containment (thx @rpoo)

Found a paper on that custom Microsoft 50GbE NIC with (lolz) usb-b: microsoft.com/en-us/research…
