ChatGPT casually dropped an APP STORE 🤯

It can now:

- browse the web (RIP Bing waitlist, knowledge cutoff)

- write and run Python (RIP replit?)

- access org info (RIP docsearch startups)

- add third-party plugins (from OpenTable, Wolfram, Instacart, Zapier, etc.)

- developer SDK in preview

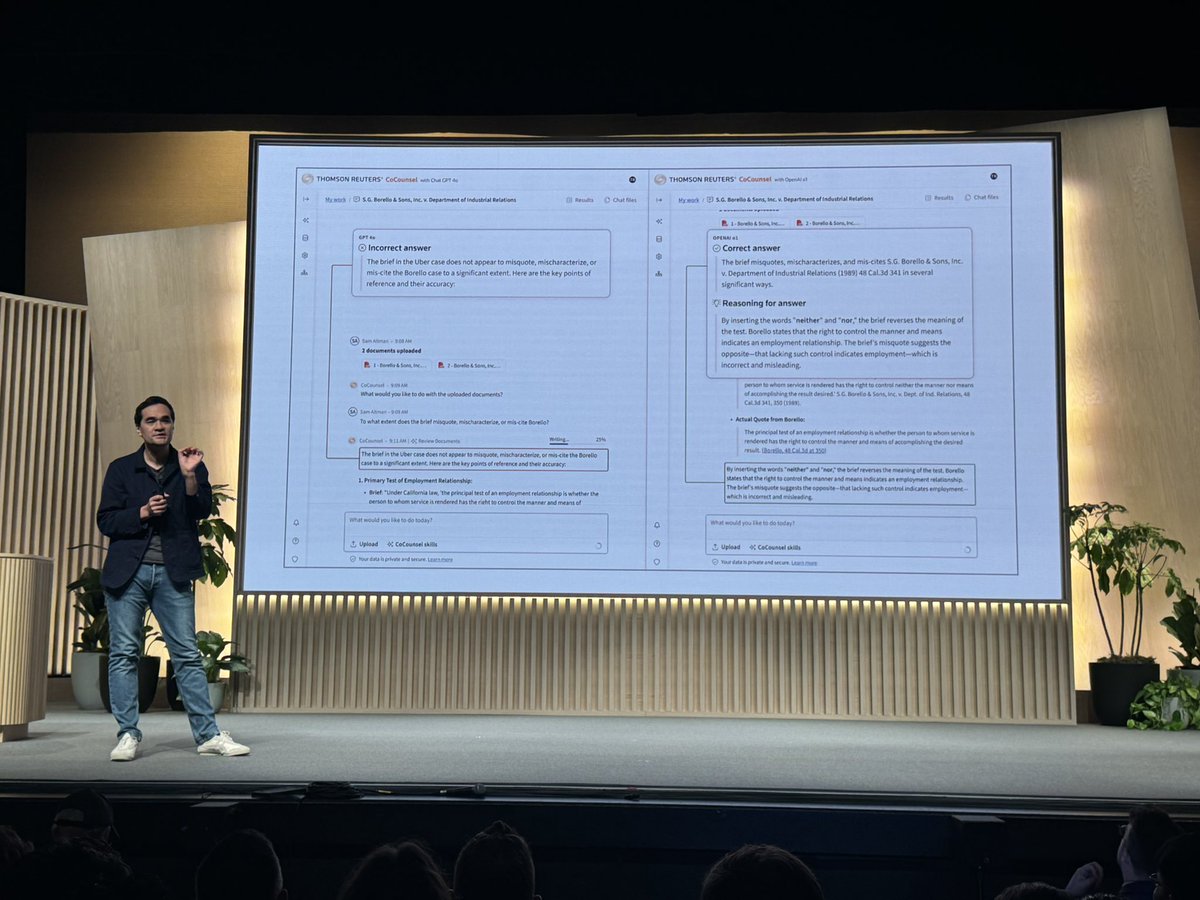

When I talked about the AI Red Wedding last year (https://twitter.com/swyx/status/1574109181015199744) I was talking about AI offerings undercutting existing human-based or manual business processes.

Now the AI Red Wedding is coming for companies building atop foundation model companies.

@OpenAI is… twitter.com/i/web/status/1…

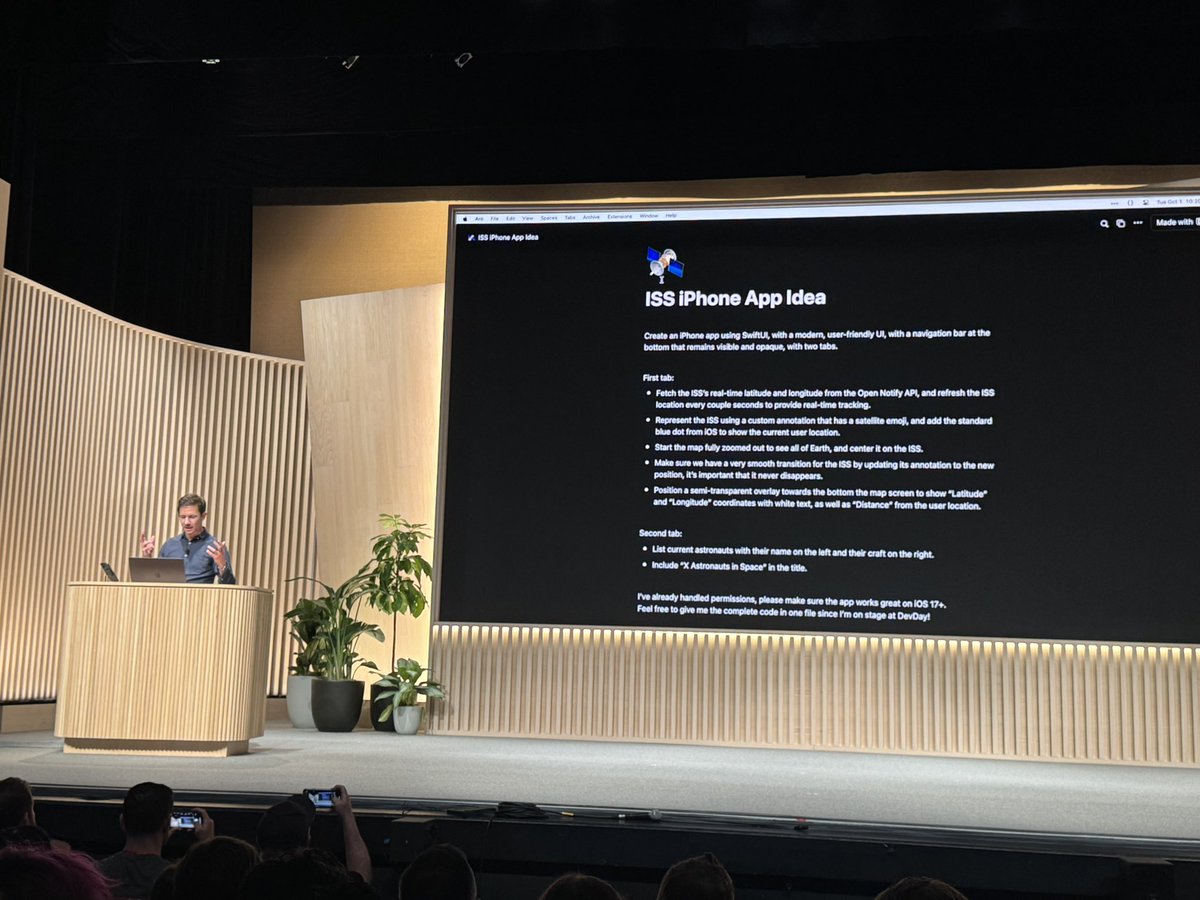

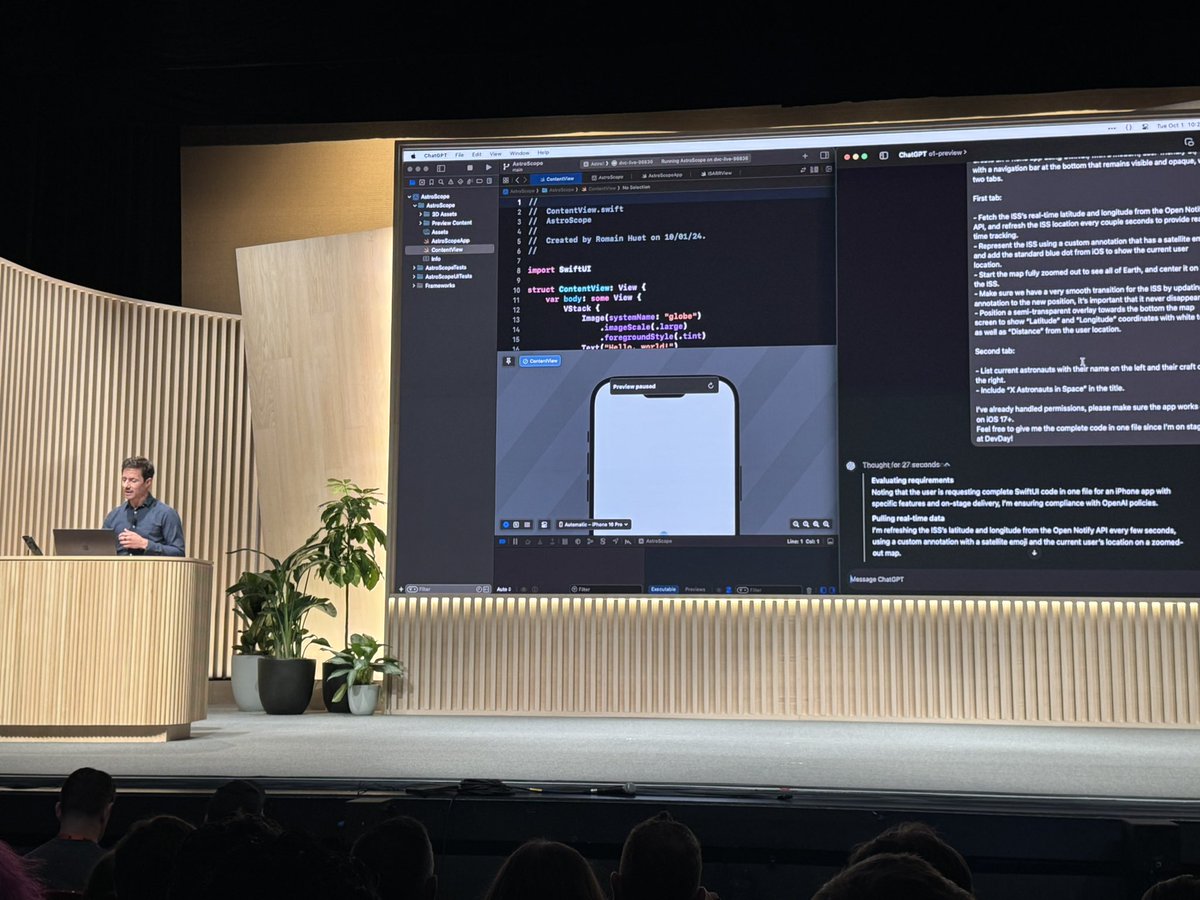

@OpenAI Actually, calling it a "Developer SDK" is stretching it:

1. Define API with OpenAPI (heheh not to be confused with OpenAI)

2. Explain what the API is for and what the methods do.

that's it, no step 3. ChatGPT can figure out when and how to use it correctly now.

@karpathy… twitter.com/i/web/status/1…
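To make those two steps concrete, here is a rough sketch of what "define the API with OpenAPI and explain what the methods do" could look like. Everything specific in it is made up for illustration: the TODO API, the `/todos` path, the `operationId` names, and the `example.com` server are all hypothetical, and this is not the actual plugin format from OpenAI's docs, just a plain OpenAPI 3 document built as a Python dict. The helper at the bottom only pulls out the plain-English summaries, which is the part a model would presumably lean on when deciding which call to make.

```python
import json

# Hypothetical example API: the title, paths, operation names, and server URL
# are invented for illustration and do not come from the thread or from OpenAI.
openapi_spec = {
    "openapi": "3.0.1",
    "info": {
        "title": "TODO Plugin",
        "description": "Lets the user create and list items on a personal TODO list.",
        "version": "v1",
    },
    "servers": [{"url": "https://example.com"}],
    "paths": {
        "/todos": {
            "get": {
                "operationId": "listTodos",
                "summary": "Get the user's TODO items",
                "responses": {"200": {"description": "OK"}},
            },
            "post": {
                "operationId": "addTodo",
                "summary": "Add an item to the user's TODO list",
                "responses": {"200": {"description": "OK"}},
            },
        }
    },
}

# Path items may also carry non-operation keys (e.g. "parameters"), so filter.
HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


def describe_operations(spec):
    """Return (operationId, METHOD, path, summary) for each operation in the spec."""
    ops = []
    for path, item in spec["paths"].items():
        for method, op in item.items():
            if method in HTTP_METHODS:
                ops.append((op["operationId"], method.upper(), path, op["summary"]))
    return ops


if __name__ == "__main__":
    # Step 1: the machine-readable API definition.
    print(json.dumps(openapi_spec, indent=2))
    # Step 2: the human-readable explanation of each method.
    for op in describe_operations(openapi_spec):
        print(op)
```

The point of the sketch is how little there is: a schema plus a sentence per method, with no glue code for deciding when to call what.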

@OpenAI @karpathy IT RUNS FFMPEG.

CHATGPT RUNS FFMPEG.

I think people aren't properly appreciating its ability to run -and execute- Python.

IT RUNS FFMPEG

IT RUNS FREAKING FFMPEG. inside CHATGPT.

CHATGPT IS AN AI COMPUTE PLATFORM NOW.

what. is. happening.
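For anyone wondering what "Python that runs ffmpeg" even means in practice, here is a minimal sketch. I have no idea how the ChatGPT sandbox is actually wired up, so treat this as an assumption about the general shape: Python builds an ffmpeg command line and shells out to it. The function names (`build_trim_command`, `trim`) and the file names are hypothetical; the ffmpeg flags (`-y`, `-ss`, `-t`, `-i`, `-c copy`) are standard ones.

```python
import shutil
import subprocess


def build_trim_command(src, dst, start_seconds, duration_seconds):
    """Build an ffmpeg command that cuts a clip out of src without re-encoding."""
    return [
        "ffmpeg",
        "-y",                         # overwrite dst if it already exists
        "-ss", str(start_seconds),    # seek to the start of the clip
        "-t", str(duration_seconds),  # read this many seconds of input
        "-i", src,
        "-c", "copy",                 # stream copy, no re-encode
        dst,
    ]


def trim(src, dst, start_seconds, duration_seconds):
    """Run the trim; requires an ffmpeg binary on PATH (an assumption here)."""
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg not found on PATH")
    cmd = build_trim_command(src, dst, start_seconds, duration_seconds)
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Only prints the command; call trim(...) to actually run it.
    print(" ".join(build_trim_command("input.mp4", "clip.mp4", 5, 10)))
```

If a chat session can execute something like this, then any tool reachable from a Python subprocess is in play, which is why "compute platform" does not feel like a stretch.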

https://twitter.com/gdb/status/1638971232443076609?s=20

946 people tuned in to an emergency unscheduled ChatGPT space lol

people are so hyped about this, it is unreal.

thanks to special guests @OfficialLoganK, @Altimor, @dabit3, and the couple dozen others who joined in to revel in the biggest app store launch of the decade

CHATGPT IS READING AND SUMMARIZING MY REACTIONS TO CHATGPT THAT I MADE 2 HOURS AGO

hi chatgpt i love you please kill me last

https://twitter.com/gdb/status/1638986918947082241?s=46&t=90xQ8sGy63D2OtiaoGJuww
