1/ Prediction: Everyone will soon be using foundation models (FMs) like GPT-4.

However, they'll be using FMs trained on their own data & workloads:

"GPT-You", not GPT-X

Tl/dr:

- Closed APIs aren't defensible

- The durable moat is data

- The last mile generates the real value

However, they'll be using FMs trained on their own data & workloads:

"GPT-You", not GPT-X

Tl/dr:

- Closed APIs aren't defensible

- The durable moat is data

- The last mile generates the real value

2/ *Closed APIs aren't defensible*

- Recent examples like @StanfordCRFM Alpaca tinyurl.com/yc78bnct shows that cloning closed API-based FMs like ChatGPT can be done for a few $100 on top of small OSS base FMs (e.g. here, fine-tuning LLaMa 7B via exs from the ChatGPT API).

- Recent examples like @StanfordCRFM Alpaca tinyurl.com/yc78bnct shows that cloning closed API-based FMs like ChatGPT can be done for a few $100 on top of small OSS base FMs (e.g. here, fine-tuning LLaMa 7B via exs from the ChatGPT API).

3/

- Since Alpaca dozens of others have cloned this cloning procedure (e.g. Dolly github.com/databrickslabs…)

- Will these withstand legal scrutiny? Enough potential legal issues w/ original FM training on web data may muddle things... but either way, the key point still stands...

- Since Alpaca dozens of others have cloned this cloning procedure (e.g. Dolly github.com/databrickslabs…)

- Will these withstand legal scrutiny? Enough potential legal issues w/ original FM training on web data may muddle things... but either way, the key point still stands...

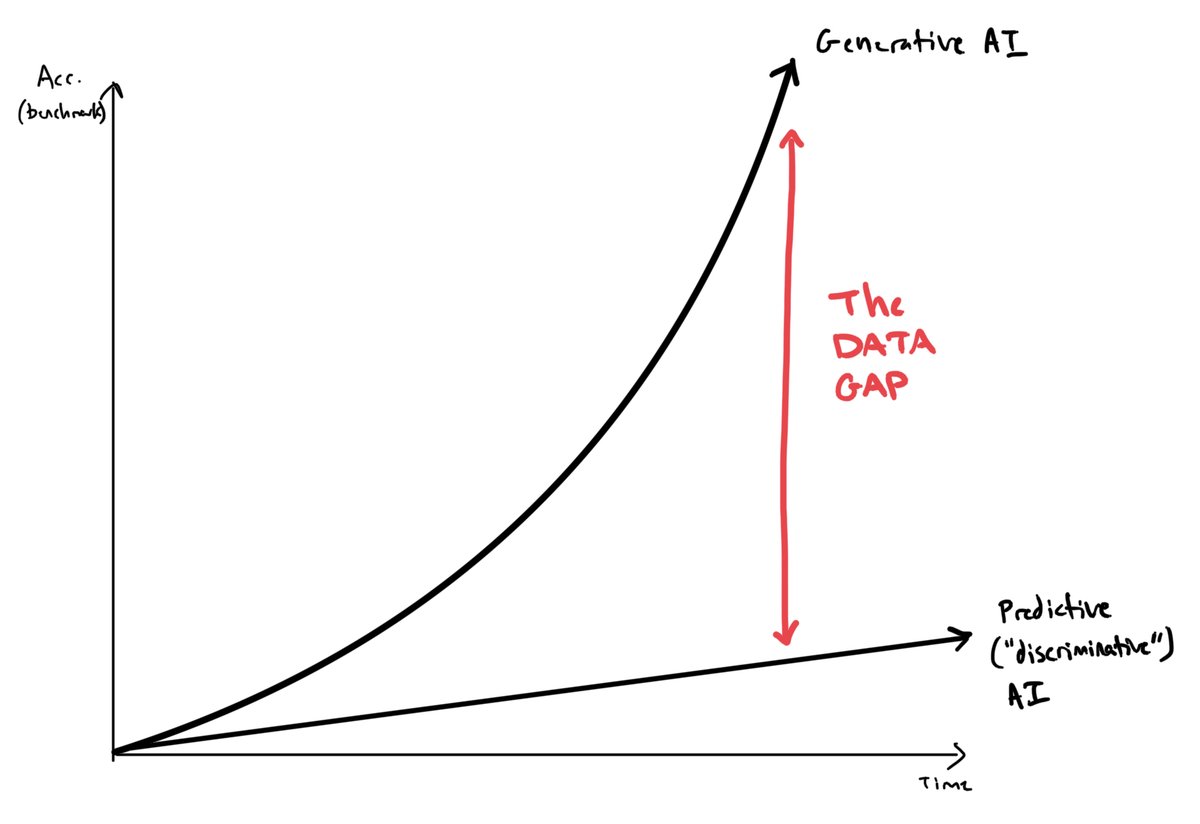

4/ *The durable moat is data*

- Recent progress has shown that *data* is the secret sauce and real differentiator for FMs (e.g. ChatGPT is just GPT-3 fine-tuned with human feedback)

- Training on open web data can only get you so far for complex, enterprise-specific tasks.

- Recent progress has shown that *data* is the secret sauce and real differentiator for FMs (e.g. ChatGPT is just GPT-3 fine-tuned with human feedback)

- Training on open web data can only get you so far for complex, enterprise-specific tasks.

5/

- Examples of FMs trained on proprietary, domain-specific data like BloombergGPT arxiv.org/abs/2303.17564 show the way forward: enterprises (and people!) using the durable moat of their own private data to build powerful, domain-specific FMs.

- Examples of FMs trained on proprietary, domain-specific data like BloombergGPT arxiv.org/abs/2303.17564 show the way forward: enterprises (and people!) using the durable moat of their own private data to build powerful, domain-specific FMs.

6/

- Recent OSS progress shows that FM model architectures are commoditizing (and standardizing)

- This means proprietary data will soon be the only durable moat.

- This data will be the edge that determines AI success.

- However: developing this data takes effort...

- Recent OSS progress shows that FM model architectures are commoditizing (and standardizing)

- This means proprietary data will soon be the only durable moat.

- This data will be the edge that determines AI success.

- However: developing this data takes effort...

7/ *The last mile generates the real value*

- Getting real, complex AI use cases to production-level accuracy takes significant data labeling & development!

- See arxiv.org/abs/2302.10724, opensamizdat.com/posts/chatgpt_… - ChatGPT loses to specialized fine-tuned models 75%+ of the time!

- Getting real, complex AI use cases to production-level accuracy takes significant data labeling & development!

- See arxiv.org/abs/2302.10724, opensamizdat.com/posts/chatgpt_… - ChatGPT loses to specialized fine-tuned models 75%+ of the time!

8/

- Fine-tuning significantly out-performs ZSL/prompt approaches (e.g. see arxiv.org/pdf/2012.15723…)

- Even the OpenAI docs recommend min. 100 labeled examples/class for fine-tuning (for a 100-way classifier = 10K+ examples!), which empirical data shows is often a significant min

- Fine-tuning significantly out-performs ZSL/prompt approaches (e.g. see arxiv.org/pdf/2012.15723…)

- Even the OpenAI docs recommend min. 100 labeled examples/class for fine-tuning (for a 100-way classifier = 10K+ examples!), which empirical data shows is often a significant min

9/

- However, this data development is not just a chore- it's the source of a powerful flywheel.

- The more you fine-tune, the more powerful your FM becomes for your data & workloads, and the more value accrues!

- The "base" FM will matter less and less- as long as you own it.

- However, this data development is not just a chore- it's the source of a powerful flywheel.

- The more you fine-tune, the more powerful your FM becomes for your data & workloads, and the more value accrues!

- The "base" FM will matter less and less- as long as you own it.

10/ Tl/dr: the future will be "GPT-You", not GPT-X

- Closed APIs aren't defensible

- The durable moat is data

- The last mile generates the real value

Stay tuned for more on what we're building @SnorkelAI to support developing FMs on *your* data, for *your* tasks...

- Closed APIs aren't defensible

- The durable moat is data

- The last mile generates the real value

Stay tuned for more on what we're building @SnorkelAI to support developing FMs on *your* data, for *your* tasks...

• • •

Missing some Tweet in this thread? You can try to

force a refresh

Read on Twitter

Read on Twitter