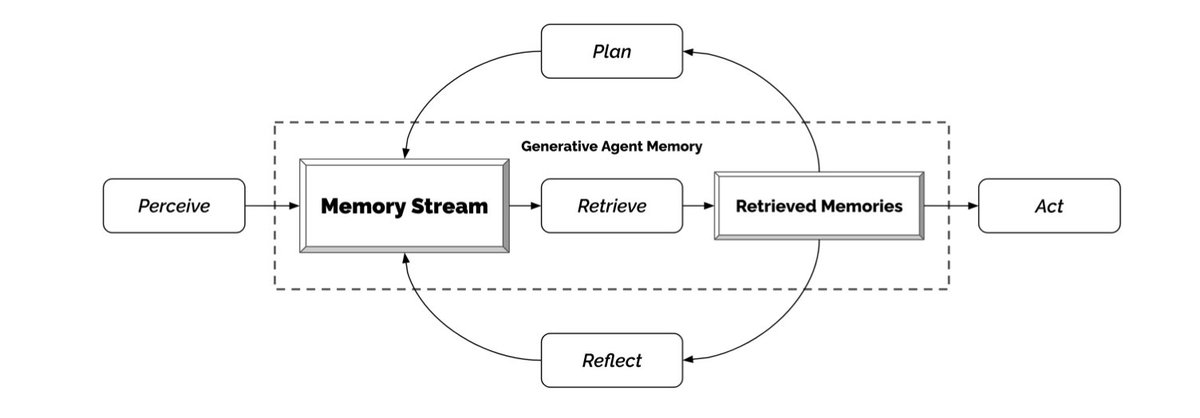

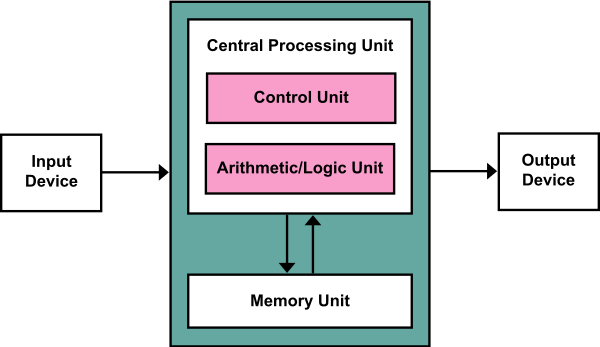

Self-prompting AI agents ahead: recorded memory is key. A new natural language computing paradigm? Looking back, John von Neumann (and Konrad Zuse) stand at the base of a convergent evolution.

Sorry folks, my longread (16k characters) went behind the paywall 🧱

1/5

heise.de/hintergrund/Au…

The natural language abstraction covers more than just agents, so "scaffolded LLMs" (@BerenMillidge) is the better term here. Agents suit only certain types of tasks.

However, other programs can also be written in natural language. Millidge draws interesting economic conclusions:

2/5

Foundation model providers like OpenAI occupy the same niche as the large chip manufacturers did in the age of digital computers. The business model is comparable: training a foundation model comes at huge cost, much like building a chip factory, and the providers then sell API calls as a commodity product.

3/5

They sell API calls at large volume: high margins, but substantial costs. @BerenMillidge forecasts: "We should expect a consolidation into a few main oligopolic players." Massive fixed costs and fierce competition, as in the semiconductor industry: "They never print money like SAAS."

4/5

There might never be one API to bind them all. "A number of heterogeneous calls to many different language models of different scales and specializations" seems the most plausible scenario at this point. I recommend reading @BerenMillidge 🌱

beren.io/2023-04-11-Sca…

5/5
