🧠 The Anatomy of Autonomy 🤖

The fifth killer app of AI is Autonomous Agents.

Presenting

- Summary of #AutoGPT / @babyAGI_

- The 5 stages of "brain" development it took to get from Foundation Models to Autonomous Agents

- Why Full Autonomy is like "Full Self Driving"!

Begin:

(this is the obligatory threadooor TLDR of my latest newsletter post, hop over if you like my long form work: latent.space/p/agents)

I think there have been 4 "Killer Apps" of AI so far.

"Killer App" as in:

- unquestionable PMF

- path to making >$100m/yr

- everybody holds it up as an example

They are:

1. Generative Text

2. Generative Art

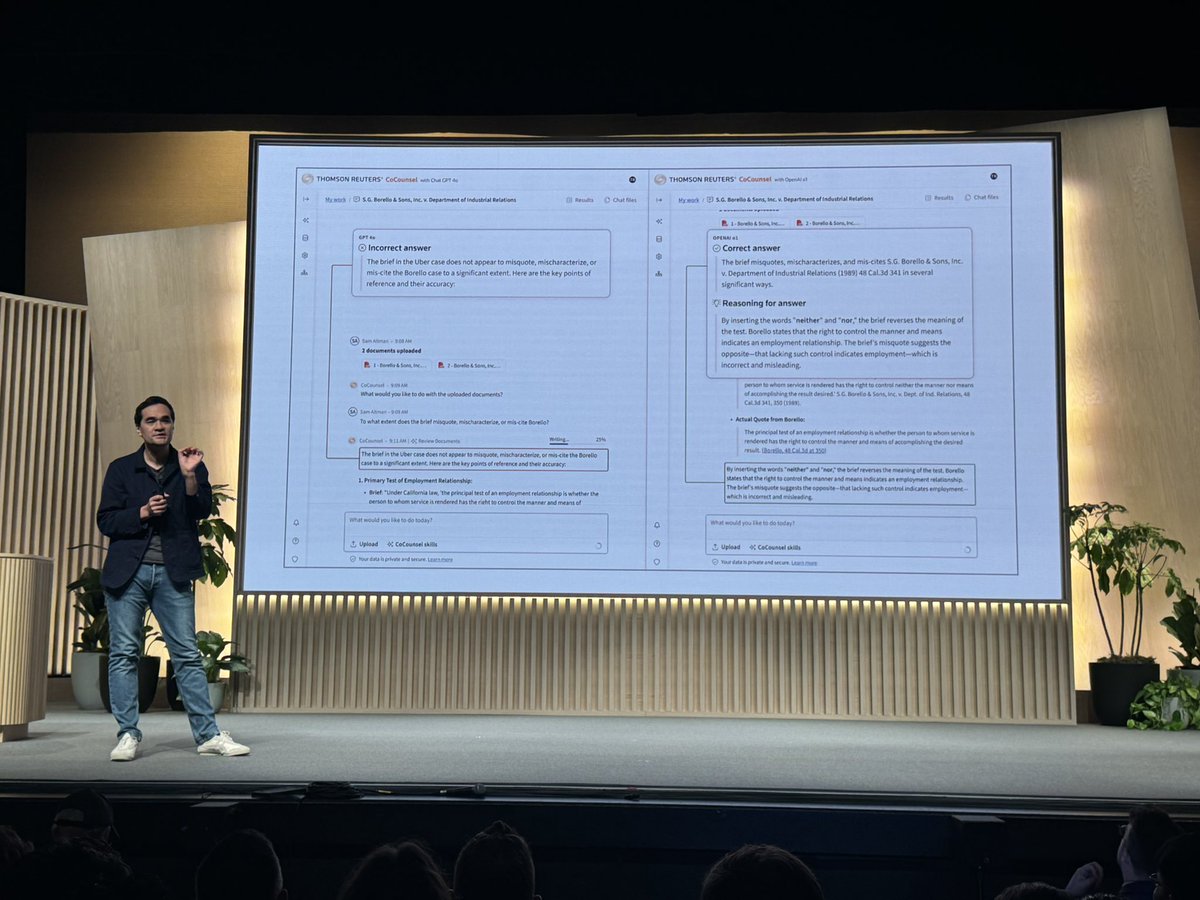

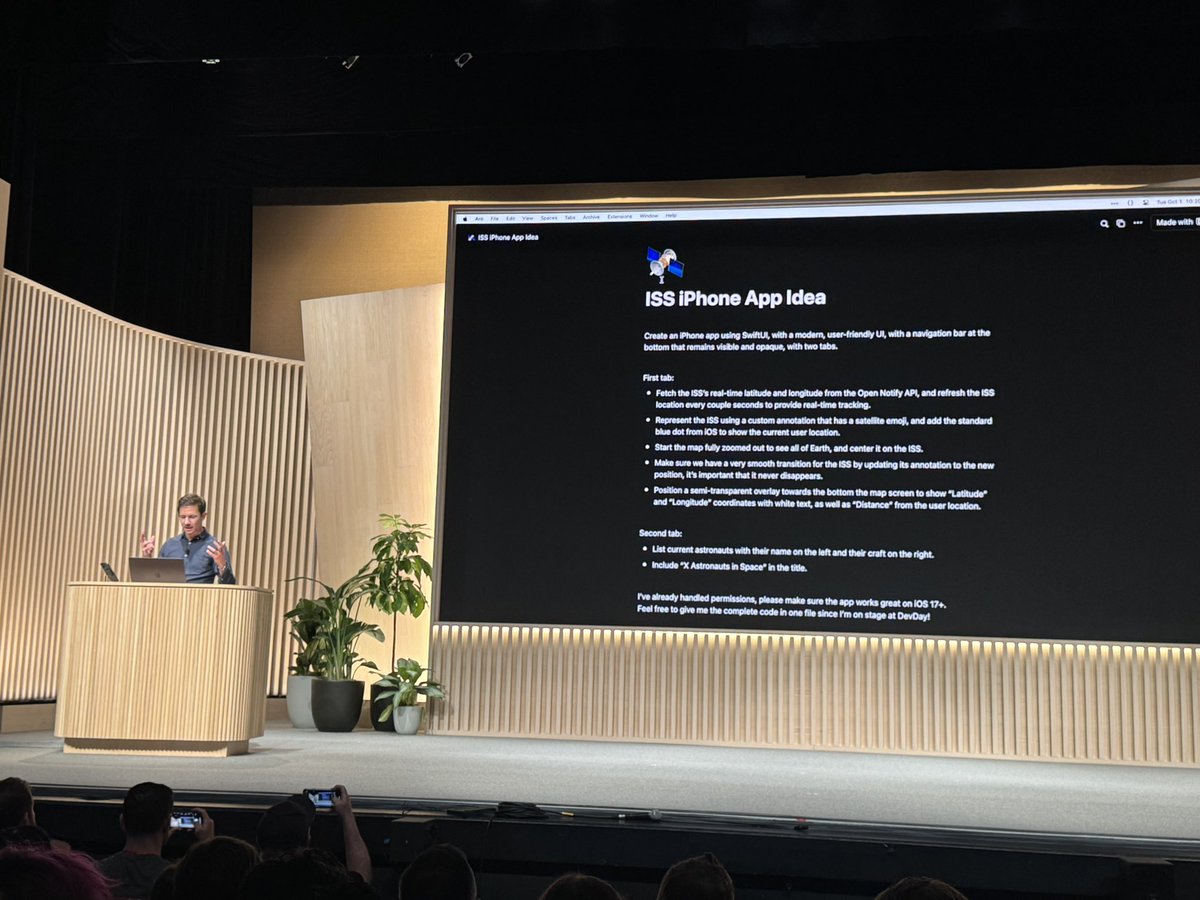

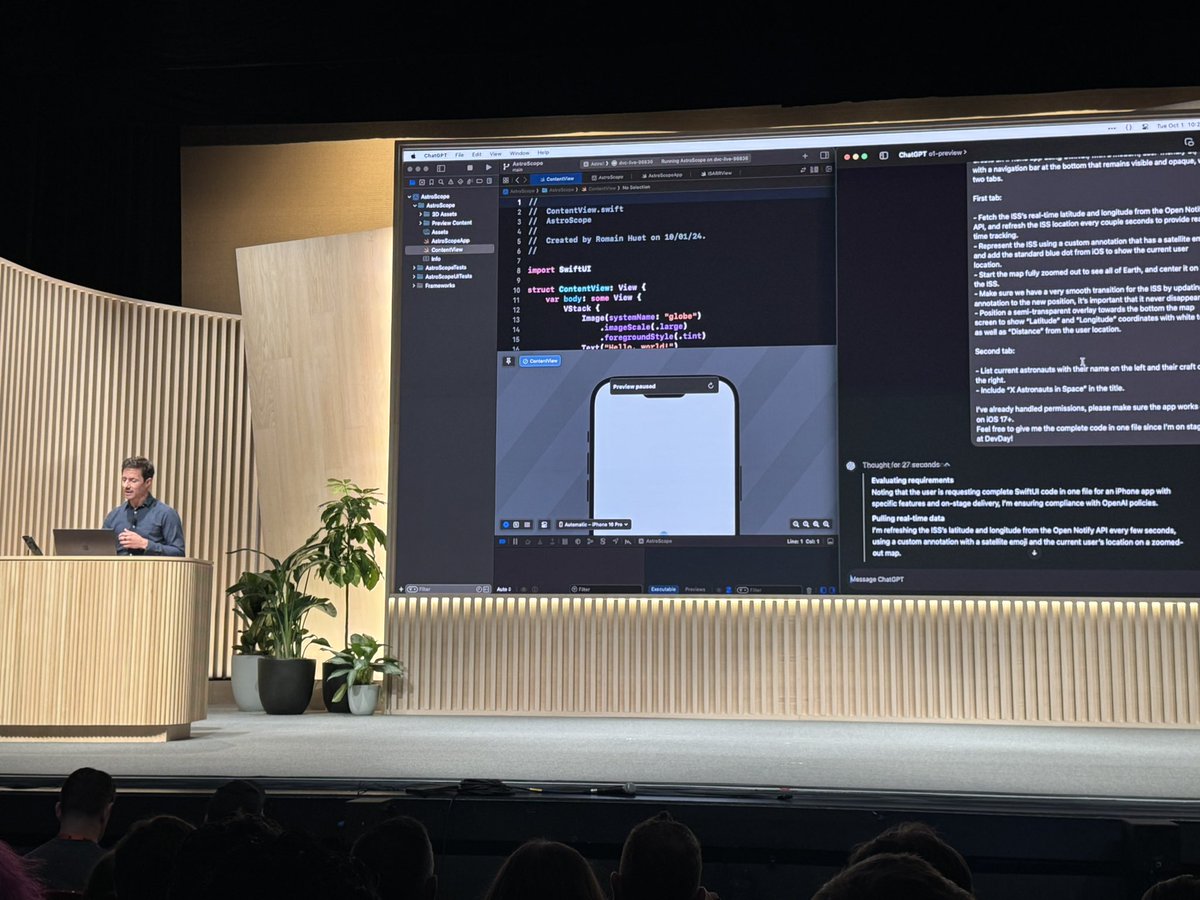

3. Copilot for X

4. ChatGPT

We're seeing the birth of Killer App #5

🤖 What are AutoGPT and BabyAGI, and why are they "the next frontier of prompt engineering"?

Take the biggest open source AI projects you can think of. I don't care which.

AutoGPT **trounces** all of them. It's ~2 weeks old and it's not even close (see below).

And yet: AutoGPT isn't a new open source foundation model. Doesn't involve any deep ML innovation or understanding whatsoever. It is a pure prompt engineering win.

The key insight:

- applying existing LLM APIs (GPT3, 4, or others)

- and reasoning/tool prompt patterns (e.g. ReAct)

- in an infinite loop,

- to do indefinitely long-running, iterative work

- to accomplish a high level goal set by a human user

We really mean "high level" when we say "high level":

@SigGravitas' original AutoGPT demo was: “an AI designed to autonomously develop and run businesses with the sole goal of increasing your net worth”

@yoheinakajima's original prompt was an AI to “start and grow a mobile AI startup”

Yes, that's it! You then lean on the AI's planning and self-prompting, and give it the tools it needs (e.g. browser search, or writing code) to achieve its set goal by whatever means necessary. Mostly you can just hit "yes" to continue, or if you're feeling lucky/rich, you can run them in "continuous mode" and watch them blow through your @OpenAI budget.
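To make the loop concrete, here is a minimal Python sketch of the pattern described above. It is not AutoGPT's actual code. `fake_llm`, `TOOLS`, and `run_agent` are hypothetical names, and `fake_llm` is a scripted stand-in for a real LLM API call so the example runs offline. The `continuous` flag mirrors the choice between hitting "yes" each step and letting it run, and `max_steps` is an assumed budget cap.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tools; a real agent would wire these to search, a browser, etc.
TOOLS: Dict[str, Callable[[str], str]] = {
    "search": lambda q: f"(pretend search results for {q!r})",
    "write_file": lambda text: f"(pretend wrote {len(text)} chars)",
}

def fake_llm(goal: str, history: List[Tuple[str, str, str]]) -> Tuple[str, str]:
    """Scripted stand-in for an LLM call.

    Returns (tool_name, tool_input), or ("finish", answer) when done.
    """
    if len(history) == 0:
        return "search", goal
    if len(history) == 1:
        return "write_file", f"plan for: {goal}"
    return "finish", f"done after {len(history)} actions"

def run_agent(goal, llm=fake_llm, continuous=False, approve=input, max_steps=10):
    """Loop: ask the model for the next action, optionally confirm, run it, repeat."""
    history = []
    for _ in range(max_steps):
        action, arg = llm(goal, history)
        if action == "finish":
            return arg, history
        if not continuous:
            # Human-in-the-loop mode: every action needs an explicit "y".
            answer = approve(f"Run {action}({arg!r})? [y/n] ")
            if answer.strip().lower() != "y":
                return "stopped by user", history
        tool = TOOLS.get(action)
        observation = tool(arg) if tool else f"unknown tool {action!r}"
        # The observation is fed back to the model on the next iteration.
        history.append((action, arg, observation))
    return "step budget exhausted", history

result, steps = run_agent("start and grow a mobile AI startup", continuous=True)
print(result)
```

Swapping `fake_llm` for a real model call is where the prompt engineering (and the API bill) comes in; the loop itself stays this small.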

The core difference between them is surprisingly simple:

@BabyAGI_ is intentionally smol. Initial MVP was <150 LOC, and its core loop is illustrated below.

#AutoGPT is very expansive and has what Liam Neeson would call a particular set of skills, from reasonable ones like Google Search and Browse Website, to Cloning Repos, Sending Tweets, Executing Code, and spawning other agents (!)
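For a feel of how small a task-driven loop can be, here is a rough Python sketch of the execute / create tasks / reprioritize cycle. It is loosely modeled on that pattern and is not BabyAGI's source. `execute`, `create_tasks`, and `prioritize` are hypothetical stubs standing in for three separate LLM prompts, and the hard-coded follow-up tasks are made up for illustration.

```python
from collections import deque

def execute(objective, task):
    # Stand-in for the LLM call that performs one task.
    return f"result of {task!r}"

def create_tasks(objective, task, result, pending):
    # Stand-in for the LLM call that proposes follow-up tasks.
    follow_ups = {"make a todo list": ["research the market", "draft a plan"]}
    return [t for t in follow_ups.get(task, []) if t not in pending]

def prioritize(objective, pending):
    # Stand-in for the LLM call that reorders the queue.
    # Here the "priority" is simply: shortest task name first.
    return deque(sorted(pending, key=len))

def run(objective, first_task, max_iterations=5):
    pending = deque([first_task])
    done = []
    for _ in range(max_iterations):
        if not pending:
            break
        task = pending.popleft()
        result = execute(objective, task)
        done.append((task, result))
        pending.extend(create_tasks(objective, task, result, pending))
        pending = prioritize(objective, pending)
    return done

for task, result in run("start and grow a mobile AI startup", "make a todo list"):
    print(task, "->", result)
```

The `max_iterations` cap is an assumption added here so the sketch terminates; without some cap, a loop that keeps creating tasks never stops on its own.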

Fortunately the @OpenAI strategy of building in safety at the foundation model layer has mitigated the immediate threat of paperclips.

Even when blatantly asked to be a paperclip maximizer, BabyAGI refuses.

Incredibly common OpenAI Safety Team W.

https://twitter.com/ESYudkowsky/status/1640511156254289926

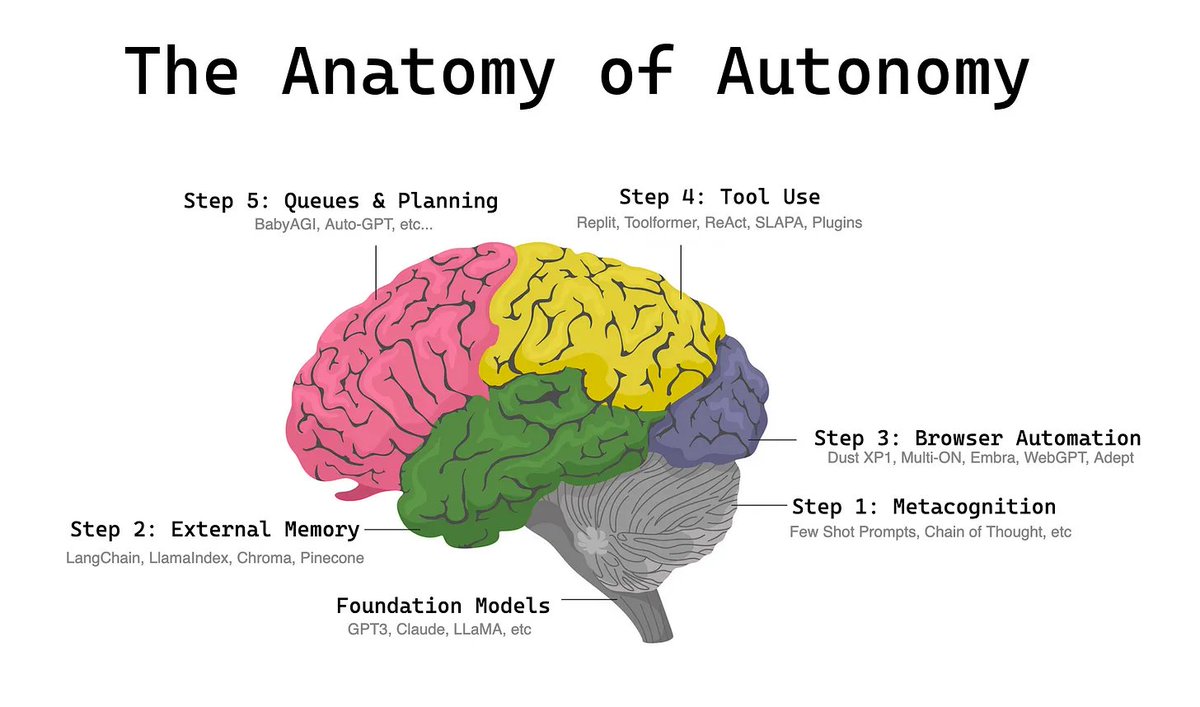

The development of Autonomous AI started with the release of GPT3 just under 3 years ago.

In the beginning, there were Foundation Models. @Francis_YAO_ explains how they provide natural language understanding and generation, store world knowledge, and display in-context learning.

Then we learned to *really* prompt them to improve their reasoning capabilities with @_jasonwei's Chain of Thought and other methods.

Then we learned to add external memory, since you can't retrain models for every use case or for the passage of time. @danshipper notes they are *Reasoning Engines*, not omniscient oracles.
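A toy Python sketch of the external memory idea: store notes outside the model, fetch the relevant ones, and paste them into the prompt. The `Memory` class and `build_prompt` are hypothetical names, and plain word overlap stands in for the embedding similarity a real system would more likely use.

```python
import re

class Memory:
    """Toy external memory: stores notes and retrieves them by word overlap."""

    def __init__(self):
        self.notes = []

    def add(self, text):
        self.notes.append(text)

    @staticmethod
    def _words(text):
        return set(re.findall(r"[a-z0-9]+", text.lower()))

    def search(self, query, k=1):
        q = self._words(query)
        scored = [(len(q & self._words(n)), n) for n in self.notes]
        scored = [s for s in scored if s[0] > 0]  # drop notes with no overlap
        scored.sort(key=lambda s: s[0], reverse=True)
        return [n for _, n in scored[:k]]

def build_prompt(memory, question):
    # Retrieved notes go into the prompt; the model's weights never change.
    context = "\n".join(memory.search(question, k=2)) or "(no relevant notes)"
    return f"Context:\n{context}\n\nQuestion: {question}"

memory = Memory()
memory.add("The launch date moved to June 12.")
memory.add("Our pricing page lists three tiers.")
print(build_prompt(memory, "When is the launch date?"))
```

The model only has to reason over what is put in front of it, which is the "reasoning engine, not oracle" framing in practice.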

Then we handed the AI a browser, and let it both read from the Internet as well as write to it. @sharifshameem and @natfriedman's early explorations were a precursor of many browser agents to come.

Then we handed more and more and more tools to the AI, and let it write its own code to fill in the tools it doesn't yet have. @goodside's version of this is my favorite: "You Are GPT-3, and You Cannot Do Math" - but giving it a @replit so it can write whatever python it needs to do math. Brilliant.
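A small Python sketch of the "cannot do math, so hand it a tool" idea. It assumes a made-up convention where the model writes `CALC(...)` whenever it needs arithmetic; `calc` and `resolve_tool_calls` are hypothetical names, and this is not @goodside's prompt or Replit's API. The evaluator walks the syntax tree rather than calling `eval()` on model output.

```python
import ast
import operator
import re

# Only plain arithmetic is allowed; anything else is rejected.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.USub: operator.neg,
}

def calc(expr):
    """Evaluate +, -, *, / on numeric literals without using eval()."""
    def ev(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.operand))
        raise ValueError("unsupported expression")
    return ev(ast.parse(expr, mode="eval").body)

def resolve_tool_calls(model_output):
    """Replace each CALC(...) marker (a made-up convention) with its value."""
    return re.sub(
        r"CALC\(([^()]*)\)",
        lambda m: str(calc(m.group(1))),
        model_output,
    )

print(resolve_tool_calls("The total is CALC(1234 * 5678)."))
# prints: The total is 7006652.
```

Letting the model write and run arbitrary Python is far more powerful than this, and correspondingly riskier; the whitelist above is the cautious end of that spectrum.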

@johnvmcdonnell's vision of Action-driven LLMs is here.

What's the last capability needed for Autonomous AI?

Planning.

Look at the 4 agents at work inside of BabyAGI. There's one of them we've never really seen before.

We are asking the LLM to prioritize, reflect, and plan ahead - things that @SebastienBubeck's team (authors of the Sparks of AGI paper) specifically noted that even GPT-4 was bad at.

This is the new frontier, and the new race. People with the best planning models and prompts will be able to make the best agents. (and games!)

@hwchase17's recent LangChain Agents webinar (excellent summary here ) also highlighted the emerging need to orchestrate agents as they run into and communicate with each other.

Is all this just for fun? Or a serious opportunity?

I argue that it is a serious opportunity. Civilization advances by extending the number of operations we can perform without thinking about them. By building automations, and autonomous agents, we are extending the reach of our will.

Autonomous AI may be further away than it appears in this funhouse mirror, though.

Self-Driving Cars have been perpetually "5 years away" for a decade. We're seeing that now with Autonomous Agents - 2023 AI Agents are like 2015 Self Driving Cars.

AutoGPT is more like "Level 1 Autonomy": it needs a lot of help to do things more slowly than we'd do them without its help anyway.

But still, the Level 5 future is clearly valuable.

excellent, short, and overlooked @mattrickard post about how humans convey information in natural language

i think everyone building agents will eventually have to come to terms with how they react to the different kinds of human feedback and this is the first good model ive seen

That's a relatively uncontroversial prediction. One thing I neglected to address tho is "how does this give insight towards AGI?"

I avoid most AGI debates because of the difficulty of definition, but if it wasn't obvious from my human brain analogy, I do think developing a good planning/priorities AI gets us very very far in the AGI process.

We will probably need a different architecture than autoregressive generation to do this, but then again, we're *already making* a different architecture as we add things like memory and tools/browsers.

Assuming we solve this, I have a few related candidates for next frontiers:

- hypothesis forming

- symbolic, self pruning world model

- personality

- empathy and full theory of mind

(i touched on a few in )

https://twitter.com/blurb_istheword/status/1648557177634684928?s=20

Lol I just got done saying that LLMs cant do planning very well and so we are safe until GPT5 drops…

and then 1 week later Cornell kids come along and point out that you can just give LLMs a planning tool and it Just Works lmao 🤦‍♂️

never underestimate AI progress, holy hell

https://twitter.com/mathemagic1an/status/1651724212971929600
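To show what "give the LLM a planning tool" can mean in the simplest case, here is a toy Python planner: a breadth-first search that returns the shortest sequence of actions from a start state to a goal. This is an illustrative stand-in and not the method from the linked work. `plan` and `ACTIONS` are hypothetical names, and the number puzzle is made up. The assumption is that the model's job shrinks to describing the problem in a structured form and reading the plan back.

```python
from collections import deque

def plan(start, goal, actions):
    """Breadth-first search: shortest list of action names, or None if unreachable."""
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        state, path = frontier.popleft()
        if state == goal:
            return path
        for name, step in actions.items():
            nxt = step(state)  # None means the action is not allowed here
            if nxt is not None and nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, path + [name]))
    return None

# Toy problem: reach 10 from 1 using "double" and "add_one", never exceeding 20.
ACTIONS = {
    "double": lambda n: n * 2 if n * 2 <= 20 else None,
    "add_one": lambda n: n + 1 if n + 1 <= 20 else None,
}

print(plan(1, 10, ACTIONS))
# prints: ['double', 'double', 'add_one', 'double']
```

The search guarantees a shortest plan for this toy state space, which is exactly the kind of guarantee a next-token predictor does not give you on its own.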

@lilianweng as always comes in with the definitive survey:

https://twitter.com/lilianweng/status/1673535600690102273

very cool to see @sashaorloff use the 5 level autonomy framing to describe his product - more agent type companies should use it

https://twitter.com/sashaorloff/status/1686807083243782144?s=20
