𝑨𝑹𝑬 𝒀𝑶𝑼 𝑷𝑨𝒀𝑰𝑵𝑮 𝑨𝑻𝑻𝑬𝑵𝑻𝑰𝑶𝑵?

6th of December 2017 (first posted to arXiv in June that year)... over FIVE years ago, researchers introduced

🔥 𝑻𝒓𝒂𝒏𝒔𝒇𝒐𝒓𝒎𝒆𝒓𝒔 🔥 (the "T" in GPT)

arxiv.org/pdf/1706.03762…

What even 𝑨𝑹𝑬 they??

👀🕳️🐇🧵

#100DaysOfChatGPT

Explore Emerging Tech at @AtmanAcademy

The truth is, I'm still trying to wrap my head around it...

But got a little bit further last night with the help of #ChatGPT.

I've heard someone describe it as being like a Rubik's Cube (?!), so I knew there was a sort of 3D component to it conceptually.

Let's hop on in!

👀🕳️🐇🧵

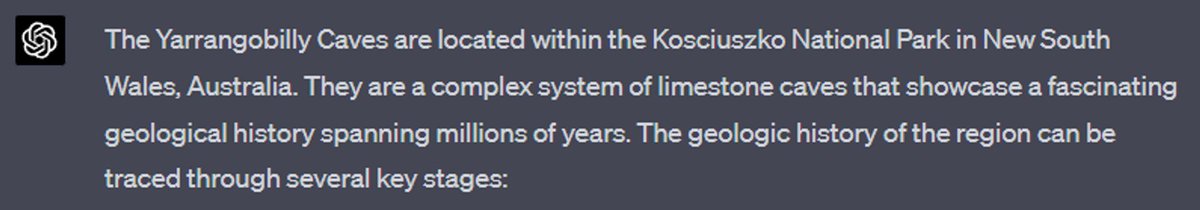

𝑳𝑨𝒀𝑬𝑹𝑺

𝑯𝑬𝑨𝑫𝑺

𝑻𝑶𝑲𝑬𝑵𝑺

Remember these three... that's about all I could take away from all these WORDS

It was a bit more than I was ready to try and digest.

👀🕳️🐇🧵

"Explain this like I'm 15"... not like I'm 5, but not like I'm a college graduate either.

THAT's more like it - I can actually follow these!

(Can you?)

Again - say it with me:

𝑳𝑨𝒀𝑬𝑹𝑺

𝑯𝑬𝑨𝑫𝑺

𝑻𝑶𝑲𝑬𝑵𝑺

👀🕳️🐇🧵

So it's all starting to come together (or come apart?):

More (and larger) Layers,

More Attention Heads and a

Bigger Context Window (more input tokens)

Give the newer models superior performance.
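
To put rough numbers on that, here is a back-of-the-envelope sketch in Python. It assumes the common rule of thumb that each layer holds about 12 × d_model² weights, and it ignores biases, layer norms and position embeddings, so treat the totals as estimates only. The function name `approx_params` is made up for this example; the two configurations are the publicly reported ones for GPT-2 small and GPT-3.

```python
def approx_params(n_layers, d_model, vocab_size=50257):
    """Rough parameter count for a GPT-style stack of layers.

    Assumption: each layer holds about 12 * d_model^2 weights
    (4 * d_model^2 for attention, 8 * d_model^2 for the feed-forward part).
    Biases, layer norms and position embeddings are ignored.
    """
    per_layer = 12 * d_model ** 2
    embeddings = vocab_size * d_model  # one vector per token in the vocabulary
    return n_layers * per_layer + embeddings


# Publicly reported settings: layers, layer width, attention heads,
# and context window (maximum number of input tokens).
configs = {
    "GPT-2 small": dict(n_layers=12, d_model=768, n_heads=12, context=1024),
    "GPT-3 175B": dict(n_layers=96, d_model=12288, n_heads=96, context=2048),
}

for name, cfg in configs.items():
    total = approx_params(cfg["n_layers"], cfg["d_model"])
    print(f"{name}: {cfg['n_layers']} layers, {cfg['n_heads']} heads, "
          f"{cfg['context']} tokens of context, ~{total / 1e9:.2f}B parameters")
```

The estimate lands close to the advertised sizes (roughly 124 million and 175 billion parameters), which suggests most of the growth comes from stacking more layers and making each one wider.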

👀🕳️🐇🧵

That's probably enough to chew on for tonight.

To (try to) summarise:

Layers - stacked processing steps; each one builds a more abstract view of the context

Heads - give the "weighting" (or attention) to the words/tokens

Tokens - words broken up into whole words and sub-words for processing

So yeah - bigger is better. 🤓
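
For anyone who wants to poke at the three ideas in code, here is a toy sketch in plain Python. It is a simplification, not how GPT is actually built: the "tokenizer" just splits on spaces (real models use sub-word tokenizers), the token vectors are random, and the learned projection matrices, masking and feed-forward parts of a real layer are left out. The names `attention_head` and `layer` are invented for this example.

```python
import math
import random


def softmax(xs):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention_head(queries, keys, values):
    """Scaled dot-product attention for ONE head.

    Each argument is a list of vectors, one vector per token.
    Returns the blended vectors and the attention weights.
    """
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # How strongly does this token "look at" every token?
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        weights.append(w)
        # Blend the value vectors using those weights.
        outputs.append([sum(wj * v[i] for wj, v in zip(w, values))
                        for i in range(len(values[0]))])
    return outputs, weights


def layer(x, n_heads):
    """One toy LAYER: every HEAD attends over its own slice of each token vector."""
    d_head = len(x[0]) // n_heads
    merged = [[] for _ in x]
    for h in range(n_heads):
        part = [vec[h * d_head:(h + 1) * d_head] for vec in x]
        out, _ = attention_head(part, part, part)
        for t, o in enumerate(out):
            merged[t].extend(o)
    # Residual connection: add the attention output back onto the input.
    return [[a + b for a, b in zip(vec, m)] for vec, m in zip(x, merged)]


random.seed(0)
tokens = "the rabbit hops down the hole".split()  # toy TOKENS (whole words only)
d_model, n_heads, n_layers = 8, 2, 3
# Random stand-ins for learned token embeddings.
vocab = {tok: [random.uniform(-1, 1) for _ in range(d_model)]
         for tok in dict.fromkeys(tokens)}
x = [vocab[t] for t in tokens]
for _ in range(n_layers):
    x = layer(x, n_heads)
print(len(x), "tokens, each a vector of size", len(x[0]))
```

Each pass through `layer` mixes information between tokens, so after a few layers every token's vector carries something about its neighbours. That is the rough intuition behind layers enabling context abstraction.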

👀🕳️🐇

Day 93 of #100𝑫𝒂𝒚𝒔𝑶𝒇𝑪𝒉𝒂𝒕𝑮𝑷𝑻: exploring, experimenting and growing through interactions with ChatGPT.

If this content inspires you, please: Comment/Like/Retweet/Follow.

GRAB THE CHEAT SHEET from the ChatGPT workshop here:

AtmanAcademy.io

Hop on in!

👀🕳️🐇
