I think there needs to be a public-interest influence ops & anti-disinfo institution.

I've been going through the basis for it in my head the past few days; let me see what people think.

(🧵)

Start with the idea that disinformation & digital radicalization move fast and unpredictably.

Narratives shift in days, sometimes hours; in the past week in #NAFO, we've seen disinfo about Russian strikes on underground bunkers, Patriot batteries and downed Russian aircraft.

As a social problem, disinformation belief cuts across government, media, private industry and NGOs.

This is, I think, by design. It's not just "flooding the zone" vertically, it's also flooding it horizontally, so that it's always someone else's problem or area of expertise.

Digital movements like #NAFO, or for that matter #jagarhar (look it up), or even Black Twitter uniquely address problems of disinformation belief and potentially solve them, long-term, by creating social institutions that "market" civic dubiety and pro-social engagement.

Digital anti-disinfo movements are also inherently unpredictable and insecure. I've written about this a bit: we have to self-police, yes, but we also have to assume that #NAFO is infiltrated.

If you were in Russian public diplomacy or disinfo (probably both), wouldn't you?

Funding anti-disinformation efforts is also a unique problem. I've noticed this while looking for a job lately; all the companies are either small startups or large prime or secondary contractors for the federal government.

It's because of how disinfo works as a social problem.

The problem is that disinformation belief is a generalized, society-wide problem with specific personal pain points.

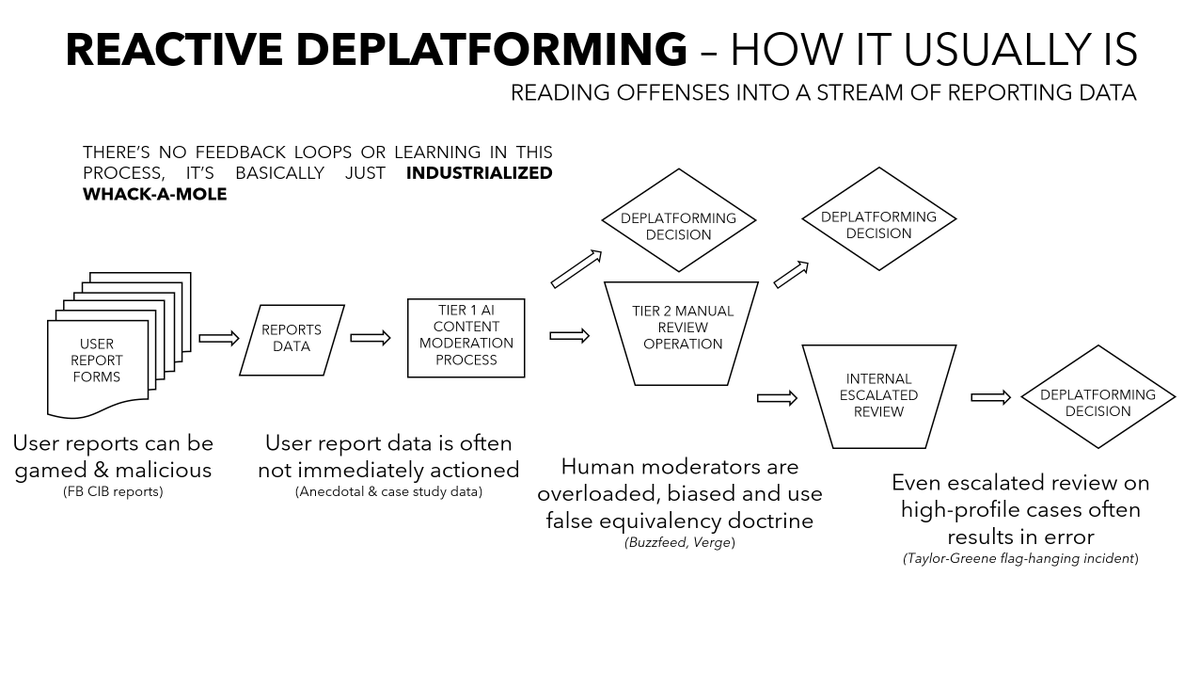

Think of it as basically an Elon problem he's outsourcing to users. Facebook does the same thing, if more discreetly (and with AI that gets it wrong constantly).

That means that anti-disinformation orgs have to have transparent funding that doesn't set an agenda in order to maintain credibility.

This is particularly important because if you just take whatever donations you can get, that opens up avenues of control for foreign agents.

So disinfo and extremism, as a social problem, need a specific type of entity to address them. As I've sketched out, it needs to be:

- cross-disciplinary

- authentically grassroots

- reactive and able to shift targets rapidly

- proactive and conducive to long-term social stability

Casting the funding issue in terms of a crowd-funded 501(c)(3) makes it solvable, if difficult.

The key idea is basically creating a publicly funded group that runs transparent anti-disinformation ops and anti-extremism interventions.

That sounds fancy, so examples help.

Things I want to do here are, concretely:

- research & target vulnerable Republicans in '24 General Election races with digital ads & volunteer influence ops targeting their states/districts

- create public awareness of and lobby for sanctions against state-sponsored Russian disinformation outlets like RT, running the "follow the money" playbook that Damian Williams established for SDNY's sanctions enforcement cases

- assist in the development and distribution of webapps for activists to enhance their ability to produce & distribute anti-disinfo content like memes, threads, reusable "copypasta" and Article 5 calls against high-priority disinfo targets

- create a compliance database of social media extremists, charting recurrence, efforts to remove & speed of removal. Social media companies "externalize" their content moderation by making users do it; we don't know how much that's costing society yet, but this will find that out.

Basically the solution as I see it starts to look like a "white hat", radically transparent, crowd-funded digital influence shop.

You know that thing in my Twitter bio about being a "one-man intelligence agency funded on Patreon, seriously"?

The person who said that was a whistleblower lawyer who wrote sanctions law and works with scary (I am not using that word lightly) intelligence-community lawyers.

He said that because I developed confidential human source intel that no one else had.

By, like, asking people.

I think of it as sort of funnily modest, like, haha, look how seriously I take myself.

Real talk, though, it doesn't need to be a one-man shop, but... there does need to be something like that, I think.

A capability that society has, that's how I'm thinking of it lately.
