Currently watching the conference talk for CCTEST: Testing and Repairing Code Completion Systems.

Paper: arxiv.org/pdf/2208.08289…

Paper: arxiv.org/pdf/2208.08289…

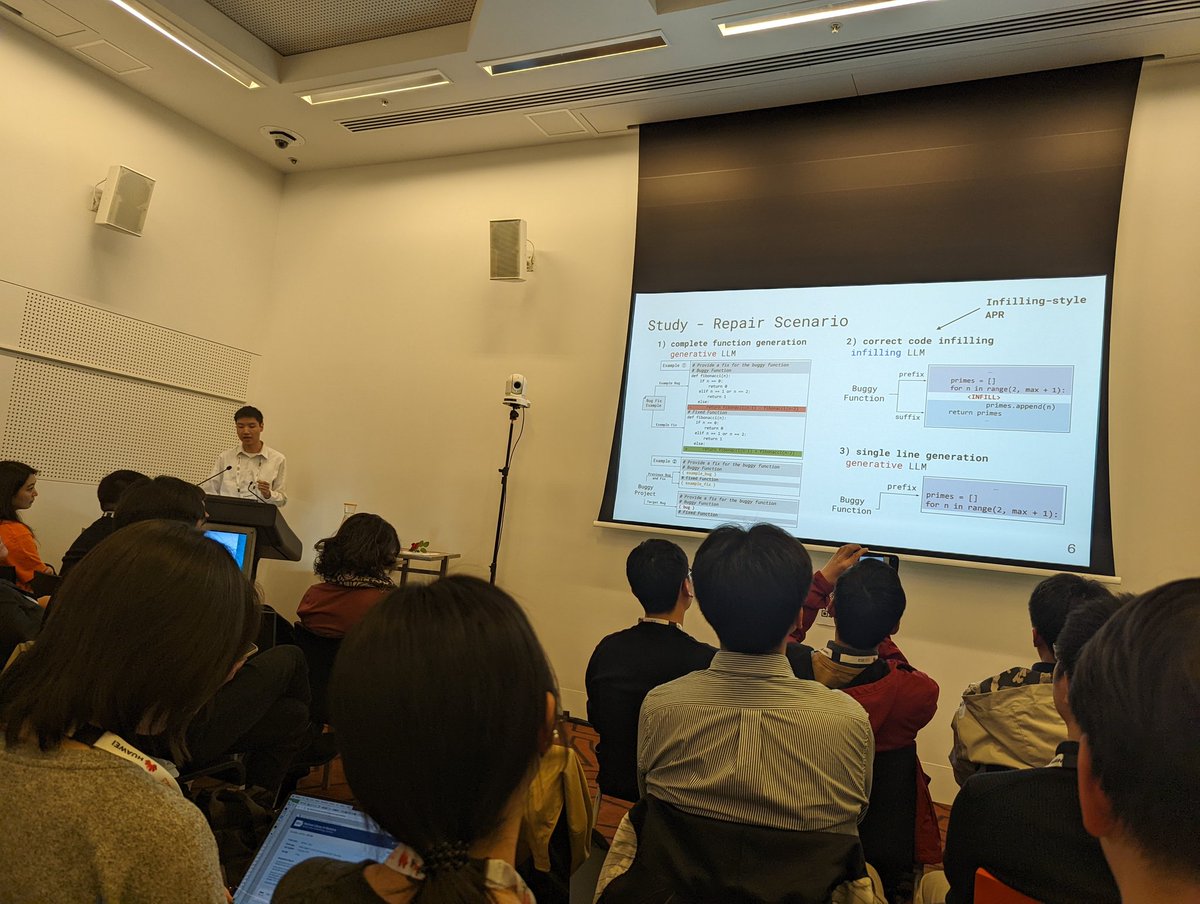

Motivation of this is how we can actually find and fix LLM-generated buggy code completions

Important problem. I'm not yet following the approach. Seems to involve some kind of mutation testing. I will need to read this. They are talking about why unit tests aren't good oracles, which I buy. But what is their oracle? Still lost on that

It's cool that they're actually catching bugs in LLM-generated code, though. That's important. I think I am still unclear about the repair approach and whether I'd trust it though---especially since they seem to use metrics like BLEU I don't trust

They did some human evaluation of found bugs, which is definitely good

It'd be good to, at the very least, set a higher standard of testing for these systems, whether or not I buy the repair part. I think I am too scared that automatic repair could introduce bugs in the LLM-generated code that are not caught by the testing process

Anyways, it's good people are thinking about this problem seriously

• • •

Missing some Tweet in this thread? You can try to

force a refresh

Read on Twitter

Read on Twitter