AI won't just replace interface designers. It will replace interfaces.

A vision for digital products in the age of AI 🧵👇

1/31

2/ I'm often asked how AI will change design. There's a lot to contemplate:

What design skills are future-proof?

How will AI augment a designer's job?

What parts of design will be automated?

Will AI replace designers altogether?

Truthfully, these are the wrong questions to ask.

3/ To better understand the role of the designer, examine the thing being designed. Reframed:

What interfaces are future-proof?

How will AI augment a user's experience?

What interfaces will be automated?

Will AI replace interfaces altogether?

Now imagine the role of a designer.

4/ Design's objective has always been to understand intent, and create patterns that make it easy to achieve it.

The shapes and colors on a screen are the means, never the end.

Good UX makes things easy and intuitive—I know what button you want, and I'll put it within reach.

5/ But *great* UX anticipates + fulfills needs—I know what you want and I'll do it for you without having to press any buttons.

As Alan Cooper once said:

"As great as your interface is, it would be better if there were less of it."

6/ Uber is a great example of this.

The best part of Uber's app isn't the clean layout, smooth shadows, beautiful illustrations, or snappy animations.

They're world-class, but those aren't the reasons you use it.

7/ Uber's value is what you don't see. Take a moment to remember your last ride.

How did you pay?

8/ You get in and out, sometimes without even speaking. You don't have to do anything else.

You focus on your destination, and the app takes care of everything in the background.

The payment is invisible. No card swiping, no checkout or confirmation button.

9/ The same phenomenon happens with the Nest.

You dial it a few times, and it just knows to reduce energy when you've left for work and to make it colder while you sleep.

The interface becomes mostly invisible after a little bit of time.

10/ In both of these examples, the products are *magical* because they anticipate our needs and take action on our behalf.

We can think less about the buttons we need to press and just enjoy the desired outcome (i.e. getting to our destination, a comfortable temperature).

11/ Sure, designers create visual delight along the way.

But the purpose of an interface is to instruct a machine to do what we want it to do (e.g. send a message), give us a machine's status (e.g. "message sent"), or present us with relevant information (e.g. today's weather).

12/ What happens if the machine can give itself instructions? The way it does with your thermostat but for all other aspects of your life?

What buttons will you need to press?

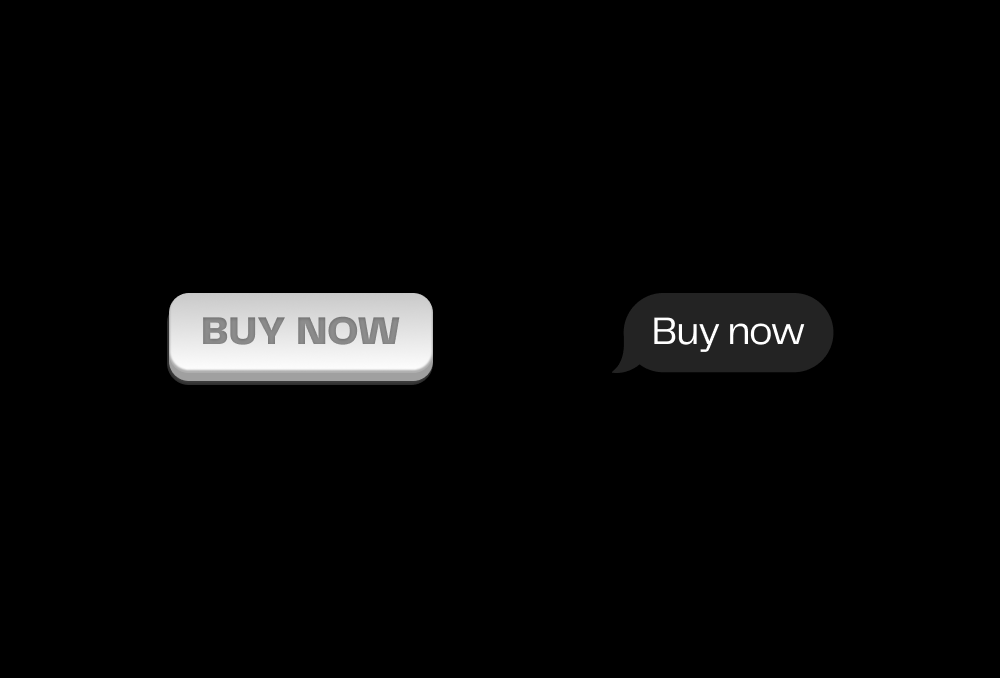

13/ Right now, we're in a period of transition in software: moving from digital interfaces (buttons on touch screens) to conversations.

Conversations are the most natural way for us to provide instructions.

14/ Since the beginning of computers, we've pressed buttons on screens bc it's the only way to communicate intent.

The buttons and the screens have evolved many times over. They've become more accessible, more mobile, more beautiful, and more dynamic.

But they're still buttons.

15/ And today, those buttons can do seemingly miraculous things. Press this button, talk to mom. Press that one, watch a movie. And that one? Make food magically appear at your door.

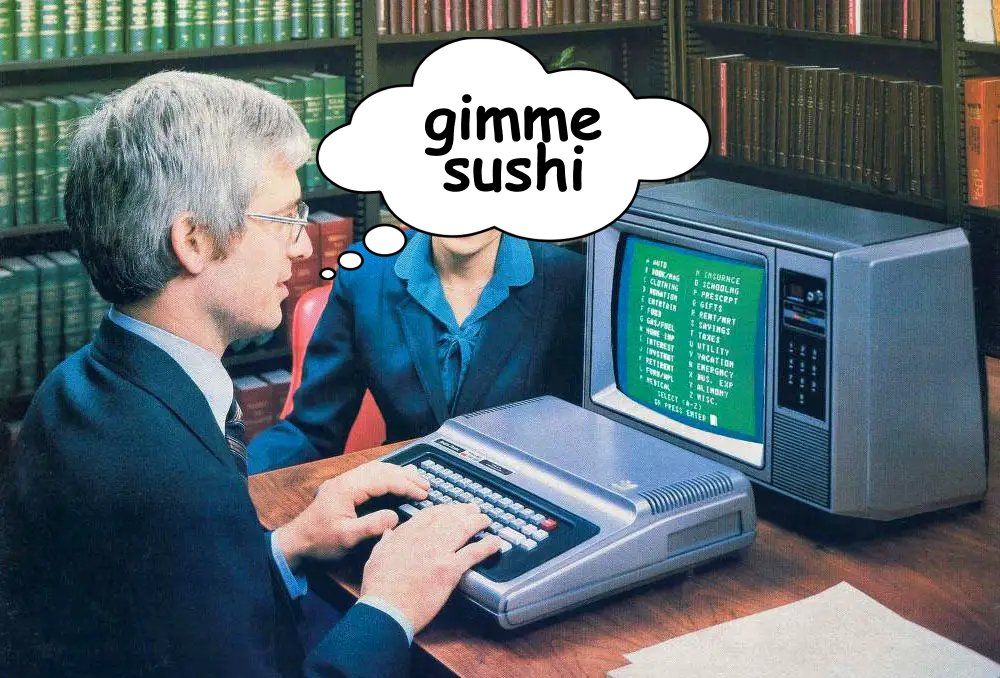

16/ Let's quickly break down the buttons you need to press for sushi:

1. Tap DoorDash icon

2. Tap "Sushi"

3. Tap a nearby restaurant

4. Tap California Roll

5. Tap add to cart

6. Checkout (roughly 3-4 more taps)

If you do this often, it should take 1-2 min.

17/ It's still amazing to me, absolutely marvelous, that I can make sushi magically appear with so little effort. But wait, there's more.

Now imagine instead just typing or speaking "order a California Roll."

That's possible now. Today. Right now.

18/ It turns a 2-minute task into a 2-second task. And it can be trained to make its own decisions about filtering for restaurant ratings, item preferences, and price range.

Not only is the input faster and more natural, but it's fewer inputs, less browsing, less energy.

19/ Let's take this one step further.

If an AI can understand intent through words AND understand intent through behavior patterns (think: Nest), then even speaking is an inefficient input.

If I order sushi every Sunday night at 7pm, why wouldn't an AI preemptively order for me?

20/ Similarly, imagine this:

+ Groceries ordered based on your lifestyle

+ Routes mapped as soon as you leave the door

+ Workouts planned in real-time based on your biometrics

Every product predicts your intent and does the repetitive, inconvenient button press on your behalf.

21/ And instead of you pressing buttons to initiate an action, you press a button or speak intent to alter, edit, or abort actions.

Apple Watch messaging is a great example of this.

You speak into the device, it drafts the message and gives you the option for "Don't Send."

22/ This vision comes with caveats: ofc not all interfaces should be invisible.

Most of the task-based UIs we use day to day can be conveniently and delightfully automated, like the examples I've provided.

Others are better with more control—like video games.

23/ Video games, for example, are interfaces designed for experiential interactions, not tasks. The more control you have over the machine, the better.

And although it might be for another thread, it still remains to be seen whether a human or AI designs and generates the UI.

24/ A general rule of thumb for designing interactions could be:

Invisible = task-based

Visible = experience-based

25/ This is where the role of the designer in the age of AI emerges:

To determine what interactions to *craft* and what to *automate*

As much as their role is about determining how the interaction looks.

26/ Because, the truth is, there are valid concerns about what the other side of this world looks like:

+ Harmful AI mistakes

+ Loss of human agency

+ Surveillance concerns

+ And potential manipulation risk

There's a lot that needs to be thought through.

27/ People might not always prefer automated and predictive interfaces. Some might prefer to maintain control of their interactions, and too much automation might lead to frustration if the AI makes incorrect assumptions.

The designer's role is to strike this balance.

28/ AI making decisions on a user's behalf would require access to a vast amount of personal data.

It's the designer's role to give the user control over what data they feel comfortable sharing, and full visibility into what's being shared.

29/ Not everyone interacts with products in the same way. Different cultures, abilities, and age groups might have unique requirements that an AI might neglect.

It's the designer's role to monitor and course-correct these cases.

30/ We've exhausted this conversation in web2, but I believe, more than ever, that product designers should have as deep an understanding of psychology, behavior, systems, and ethics as they do of visual design (if not deeper).

31/ Ultimately, the future of interfaces in the age of AI is less about eliminating the role of the designer and more about a shift in what we're designing.

We're not just creating interfaces; we're crafting experiences.

And more and more of them will be invisible.
