Most people think that you have to be a programming expert to create an AI app.

Not true at all. Beginner Python skills are enough to get you started.

You can easily build the backend of your AI app with just a few lines of code using @LangChainAI Chains.

Here's how 👇

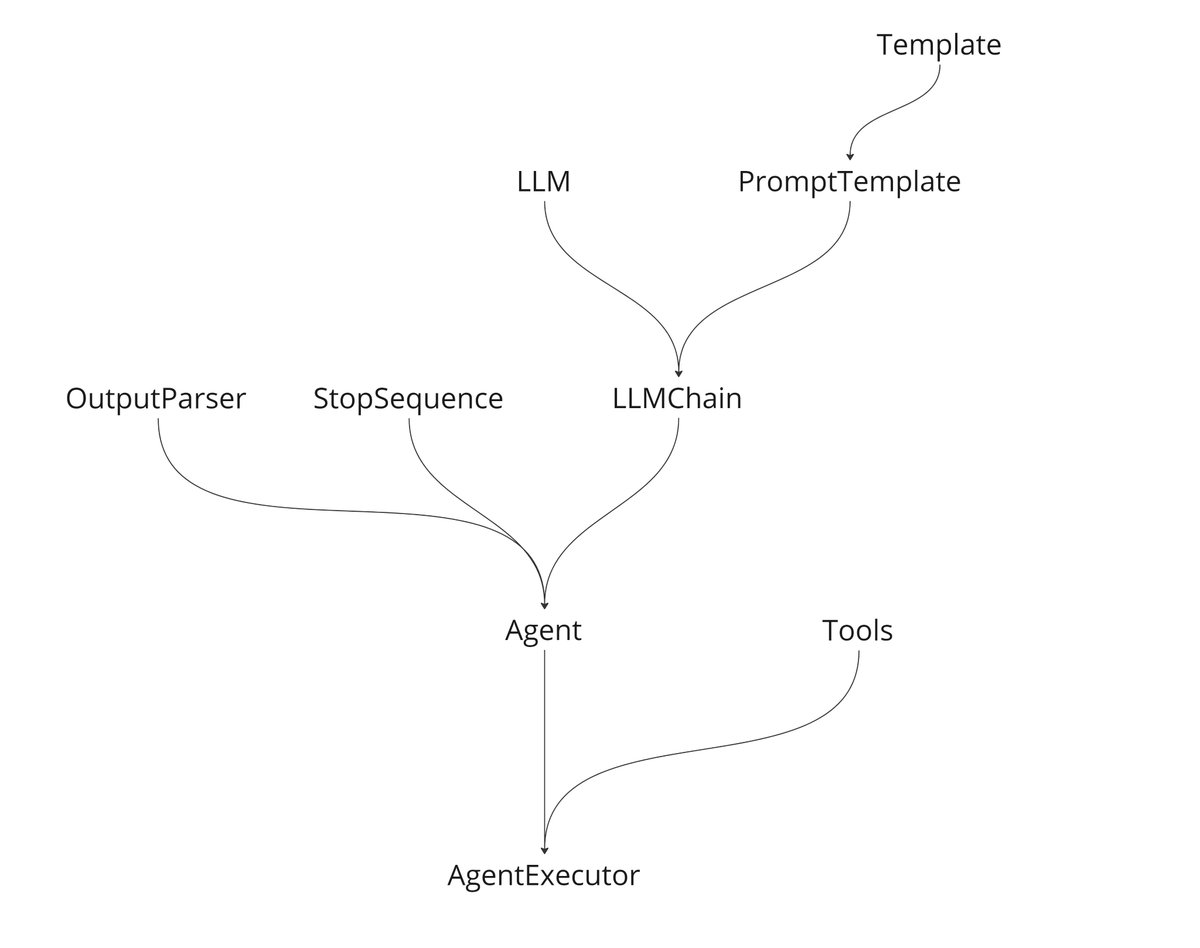

Chains are essential to LangChain because they handle most of the backend code.

They call the LLM APIs, process the responses, and return the output, tying everything together into a single, coherent application.

All of that in just 3 or 4 lines of code on your part.

The LLMChain is a straightforward chain that requires only an input to generate an output.

It is ideal for single-call applications that do not require much interaction between the user and the AI and do not need to store past interactions in memory.
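
To make the idea concrete, here is a minimal plain-Python sketch of what a single-call chain does: fill a prompt template, call the model, return the text. This is not LangChain's API. `fake_llm` and `SimpleLLMChain` are hypothetical names, and the stand-in model just echoes its prompt so the example runs without an API key.

```python
# Plain-Python sketch of the idea behind an LLM chain (NOT LangChain's API).
# `fake_llm` is a hypothetical stand-in for a real model call.

def fake_llm(prompt: str) -> str:
    """Pretend model: echoes the prompt it received."""
    return f"[model reply to: {prompt}]"


class SimpleLLMChain:
    """Fill a prompt template with the input, call the model, return the text."""

    def __init__(self, llm, template: str):
        self.llm = llm
        self.template = template

    def run(self, **inputs: str) -> str:
        # One input in, one output out: no memory is kept between calls.
        prompt = self.template.format(**inputs)
        return self.llm(prompt)


chain = SimpleLLMChain(fake_llm, "Write a tagline for a {product}.")
result = chain.run(product="coffee shop")
print(result)
```

In a real app you would swap `fake_llm` for an actual model client. The shape of the code stays the same.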

If you want to create a chatbot-like app, where users can chat with the AI, you should use the conversation chain.

This chain works like a chatbot, where you and the AI take turns talking, and it requires a memory parameter to remember past interactions.
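
Here is a rough sketch of why the memory matters, again in plain Python rather than LangChain's own classes. `SimpleConversationChain` and `fake_llm` are hypothetical names. The stand-in model only reports how many human turns it can see, which shows that earlier messages are passed along on every call.

```python
# Plain-Python sketch of a conversation chain with memory (NOT LangChain's API).

def fake_llm(prompt: str) -> str:
    """Pretend model: reports how many human turns appear in the prompt."""
    turns = prompt.count("Human:")
    return f"reply #{turns}"


class SimpleConversationChain:
    """Keep the chat history and send all of it to the model on every turn."""

    def __init__(self, llm):
        self.llm = llm
        self.memory = []  # list of (speaker, text) pairs

    def predict(self, user_input: str) -> str:
        self.memory.append(("Human", user_input))
        # The full history goes into the prompt, so the model "remembers".
        history = "\n".join(f"{speaker}: {text}" for speaker, text in self.memory)
        reply = self.llm(history + "\nAI:")
        self.memory.append(("AI", reply))
        return reply


chat = SimpleConversationChain(fake_llm)
first = chat.predict("Hi, I'm Sam.")
second = chat.predict("What's my name?")
print(first, second)
```

Without the `memory` list, every call would start from scratch and the second question could not be answered.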

One of the main uses of AI today is to interact with internal documents, databases, or lengthy books.

Thankfully, the Document QA chain serves this exact purpose.

This chain enables the app to retrieve and analyze info from the docs you provide with very little code.
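
The retrieve-then-answer flow looks roughly like this in plain Python. This is a simplified sketch, not LangChain's API. Real document QA setups usually rank text with embeddings, while this version swaps in basic keyword overlap. `retrieve`, `answer`, and `fake_llm` are hypothetical names.

```python
# Plain-Python sketch of document question answering (NOT LangChain's API).
# Keyword overlap stands in for the embedding search a real setup would use.
import re


def fake_llm(prompt: str) -> str:
    """Pretend model: reports how many documents it was given."""
    return f"[answer based on {prompt.count('Document:')} document(s)]"


def words(text: str) -> set:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: list, k: int = 1) -> list:
    """Return the k documents sharing the most words with the question."""
    q = words(question)
    ranked = sorted(docs, key=lambda d: len(q & words(d)), reverse=True)
    return ranked[:k]


def answer(question: str, docs: list, llm, k: int = 1) -> str:
    """Put the retrieved documents into the prompt, then ask the model."""
    context = "\n".join(f"Document: {d}" for d in retrieve(question, docs, k))
    prompt = f"{context}\nQuestion: {question}\nAnswer:"
    return llm(prompt)


docs = [
    "Refunds are available within 30 days of purchase.",
    "Our office is open Monday to Friday.",
    "Shipping takes 3 to 5 business days.",
]
question = "How long does shipping take?"
top = retrieve(question, docs)
reply = answer(question, docs, fake_llm)
print(top, reply)
```

Only the most relevant text reaches the model, which is why this pattern works even when the documents are far too long to fit in a single prompt.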

If your AI app requires multiple chains to work one after the other, you can create a sequence of chains using the Simple Sequential Chain.

Each chain's output will serve as the input for the next.

For example, one chain can write an article on a topic and the next chain can summarize it.
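
The article-then-summary idea can be sketched in plain Python like this. It is not LangChain's API: `make_chain`, `sequential`, and `fake_llm` are hypothetical helpers, and the stand-in model simply wraps its prompt in angle brackets so you can see each step's output flow into the next.

```python
# Plain-Python sketch of running chains one after another (NOT LangChain's API).

def fake_llm(prompt: str) -> str:
    """Pretend model: wraps the prompt in angle brackets."""
    return f"<{prompt}>"


def make_chain(llm, template: str):
    """Build a single-step chain: fill the template, call the model."""
    def run(text: str) -> str:
        return llm(template.format(input=text))
    return run


def sequential(chains):
    """Combine chains so each chain's output is the next chain's input."""
    def run(text: str) -> str:
        for chain in chains:
            text = chain(text)
        return text
    return run


write_article = make_chain(fake_llm, "Write an article about {input}")
summarize = make_chain(fake_llm, "Summarize: {input}")

pipeline = sequential([write_article, summarize])
summary = pipeline("solar power")
print(summary)
```

Each step takes exactly one input and produces one output, which is what makes the steps easy to line up in a sequence.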

Of course, there are other chains available, so it's worth reviewing LangChain’s documentation.

However, if you're new to creating AI applications, these are likely all the chains you will need to begin with.

So go ahead and start experimenting with them.
