A lot of people in AI policy are talking about licensing in the context of AI risk. Here’s a little thread exploring what this means, what it could look like, and some challenges worth keeping in mind. 🏛

NB: I'm not covering agreements between AI developers and users on how an API or software can be used. Instead, I'm focusing on regulatory licenses that governments award to control and regulate certain activities or industries.

A licensing scheme essentially imposes requirements on individuals, businesses, products, services, or activities to ensure they follow certain safety rules. You apply for a license, the regulator awards it (or certifies you), and you're good to go. Here are some examples: ✈️📻🛰️☢️

In aviation, you need a Production Certificate from the FAA to build aircraft. Pilots also undergo different tests depending on the type of license or rating sought (commercial, instrument rating, etc.). This is part of why air travel is so safe, with about 0.01 deaths per 100 million miles.

The FCC licenses the use of electromagnetic spectrum (a finite resource) for various types of communication like broadcasting and mobile services. A license can be sub-leased to another party, which is then free to use that spectrum as it sees fit within certain regulatory parameters.

Similarly, the Energy Reorganization Act of 1974 imposes licensing and regulatory restrictions on commercial reactors, nuclear materials and waste. As President Gerald Ford noted at the time, this was necessary given "the special potential hazards" involved with nuclear fuels.

So what does this have to do with AI? Well, as models get more powerful, some researchers fear they may unlock capabilities that pose much greater threats. Malicious actors could repurpose such models to cause serious, large-scale harm. arxiv.org/abs/2305.15324

Of course, many harms already exist today. But as models get more powerful, these harmful dynamics could worsen in parallel. I don't personally think *existing* models warrant licensing, but we should keep a close eye on how things develop.

https://twitter.com/sebkrier/status/1664616777987358720

Precisely defining the target of a licensing regime is important but difficult. How exactly do you define 'frontier models'? You could look at parameter count or other measures of scale, but these are imperfect proxies, and models will likely get more efficient over time. linkedin.com/pulse/moment-w…

.@tshevl suggests that a model should be considered dangerous if it has a capability profile sufficient for extreme harm, assuming misuse and/or misalignment. This seems useful, although more research is needed to standardize the evaluations behind it. See also: isaduan.github.io/isabelladuan.g…

You may want evaluations completed before a frontier model is allowed to use tools or connect to other models/APIs. You could also require monitoring and reporting of misuse, and controls on where a model can be deployed (e.g. critical infrastructure).

https://twitter.com/sebkrier/status/1514584013993914369

A licensing regime could also ensure frontier models do not proliferate uncontrollably (e.g. through open source or unrestricted access). Fine to have a power generator in your garden, but maybe not a nuclear reactor. One proposal is structured access: governance.ai/post/sharing-p…

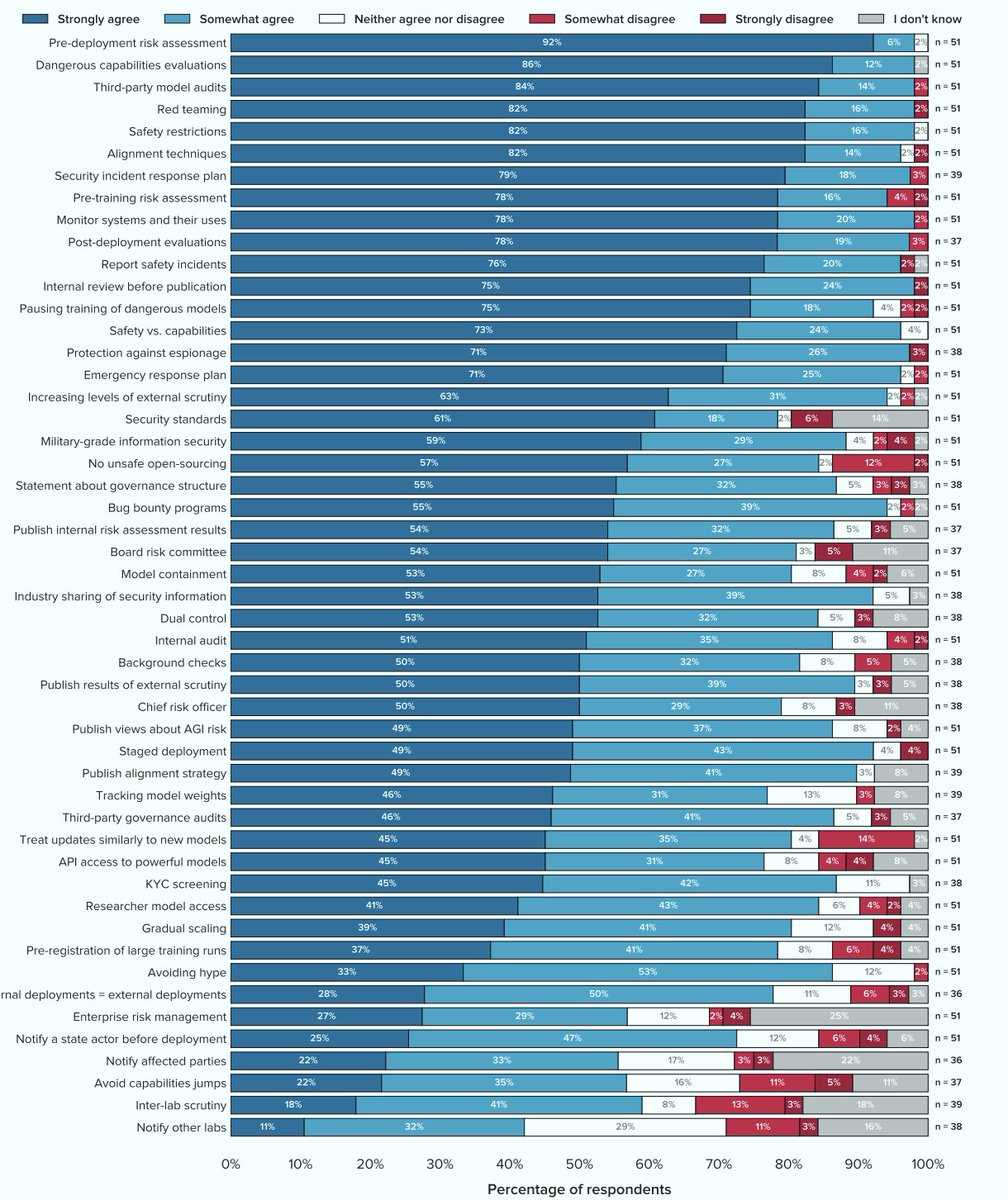

A licensing regime could set appropriate standards that licensees must meet. Evals can help us better understand frontier models, but other organization-level interventions may also be needed. Surprisingly, there is broad agreement on what these could be: arxiv.org/abs/2305.07153

Standards and evaluation methods are still nascent. Impressive progress is being made in various areas, but we don't yet have a comprehensive, robust set. For a strong governance or regulatory framework, they must be fully developed first. On this: forum.effectivealtruism.org/posts/idrBxfsH…

Another difficulty is the complexity of the AI value chain: obligations could attach at different points, not just to model developers. For example, some suggest compute providers could be subject to requirements such as know-your-customer (KYC) checks and the registration of training runs. See also: arxiv.org/abs/2303.11341

New or existing regulators and standards bodies should have the right breadth and depth of expertise to grant licenses nimbly. State capacity is crucial given how fast the field moves and how quickly benchmarks become obsolete. From @AnthropicAI: anthropic.com/index/an-ai-po…

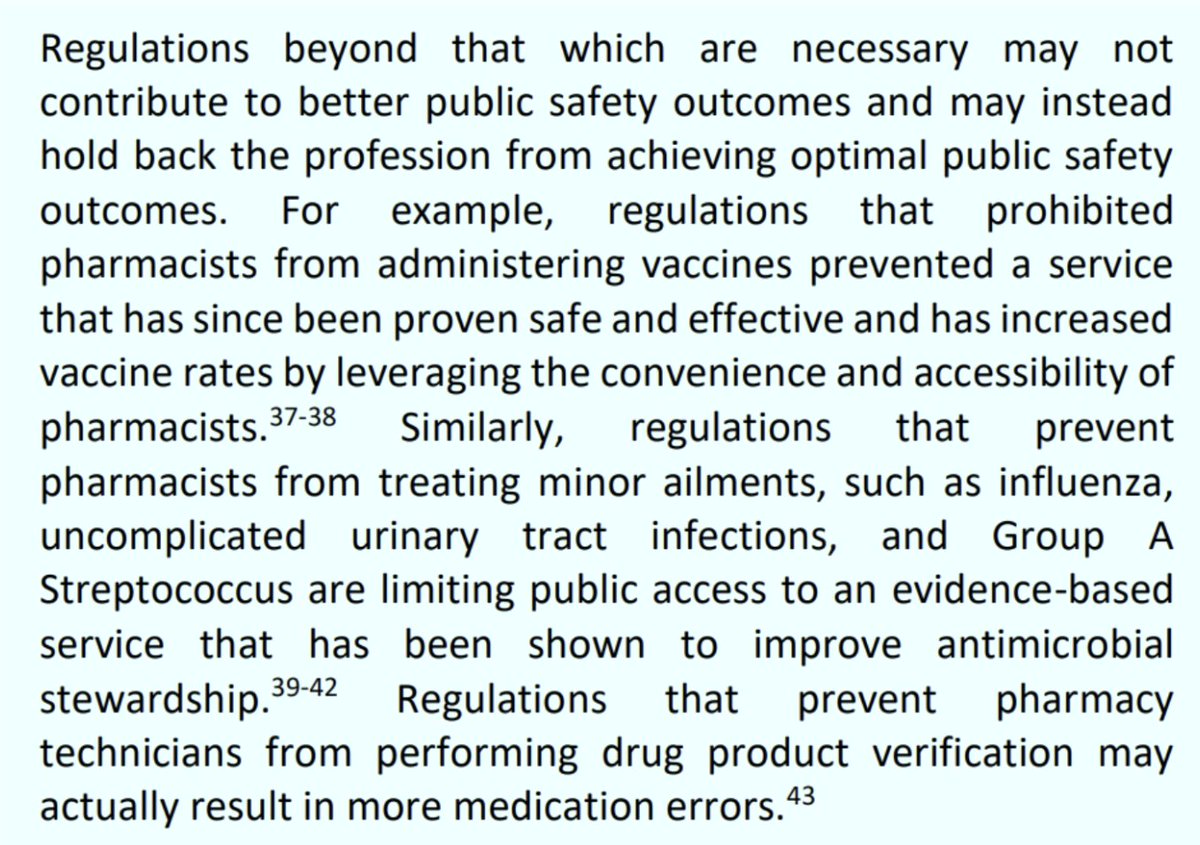

Now, designing a licensing regime is hard. As @binarybits notes, it's important to ensure such a regime targets the risks we're actually concerned about. This doesn't mean ignoring other problems, but it's not clear licensing is the solution for everything. understandingai.org/p/congress-sho…

Licensing regimes also risk cementing the dominance of industry incumbents, or affording too much power to governments. A careful balance should be struck to ensure companies and researchers can still build, access and deploy other models.

https://twitter.com/sebkrier/status/1658185822909128734

There are also plenty of examples of harms caused by licensing, and proponents shouldn't lose sight of potential trade-offs and externalities. See for example:

https://twitter.com/sebkrier/status/1221455641774043136

https://twitter.com/sebkrier/status/1483029687127453699

https://twitter.com/sebkrier/status/1099049043852963840

https://twitter.com/sebkrier/status/1086737222941007873

https://twitter.com/sebkrier/status/1027630218733924353

These difficulties shouldn't lead to paralysis: a lot more research is needed, and experts should carefully explore different licensing models, the benchmarks and evaluations needed, relevant thresholds, and so on. And don't underestimate the costs of bad laws!

https://twitter.com/adamkovac/status/1660770261593980931
