Perhaps an open discussion on reasonable VRAM usage relative to visual return is due. After talking with @HeyItsThatDon about Diablo 4 and reading the @ComputerBase article, I am scratching my head. These are the textures you need to use to get a stutter-free experience on a 12 GB GPU: (1/5)

IMO, textures like those in the screenshot above from ComputerBase and the one below from @HeyItsThatDon make me wonder why these are the textures you need for a 12 GB GPU. They honestly look lower-res than games from a decade ago! Surely we can expect better... (2/5)

As a contrast, here are screenshots from The Witcher 3 Complete Edition with a Diablo 4 camera angle, each using 8 GB of VRAM or less at 4K (no RT): far higher-fidelity textures in TW3 with more reasonable VRAM utilisation. (3/5)

In Diablo 4, by comparison, the textures in the screenshot below are those required for a stable experience with *12 GB* of VRAM. Surely these Doom 3-level normal maps (honestly, I think they look worse) should not be what one expects from a 12 GB GPU? (4/5)

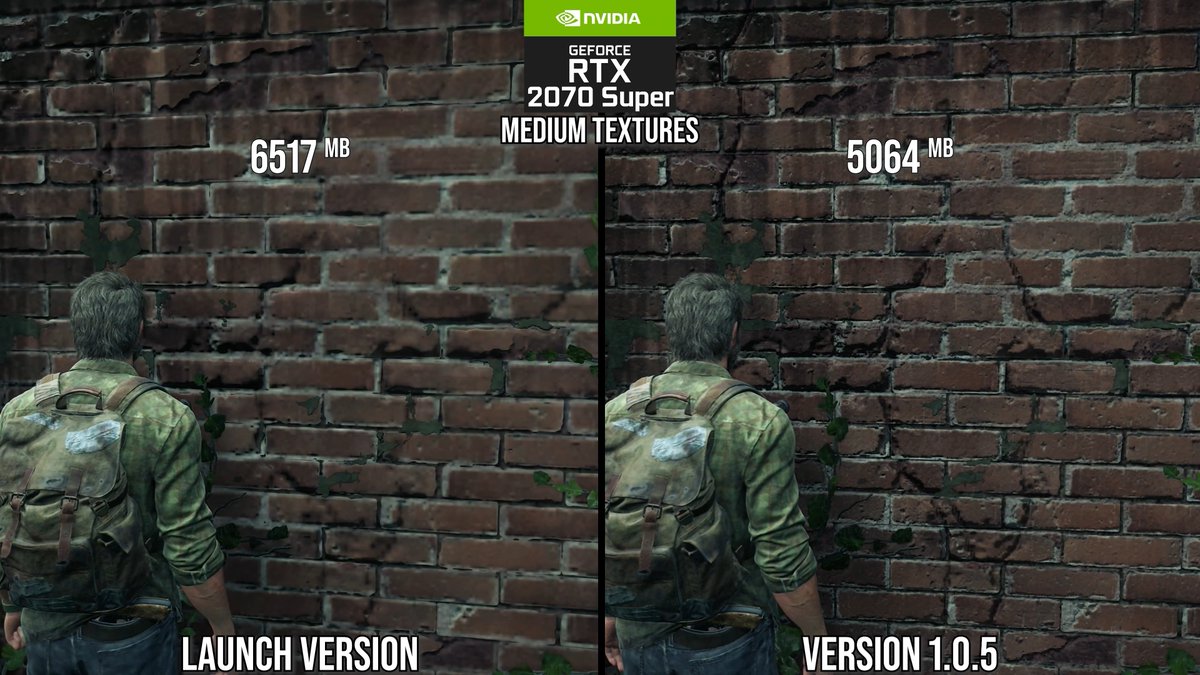

I don't want to pick on Diablo 4 alone, but the questionable texture quality some games deliver for their VRAM requirements is distressing. Especially since games like The Last of Us and Forspoken both received post-launch patches that substantially raised texture quality while using less VRAM!
