Broadcasting in NumPy is widely used, yet poorly understood❗️

Today, I'll clearly explain how broadcasting works! 🚀

Same rules apply to PyTorch & TensorFlow!

A Thread 🧵👇

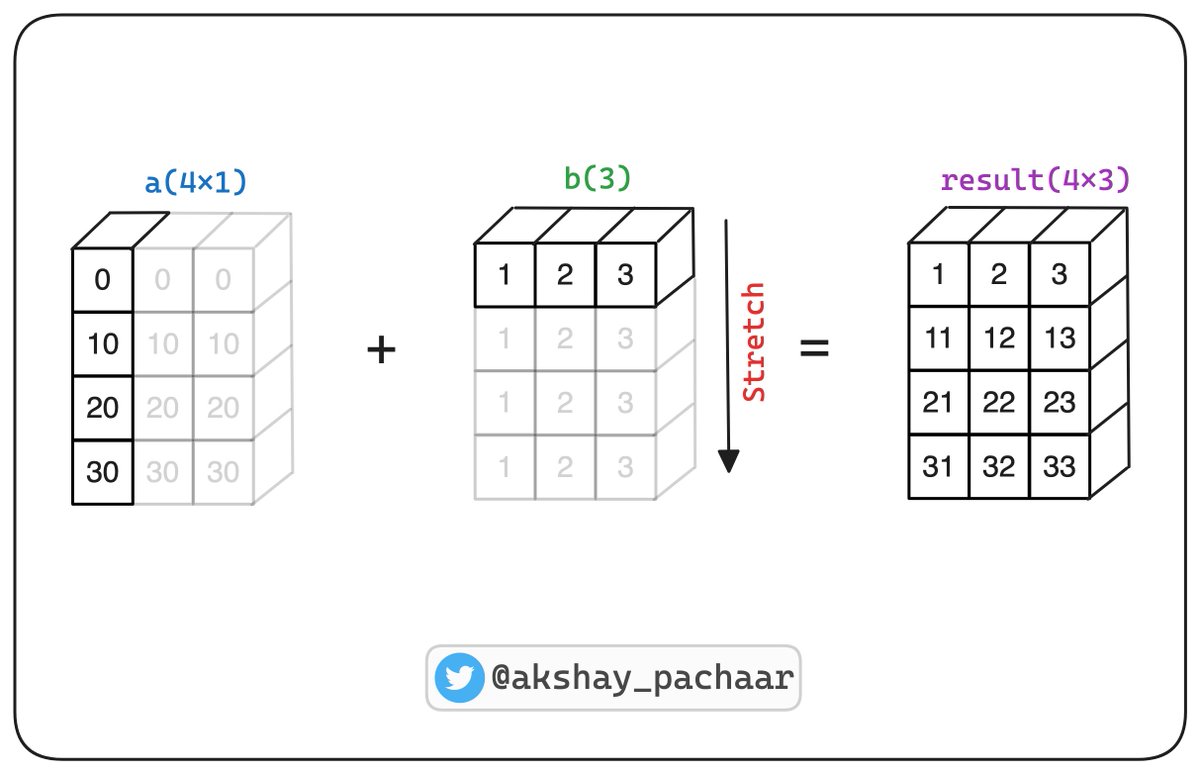

Broadcasting describes how NumPy treats arrays with different shapes during arithmetic operations.

The smaller array is “broadcast” across the larger array so that the two have compatible shapes.

Check this out👇

In the image below, scalar "b" is being stretched into an array with the same shape as "a".
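
Here's a minimal sketch of that scalar case in code. The values are made up for illustration (they are not taken from the image), and `b_stretched` is just a name used here to spell out the "stretch" that NumPy performs conceptually:

```python
import numpy as np

a = np.array([1.0, 2.0, 3.0])   # shape (3,)
b = 2.0                         # scalar

# NumPy behaves as if b were stretched to a's shape
result = a * b

# The same computation with the stretch written out by hand
b_stretched = np.full(a.shape, b)
explicit = a * b_stretched

print(result)                            # [2. 4. 6.]
print(np.array_equal(result, explicit))  # True
```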

But how do we generalise these things?

continue reading ... 📖

💫 General Rules:

1) Broadcasting starts with the trailing (i.e. rightmost) dimensions and works its way left.

2) Two dimensions are compatible when they are equal or when one of them is 1.

Check out the examples 👇
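
The two rules can be sketched as a small shape checker. `broadcast_shape` is a hypothetical helper written for this thread, not a NumPy function, and it assumes a missing leading dimension counts as size 1:

```python
import numpy as np

def broadcast_shape(shape_a, shape_b):
    """Return the broadcast result shape, or None if the shapes are incompatible.

    Hypothetical helper for illustration; not part of NumPy.
    """
    result = []
    # Rule 1: walk both shapes from the trailing (rightmost) dimension
    rev_a, rev_b = shape_a[::-1], shape_b[::-1]
    for i in range(max(len(rev_a), len(rev_b))):
        # A missing leading dimension is treated as size 1
        dim_a = rev_a[i] if i < len(rev_a) else 1
        dim_b = rev_b[i] if i < len(rev_b) else 1
        # Rule 2: compatible when equal or when one of them is 1
        if dim_a == dim_b or dim_a == 1 or dim_b == 1:
            result.append(dim_b if dim_a == 1 else dim_a)
        else:
            return None
    return tuple(result[::-1])

print(broadcast_shape((4, 3), (3,)))       # (4, 3)
print(broadcast_shape((4, 1), (1, 3)))     # (4, 3)
print(broadcast_shape((2, 1, 5), (3, 1)))  # (2, 3, 5)
print(broadcast_shape((4, 3), (4,)))       # None

# Cross-check one case against what NumPy actually does
print((np.empty((4, 1)) + np.empty((1, 3))).shape)  # (4, 3)
```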

Whenever a one-dimensional array is involved in broadcasting, treat it as a row vector!

Array → [1, 2, 3] ; shape → (3,)

Treated as → [[1, 2, 3]] ; shape → (1, 3)

Remember, broadcasting starts from the trailing dimension!

Check this out👇
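
A quick sketch of the row-vector view, assuming a (4, 3) array for `a` (chosen here for illustration) and the [1, 2, 3] array from above:

```python
import numpy as np

a = np.arange(12).reshape(4, 3)   # shape (4, 3)
b = np.array([1, 2, 3])           # shape (3,)

# b is treated as shape (1, 3), then repeated along the first axis
result = a + b

print(result.shape)  # (4, 3)
print(result[0])     # [1 3 5]

# Reshaping b to an explicit row vector gives the same answer
print(np.array_equal(result, a + b.reshape(1, 3)))  # True
```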

Let's check a scenario where broadcasting fails!

- a(4x3)

- b(4) will be treated as b(1x4)

Now, broadcasting starts from the trailing dimension, but (4x3) & (1x4) are not compatible: the trailing sizes, 3 and 4, differ and neither is 1!

Check this out👇
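
In code, the mismatch surfaces as a `ValueError`. The sketch below uses made-up values; the last two lines show one common workaround (turning `b` into a column), which is an addition here and not something the example above covers:

```python
import numpy as np

a = np.zeros((4, 3))
b = np.array([1.0, 2.0, 3.0, 4.0])   # shape (4,), treated as (1, 4)

try:
    a + b            # trailing dimensions are 3 and 4: incompatible
    failed = False
except ValueError as err:
    failed = True
    print("broadcast failed:", err)

# One possible fix: make b a column of shape (4, 1)
fixed = a + b[:, np.newaxis]
print(fixed.shape)   # (4, 3)
```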

Let's take one more example to make our understanding concrete!

Remember, a 1D array is treated as a row vector while broadcasting!

Check this out👇
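
One example that fits this pattern (the shapes and values are assumptions chosen for illustration) is a column added to a 1D array, where both sides get stretched:

```python
import numpy as np

a = np.array([[0], [10], [20]])   # shape (3, 1), a column
b = np.array([1, 2, 3])           # shape (3,), treated as (1, 3)

# Trailing dims: 1 vs 3 -> 3; next: 3 vs 1 -> 3; result shape (3, 3)
result = a + b

print(result.shape)     # (3, 3)
print(result.tolist())  # [[1, 2, 3], [11, 12, 13], [21, 22, 23]]
```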

Why use broadcasting❓

Broadcasting provides a means of vectorising array operations so that looping occurs in C instead of Python.

It does this without making needless copies of data and usually leads to efficient algorithm implementations.
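
A rough sketch of both points, with illustrative values: the broadcast expression matches an explicit Python loop, and `np.broadcast_to` shows that the "stretched" array is a view on the original data and not a copy:

```python
import numpy as np

a = np.arange(12, dtype=float).reshape(4, 3)
b = np.array([10.0, 20.0, 30.0])

# Python-level loop: one interpreted iteration per element
looped = np.empty_like(a)
for i in range(a.shape[0]):
    for j in range(a.shape[1]):
        looped[i, j] = a[i, j] + b[j]

# Broadcasting: same arithmetic, but the loop runs in compiled code
vectorised = a + b
print(np.array_equal(looped, vectorised))   # True

# The stretched b is a read-only view that reuses b's memory;
# a stride of 0 on the first axis means every row is the same data
view = np.broadcast_to(b, a.shape)
print(view.shape)                  # (4, 3)
print(view.strides[0])             # 0
print(np.shares_memory(view, b))   # True
```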

That's a wrap!

If you're interested in:

- Python 🐍

- Data Science 📈

- Machine Learning 🤖

- Maths for ML 🧮

- MLOps 🛠

- CV/NLP 🗣

- LLMs 🧠

I'm sharing daily content over here, follow me →@akshay_pachaar if you haven't already!!

Cheers!! 🙂
