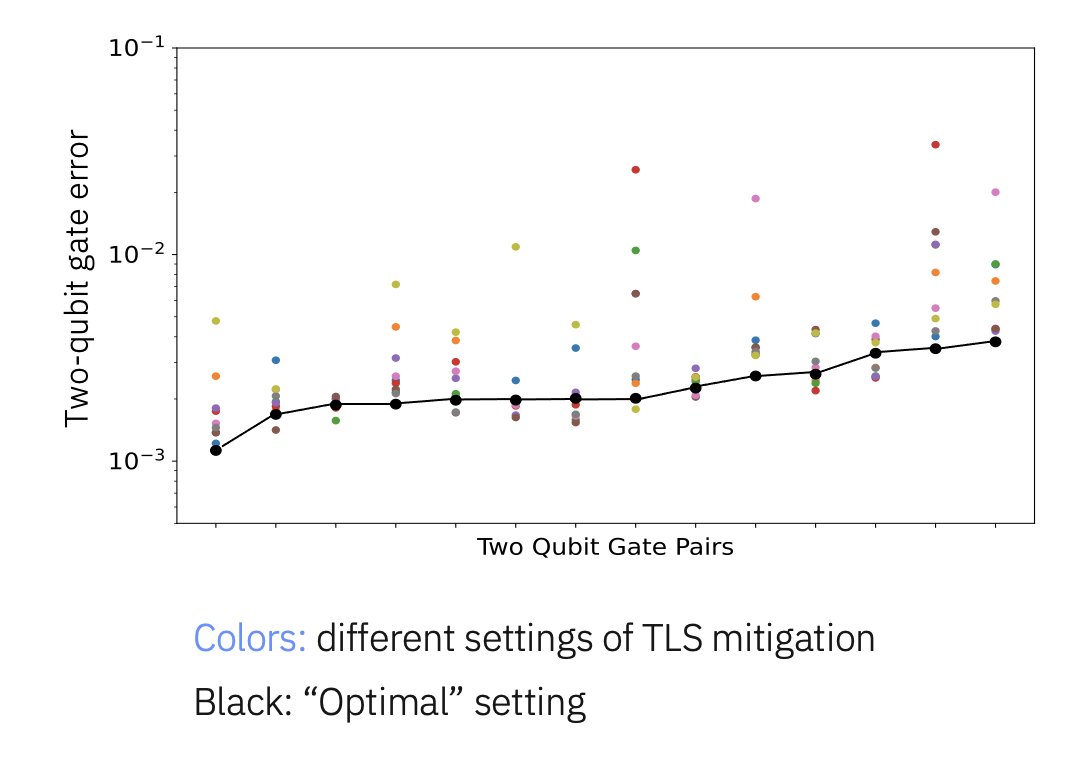

Earl, I will make a few comments. The first is that pushing average gate fidelity has not been the problem. In fact, we regularly see two-qubit gate fidelities above 99.9%. The hard part has been the *stability* of two-qubit gate error and cross-talk, and both of these needed new technology. https://t.co/hKRZdcTnNf

https://twitter.com/earltcampbell/status/1677676307751096327

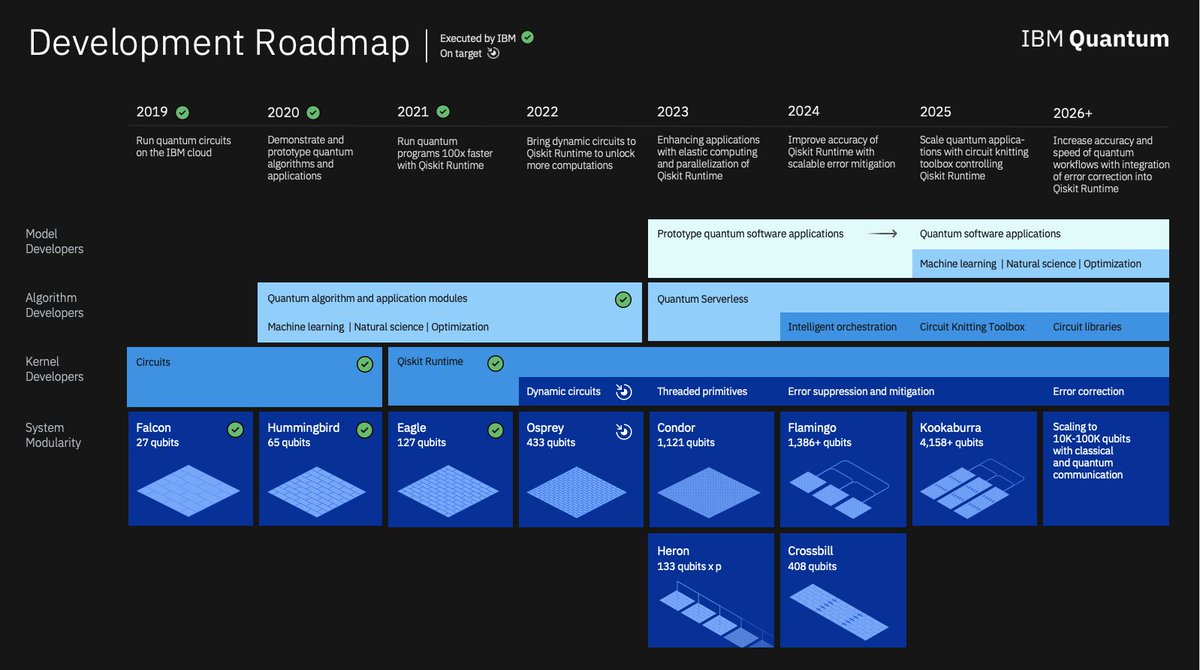

In fact, for each of our birds it has never been about the number of qubits. Falcon - bump bonds, Hummingbird - multiplexed readout, Eagle - multi-level wiring and through-substrate vias, Osprey - flex I/O, Condor - I/O, and Heron - a new gate. Not the number of qubits; they come for free.

This figure shows both isolated and simultaneous gates, and here you see that for Heron the cross-talk is almost zero. However, what is interesting is that the data is no longer linear in a quantile plot, so the statistics are not normal.
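To illustrate the quantile-plot remark, here is a minimal sketch of a normal quantile (Q-Q) check. It is not the analysis behind the figure: the error values are synthetic, the function names (`normal_qq_points`, `qq_correlation`) are hypothetical, and the magnitudes are chosen only for illustration. Normally distributed data falls on a straight line in such a plot, so the correlation between theoretical and observed quantiles stays close to 1, while a small fraction of outliers in the tail bends the line and lowers it.

```python
import math
import random
import statistics
from statistics import NormalDist


def normal_qq_points(samples):
    """Return (theoretical, observed) quantile pairs for a normal Q-Q plot."""
    observed = sorted(samples)
    n = len(observed)
    # Reference normal fitted to the sample mean and standard deviation.
    ref = NormalDist(statistics.fmean(observed), statistics.stdev(observed))
    # Plotting positions (i + 0.5) / n avoid the undefined 0 and 1 quantiles.
    theoretical = [ref.inv_cdf((i + 0.5) / n) for i in range(n)]
    return theoretical, observed


def qq_correlation(samples):
    """Pearson correlation of the Q-Q points; near 1 means close to normal."""
    xs, ys = normal_qq_points(samples)
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Synthetic gate errors (assumed values, NOT device data).
rng = random.Random(0)
normal_like = [rng.gauss(1e-3, 1e-4) for _ in range(500)]
# Same bulk, but every 20th gate gets an extra exponential "tail" error.
heavy_tailed = [
    rng.gauss(1e-3, 1e-4) + (rng.expovariate(500.0) if i % 20 == 0 else 0.0)
    for i in range(500)
]

r_normal = qq_correlation(normal_like)
r_heavy = qq_correlation(heavy_tailed)
print(f"Q-Q correlation, normal-like:  {r_normal:.4f}")
print(f"Q-Q correlation, heavy-tailed: {r_heavy:.4f}")
```

The two samples have similar medians, which is why an average or median error rate alone would not reveal the difference; the departure only shows up in the tail of the quantile plot.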

This can be seen in this plot as well. Egret was a 33-qubit test device for the Heron chip. The tails have been our problem, and tails need lots of device data to understand.

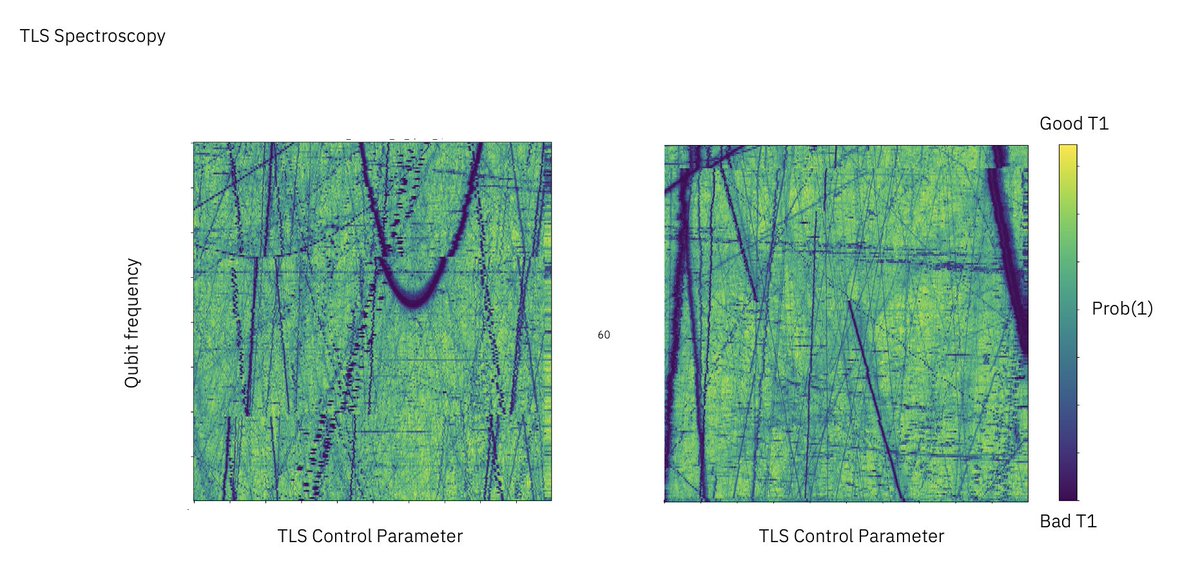

These tails are due to two-level systems (TLS) jumping on and off the transmon, making the gate unstable. In fact, if we re-cool a device this all changes again. So by having many devices we have learnt a lot about the physics.

So by putting many devices online we have been able to advance error mitigation and error suppression, and have gathered lots of device data. From this data we have learnt that we can tune these TLS in some test devices.

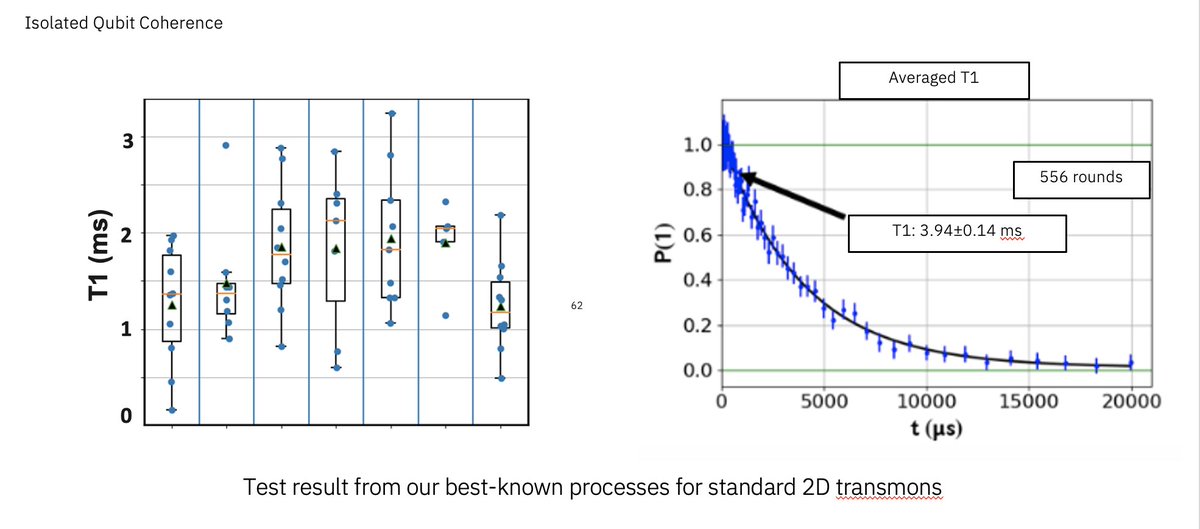

And we have also shown in *many* isolated devices that the coherence time can be increased, and we regularly measure coherence times over 1 ms.

So to me there has been lots of progress enabled by all the data. While we have not yet integrated the TLS mitigation and the new coherence into the larger devices, the future looks very promising for large quantum devices with both lots of qubits and good error rates.
